Dynamic pipelines in GitLab are a subset of child pipelines. They have the same features as child pipelines; the difference is that the pipeline file is generated by a job at run time rather than checked into the repository.

One of the most difficult aspects of writing pipelines is catering for permutations. You often find yourself repeating the same workflows, with only minor differences. This is especially frustrating when you have to target many different environments.

We’re all used to crafting our workflow files, then checking them into version control. They’re fixed entities that we must build with every variation in mind. But that doesn’t have to be the case — we can generate workflow files on demand. With dynamic pipelines, this is exactly what’s possible.

The first example will use a bash script to generate the dynamic pipeline files. Later examples will use a Python script.

A basic example

The following is a gentle introduction to creating a dynamic pipeline.

You can find the source code in the reference repository.

We define everything in .gitlab-ci.yml:

    create-dynamic-pipeline-file:
      script: |
        cat <<EOF > dynamic-pipeline.yml
        dynamic-job:
          script:
            - echo "This job is created dynamically"
        EOF
      artifacts:
        paths:
          - dynamic-pipeline.yml

    trigger-dynamic-pipeline:
      needs: [create-dynamic-pipeline-file]
      trigger:
        include:
          - artifact: dynamic-pipeline.yml
            job: create-dynamic-pipeline-file

In the create-dynamic-pipeline-file job, script uses here document notation to create a basic job. The result is output to a file and stored as an artifact.

The trigger-dynamic-pipeline job depends on the previous job and triggers the child pipeline. This is almost identical to the basic child pipeline example.

Note that you must specify the creating job’s name twice: once for needs, and again to tell the trigger which job the pipeline file came from.

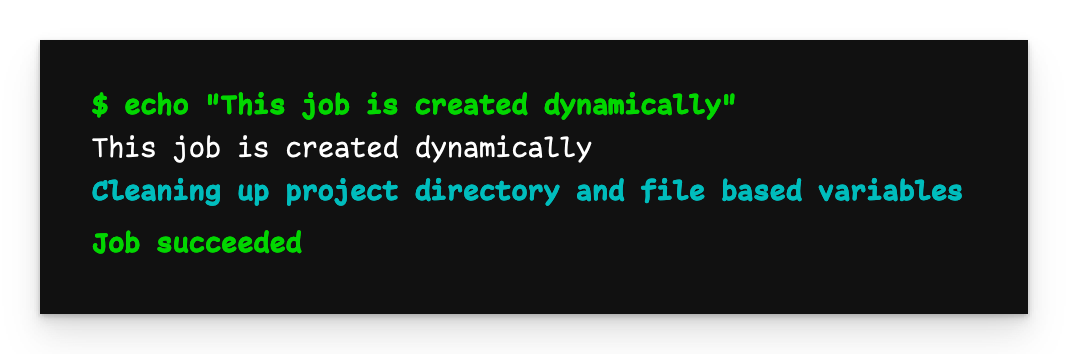

Dynamic child pipeline output

The output of the child pipeline is what we specified.

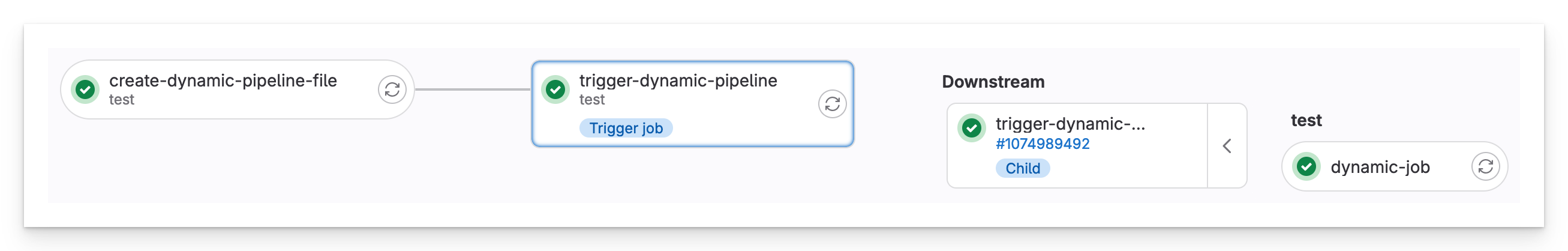

The dynamically-created pipeline file has become a child pipeline

The pipeline view is almost the same as when triggering a regular child pipeline — with the addition of first creating the job.

A Python-based dynamic pipeline

This next example uses Python to generate the child pipeline file.

You can find the source code in the reference repository.

Again, we start with .gitlab-ci.yml:

    default:
      image: python:3.7

    create-file-job:
      script: |
        pip install -r requirements.txt
        python generate_pipeline.py
      artifacts:
        paths:
          - dynamic-pipeline.yml

    trigger-dynamic-pipeline:
      needs: [create-file-job]
      trigger:
        include:
          - artifact: dynamic-pipeline.yml
            job: create-file-job

This is the code for the Python generate_pipeline.py file that creates the pipeline code:

    import yaml

    pipeline = {
        "dynamic-pipeline": {
            "script": [
                "echo Hello World!"
            ]
        }
    }

    with open("dynamic-pipeline.yml", "w") as f:
        f.write(yaml.dump(pipeline, default_flow_style=False))

Notice that, because Python is a general-purpose language, the pipeline can be defined as a data structure (here, a dictionary) rather than as text. This makes programmatic manipulation of the YAML much easier.

On the other hand, it makes debugging your final pipeline code more difficult, because the YAML that GitLab actually runs is generated rather than checked in.

Lastly, we need requirements.txt:

PyYAML

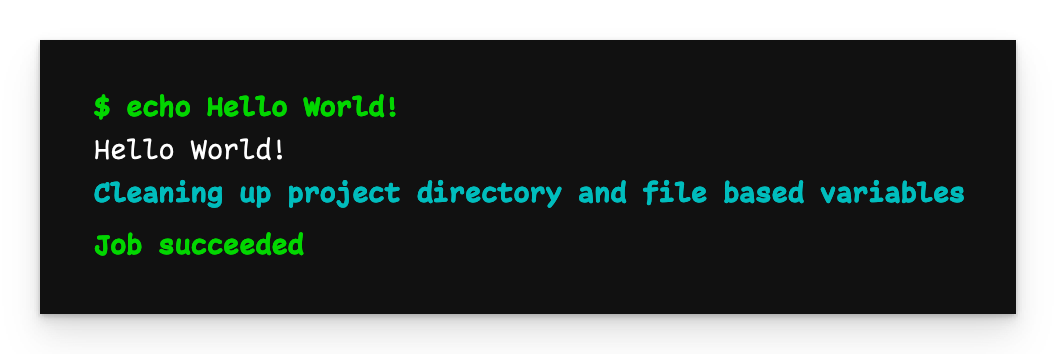

Pushing our code generates the following output:

Output of the Python-generated dynamic pipeline

Moving the bulk of your pipeline generation to a script allows you to use functions, variables, loops, and other programming tricks to reduce complexity.
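
As a rough illustration of the point about functions and loops, the sketch below builds one deploy job per environment. The helper names `deploy_job` and `build_pipeline` and the list of environments are hypothetical and do not come from the reference repository. The resulting dictionary could be written out with yaml.dump in the same way as in generate_pipeline.py.

```python
# Hypothetical sketch: generate one deploy job per environment with a loop.
# The environment names and helper names are assumptions for illustration.

def deploy_job(environment):
    """Return the definition of a single deploy job as a plain dict."""
    return {
        "needs": ["dynamic-pipeline"],
        "script": [f"echo Deploying to {environment}"],
    }


def build_pipeline(environments):
    """Build the child pipeline: one shared job plus one deploy job per environment."""
    pipeline = {"dynamic-pipeline": {"script": ["echo Hello World!"]}}
    for environment in environments:
        pipeline[f"deploy-to-{environment}"] = deploy_job(environment)
    return pipeline


pipeline = build_pipeline(["dev", "test", "prod"])
for job_name in pipeline:
    print(job_name)
```

Adding another environment then means adding one item to the list instead of copying a block of YAML.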

Passing arguments to the pipeline generator

Defining complex pipelines in static files usually means creating convoluted rules. Child pipelines relieve us of some of this burden, but you must still pre-define everything. Having a script generate the minimum necessary pipeline code is a better approach. Using variables gives you fine control over what your script outputs.

You can find the source code in the reference repository.

Let’s expand on generate_pipeline.py and have it take a few arguments:

    import yaml
    import argparse

    parser = argparse.ArgumentParser()
    parser.add_argument("--filename", help="Name of the file to write to")
    parser.add_argument("--environment", help="The environment to deploy to", default="dev")
    args = parser.parse_args()

    pipeline = {}

    pipeline["dynamic-pipeline"] = {
        "script": [
            "echo Hello World!"
        ]
    }

    environment = {
        "needs": ["dynamic-pipeline"],
        "script": [
            f"echo Deploying to {args.environment}"
        ]
    }

    pipeline[f"deploy-to-{args.environment}"] = environment

    print(pipeline)

    with open(args.filename, "w") as f:
        f.write(yaml.dump(pipeline, default_flow_style=False, sort_keys=False))

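Note that starting in version 3.7, Python guarantees that dictionaries preserve insertion order. Passing sort_keys=False stops PyYAML from sorting the keys alphabetically, so the jobs appear in the file in the order they were added.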
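
To see what key ordering means for the generated file, the short sketch below uses the standard-library json module as a stand-in for PyYAML. That swap is an assumption made only so the example has no third-party dependency. Both libraries accept a sort_keys flag that sorts keys on output, although json leaves it off by default while yaml.dump turns it on.

```python
import json

# Jobs are added in the order we want them to appear in the file.
pipeline = {}
pipeline["dynamic-pipeline"] = {"script": ["echo Hello World!"]}
pipeline["deploy-to-test"] = {
    "needs": ["dynamic-pipeline"],
    "script": ["echo Deploying to test"],
}

# Since Python 3.7, iterating a dict yields keys in insertion order.
insertion_order = list(pipeline)

# Sorting keys on output moves deploy-to-test ahead of dynamic-pipeline.
sorted_order = list(json.loads(json.dumps(pipeline, sort_keys=True)))

print(insertion_order)
print(sorted_order)
```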

We can use variables to rework the names of the jobs and build the final file.

Now the .gitlab-ci.yml file passes arguments to the script:

    default:
      image: python:3.7

    variables:
      PIPELINE_FILE: dynamic-pipeline.yml
      ENVIRONMENT: test

    create-file-job:
      script: |
        pip install -r requirements.txt
        python generate_pipeline.py --filename=$PIPELINE_FILE --environment=$ENVIRONMENT
        cat $PIPELINE_FILE
      artifacts:
        paths:
          - $PIPELINE_FILE

    trigger-dynamic-pipeline:
      needs: [create-file-job]
      trigger:
        include:
          - artifact: $PIPELINE_FILE
            job: create-file-job

In this instance, the filename and target environment arguments are variables. These could come from the GUI or even an upstream pipeline. Depending on your needs, the generated pipeline need only contain the most essential code. You also don’t need to write convoluted rules.
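
GitLab exposes CI/CD variables to job scripts as environment variables, so the generator could also read them directly instead of receiving them only as command-line arguments. The following is a minimal sketch of that variation, assuming the same PIPELINE_FILE and ENVIRONMENT variable names used above. The `parse_args` helper and its `env` parameter are hypothetical.

```python
import argparse
import os


def parse_args(argv=None, env=None):
    """Parse generator options, falling back to environment variables.

    `env` defaults to os.environ; passing a dict makes the function easy to test.
    """
    env = os.environ if env is None else env
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--filename",
        default=env.get("PIPELINE_FILE", "dynamic-pipeline.yml"),
        help="Name of the file to write to",
    )
    parser.add_argument(
        "--environment",
        default=env.get("ENVIRONMENT", "dev"),
        help="The environment to deploy to",
    )
    return parser.parse_args(argv)


# An explicit flag wins over the environment variable,
# which in turn wins over the built-in default.
args = parse_args(
    ["--environment", "prod"],
    env={"PIPELINE_FILE": "child.yml", "ENVIRONMENT": "test"},
)
print(args.filename, args.environment)
```

With this arrangement the script line in .gitlab-ci.yml could drop its flags, while a developer running the generator locally can still override either value explicitly.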

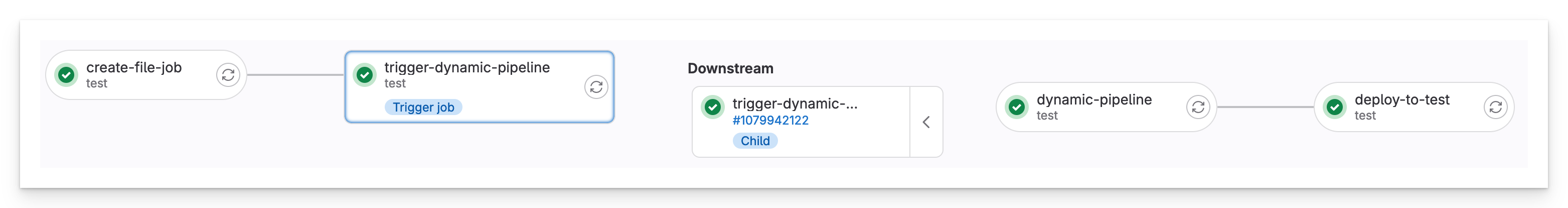

The dynamic child pipeline, including dependencies

Notice how we can even set up dependencies between the dynamic jobs.

The output of the ‘deploy-to-test’ job

We see from the output that the job targeted the ‘test’ environment according to the variables.
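
Because the job names and their needs entries are built from variables, a mistyped name would typically only surface once GitLab tries to create the child pipeline. One possible safeguard is to check the dictionary before writing it to disk. This sketch assumes that every needs entry is a plain job name defined in the same generated file; the `missing_needs` helper is hypothetical.

```python
def missing_needs(pipeline):
    """Return (job, needed_job) pairs where needed_job is not defined in the pipeline.

    Assumes each `needs` entry is a plain string naming a job in the same file.
    """
    problems = []
    for job_name, job in pipeline.items():
        for needed in job.get("needs", []):
            if needed not in pipeline:
                problems.append((job_name, needed))
    return problems


pipeline = {
    "dynamic-pipeline": {"script": ["echo Hello World!"]},
    "deploy-to-test": {
        "needs": ["dynamic-pipeline"],
        "script": ["echo Deploying to test"],
    },
}
print(missing_needs(pipeline))  # every dependency resolves here

pipeline["deploy-to-test"]["needs"] = ["dynamic-pipline"]  # deliberate typo
print(missing_needs(pipeline))  # the unresolved dependency is reported
```

The generator could exit with a non-zero status when the returned list is not empty, so the creating job fails before the trigger job runs.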

Conclusion

Generating your pipeline with code lets you replace repetitive, rule-heavy YAML with functions, variables, and loops. The gap between writing code and writing YAML pipelines is often quite large. If you’ve ever found yourself wishing you could write your pipelines as code, dynamic pipelines are the answer.