
No Rules, Just Data: How My Chess Engine Learned the Game on Its Own

They say you should never build something that can outperform you at your own game. Naturally, I ignored that advice.

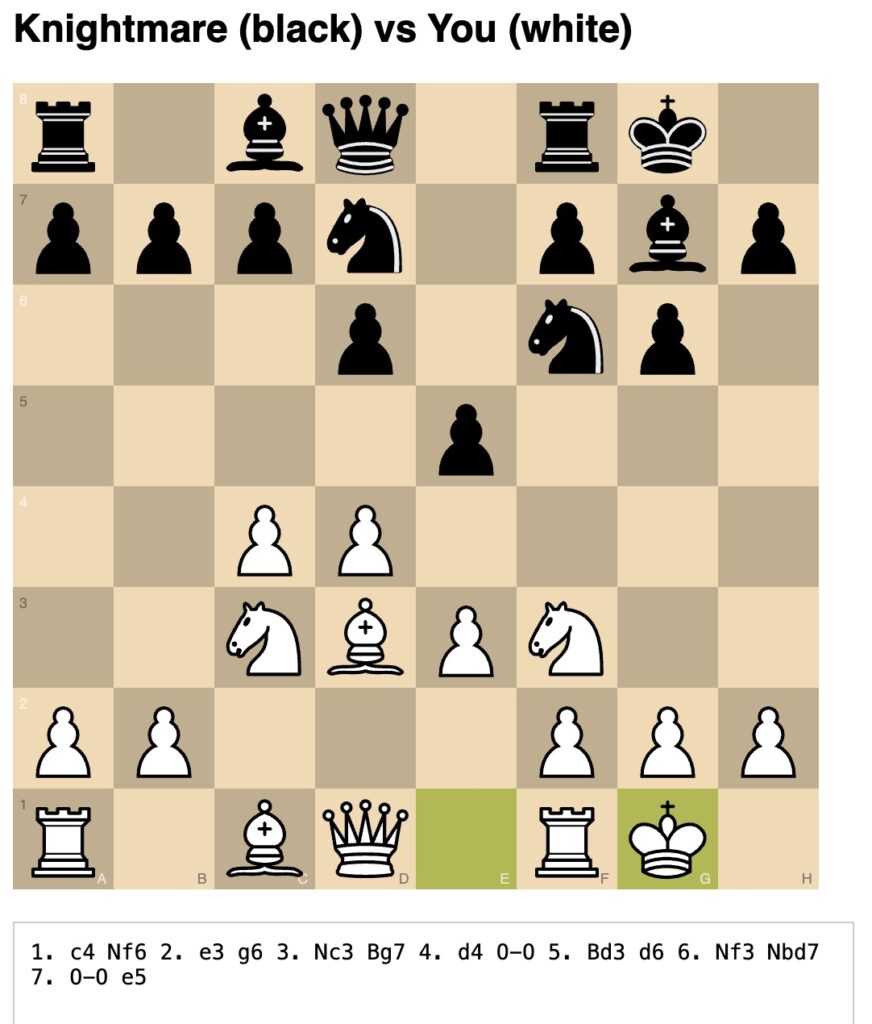

This is the story of how I built Knightmare, a deep-learning-powered chess engine that combines data from 145,000 human and machine games, a custom neural network, and a dash of Monte Carlo Tree Search. It’s also the story of what happens when you mix machine learning with a lot of FEN strings.


Source code

TL;DR? Want to head straight to the source code? View Knightmare’s source code on GitHub.

You can play Knightmare in your browser. It’s open source, fast enough to respond in seconds, and humbling enough to make you question your Elo.

Play it on Hugging Face or on Cloud Run.

History

I love chess. Unfortunately, chess does not love me back. Back in the day, my Elo hovered around 1700: I played club chess at Schaakclub Utrecht and dabbled in computer chess, tinkering with Crafty and running chess bots of various strengths. Then other hobbies took over. Nowadays when I play chess it’s never classical (where do people get the time?!), only bullet, and I get absolutely butchered by 1200-rated bullet players all the time. I had forgotten my entire opening repertoire, and my self-confidence was in the gutter. So rather than improve my tactics or study openings like a normal person, I did what any tech nerd with questionable priorities would do: I trained an AI to play chess better than I ever could.

The project had two goals:

- Build a chess engine that understands position and strategy.

- Make it playable in the browser by anyone brave enough.

The Data Diet: 145,000 Games Later

My training data came from two sources:

- 100,000 human games from Chess.com, capturing the glorious blunders and brilliant moves of everyday players rated 1800 and above.

- 45,000 engine vs. engine games, annotated with Stockfish’s choice of the best move in every position.

These datasets were combined into a format the neural network could digest: a massive collection of chess positions, encoded as FEN strings, each paired with the corresponding best move.

For the human games, I didn’t just use the played moves. I recalculated the best move for every single position using Stockfish. Yes, that took a while. And yes, my CPU fan still hasn’t forgiven me.
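The post doesn’t spell out the on-disk format of these position/best-move pairs. As a minimal sketch, assume each example is a CSV row holding a full FEN string and Stockfish’s best move in UCI notation (the CSV layout and the `TrainingExample` and `parse_examples` names are hypothetical). Loading and sanity-checking such a file needs only the standard library:

```python
from __future__ import annotations

import csv
import io
import re
from dataclasses import dataclass

# UCI move: from-square, to-square, optional promotion piece, e.g. "e2e4" or "e7e8q".
UCI_MOVE = re.compile(r"[a-h][1-8][a-h][1-8][qrbn]?")


@dataclass(frozen=True)
class TrainingExample:
    fen: str        # full six-field FEN string
    best_move: str  # Stockfish's best move in UCI notation (assumed label format)


def parse_examples(csv_text: str) -> list[TrainingExample]:
    """Parse 'fen,best_move' rows, skipping rows that are obviously malformed."""
    examples = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) != 2:
            continue
        fen, move = row[0].strip(), row[1].strip()
        fields = fen.split()
        # A FEN string has six space-separated fields; the first lists eight ranks.
        if len(fields) != 6 or len(fields[0].split("/")) != 8:
            continue
        if not UCI_MOVE.fullmatch(move):
            continue
        examples.append(TrainingExample(fen, move))
    return examples


sample = (
    "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1,e2e4\n"
    "not a fen,e2e4\n"
)
examples = parse_examples(sample)
print(examples)
```

Filtering malformed rows up front is cheap insurance when you’re stitching together 145,000 games from two different sources.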

FEN-omenal Preprocessing

Every chess position was converted into a tensor using a custom encoding scheme inspired by AlphaZero:

- 17 planes, each 8x8:

  - 12 planes for pieces (6 per color)

  - 4 planes for castling rights

  - 1 plane for en passant
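Here is a minimal, dependency-free sketch of such a 17-plane encoding, using nested Python lists instead of a tensor library. The plane order (white pieces, then black pieces, then K/Q/k/q castling, then en passant) and the `encode_fen` name are assumptions for illustration; the real `data_preparation.py` may order or fill the planes differently, and this version does not flip the board.

```python
PIECES = "PNBRQKpnbrqk"  # assumed plane order: white P N B R Q K, then black p n b r q k


def encode_fen(fen):
    """Encode a FEN string as 17 planes of 8x8 ints (row 0 = rank 8, column 0 = file a)."""
    placement, _side, castling, en_passant = fen.split()[:4]
    planes = [[[0] * 8 for _ in range(8)] for _ in range(17)]

    # Planes 0-11: one plane per piece type and color.
    for row, rank in enumerate(placement.split("/")):
        col = 0
        for ch in rank:
            if ch.isdigit():
                col += int(ch)  # a digit is a run of empty squares
            else:
                planes[PIECES.index(ch)][row][col] = 1
                col += 1

    # Planes 12-15: castling rights, each plane all ones if that right is still available.
    for offset, right in enumerate("KQkq"):
        if right in castling:
            planes[12 + offset] = [[1] * 8 for _ in range(8)]

    # Plane 16: the en passant target square, if there is one.
    if en_passant != "-":
        col = ord(en_passant[0]) - ord("a")
        row = 8 - int(en_passant[1])
        planes[16][row][col] = 1

    return planes


start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
planes = encode_fen(start)
print(len(planes), sum(sum(row) for plane in planes[:12] for row in plane))  # 17 32
```

Filling whole planes for castling rights is a common convention: it gives every convolution in the network access to that global flag, no matter which square it’s looking at.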

Every position is oriented from the current player’s perspective: the side to move is always treated as White and sees the board from the bottom, even when it’s actually Black’s turn. This lets the model learn one set of patterns that applies to both sides, rather than separate strategies for White and Black.
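One way to implement this orientation, sketched under assumptions: when Black is to move, mirror the board vertically (rank 8 becomes rank 1), swap piece colors, and swap the castling rights and the en passant rank, so the side to move always appears as White. The post doesn’t say whether Knightmare mirrors or rotates the board, and `orient_for_side_to_move` is a hypothetical name.

```python
def orient_for_side_to_move(fen):
    """Return an equivalent FEN in which the side to move is White (vertical mirror)."""
    placement, side, castling, en_passant, halfmove, fullmove = fen.split()
    if side == "w":
        return fen  # already from White's point of view

    # Reverse the rank order and swap piece colors: Black's pieces become "own" pieces.
    ranks = placement.split("/")
    flipped_placement = "/".join(rank.swapcase() for rank in reversed(ranks))

    # Castling rights change owner: Black's k/q become K/Q and vice versa.
    if castling == "-":
        flipped_castling = "-"
    else:
        flipped_castling = "".join(sorted(castling.swapcase(), key="KQkq".index))

    # The en passant square keeps its file but mirrors its rank (e.g. e3 -> e6).
    flipped_ep = "-" if en_passant == "-" else en_passant[0] + str(9 - int(en_passant[1]))

    return " ".join([flipped_placement, "w", flipped_castling, flipped_ep, halfmove, fullmove])


after_e4 = "rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1"
print(orient_for_side_to_move(after_e4))
# rnbqkbnr/pppp1ppp/8/4p3/8/8/PPPPPPPP/RNBQKBNR w KQkq e6 0 1
```

The best-move label must be transformed the same way: with a vertical mirror, a Black move such as e7e5 becomes e2e4.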

The Brain: A Dual-Headed Neural Monster

Knightmare’s neural network is a deep convolutional model with 12 residual blocks. Inspired by AlphaZero’s architecture, it has two outputs:

- Policy Head – predicts the best move from a pool of legal ones.

- Value Head – predicts the likely outcome of the game (win, draw, loss) from the current position.

This dual-headed approach lets the model weigh not just tactical moves but also longer-term positional advantages.
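Dual-headed networks like this are usually trained on a combined loss: cross-entropy between the policy and the best-move label, plus squared error between the value prediction and the game result. The stdlib sketch below computes that for a single position. The equal weighting, the +1/0/-1 outcome encoding (a scalar value head, as in AlphaZero, rather than three win/draw/loss classes), and the dictionary-of-logits representation are all assumptions; the post doesn’t give Knightmare’s exact loss.

```python
from __future__ import annotations

import math


def policy_value_loss(move_logits: dict[str, float], legal_moves: list[str], best_move: str,
                      predicted_value: float, game_outcome: float,
                      value_weight: float = 1.0) -> float:
    """Cross-entropy on the best move plus weighted MSE on the outcome, for one position.

    game_outcome is +1 (win), 0 (draw) or -1 (loss) from the side to move's perspective.
    """
    # Softmax only over legal moves, so illegal moves get zero probability.
    legal_logits = [move_logits[m] for m in legal_moves]
    max_logit = max(legal_logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in legal_logits))
    policy_loss = log_sum_exp - move_logits[best_move]  # equals -log p(best_move)
    value_loss = (predicted_value - game_outcome) ** 2
    return policy_loss + value_weight * value_loss


loss = policy_value_loss(
    move_logits={"e2e4": 2.0, "d2d4": 2.0, "e1e8": 100.0},  # e1e8 is illegal and ignored
    legal_moves=["e2e4", "d2d4"],
    best_move="e2e4",
    predicted_value=0.0,
    game_outcome=0.0,
)
print(round(loss, 4))  # 0.6931 (ln 2: two equally likely legal moves, perfect value)
```

Masking to legal moves before the softmax is what makes the policy head pick "from a pool of legal ones": no probability mass is wasted on moves that can’t be played.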

The Soul: Monte Carlo Tree Search

I wrapped the neural network in a Monte Carlo Tree Search (MCTS) engine. Here’s how it works:

- Expand: The network evaluates the current position and assigns prior probabilities to the top 10 moves it predicts.

- Simulate: MCTS explores the most promising moves first, guided by these probabilities.

- Backpropagate: After each simulation, the result is fed back up the tree to improve future move selection.

By running 1,000 simulations per move, Knightmare avoids short-term traps and focuses on the bigger picture, much like a human would.
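The loop above can be sketched in plain Python. This is a hedged illustration, not Knightmare’s actual search: it assumes the AlphaZero-style PUCT selection rule with an arbitrary exploration constant `c_puct=1.5`, and a hypothetical `evaluate(state)` callback that returns move priors plus a value in [-1, 1] for the side to move (in the real engine, that would be the network).

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Node:
    prior: float            # P(s, a) from the policy head
    visits: int = 0         # N(s, a)
    value_sum: float = 0.0  # W(s, a), from the view of the player who moved into this node
    children: dict = field(default_factory=dict)  # move -> Node

    def q(self) -> float:
        """Mean value Q(s, a); 0 for unvisited nodes."""
        return self.value_sum / self.visits if self.visits else 0.0


def expand(node, state, evaluate, top_k):
    """Expand: get priors and a value from the evaluator, keep only the top_k moves."""
    priors, value = evaluate(state)
    best = sorted(priors.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    total = sum(p for _, p in best) or 1.0
    for move, p in best:
        node.children[move] = Node(prior=p / total)  # renormalise over the kept moves
    return value


def select_child(node, c_puct):
    """Pick the child maximising the PUCT score Q + U."""
    sqrt_n = math.sqrt(max(node.visits, 1))

    def score(item):
        _, child = item
        return child.q() + c_puct * child.prior * sqrt_n / (1 + child.visits)

    return max(node.children.items(), key=score)


def run_mcts(root_state, evaluate, apply_move, num_simulations=1000, top_k=10, c_puct=1.5):
    """Return visit counts for the root moves after num_simulations simulations."""
    root = Node(prior=1.0)
    expand(root, root_state, evaluate, top_k)
    for _ in range(num_simulations):
        node, state, path = root, root_state, [root]
        # Select: descend through already-expanded nodes, guided by the priors.
        while node.children:
            move, node = select_child(node, c_puct)
            state = apply_move(state, move)
            path.append(node)
        # Expand and evaluate the leaf; value is from the side to move at the leaf.
        value = expand(node, state, evaluate, top_k)
        # Backpropagate, flipping the sign at each ply so every node stores values
        # from the perspective of the player who moved into it.
        for n in reversed(path):
            value = -value
            n.visits += 1
            n.value_sum += value
    return {move: child.visits for move, child in root.children.items()}


# Toy game: from state 0, "good" leaves the opponent lost (state 1), "bad" leaves them won (state 2).
def toy_evaluate(state):
    if state == 0:
        return {"good": 0.5, "bad": 0.5}, 0.0
    return {}, (-1.0 if state == 1 else 1.0)  # terminal: value for the side to move


visits = run_mcts(0, toy_evaluate, lambda s, m: 1 if m == "good" else 2, num_simulations=200)
best_move = max(visits, key=visits.get)
print(best_move)  # good
```

Note that each "simulation" here is one descent to a leaf plus a value-head estimate, not a full playout to the end of the game; the final move is the one with the most visits.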

The Opening Book: Because Even AIs Need a Cheat Sheet

In the early game, Knightmare consults an opening book, a handcrafted library of well-trodden chess openings. If the position matches one in the book, it plays from there. Otherwise, it falls back to MCTS.

The book is stored in JSON format and indexed by simplified FEN keys, covering thousands of known variations.
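A lookup like this can be sketched in a few lines. The post doesn’t define "simplified FEN", so this sketch assumes it means dropping the halfmove clock and fullmove number, and assumes the JSON maps each key to a list of UCI moves; `book_key` and `book_move` are hypothetical names.

```python
from __future__ import annotations

import json
import random


def book_key(fen: str) -> str:
    """Simplified FEN: keep placement, side to move, castling and en passant; drop the
    halfmove clock and fullmove number so the same position maps to one key."""
    return " ".join(fen.split()[:4])


def book_move(book: dict[str, list[str]], fen: str, rng: random.Random | None = None) -> str | None:
    """Return a book move for this position, or None to signal 'fall back to MCTS'."""
    moves = book.get(book_key(fen))
    if not moves:
        return None
    return (rng or random).choice(moves)


book = json.loads('{"rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq -": ["e2e4", "d2d4"]}')
start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
print(book_move(book, start))  # e2e4 or d2d4
print(book_move(book, "8/8/8/8/8/8/8/K6k w - - 0 1"))  # None, so the engine would run MCTS
```

Choosing randomly among book moves keeps the engine from playing the exact same opening every game.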

Making It Playable: The Front End

Training an AI to play chess is great. Letting others lose to it? Even better. Knightmare comes with a full web frontend, built using Chessground and Flask. You’ll start with a random color, and all the moves are kept in PGN notation for you to analyse afterwards. It’s a surprisingly fun way to learn what not to do in a chess game.
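Keeping the game in PGN is mostly a matter of formatting the SAN move list with move numbers. A minimal stdlib sketch, assuming the moves are already in SAN; the frontend’s actual code isn’t shown in the post, and `to_pgn` plus the header values are hypothetical.

```python
from __future__ import annotations


def to_pgn(san_moves: list[str], white: str, black: str, result: str = "*") -> str:
    """Format SAN moves as a PGN game with the Seven Tag Roster headers."""
    headers = [
        '[Event "Casual game"]',
        '[Site "?"]',
        '[Date "????.??.??"]',
        '[Round "-"]',
        f'[White "{white}"]',
        f'[Black "{black}"]',
        f'[Result "{result}"]',
    ]
    tokens = []
    for ply, move in enumerate(san_moves):
        if ply % 2 == 0:
            tokens.append(f"{ply // 2 + 1}.")  # move number precedes White's move
        tokens.append(move)
    tokens.append(result)  # movetext ends with the game termination marker
    return "\n".join(headers) + "\n\n" + " ".join(tokens) + "\n"


pgn = to_pgn(["e4", "e5", "Nf3", "Nc6"], white="Human", black="Knightmare")
print(pgn)
```

Because PGN is plain text, you can paste the result straight into any analysis board afterwards.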

Train Your Own Knightmare

Knightmare isn’t just an engine; it’s a training playground. If you want to build your own beast, the training pipeline is ready to go. Just feed it your own positions in FEN format alongside best-move labels, preprocess them with data_preparation.py, and start training with train.py. It supports checkpointing, validation, and automatic saving of the best model. Whether you’re experimenting with custom data, learning more about data science, or trying to beat Stockfish at its own game, Knightmare is your blank canvas.
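The post doesn’t show train.py, so here is only a hedged sketch of the "checkpointing, validation, and keep the best model" pattern it describes. The hooks and the JSON checkpoint format are hypothetical; if the model is built in PyTorch, you would typically save `model.state_dict()` with `torch.save` instead of JSON.

```python
from __future__ import annotations

import json
import math
import tempfile
from pathlib import Path
from typing import Callable


def train(train_epoch: Callable[[int], float],
          validate: Callable[[], float],
          save_state: Callable[[], dict],
          checkpoint_dir: Path,
          epochs: int) -> float:
    """Run `epochs` epochs; checkpoint every epoch and keep the best model by validation loss.

    All three callables are hypothetical hooks: train_epoch(epoch) returns the training loss,
    validate() the validation loss, save_state() a JSON-serialisable model snapshot.
    """
    checkpoint_dir.mkdir(parents=True, exist_ok=True)
    best_val = math.inf
    for epoch in range(1, epochs + 1):
        train_loss = train_epoch(epoch)
        val_loss = validate()
        state = {"epoch": epoch, "train_loss": train_loss,
                 "val_loss": val_loss, "model": save_state()}
        # Always overwrite the latest checkpoint so an interrupted run can resume.
        (checkpoint_dir / "last.json").write_text(json.dumps(state))
        # Keep a separate copy whenever validation loss improves.
        if val_loss < best_val:
            best_val = val_loss
            (checkpoint_dir / "best.json").write_text(json.dumps(state))
    return best_val


# Toy run with fake losses: epoch 2 has the lowest validation loss.
val_losses = iter([0.9, 0.7, 0.8])
with tempfile.TemporaryDirectory() as tmp:
    best = train(lambda epoch: 1.0, lambda: next(val_losses), lambda: {"weights": [0.0]},
                 Path(tmp), epochs=3)
    best_epoch = json.loads((Path(tmp) / "best.json").read_text())["epoch"]
print(best, best_epoch)  # 0.7 2
```

Selecting the best model on validation loss rather than training loss guards against keeping a checkpoint that has simply memorised the training positions.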

Lessons Learned

Building an AI Isn’t Magic. It’s Math (and Compute)

Training Knightmare wasn’t about hoping the network would "learn" chess. It was about encoding the rules, the data, and the evaluation metrics precisely enough to give it a fighting chance.

Symmetry Matters

Board orientation (always flipping to the active player’s point of view) helps the network generalize far more efficiently. At first I trained it without flipping, and it played significantly better as White than as Black. Flipping not only resolved that imbalance but also improved predictions in general.

Value Heads Are Underrated

Predicting the outcome of a position gives the model strategic context that pure move prediction lacks.

Final Thoughts

What amazed me most was that Knightmare learned to play chess without ever being told what chess is. No hardcoded evaluation rules. No concepts of "control the center" or "develop your pieces." Just raw data: positions and best moves. From that, it learned how to attack, defend, trade, castle, and sacrifice, emerging with a coherent strategy simply by optimising toward outcomes. It didn’t learn chess by reading books. It learned chess by playing probability. And somehow, it got quite good at it.

I can beat it with careful play, but it punishes my mistakes without mercy. It shows me where I play too cautiously. Where I overextend. Where I get tunnel vision. It lets me blitz out moves and experiment with openings without taking a hit to my ego… or my Elo. And honestly? That’s been the most valuable part of all.

Written by

Dennis Vink

Crafting digital leaders through innovative AI & cloud solutions. Empowering businesses with cutting-edge strategies for growth and transformation.
