MCP: future automation killer or a promise to be kept?

Ever since the rise of ChatGPT, AI has been making big strides: it is no longer just answering questions, it has started doing things. However, existing applications weren’t built with a unified API for talking to different tools. This is where the Model Context Protocol (MCP) comes into the picture.

MCP has been making waves in the AI and developer tooling space, and for good reason. It has all the hallmarks of becoming the standardized, extensible way to connect tools, resources, and prompts to language models, bridging language interfaces with anything that supports the protocol. In other words, MCP offers an answer to the emerging integration challenge, enabling smarter, more automated processes.

But how far along is that journey? How much remains to be done? There are still rough edges to smooth and technical hurdles to address before MCP can be considered production-grade, especially for larger companies.

MCP: An all-around adaptive development interface

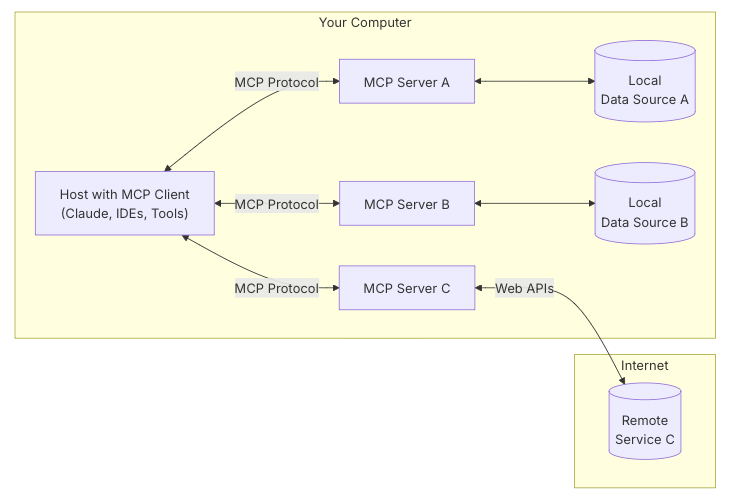

MCP can best be described as a protocol that allows agents (clients) to connect to external services in a standardized manner. The actions these services expose are defined as “Tools”, which represent things an agent can do. Tools are described by schemas of their inputs and outputs, enabling agents to dynamically discover, inspect, and invoke them without any custom integration. Importantly, MCP doesn't replace existing protocols like REST; it acts as a layer above them, allowing existing APIs to be wrapped and exposed consistently to AI agents.

Thanks to this modularity and standardization, tools are reusable across different services and agents within your organization. MCP provides a common language for agents, which makes it much easier for your enterprise to build agentic applications.
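To make the schema idea concrete, here is a minimal sketch of a tool description and the two JSON-RPC 2.0 messages an agent uses to discover and invoke it. The `tools/list` and `tools/call` methods and the `name`/`description`/`inputSchema` fields follow the MCP specification; the `search_files` tool, its arguments, and the `handle` dispatcher are hypothetical stand-ins for a real server.

```python
import json

# Hypothetical tool: its name, description and argument schema are illustrative.
SEARCH_TOOL = {
    "name": "search_files",
    "description": "Search a set of files for a query string.",
    "inputSchema": {  # plain JSON Schema describing the tool's inputs
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}

def search_files(query: str) -> str:
    # Stand-in implementation; a real server would search actual files.
    return f"0 matches for {query!r}"

def handle(request: dict) -> dict:
    """Minimal dispatcher for two MCP methods, returning JSON-RPC 2.0 responses."""
    if request["method"] == "tools/list":
        result = {"tools": [SEARCH_TOOL]}
    elif request["method"] == "tools/call":
        params = request["params"]
        if params["name"] != "search_files":
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = search_files(**params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

# The agent first discovers the available tools, then invokes one by name.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "search_files", "arguments": {"query": "invoice"}}})
print(json.dumps(call["result"]))
```

Because the schema travels with the tool, an agent can work out how to call `search_files` from the `tools/list` response alone, with no tool-specific integration code.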

The core of MCP revolves around the following three components:

- Hosts: Hosts are LLM applications (like Claude Desktop or IDEs) that initiate connections. Think of the host as the application the end user is working in, such as Copilot or Cursor.

- Clients: Clients live inside the host application, and each one maintains a 1:1 connection with a server; they essentially act as orchestrators.

- Servers: Servers are abstractions of external services and provide context, tools, and prompts to clients.
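The division of roles can be sketched in a few lines of Python. This is a toy in-process model, not an SDK: the `Server`, `Client`, and `Host` classes are hypothetical, and real clients talk to servers over a transport rather than through direct method calls. What it shows is the shape described above: each client owns exactly one server connection, and the host combines what its clients discover.

```python
from dataclasses import dataclass, field

@dataclass
class Server:
    """Hypothetical stand-in for an MCP server wrapping one external service."""
    name: str
    tools: dict = field(default_factory=dict)  # tool name -> callable

    def list_tools(self) -> list[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **kwargs):
        return self.tools[tool](**kwargs)

@dataclass
class Client:
    """Holds a 1:1 connection to a single server."""
    server: Server

@dataclass
class Host:
    """The user-facing application; it owns one client per server."""
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> None:
        self.clients.append(Client(server))

    def available_tools(self) -> dict[str, Client]:
        # Combine tools from every client into one catalogue for the LLM.
        return {tool: c for c in self.clients for tool in c.server.list_tools()}

    def call(self, tool: str, **kwargs):
        # Route the call through the client whose server exposes this tool.
        return self.available_tools()[tool].server.call_tool(tool, **kwargs)

host = Host()
host.connect(Server("files", {"search_files": lambda query: [f"{query}.txt"]}))
host.connect(Server("crm", {"lookup_customer": lambda cid: {"id": cid}}))
print(sorted(host.available_tools()))
print(host.call("search_files", query="report"))
```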

Moreover, it is important to understand that there are essentially two ways in which a client can interact with the server:

- Remote MCP connections: MCP clients connect to MCP servers over the Internet, establishing a long-lived connection using HTTP and Server-Sent Events (SSE), and the client is authorised to access resources on the user’s account using the OAuth protocol.

- Local MCP connections: MCP clients connect to MCP servers on the same machine, using stdio as a local transport method.
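For local connections, the MCP stdio transport exchanges JSON-RPC messages as newline-delimited JSON: each message is serialized onto a single line and must not contain embedded newlines. A minimal sketch of that framing follows, using an in-memory stream in place of a real subprocess's stdin/stdout; the `write_message` and `read_messages` helpers are illustrative names, not part of any SDK.

```python
import io
import json

def write_message(stream, message: dict) -> None:
    # json.dumps (without indent) escapes newlines inside strings,
    # so each message occupies exactly one line.
    stream.write(json.dumps(message) + "\n")

def read_messages(stream):
    # Yield one parsed JSON-RPC message per non-empty line.
    for line in stream:
        if line.strip():
            yield json.loads(line)

# In a real setup this would be the pipe to the server process.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
for msg in read_messages(pipe):
    print(msg["method"])
```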

Seeing is believing

Developers are already adapting tools and models to work according to the MCP specification. And, given that the protocol aims to become the standard, this opens up the possibility of adding new capabilities to solutions built with MCP in mind without extra (development) cost for the client.

In essence, MCP provides a way for a client to access tools, resources, and prompts through a server.

Source: https://modelcontextprotocol.io/introduction

For example, let’s assume you have an MCP server that offers search functionality (a tool) over a set of files (a resource). Your users connect to the server with a client and, through the familiar chat interface, start asking questions that are answered by searching the files.

When you add new functionality to the server, e.g., the ability to update (tool) a database (resource), users will be able to perform the new actions without updating their client.

This is really powerful, as the client remains stable while the server keeps adding new tools and resources (you’ll still need to let your users know).
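The search-then-update scenario can be sketched as follows. The registry, the `search_files` and `update_record` tools, and the generic client function are hypothetical; the point is that the client hard-codes no tool names, so a tool registered on the server later shows up on the next listing without any client change. In the actual protocol, a server can also send a `notifications/tools/list_changed` notification to prompt clients to fetch the list again.

```python
# Hypothetical server-side registry: tool name -> (description, handler)
REGISTRY = {}

def register(name, description):
    def decorator(fn):
        REGISTRY[name] = (description, fn)
        return fn
    return decorator

@register("search_files", "Search a set of files")
def search_files(query):
    return f"searched files for {query}"

def list_tools():
    # Roughly what a server returns for tools/list (input schemas omitted here).
    return [{"name": n, "description": d} for n, (d, _) in REGISTRY.items()]

def call_tool(name, **arguments):
    return REGISTRY[name][1](**arguments)

def generic_client():
    # The client only lists and calls what it finds; no tool names are baked in.
    return [t["name"] for t in list_tools()]

print(generic_client())

# Later, the server team ships a new capability; the client code is untouched.
@register("update_record", "Update a row in a database")
def update_record(table, row_id, values):
    return f"updated {table}/{row_id}"

print(generic_client())
print(call_tool("update_record", table="orders", row_id=42, values={"status": "paid"}))
```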

Its simplicity means that MCP can act as an open standard for agents, a lingua franca similar to how REST became the de facto way to exchange data on the web (with some exceptions; looking at you, SOAP!).

Ready for primetime? Not yet…

MCP, however, still has some limitations, partly due to its young age. Most demos seen online use local MCP connections, which don’t scale in enterprise settings. Remote connections are more complicated. Take authentication, for example: securely connecting AI to tools is paramount, but early versions of MCP lacked strong, standardised security protocols, which was a major barrier for businesses. However, the ecosystem is evolving rapidly: MCP recently incorporated industry-standard security protocols (like OAuth 2.1), similar to those that secure many online services businesses already use.

While this is definitely an improvement, it does not fix every problem: making MCP work seamlessly and securely within complex enterprise environments isn't straightforward yet. In other words, MCP is not "plug and play" from a security perspective. It takes careful implementation and security reviews, and possibly waiting for the standard to mature further or relying on trusted vendor platforms.
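As an illustration of one piece a remote MCP client has to get right: OAuth 2.1, on which MCP's authorization builds, requires PKCE (Proof Key for Code Exchange, RFC 7636) for the authorization code flow. Below is a minimal sketch of generating a PKCE code verifier and its `S256` challenge with the Python standard library. The helper names are hypothetical, and the redirect, token request, and token storage are deliberately left out.

```python
import base64
import hashlib
import secrets

def make_code_verifier(num_bytes: int = 32) -> str:
    # 32 random bytes -> 43 URL-safe characters, within RFC 7636's 43-128 range.
    raw = secrets.token_bytes(num_bytes)
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")

def s256_challenge(verifier: str) -> str:
    # code_challenge = BASE64URL(SHA256(ASCII(code_verifier))), without padding.
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")

verifier = make_code_verifier()
challenge = s256_challenge(verifier)
# The client sends `challenge` (with code_challenge_method=S256) in the
# authorization request, keeps `verifier` secret, and reveals it only
# in the token request, so an intercepted authorization code is useless alone.
print(len(verifier), len(challenge))
```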

Governance is another major gap: the protocol itself doesn't define tool lifecycle management or policy enforcement, nor does it provide observability standards. Furthermore, ensuring reliable agent behaviour may require deterministic controls such as execution budgets or retry limits, which are currently outside the core MCP spec.
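Since execution budgets and retry limits sit outside the core spec, the host (or a layer around the client) has to enforce them. A minimal sketch under that assumption: `GuardedCaller`, `BudgetExceeded`, and the `call_tool` callable it wraps are hypothetical names, and the limits are arbitrary.

```python
import time

class BudgetExceeded(RuntimeError):
    """Raised when an agent has used up its tool-call budget."""

class GuardedCaller:
    def __init__(self, call_tool, max_calls=10, max_retries=2, backoff_s=0.0):
        self.call_tool = call_tool      # underlying function performing a tools/call
        self.remaining = max_calls      # total attempts allowed for this agent run
        self.max_retries = max_retries  # extra attempts per call after the first
        self.backoff_s = backoff_s

    def __call__(self, name, **arguments):
        last_error = None
        for attempt in range(self.max_retries + 1):
            if self.remaining <= 0:
                raise BudgetExceeded(f"no budget left for {name!r}")
            self.remaining -= 1  # every attempt, including retries, costs budget
            try:
                return self.call_tool(name, **arguments)
            except Exception as exc:  # in practice, retry only transient errors
                last_error = exc
                if attempt < self.max_retries:
                    time.sleep(self.backoff_s * (2 ** attempt))  # exponential backoff
        raise RuntimeError(f"{name!r} failed after {self.max_retries + 1} attempts") from last_error

# A hypothetical flaky tool that fails twice before succeeding.
failures = {"count": 0}
def flaky_tool(name, **arguments):
    if failures["count"] < 2:
        failures["count"] += 1
        raise ConnectionError("temporary failure")
    return f"{name} ok"

guarded = GuardedCaller(flaky_tool, max_calls=5, max_retries=2)
print(guarded("search_files", query="q"), guarded.remaining)
```

Charging retries against the same budget keeps a misbehaving tool from silently multiplying an agent's cost.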

MCP alone is not enough

It should be emphasized that MCP is best understood as a lightweight coordination protocol, a “common language” that LLM applications speak, not a complete platform. It standardizes agent-tool communication but doesn't inherently handle identity management, policy enforcement, observability, or tool lifecycle governance. Real enterprise value depends on integrating MCP within a broader architecture that provides these critical functions. In other words, adopting MCP isn't just about the protocol; to be truly useful and safe in an enterprise setting, it requires investment in the surrounding infrastructure: security, monitoring, and tool management.
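As an illustration of that surrounding infrastructure, here is a sketch of a thin gateway between agents and MCP tool calls that enforces a per-role allowlist and emits a structured audit log line for every call. The roles, the policy table, and `gateway_call` are hypothetical; in a real deployment, identity would come from your identity provider and the logs would feed your observability stack.

```python
import json
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("mcp.gateway")

# Hypothetical policy: which tools each role may invoke.
POLICY = {
    "analyst": {"search_files"},
    "admin": {"search_files", "update_record"},
}

class PolicyViolation(PermissionError):
    pass

def gateway_call(role, tool, arguments, call_tool):
    """Check the policy, forward the call, and record a structured audit event."""
    allowed = tool in POLICY.get(role, set())
    event = {"role": role, "tool": tool, "allowed": allowed}
    if not allowed:
        log.info(json.dumps(event))
        raise PolicyViolation(f"role {role!r} may not call {tool!r}")
    start = time.perf_counter()
    try:
        result = call_tool(tool, **arguments)
        event["status"] = "ok"
        return result
    except Exception:
        event["status"] = "error"
        raise
    finally:
        # Runs on success and failure alike, so every allowed call is logged.
        event["duration_ms"] = round((time.perf_counter() - start) * 1000, 2)
        log.info(json.dumps(event))

def fake_call_tool(tool, **arguments):
    # Stand-in for a real MCP tools/call round trip.
    return f"{tool} done"

print(gateway_call("analyst", "search_files", {"query": "q"}, fake_call_tool))
try:
    gateway_call("analyst", "update_record", {"row_id": 1}, fake_call_tool)
except PolicyViolation as exc:
    print("blocked:", exc)
```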

There is more to MCP than just servers

Most content about MCP focuses on servers, which show how MCP standardizes the connection to an external service. Other aspects, like clients and hosts, get less attention, despite being vital to integrating the protocol into private AI use cases and open-source tooling.

For example, how will your on-prem applications connect to MCP servers? In these scenarios, you must think beyond hosting MCP servers, as you also become responsible for creating and hosting MCP clients.
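When you build the client yourself, its first job is the MCP lifecycle handshake: an `initialize` request declaring the protocol version, its capabilities, and client info, followed by a `notifications/initialized` notification, after which it can list and call tools. The sketch below only constructs those JSON-RPC messages. The client name and version are hypothetical placeholders, and `2025-03-26` is one published spec revision, so check which revision your servers support.

```python
import itertools
import json

PROTOCOL_VERSION = "2025-03-26"  # one published spec revision; negotiated per server
_ids = itertools.count(1)

def request(method, params=None):
    # Requests carry an id so the matching response can be paired with them.
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

def notification(method):
    # Notifications have no id and receive no response.
    return {"jsonrpc": "2.0", "method": method}

def handshake_messages():
    # A real client sends `initialize`, waits for the server's response,
    # and only then sends the remaining messages.
    return [
        request("initialize", {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "onprem-app", "version": "0.1.0"},  # hypothetical
        }),
        notification("notifications/initialized"),
        request("tools/list"),
    ]

for m in handshake_messages():
    print(json.dumps(m))
```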

Final Thoughts

MCP is here to stay, and feels just a few weeks away from being an absolute must-have. Hence, organizations that want to retain their competitive advantage in the AI era should start exploring how to implement MCP effectively, in order to standardize and accelerate both new and existing GenAI use cases.

As with any IT product, best practices apply. Security, governance, and observability are critical and should be prioritised in order to use MCP effectively across your organization. But, while much is still undefined, its promise and potential make it one of the most exciting ways to interface with different cloud providers, external services, and models. In a world where MCP becomes the standard, new models can support it from day one, easing integration with all future models. For this dream to come true, however, a lot will depend on how the community and model builders approach it.

Would you like to know more? Feel free to contact us!

Written by

Giovanni Lanzani
