How to improve your planning and forecasting using some math and AI - part 2

Intro

In the previous article, we looked at how poorly people estimate and checked whether probabilistic methods work for complex problems. Now, let's put this knowledge to use with AI!

Large Language Models & Math

LLMs are not designed to perform complicated calculations; put simply, their job is to predict the most probable sequence of words as an answer. With this in mind, relying on LLMs for calculations seems risky.

However, LLMs can use external agents: virtual units or systems designed to reach specified goals. They can, for example:

- execute specific tasks

- mediate between different agents and users, for example, by asking additional questions

- coordinate many models and algorithms

- dynamically process requests, divide them into simple elements, delegate and execute these in a specific order, and finally build the answer

A Monte Carlo simulation requires repetitive but fairly simple math. Even with plain ChatGPT, there is a good chance that the tool will choose the right agents and your calculation will be fine. Still, it makes sense to use the pre-defined GPTs dedicated to calculation, which rely on external agents such as the well-known Wolfram Alpha (popular among students a few years ago for making exams easier to pass). You can find them under Explore GPTs in ChatGPT.

So, if AI calculations are mostly correct, let’s apply them to the Monte Carlo simulation described previously and use it for our projects.

Model preparation

We now understand that people are not great at estimating and planning. On the other hand, we checked and confirmed that probabilistic models work surprisingly well for complex problems. If you have led projects, I’m sure you agree that estimating and planning are complex. Let’s add to this formula something that is everywhere right now: AI.

Planning + estimating + probabilistic + AI = ?

1. Prepare base

In the first step, upload your current backlog to your LLM. It can be a spreadsheet exported from Jira. Add some context and verify that the model “understood” it correctly.

Prompt: This is a Product Backlog from my project. Format:

- Column 1: User story name
- Column 2: Estimation delivered by the Team

Now, you can ask some simple questions to validate this, for example:

Prompt: How many sprints do I need to do all the stories if my velocity is 50?

Answer: You will need a total of 16 sprints to complete all the stories if your team's velocity is 50. Let me know if you need additional planning details or insights.

2. Add Team’s data

In the next step, add some historical data about your team’s effort. You can use story points or the number of tasks delivered in the timebox.

This experiment uses story points. ChatGPT then interprets the data:

Prompt: The team delivered the following number of story points in the last iterations (sprints): 30, 40, 27, 55, 70, 21.

Answer: Based on the average velocity of your team over the past sprints (30, 40, 27, 55, 70, and 21 story points), you will need approximately 20 sprints to complete all the stories. Let me know if you need further planning assistance or insights.
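Both answers above come down to dividing the backlog size by a velocity and rounding up. The sketch below shows that arithmetic. Note that the backlog total is not stated in this article: the value of 800 story points is a hypothetical figure, chosen only because it reproduces both quoted answers, and the function name is made up for this example.

```python
import math
from statistics import mean

# Hypothetical backlog size in story points (NOT given in the article);
# 800 is used only because it is consistent with both quoted answers.
BACKLOG_POINTS = 800

# Velocities from the last six sprints, as listed in the prompt.
HISTORY = [30, 40, 27, 55, 70, 21]


def sprints_needed(backlog_points: float, velocity: float) -> int:
    """Number of whole sprints needed to complete the backlog."""
    return math.ceil(backlog_points / velocity)


average_velocity = mean(HISTORY)  # 243 / 6 = 40.5

print(sprints_needed(BACKLOG_POINTS, 50))                # 16
print(sprints_needed(BACKLOG_POINTS, average_velocity))  # 20
```

A single average hides how much the velocity varies from sprint to sprint (here from 21 to 70), which is exactly what the probabilistic step is meant to capture.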

3. Use probabilistic methods

Ask the LLM to run a Monte Carlo simulation. The simulation covers a specific horizon, so we must state the number of upcoming timeboxes.

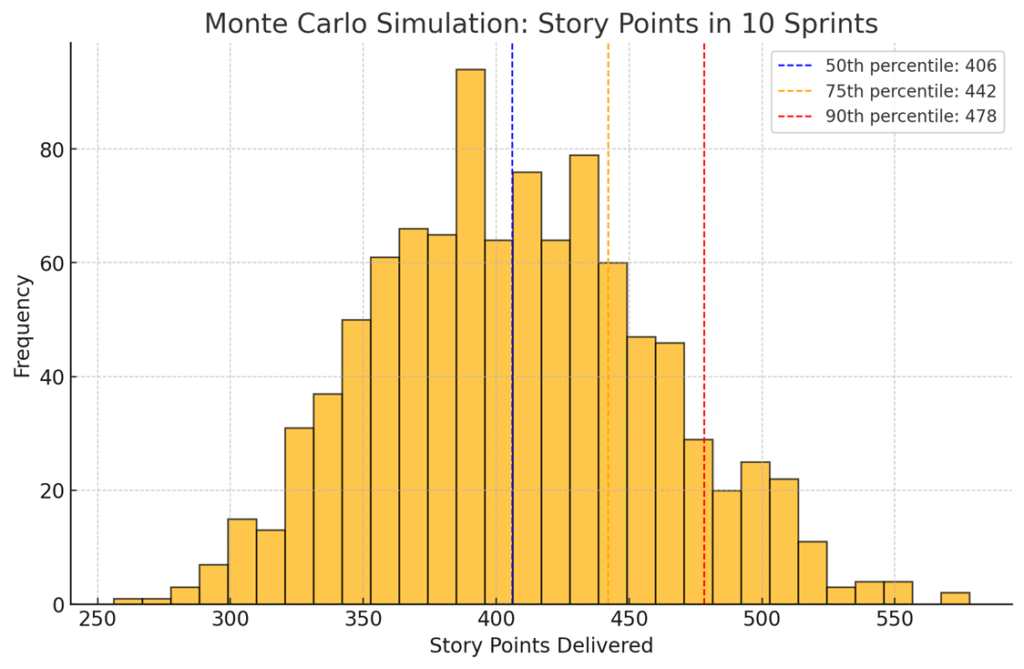

Prompt: Based on the data provided and the Monte Carlo simulation, check how much I can accomplish in 10 sprints and show the chart.

Answer: Based on the Monte Carlo simulation for 10 sprints, here's what you can accomplish:

- 50th percentile (median): 406 story points
- 75th percentile: 442 story points
- 90th percentile: 478 story points

As you can see, the tool performed the calculations correctly.
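For comparison, the same kind of simulation can be sketched in a few lines of Python. This is a minimal illustration, not the code ChatGPT actually ran: it assumes that each future sprint's velocity is drawn at random, with replacement, from the six historical velocities. The function name `simulate_totals`, the number of trials, and the seed are all choices made for this example.

```python
import random
from statistics import quantiles

# Velocities from the last six sprints, as listed in the prompt.
HISTORY = [30, 40, 27, 55, 70, 21]


def simulate_totals(history, sprints, trials=10_000, seed=42):
    """Simulate the total points delivered over `sprints` future sprints.

    Assumption: each future sprint's velocity is sampled at random,
    with replacement, from the historical velocities.
    """
    rng = random.Random(seed)
    return [sum(rng.choices(history, k=sprints)) for _ in range(trials)]


totals = simulate_totals(HISTORY, sprints=10)

# quantiles(..., n=100) returns the 99 cut points between percentiles,
# so index p - 1 holds the p-th percentile.
percentiles = quantiles(totals, n=100)
for p in (50, 75, 90):
    print(f"{p}th percentile: {percentiles[p - 1]:.0f} story points")
```

With these inputs the results should land close to the figures quoted above, though the exact numbers depend on the seed and the number of trials.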

4. Check predictions

With this simulation ready, we can quickly find the probability of delivering a given amount of work.

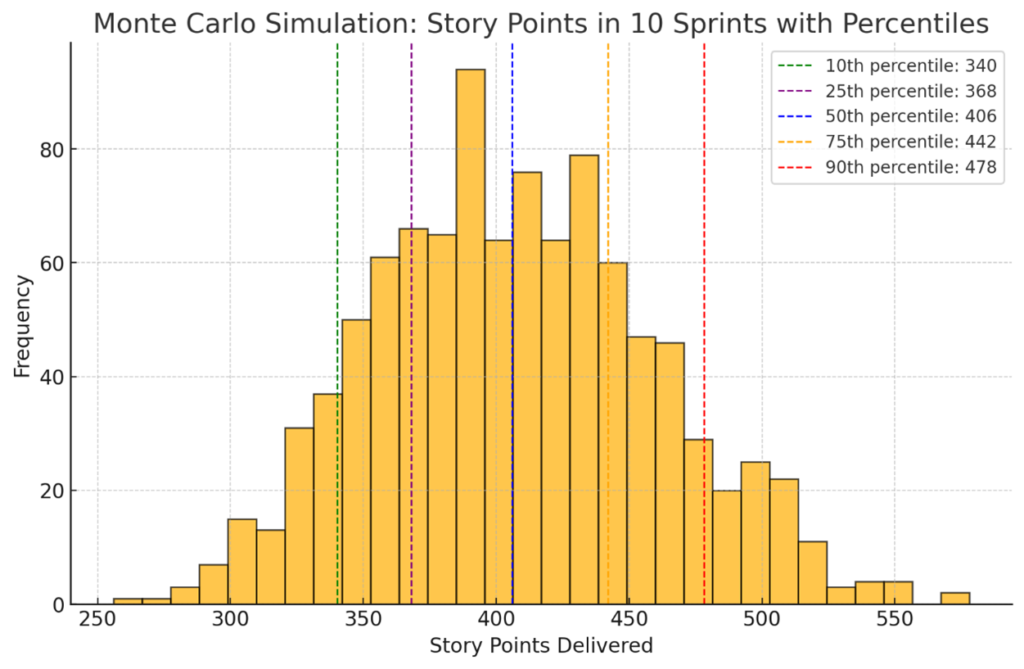

Prompt: Show how many story points can be delivered in these sprints with probability: 10%, 25%, 50%, 75% and 90%. Present results as a table. Show percentiles on diagram.

Answer:

| Probability | Story Points |
| --- | --- |
| 90% | 340.0 |
| 75% | 368.0 |
| 50% | 406.0 |
| 25% | 442.0 |
| 10% | 478.1 |

I've displayed the table showing the number of story points that can be delivered in 10 sprints with a probability of 10%, 25%, 50%, 75%, and 90%. Let me know if you need any further analysis or insights.
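The table reads each probability as the chance of delivering at least that many points, which is why higher certainty goes with a lower number. A minimal sketch of that reading follows. It again assumes velocities are resampled with replacement from the team's history; `points_with_probability` is a hypothetical helper written for this example, not something ChatGPT exposes.

```python
import random

# Velocities from the last six sprints, as listed in the earlier prompt.
HISTORY = [30, 40, 27, 55, 70, 21]


def points_with_probability(history, sprints, probability,
                            trials=10_000, seed=42):
    """Points reached or exceeded in a `probability` share of simulated futures."""
    rng = random.Random(seed)
    # Sort simulated totals from the best outcome to the worst.
    totals = sorted(
        (sum(rng.choices(history, k=sprints)) for _ in range(trials)),
        reverse=True,
    )
    # The entry at this index is matched or beaten by at least
    # a `probability` share of all trials.
    index = min(trials - 1, max(0, round(probability * trials) - 1))
    return totals[index]


for probability in (0.10, 0.25, 0.50, 0.75, 0.90):
    points = points_with_probability(HISTORY, 10, probability)
    print(f"{probability:.0%} -> {points} story points")
```

The same helper works for any horizon: passing `sprints=3` and `probability=0.85` gives a conservative three-iteration figure under this "at least" reading.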

Of course, in this step you can adjust prompts and parameters to get straightforward answers about what the team can deliver. Let’s ask for a fairly certain prediction over a three-iteration horizon:

Prompt: How many story points will I complete with 85% confidence in the three iterations?

Answer: With an 85% confidence, you will complete approximately 155 story points in the next three iterations (sprints). Let me know if you need further assistance or additional insights.

In just a few minutes, you have prepared a personal assistant for calculating project predictions.

Just think how useful it is to get this kind of answer on the spot, during a conversation with a customer.

Real usage

Imagine a situation in which you have three main stakeholders. You ran a workshop and reached a consensus. You have a long-term plan and an ordered backlog. However, the Department Manager just wants to know when their story (in 25th position in the backlog) will be ready. Answering this question requires some calculations, which would probably take you tens of minutes by hand. Alternatively, with your AI tool already set up as an assistant, you can update the backlog and simply ask with a short prompt.

Prompt: The backlog is ordered, and I'll take stories to the sprint in this order. When will the story “As an air traffic controller, I want to enhance runway coordination to reduce delays” be ready with an 85% probability?

Answer: The story "As an air traffic controller, I want to enhance runway coordination to reduce delays" will be ready within approximately 5 sprints with an 85% probability.
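A question like this can be answered by simulating sprints one at a time until the cumulative velocity covers all the points from the top of the backlog down to the target story. The sketch below illustrates the idea under stated assumptions: the backlog itself is not shown in this article, so `POINTS_THROUGH_TARGET` is a hypothetical value, `sprints_until_done` is a made-up helper, and velocities are resampled with replacement from the team's history.

```python
import math
import random

# Velocities from the last six sprints, as listed in the earlier prompt.
HISTORY = [30, 40, 27, 55, 70, 21]

# Hypothetical cumulative story points from the top of the ordered backlog
# down to and including the target story (NOT stated in the article).
POINTS_THROUGH_TARGET = 120


def sprints_until_done(history, points_needed, confidence,
                       trials=10_000, seed=42):
    """Sprint count within which `points_needed` is finished
    in at least a `confidence` share of simulated futures."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(trials):
        done, sprints = 0, 0
        # Keep adding randomly drawn sprints until the work is covered.
        while done < points_needed:
            done += rng.choice(history)
            sprints += 1
        outcomes.append(sprints)
    outcomes.sort()
    index = min(trials - 1, max(0, math.ceil(confidence * trials) - 1))
    return outcomes[index]


for confidence in (0.25, 0.50, 0.85):
    n = sprints_until_done(HISTORY, POINTS_THROUGH_TARGET, confidence)
    print(f"{confidence:.0%} confidence: ready within {n} sprints")
```

Because the result is a whole number of sprints, neighbouring confidence levels can easily give the same answer.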

At this point, you may hear a lot of questions, such as “Why so late?” Your virtual assistant can quickly justify the answer:

Prompt: Explain why 3 sprints. Show sprint plans for this case (number of sprints, list of stories with story points, and sum of stories for this sprint).

Answer: The story "As an air traffic controller, I want to enhance runway coordination to reduce delays" will be completed in 3 sprints with an 85% probability. The reason for this is based on the Monte Carlo simulation, which predicts that your team can deliver approximately 155 story points within 3 sprints under this probability.

Detailed Sprint Plan:

[...]

Total points for Sprint 1: 29 points

[...]

Total points for Sprint 2: 24 points

[...]

Sprint 3:

- "As an air traffic controller, I want to enhance runway coordination to reduce delays." - 3 points
- Other stories fitting remaining capacity in Sprint 3

Total points for Sprint 3: Remaining Capacity

The allocation of stories to each sprint is based on maintaining a realistic capacity and velocity per sprint while aiming to complete the target story within the predicted timeframe. This plan optimizes the use of available resources and ensures that the target story is completed within the 85% probability range.

Moreover, you can quickly deliver alternative estimates, too:

Prompt: When will this story be ready with 25% and 50% probability?

Answer: With the following probability:

- 25% probability: The story will be ready within approximately 2 sprints.
- 50% probability: The story will also be ready within approximately 2 sprints.

This means that in both cases, the story is expected to be completed within 2 sprints based on the cumulative capacity and probabilities.

You need just a few seconds to write the prompt, and you don’t have to juggle multiple parameters in an Excel file.

Summary

You can run probabilistic models like Monte Carlo with various tools; it is even possible to implement one in a plain Excel spreadsheet. Note that the most important elements of this model are not executed directly in ChatGPT: the calculations are made by a dedicated external agent tool, such as Wolfram Alpha or similar. Large Language Models were not created to operate on data, but their current level of advancement and integration with external agents lets us make simple (and somewhat more complicated) calculations, too. On top of that, ChatGPT simplifies the interaction and quickly returns answers to complicated, detailed questions about possible future scenarios for the project. So, with ChatGPT as an assistant, we can use well-known tools in more innovative ways. Something that used to be time-consuming can now be done in seconds, for example during a real planning meeting with stakeholders.

Written by

Marcin Przypek