In my previous post (fluentbytes.com/deploying-asp-net-4-5-to-docker-on-windows) I showed how to create a Docker container image that has an ASP.NET 4.5 website running on the full .NET Framework. In this post I want to show how you can use VSTS build vNext and the release management tools to take advantage of Docker.

Let us start by creating a Docker image as the result of our build. We can then use that image later in the deployment pipeline to easily run the website from a container and run some UI tests against it.

Creating the docker image that has the website, from the build

When we want to use Docker as part of our build, the build agent needs to run on a host that has the Docker capabilities built in. For this we can use either Windows 10 Anniversary Update or Windows Server 2016 Technical Preview 5. In my example I chose Windows Server 2016 TP5, since it is available from the Azure gallery and gives a very simple setup. You choose Windows Server 2016 TP5 with Containers as the base server and, after you provision it in Azure, you download the build agent from VSTS and install it on that server. Once the agent is running, we can create a build that contains several commands to create the container image.

First we need to ensure the build produces the required Web Deploy package and accompanying artifacts, and that they are placed in the artifact staging area. This is done by adding the following MSBuild arguments to the build solution task that is part of the standard Visual Studio build template.

/p:DeployOnBuild=true /p:WebPublishMethod=Package /p:PackageAsSingleFile=true /p:SkipInvalidConfigurations=true /p:PackageLocation=$(build.stagingDirectory)
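To make the role of each switch a bit more explicit, here is a minimal Python sketch that assembles the same argument string from a dictionary. The helper name `msbuild_args` is hypothetical and not part of VSTS or MSBuild; the property names and the `$(build.stagingDirectory)` macro are copied from the argument line above.

```python
# Hypothetical helper (not part of VSTS or MSBuild): it only builds the
# argument string that you paste into the build solution task.
def msbuild_args(properties):
    """Turn a dict of MSBuild properties into '/p:Name=Value' switches."""
    return " ".join(f"/p:{name}={value}" for name, value in properties.items())


package_properties = {
    "DeployOnBuild": "true",          # run the publish step as part of the build
    "WebPublishMethod": "Package",    # publish as a Web Deploy package
    "PackageAsSingleFile": "true",    # emit the package as a single file
    "SkipInvalidConfigurations": "true",
    # VSTS expands this macro before MSBuild sees the argument.
    "PackageLocation": "$(build.stagingDirectory)",
}

print(msbuild_args(package_properties))
```

Keeping the properties in one place like this is only a convenience for reading and reviewing them; the build task itself still just receives the flat string.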

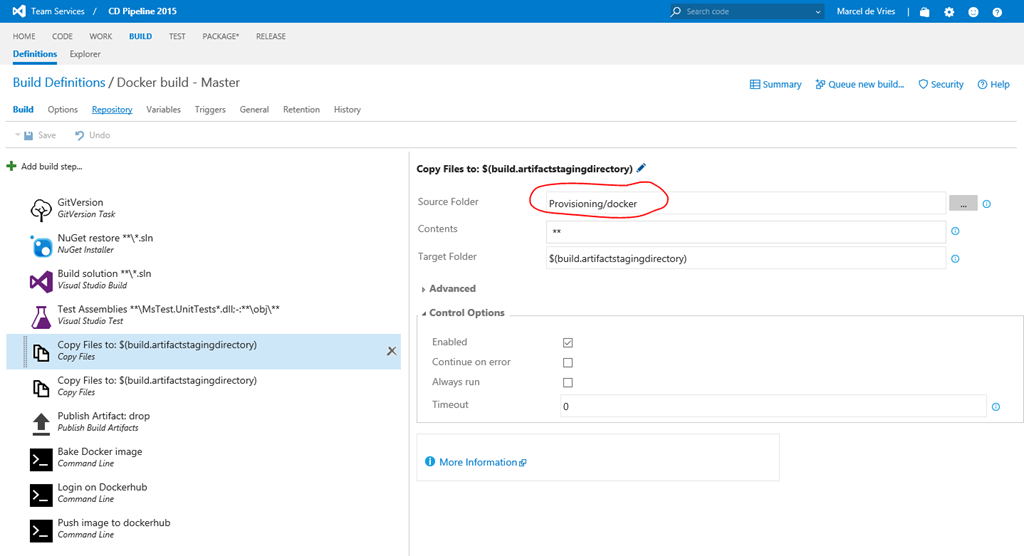

After the package has been created and the files have been copied to the artifacts staging location, we add an additional copy task that copies the Docker files I described in my previous post to the artifacts staging directory, so they become part of the output of my build. I put the Docker files in my Git repo, so they are versioned and part of every build.

After copying the Docker files, we add a couple of command line tasks that we will look at in more detail.

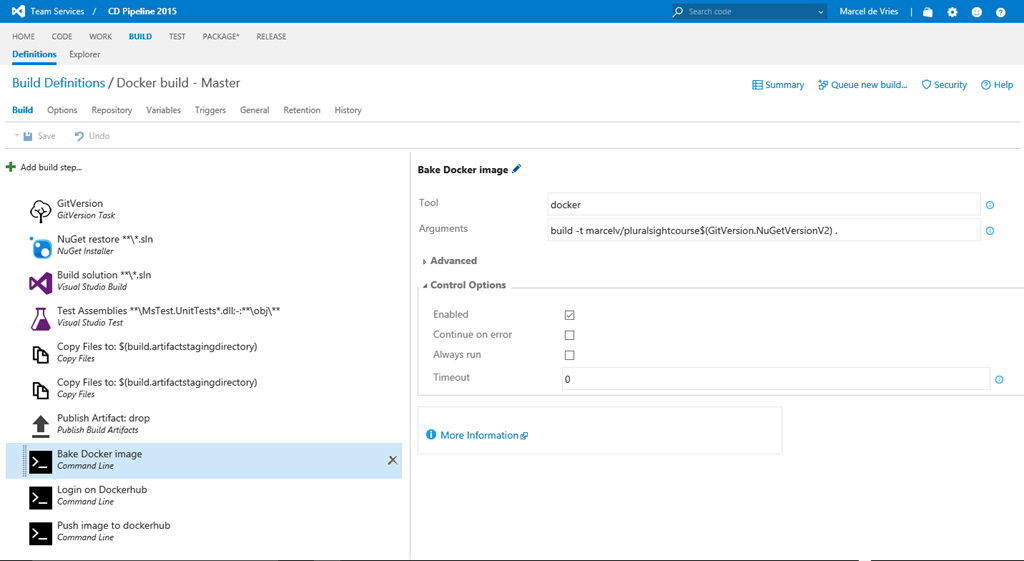

In the following screenshot you can see the additional build steps I used to make this work. The first extra command I added is the docker build command, which builds the image based on the Dockerfile I described in my previous post. I just added the Dockerfile to the Git repository, as you normally do with any infrastructure scripts you might have. The Dockerfile refers to the package by the name that the build solution task produces.

As in the previous post, I pass in arguments that give the image a tag, which I can use later in my release pipeline to run the image.

You can see I am using the variable $(GitVersion.NugetVersionV2). This variable is available to me because I use the GitVersion task, which you can get from the marketplace. GitVersion determines the semantic version of the current build based on your branch and changes, and this can be overridden by using Git commit messages. For more information on this task and how it works, see the GitVersion documentation.
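As a rough illustration of how the version ends up in the image tag, the following Python sketch composes a docker build command line. The repository name `myaccount/mywebsite`, the fallback version, and the helper name are hypothetical. It also assumes that the agent exposes $(GitVersion.NugetVersionV2) to scripts as the environment variable `GITVERSION_NUGETVERSIONV2`, so verify that on your own agent.

```python
import os

# Hypothetical repository name; replace it with your own Docker Hub repository.
IMAGE_REPOSITORY = "myaccount/mywebsite"


def docker_build_command(version, context_dir="."):
    """Return the argument list for 'docker build' with a versioned tag."""
    tag = f"{IMAGE_REPOSITORY}:{version}"
    return ["docker", "build", "--tag", tag, context_dir]


# Assumption: the GitVersion variable is visible as this environment variable.
# The fallback "1.0.0" is only there so the sketch runs outside a build.
version = os.environ.get("GITVERSION_NUGETVERSIONV2", "1.0.0")
print(" ".join(docker_build_command(version)))
```

In a command line task you would normally type the resulting command directly and let VSTS expand the variable; the sketch only shows how the tag is put together.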

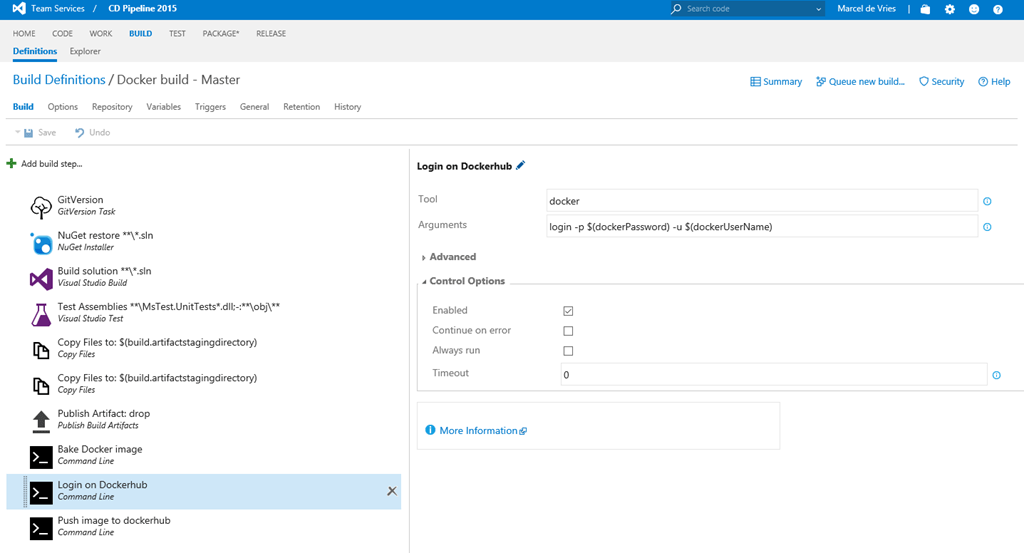

Now that the image is created, I also want to be able to use it on any of my machines, using Docker Hub as the repository for my images. So the next step is to log in to Docker Hub.

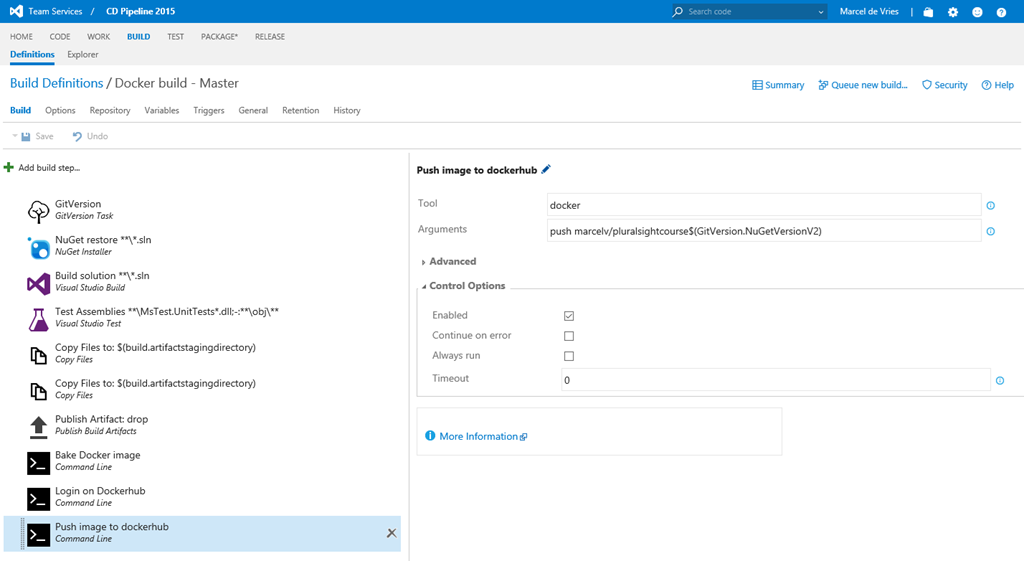

After I have logged in to Docker Hub, I can push the newly created image to the repository.
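The login and push steps can be sketched in the same way. This is an illustration under assumptions, not the exact commands from my build: the image tag and the user name are hypothetical, and `--password-stdin` requires a Docker client that supports that option.

```python
# Hypothetical image tag, for illustration only.
IMAGE_TAG = "myaccount/mywebsite:1.0.0"


def docker_login_command(username):
    """Return 'docker login' arguments; the password is supplied on stdin."""
    return ["docker", "login", "--username", username, "--password-stdin"]


def docker_push_command(image_tag):
    """Return the arguments that push a tagged image to its repository."""
    return ["docker", "push", image_tag]


# Print the commands instead of running them, so no credentials are needed here.
print(" ".join(docker_login_command("myaccount")))
print(" ".join(docker_push_command(IMAGE_TAG)))
```

Whatever form the login takes, the password is best kept in a secret build variable rather than typed into the task arguments.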

And now we are done with our build. Next up is using the image in our release pipeline.

Running a docker image in the release pipeline

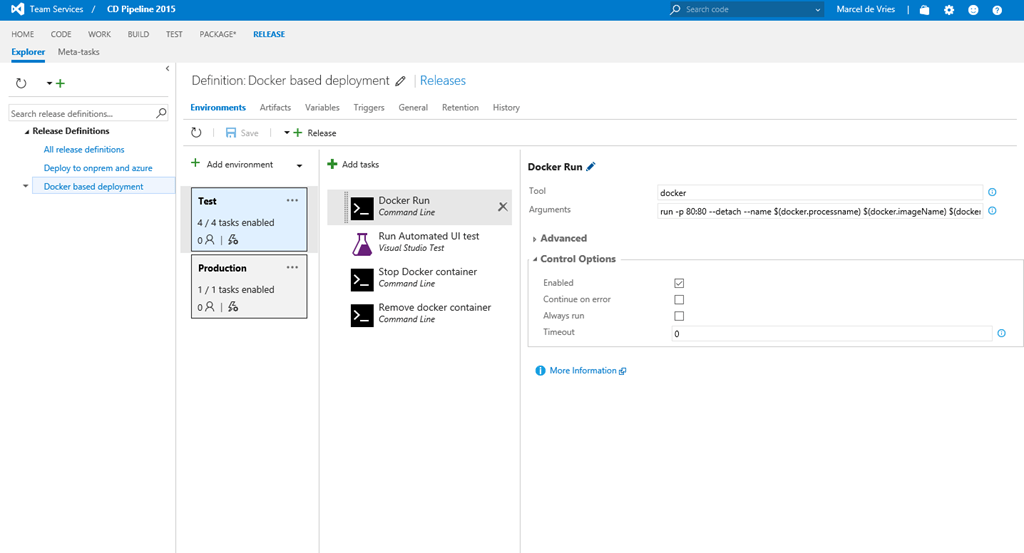

Now I go to release management and create a new release definition. To run the Docker container, the release agent needs to run on a Docker-capable machine, exactly as with the build. We can then issue commands on that machine to run the image, and Docker will pull it from Docker Hub when it is not found on the local machine. Here you can see the release pipeline with two environments, test and production.

As you can see, the first step is nothing more than issuing the docker run command and mapping port 80 of the container to port 80 on the machine. We also use the --detach option, since we do not want the agent to be blocked while the container runs; this starts the container and returns control to the release agent. I also pass in a name, so I can use the same name in a later stage to stop the container and remove it.
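Spelled out, the run step looks roughly like the command composed by the Python sketch below. The helper name, image tag, and container name are hypothetical; the options themselves (`--detach`, `--publish`, `--name`) are the ones described above.

```python
def docker_run_command(image_tag, container_name, host_port=80, container_port=80):
    """Return the argument list for starting the website container in the background."""
    return [
        "docker", "run",
        "--detach",                                     # do not block the release agent
        "--publish", f"{host_port}:{container_port}",   # host port : container port
        "--name", container_name,                       # reused later by stop and rm
        image_tag,
    ]


# Hypothetical names; in the release definition the container name would come
# from a release variable.
print(" ".join(docker_run_command("myaccount/mywebsite:1.0.0", "mywebsite-test")))
```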

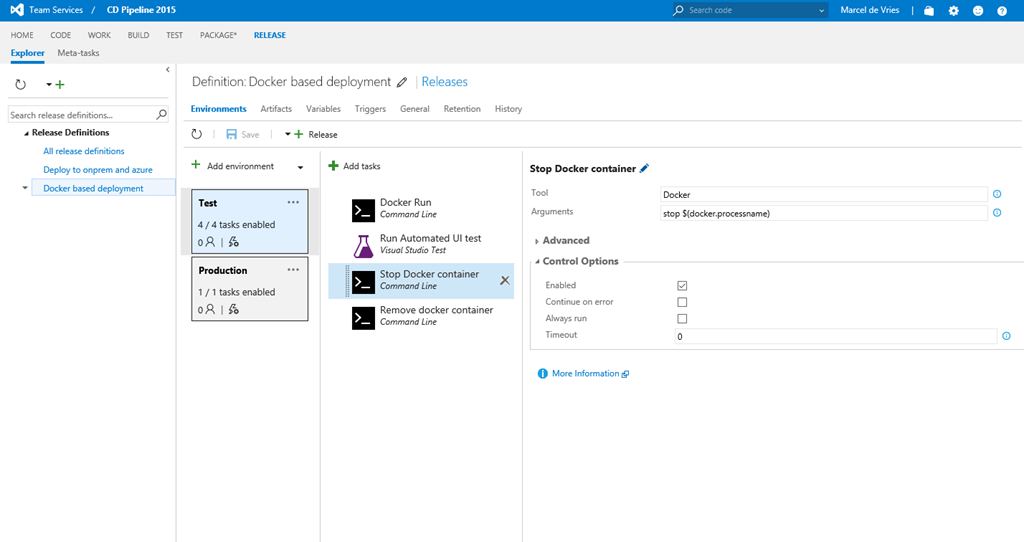

Next I run a set of Coded UI tests to validate that my website is running as expected, and then I use the following docker command to stop the container:

docker stop $(docker.processname)

The variable $(docker.processname) is just a variable I defined for this release template. It contains an arbitrary name that I can then use across multiple steps.

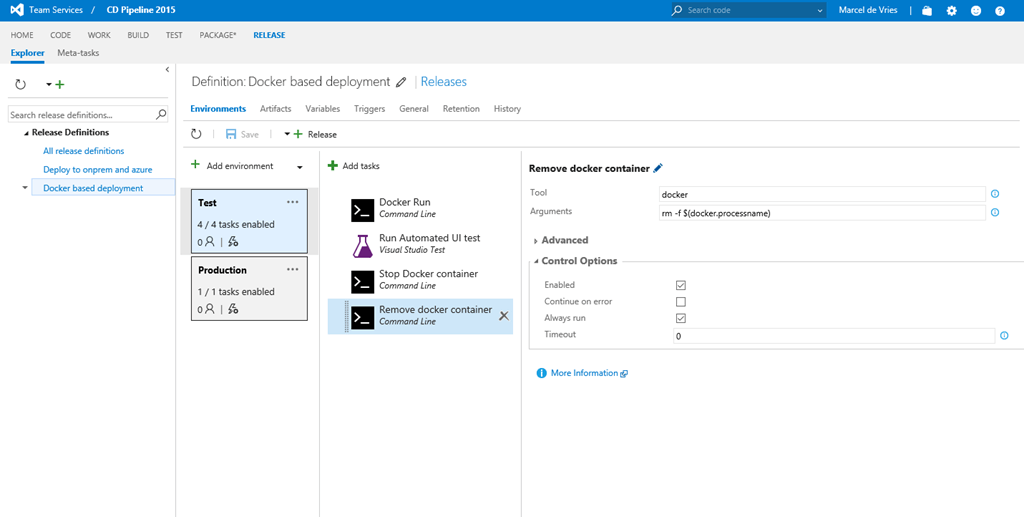

Finally, I run the command to remove the container after use. This ensures I can run the pipeline again with a new image after the next build.

For this I use the docker command:

docker rm -f $(docker.processname)

I used the -f flag and set the task to always run, so I am guaranteed the container is removed, even after an unsuccessful release. This ensures the repeatability of the process, which is of course very important.
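The run, test, stop, and remove sequence, including the "always run" cleanup, maps naturally onto a try/finally block. The Python sketch below is only an analogy for the release steps, not how the release agent works internally; `release_stage`, the injected `execute` callable, and the names in the dry run are hypothetical.

```python
import subprocess


def release_stage(container_name, image_tag, run_tests, execute=subprocess.check_call):
    """Run the container, test it, and always remove it afterwards."""
    try:
        execute(["docker", "run", "--detach", "--publish", "80:80",
                 "--name", container_name, image_tag])
        run_tests()
        execute(["docker", "stop", container_name])
    finally:
        # Mirrors the "always run" task: --force removes the container
        # even if it is still running because an earlier step failed.
        execute(["docker", "rm", "--force", container_name])


# Dry run: print each command instead of executing it (names are hypothetical).
release_stage("mywebsite-test", "myaccount/mywebsite:1.0.0",
              run_tests=lambda: None, execute=print)
```

Passing `execute` as a parameter keeps the sketch runnable on a machine without Docker. With the default `subprocess.check_call`, the same function would issue the real commands.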

Summary

As you can see, it is quite easy to build containers during the build and use them in the release pipeline. For now I used simple command line tasks to do the job; I assume it is just a matter of time before we see some Docker-specific tasks in the marketplace. Microsoft has Docker tasks in the marketplace, but these only target Linux (at the time I am writing this) and require a Linux Docker machine connection to work. In my example I focused on leveraging Docker on Windows, and I hope we will see tasks for this in the future.