TL;DR

- Browser extensions are a valuable tool for integrating Generative AI into existing processes, since they enable rapid prototyping without modifying legacy backends.

- We demonstrate such a soft integration by presenting a Chrome extension that creates multiple-choice questions based on the content of the current webpage.

The state of LLM use cases

While the usefulness of language models for specialised natural language processing (NLP) tasks has been apparent for a few years, the emergent abilities of large language models (LLMs) with billions of parameters, combined with fine-tuning on chat inputs, came as a surprise. Generic chat interfaces like ChatGPT have become a Swiss army knife for various tasks; for example, programming without LLM support puts you at a disadvantage nowadays. However, the integration of LLMs into existing use cases is still a work in progress. We have not yet ordered a pizza via a chat interface, for instance.

Despite all the hype, not every proposed LLM integration will make sense. We therefore need scalable approaches to rapidly prototype such integrations without setting up specialised backends. In this post, we discuss combining browser extensions with LLM endpoints for exactly this kind of rapid prototyping.

Example: automatically generated questions

To maximise learning outcomes, technical documentation should be paired with separate multiple-choice tests that check whether all concepts have been understood. External resources like Quizlet collect pre-defined questions for this purpose, and webpages like Quizbot even generate multiple-choice questions in real time using LLMs. However, neither solution is integrated directly into the webpage being read, so user acceptance is questionable due to UX gaps: switching to a different webpage and copy-pasting content is a hurdle many users aren’t willing to accept.

In fact, we are not aware of any tool that generates multiple-choice questions directly on the webpage where content is consumed. Thus, we think that this example can act as a blueprint for a soft integration of LLMs into existing systems.

A “Question Generator” browser extension

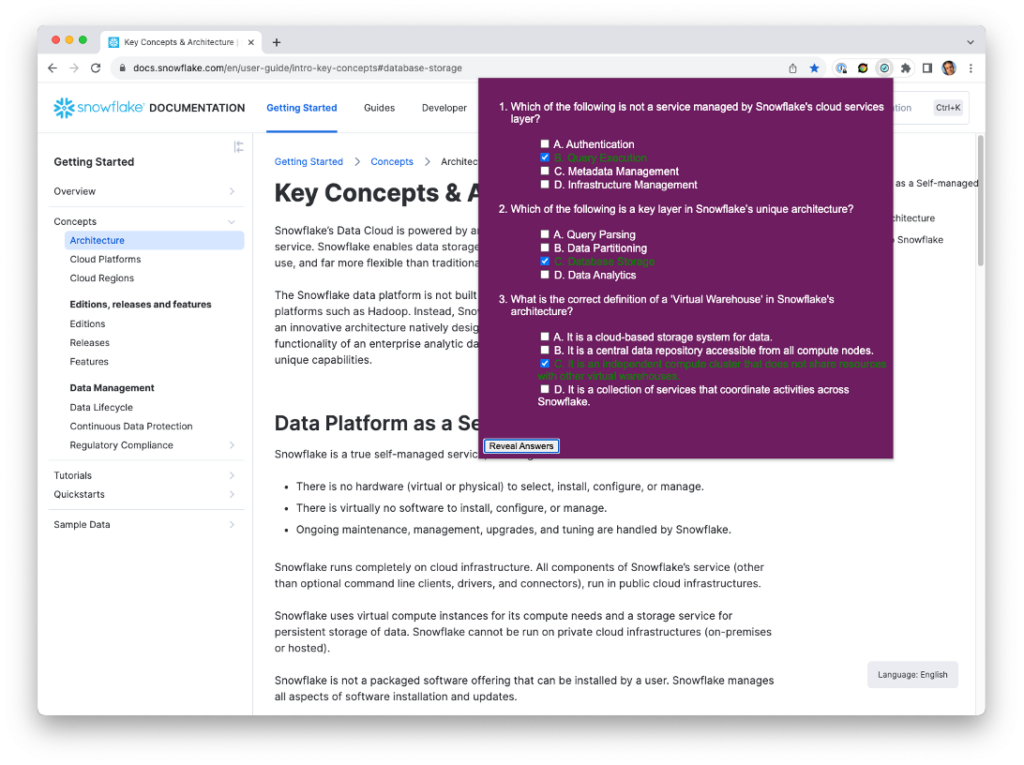

We created the Chrome extension shown in the screenshot (on a Snowflake documentation page). It generates three multiple-choice questions (in the purple window) based on the current page content. To generate these questions, the page content is extracted as plain text and sent to the OpenAI Chat Completions endpoint along with a few example questions (in-context learning). Once the user has selected answers, they can check whether their selection is correct:
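The request flow described above can be sketched in TypeScript. This is a minimal sketch under assumptions, not the extension's actual code: the `Question` shape, the prompt wording, the example question, the 12,000-character limit, and the default model name `gpt-4o-mini` are placeholders chosen for illustration. The fetch function is passed in as a parameter so the logic can be exercised without network access.

```typescript
// Hypothetical shape of one generated question (not taken from the extension's source).
interface Question {
  question: string;
  options: string[];
  answer: number; // index of the correct option
}

// Minimal fetch-like signature, so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<any> }>;

// Hypothetical example question shown to the model (in-context learning).
const EXAMPLE_QUESTIONS: Question[] = [
  {
    question: "What does LLM stand for?",
    options: ["Large language model", "Linear logic machine", "Low-latency memory"],
    answer: 0,
  },
];

// Collapse whitespace and cap the length so the prompt fits the context window.
// The character limit is an assumption, not a value from the original extension.
function truncate(text: string, maxChars = 12000): string {
  const clean = text.replace(/\s+/g, " ").trim();
  return clean.length > maxChars ? clean.slice(0, maxChars) : clean;
}

// Build the JSON body for a Chat Completions request (model name is a placeholder).
function buildChatRequest(pageText: string, model = "gpt-4o-mini") {
  return {
    model,
    messages: [
      {
        role: "system",
        content:
          "Generate exactly three multiple-choice questions about the user's text. " +
          "Reply with a JSON array of objects with keys question, options, answer. " +
          "Example: " + JSON.stringify(EXAMPLE_QUESTIONS),
      },
      { role: "user", content: truncate(pageText) },
    ],
  };
}

// Extract the JSON array from the reply and drop malformed questions.
function parseQuestions(reply: string): Question[] {
  const start = reply.indexOf("[");
  const end = reply.lastIndexOf("]");
  if (start === -1 || end <= start) throw new Error("No JSON array in reply");
  const parsed = JSON.parse(reply.slice(start, end + 1)) as Question[];
  return parsed.filter(
    (q) =>
      q !== null && typeof q === "object" &&
      typeof q.question === "string" &&
      Array.isArray(q.options) && q.options.every((o) => typeof o === "string") &&
      Number.isInteger(q.answer) && q.answer >= 0 && q.answer < q.options.length,
  );
}

// Send the page text to the Chat Completions endpoint and parse the answer.
async function generateQuestions(pageText: string, apiKey: string, fetchFn: FetchLike): Promise<Question[]> {
  const res = await fetchFn("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify(buildChatRequest(pageText)),
  });
  if (!res.ok) throw new Error(`OpenAI request failed: ${res.status}`);
  const data = await res.json();
  return parseQuestions(data.choices[0].message.content);
}
```

In the extension, the plain text could come from `document.body.innerText`, e.g. `generateQuestions(document.body.innerText, apiKey, fetch)`. Requesting JSON and validating each question before rendering is one way to cope with occasionally malformed model replies.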

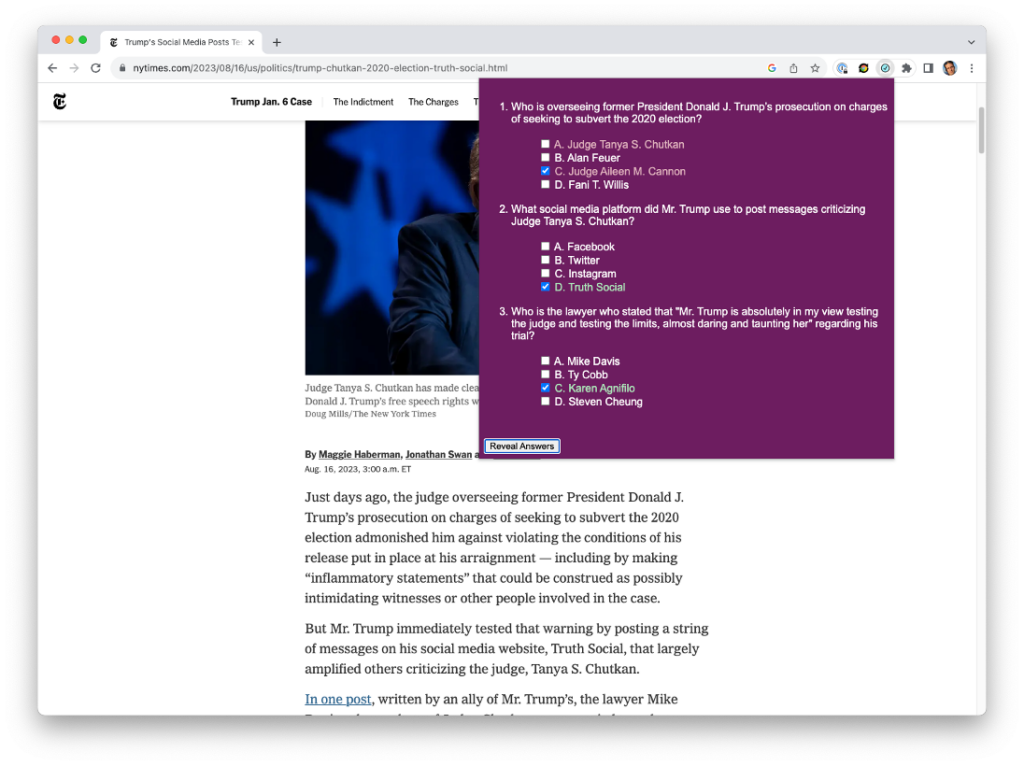

The extension also works on journalistic articles, for instance from the New York Times:

How to use the “Question Generator” extension

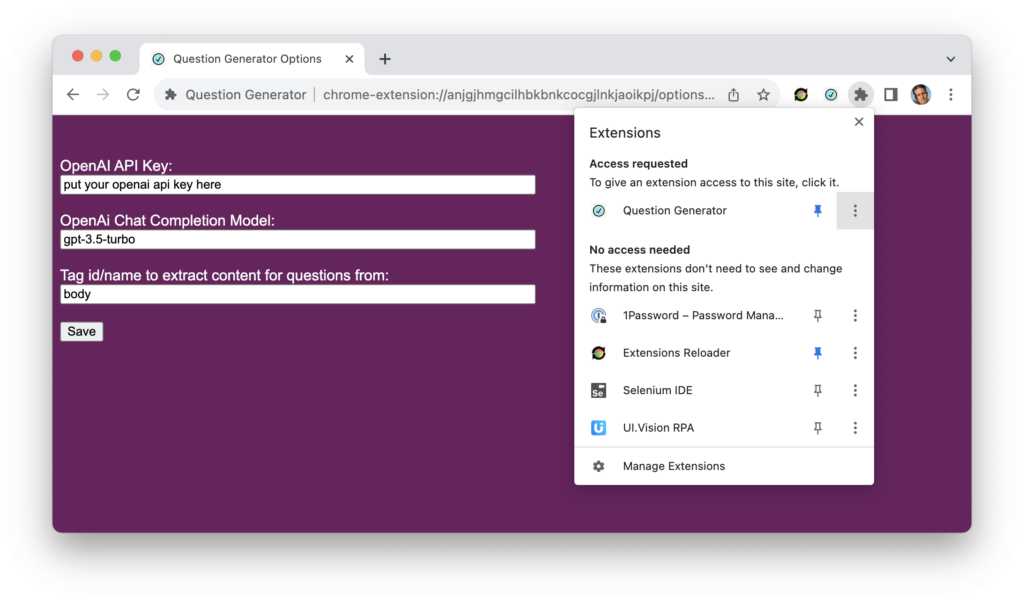

- Download the extension code directory from GitHub: questiongenerator

- Open Chrome's extension manager (puzzle-piece icon, then “Manage extensions”, or chrome://extensions), switch on “Developer mode”, and select this directory with “Load unpacked”.

- As illustrated in the next figure, open the extension options and enter your OpenAI API key.

- Go, for example, to User-guide intro-key-concepts and click the extension icon to test your knowledge!
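Storing the API key entered on the options page might look like the following sketch. It assumes Manifest V3, where `chrome.storage` methods return promises; the `KeyValueStore` interface, the `openaiApiKey` field name, and the `sk-` prefix check are illustrative assumptions rather than details of the actual extension. Programming against a small interface instead of `chrome.storage` directly keeps the logic testable outside the browser.

```typescript
// Minimal subset of chrome.storage.StorageArea used here (promise form, Manifest V3).
interface KeyValueStore {
  get(keys: string[]): Promise<Record<string, unknown>>;
  set(items: Record<string, unknown>): Promise<void>;
}

// Hypothetical name of the storage field holding the key.
const API_KEY_FIELD = "openaiApiKey";

// Save the key from the options form after a basic sanity check
// (assumes OpenAI keys start with "sk-").
async function saveApiKey(store: KeyValueStore, rawKey: string): Promise<void> {
  const key = rawKey.trim();
  if (!key.startsWith("sk-")) throw new Error("This does not look like an OpenAI API key");
  await store.set({ [API_KEY_FIELD]: key });
}

// Load the key before calling the API; undefined if nothing has been saved yet.
async function loadApiKey(store: KeyValueStore): Promise<string | undefined> {
  const items = await store.get([API_KEY_FIELD]);
  const value = items[API_KEY_FIELD];
  return typeof value === "string" ? value : undefined;
}
```

On the options page, `saveApiKey(chrome.storage.local, input.value)` would persist the key (this requires the `"storage"` permission in `manifest.json`). `chrome.storage.local` keeps it on the current device, whereas `chrome.storage.sync` would sync it across the user's signed-in browsers.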

Developing browser extensions

Developing Chrome browser extensions has a steep learning curve. Without prior knowledge of JavaScript, this used to be a tedious endeavour. With ChatGPT as a coding assistant, however, it is possible to create such extensions in a few hours, even with only basic JavaScript knowledge. While ChatGPT cannot yet deliver a complete end-to-end implementation, it can create building blocks that are then integrated manually. For instance, the code that translates the generated questions into HTML and that checks and colours the answers was written entirely by ChatGPT.
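The kind of building block mentioned above, turning generated questions into HTML and colouring the selected answer, could look like the sketch below. It is a hypothetical illustration, not the code ChatGPT produced for the extension; `renderQuestion`, `answerColour`, and the markup are assumptions. Model output is escaped before being inserted as HTML, since it is untrusted text.

```typescript
// Hypothetical question shape (same assumption as in the request sketch).
interface Question {
  question: string;
  options: string[];
  answer: number; // index of the correct option
}

// Escape untrusted model output before inserting it as HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Render one question as a fieldset of radio buttons; the shared name groups its options.
function renderQuestion(q: Question, index: number): string {
  const options = q.options
    .map((opt, i) => `<label><input type="radio" name="q${index}" value="${i}"> ${escapeHtml(opt)}</label>`)
    .join("<br>");
  return `<fieldset id="q${index}"><legend>${escapeHtml(q.question)}</legend>${options}</fieldset>`;
}

// Colour for the selected option: green if correct, red otherwise.
function answerColour(q: Question, selected: number): "green" | "red" {
  return selected === q.answer ? "green" : "red";
}
```

A content script could then set `panel.innerHTML = questions.map(renderQuestion).join("")` on a hypothetical `panel` element and, on each radio-button change, colour the chosen label with `answerColour`.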