The previous post showed how to create an unsecured Service Fabric test cluster in Azure and how to run a Windows Container on it. In this follow-up post, I’ll show you what’s going on inside the cluster, using the Docker command line. This knowledge can be very useful when troubleshooting.

Verify your container is running

This sets an environment variable that tells the Docker client where the Docker service can be reached.
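Assuming the variable in question is `DOCKER_HOST` (the standard variable the Docker CLI reads to locate the daemon), the following Python sketch shows how a client resolves its endpoint: it uses `DOCKER_HOST` when set and otherwise falls back to a default. The Windows named-pipe default used here is an assumption, and `docker_endpoint` and `describe_endpoint` are hypothetical helpers for illustration only.

```python
import os
from urllib.parse import urlparse

# Assumption: the default named-pipe endpoint the Docker client uses on Windows.
DEFAULT_WINDOWS_ENDPOINT = "npipe:////./pipe/docker_engine"


def docker_endpoint(env=None):
    """Return DOCKER_HOST if it is set and non-empty, else the default endpoint."""
    env = os.environ if env is None else env
    return env.get("DOCKER_HOST") or DEFAULT_WINDOWS_ENDPOINT


def describe_endpoint(endpoint):
    """Split an endpoint such as tcp://127.0.0.1:2375 into (scheme, host, port)."""
    parts = urlparse(endpoint)
    return parts.scheme, parts.hostname, parts.port


if __name__ == "__main__":
    # For a named pipe, host and port are None; for tcp:// they are filled in.
    print(describe_endpoint(docker_endpoint()))
```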

Now check that Docker is up and running by typing:

docker version

This command displays the versions of the Docker client and the Docker server (daemon); at the time of writing, that is 1.12.
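If you want to script this check, a minimal Python sketch could ask only for the server version through the `--format` Go template and compare it against a minimum. The helper names `parse_version` and `server_version` are hypothetical; the parser only reads the leading `major.minor[.patch]` numbers and ignores any suffix.

```python
import re
import subprocess


def parse_version(text):
    """Extract (major, minor, patch) from a string such as '1.12.2-beta'."""
    match = re.match(r"\s*(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def server_version():
    """Ask the Docker daemon for its version (requires the docker CLI on PATH)."""
    out = subprocess.run(
        ["docker", "version", "--format", "{{.Server.Version}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_version(out.stdout)
```

For example, `server_version() >= (1, 12, 0)` tells you whether the daemon is at least 1.12; tuples compare element by element, so no special version library is needed.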

Next, type:

docker ps --no-trunc

This command lists all running containers without truncating any of the output.

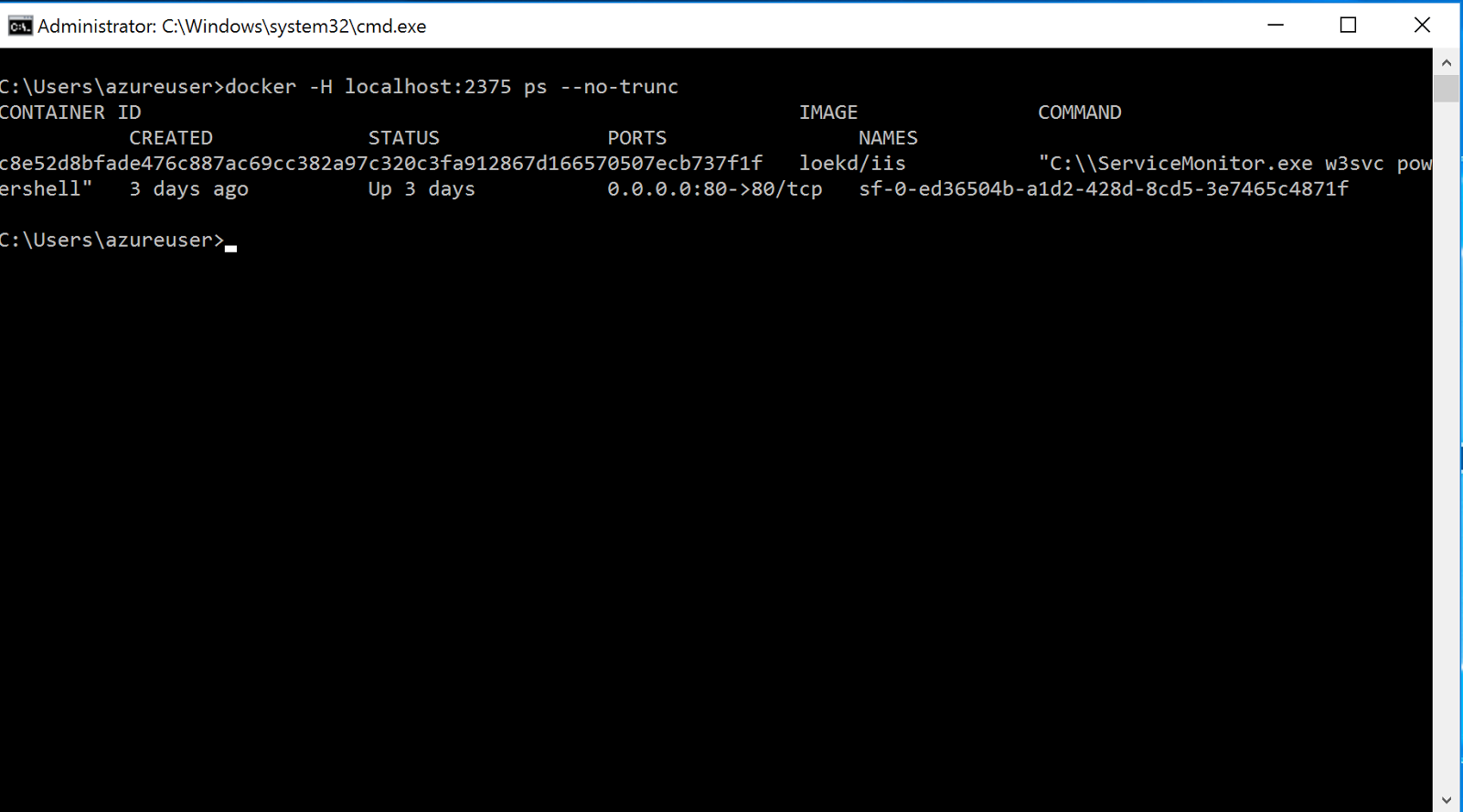

The result should be similar to this:

It shows information about your running containers. Some important elements here are:

- Container ID – which you can use to perform operations on a specific container

- Ports – which indicates that port 80 inside the container is exposed and published (note that this value will be missing if your image doesn’t explicitly expose a port)

- Command – which shows what was executed when the container started; in this case, the w3svc service and PowerShell. (Note that PowerShell was configured in the ServiceManifest.xml file in part 1.)
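The same three columns can be pulled out programmatically. The Python sketch below passes a tab-separated Go template to `docker ps --format` and parses each row; the `ContainerRow` type and the helper names are hypothetical, chosen only for illustration. An empty Ports field simply comes back as an empty string, matching the note about images that expose no port.

```python
import subprocess
from dataclasses import dataclass

# Go template for `docker ps --format`; fields are separated by tabs.
PS_FORMAT = "{{.ID}}\t{{.Image}}\t{{.Ports}}\t{{.Command}}"


@dataclass
class ContainerRow:
    container_id: str
    image: str
    ports: str
    command: str


def parse_ps_output(text):
    """Turn tab-separated `docker ps --format` output into ContainerRow objects."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines, e.g. a trailing newline
        # maxsplit=3 keeps any tabs inside the command in the last field
        container_id, image, ports, command = line.split("\t", 3)
        rows.append(ContainerRow(container_id, image, ports, command))
    return rows


def running_containers():
    """Run `docker ps` with untruncated output and parse the result."""
    out = subprocess.run(
        ["docker", "ps", "--no-trunc", "--format", PS_FORMAT],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_output(out.stdout)
```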

Verify IIS is running

If you want to verify that IIS is running, you can’t (at the time of writing) simply open http://localhost in your browser, due to an issue in WinNAT. Instead, you can use the IP address of the Windows Container. To find it, type:

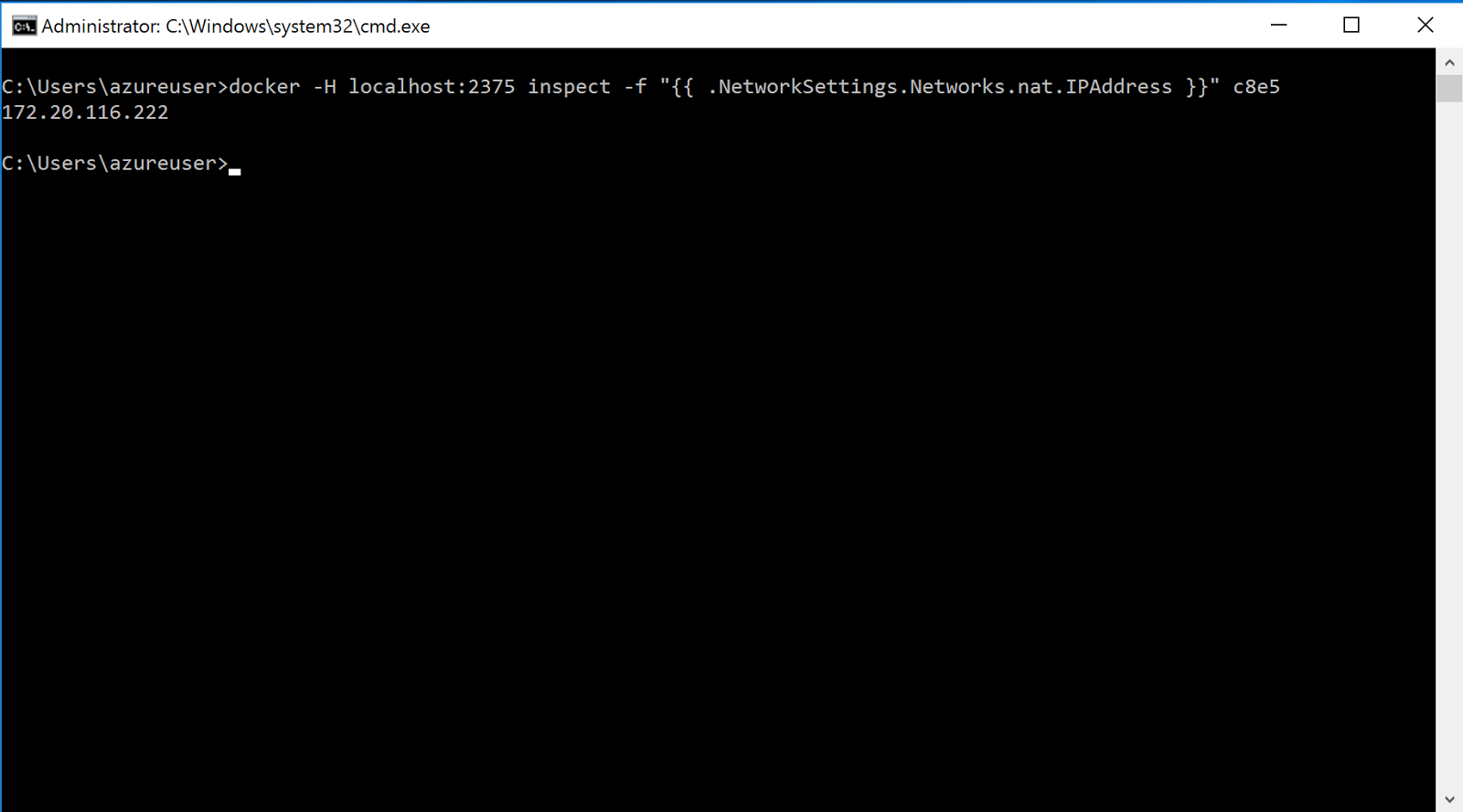

docker inspect -f “{{ .NetworkSettings.Networks.nat.IPAddress }}” c8e5

Note that ‘c8e5‘ is the start of my running Containers’ Container ID, so it will be different in your situation.

The result should be similar to this:

It shows the IP address of your running container. In my environment it’s 172.20.116.222; yours will likely be different.
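Without `-f`, `docker inspect` prints a JSON array with one object per inspected container, and the template above simply walks into that structure. A minimal Python sketch of the same lookup, assuming the container is attached to the `nat` network as in this setup (the helper names are hypothetical):

```python
import json
import subprocess


def ip_from_inspect(inspect_json, network="nat"):
    """Return the IP address on `network` from raw `docker inspect` JSON output."""
    containers = json.loads(inspect_json)  # docker inspect returns a JSON array
    if not containers:
        raise ValueError("docker inspect returned no objects")
    networks = containers[0]["NetworkSettings"]["Networks"]
    return networks[network]["IPAddress"]


def container_ip(container_id_prefix, network="nat"):
    """Inspect a container by (a unique prefix of) its ID and return its IP address."""
    out = subprocess.run(
        ["docker", "inspect", container_id_prefix],
        capture_output=True, text=True, check=True,
    )
    return ip_from_inspect(out.stdout, network)
```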

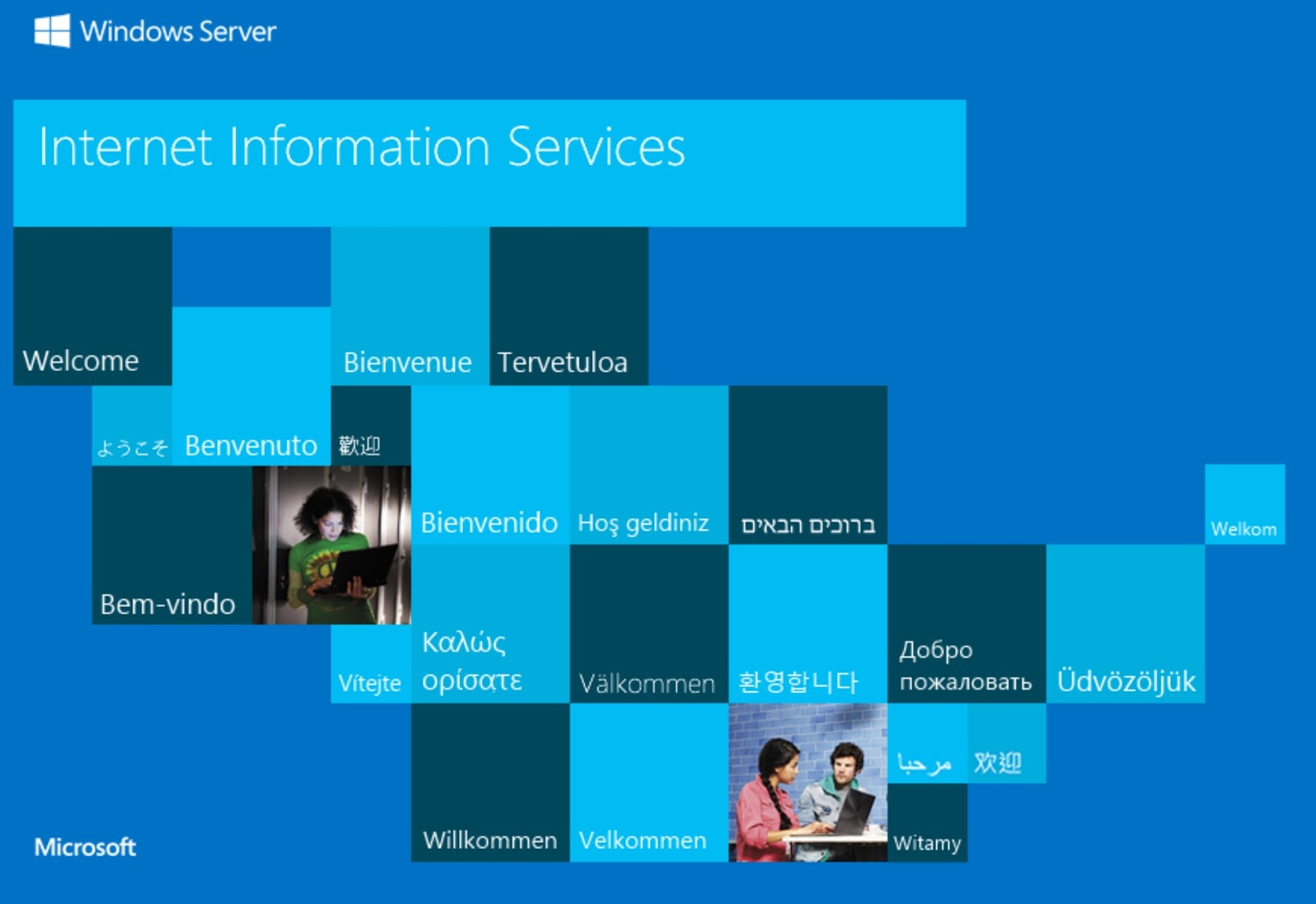

Open up Internet Explorer and navigate to that IP Address, and you should see the familiar IIS start page. This works, because port 80 was defined as exposed in my image loekd/iis.
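Whether a port is exposed can also be read from the `Config.ExposedPorts` section of `docker inspect` output, where keys look like `80/tcp`. The Python sketch below lists the exposed TCP ports and probes the container over HTTP instead of using a browser; `exposed_tcp_ports` and `iis_responds` are hypothetical helpers, and treating only status 200 as success is an assumption.

```python
import json
import urllib.request


def exposed_tcp_ports(inspect_json):
    """List the TCP ports in Config.ExposedPorts, whose keys look like '80/tcp'."""
    config = json.loads(inspect_json)[0].get("Config") or {}
    exposed = config.get("ExposedPorts") or {}  # may be null for images exposing nothing
    ports = []
    for key in exposed:
        port, _, proto = key.partition("/")
        if proto == "tcp":
            ports.append(int(port))
    return sorted(ports)


def iis_responds(ip, port=80, timeout=5.0):
    """Return True if an HTTP GET to the container answers with status 200."""
    try:
        with urllib.request.urlopen(f"http://{ip}:{port}/", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and timeouts all derive from OSError
        return False
```

For instance, `iis_responds("172.20.116.222")` would check the address found earlier; it only makes sense on a machine that can reach the container’s NAT network.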

Enable Remote Desktop to cluster nodes

If you want to enable RDP access to every node in the cluster (even when it scales up and down) you can do so by specifying it in your ARM template. (My sample template has this already configured.)

Note that this exposes RDP over the internet, which has security implications. Use a strong password for the login account, and consider using a Network Security Group or a non-public IP address to restrict access.
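As an illustration of the Network Security Group option, the Python sketch below builds one security rule in the JSON shape ARM templates use, allowing RDP only from a trusted address range. The rule name and source range are hypothetical, and the destination port of 3389 rests on the assumption that NSG rules are evaluated against the backend port after the load balancer’s NAT has been applied.

```python
import ipaddress
import json


def rdp_nsg_rule(allowed_source, priority=100):
    """Build an NSG security rule (ARM JSON shape) allowing RDP only from `allowed_source`."""
    # Normalise and validate the range; raises ValueError for input like "10.0.0.0/33".
    source = str(ipaddress.ip_network(allowed_source, strict=False))
    if not 100 <= priority <= 4096:
        raise ValueError("NSG rule priority must be between 100 and 4096")
    return {
        "name": "AllowRdpFromTrustedRange",  # hypothetical rule name
        "properties": {
            "protocol": "Tcp",
            "sourcePortRange": "*",
            "sourceAddressPrefix": source,
            # Assumption: the NSG sees the backend port (3389) after NAT translation.
            "destinationPortRange": "3389",
            "destinationAddressPrefix": "*",
            "access": "Allow",
            "priority": priority,
            "direction": "Inbound",
        },
    }


if __name__ == "__main__":
    print(json.dumps(rdp_nsg_rule("203.0.113.0/24"), indent=2))
```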

The relevant part of the ARM template is this:

    "inboundNatPools": [
        {
            "name": "LoadBalancerBEAddressNatPool",
            "properties": {
                "backendPort": "3389",
                "frontendIPConfiguration": {
                    "id": "[variables('lbIPConfig0')]"
                },
                "frontendPortRangeEnd": "4500",
                "frontendPortRangeStart": "3389",
                "protocol": "tcp"
            }
        }
    ]

Using this template definition, the Azure Load Balancer is configured to forward public ports, starting at 3389, to the RDP port (3389) on individual VM Scale Set (VMSS) nodes. The first node gets port 3389, the second gets port 3390, and so on.
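The port arithmetic can be sketched in a few lines of Python, assuming the strictly sequential assignment described here (Azure decides the actual per-instance mapping, so check the portal for the real values). The helper names and the host name in the usage note are hypothetical.

```python
FRONTEND_START = 3389  # frontendPortRangeStart
FRONTEND_END = 4500    # frontendPortRangeEnd
BACKEND_PORT = 3389    # backendPort: RDP on every node


def nat_frontend_port(node_index, start=FRONTEND_START, end=FRONTEND_END):
    """Public port for the node at zero-based `node_index`, assuming sequential assignment."""
    port = start + node_index
    if node_index < 0 or port > end:
        raise ValueError(f"node index {node_index} falls outside the port range {start}-{end}")
    return port


def pool_capacity(start=FRONTEND_START, end=FRONTEND_END):
    """Number of nodes the frontend range can address (both ends inclusive)."""
    return end - start + 1


def rdp_targets(public_host, node_count):
    """host:port strings for an RDP client, one per node."""
    return [f"{public_host}:{nat_frontend_port(i)}" for i in range(node_count)]
```

With the range from the template, `pool_capacity()` is 4500 - 3389 + 1 = 1112, far more than a small test cluster needs. An RDP client such as mstsc accepts the `host:port` form, for example `mstsc /v:example.test:3390` for the second node.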

Load Balancer rules that map internet-facing ports to ports on specific backend nodes are called ‘Inbound NAT Rules’.

In the Azure portal, the result of the template deployment should look like this:

In my environment I have a three-node cluster, and every node is now accessible through the public IP address, using its own port.

Read more info about Service Fabric and Scale Sets here.

In this post, I’ve shown some ways to verify that your Windows Containers are running correctly on Azure Service Fabric.
