Do you keep your lights/AC or any other appliances turned on when you’re not home? Or would you turn it off? In general, we have been taught to save on energy bills by turning off appliances when no one is using them. This is beneficial for efficient energy consumption, your monthly utility bill and the environment as well. Can we apply a similar kind of approach to create sustainable AWS workloads?

Sustainable AWS workloads

My friend and colleague Mudit Gupta from Xebia has already pointed out how cost optimization and environmental sustainability in the cloud can go hand-in-hand in his blog Cloud Sustainability – A Union of FinOps and GreenOps. In this blog, we will take one step further to check on tools, services and practices that we can apply to make AWS workloads more environmentally sustainable.

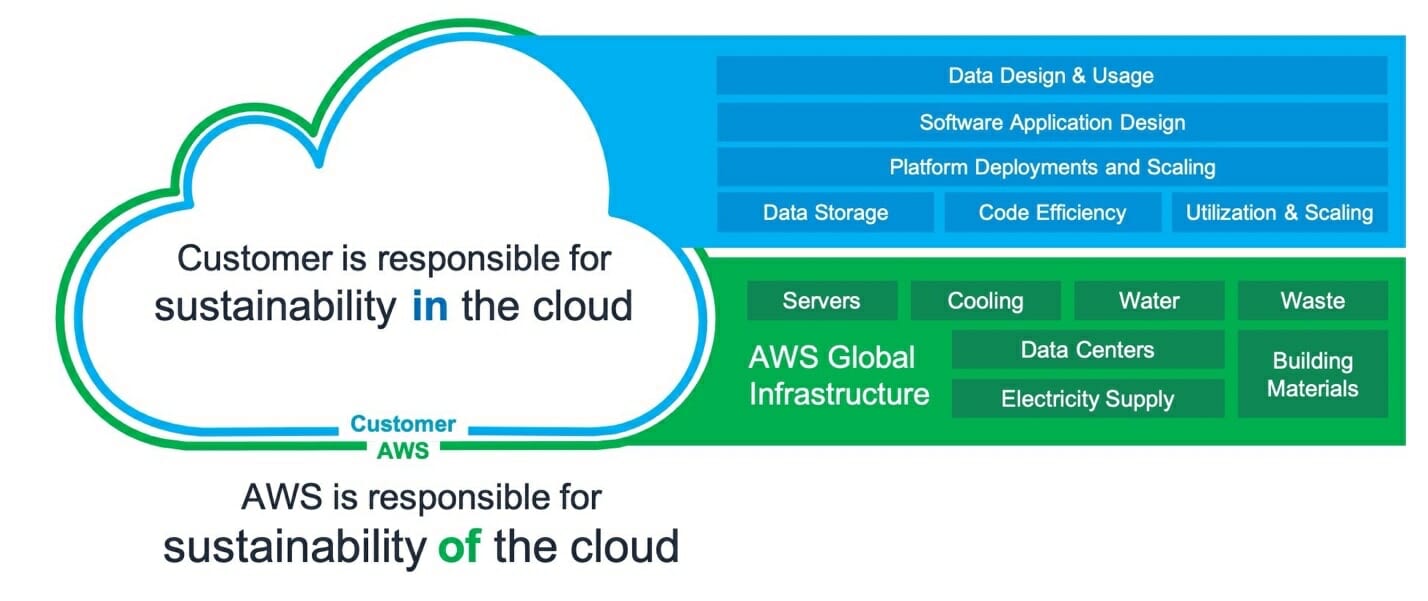

Source: The shared responsibility model – Sustainability Pillar

If you already started your AWS journey, you should be proud of that because by moving from on-premise to cloud, AWS is already helping customers to reduce their carbon footprint by 88% (Source: New – Customer Carbon Footprint Tool | AWS News Blog)

Now, let’s get started to check if l the workloads hosted in the cloud are environmentally sustainable. We are going to touch on three main parts: Compute, Storage and Networking.

Compute

Idle/Under-utilized resources

There are resources running in the cloud, even when we have created that resource only for some testing or POC purposes. Let’s check some of these scenarios:

Scenarios:

- Students/whoever enrolled for Free Tier or wants to get their hands dirty by playing with AWS and keep resources running.

- Developers/Testers spin up compute resources for development/testing and keep running resources during non-business hours/weekends.

- In-production EC2 instances that are over provisioned.

- EKS/EMR/Redshift Clusters that are over provisioned.

- Inefficient Utilization of AWS AppStream

This list can go on.

Key Metrics

In order to identify idle/underutilized resources AWS CloudWatch is your best friend. We can use the CloudWatch Metrics depicted below to check idle/underutilized resources.

| Services | CloudWatch Metrics |

| AWS EC2 | CPUUtilization, Total Number of vCPUs |

| AWS ECS | CPUUtilization |

| AWS EKS | CPUUtilization |

| AWS EMR | Is Idle |

| AWS RDS | Number Of Connections |

| AWS LB | ActiveConnectionCount, HealthyHostCount |

| AWS AppStream | ActualCapacity, AvailableCapacity, InUseCapacity, CapacityUtilization |

There are more services and tools to reduce idle resources and maximise utilization:

- Enable AWS Trusted Advisor to identify idle or underutilized EC2 instances, database instances, Redshift clusters, EBS volumes or idle/underutilized Load Balancers. With Trusted Advisor you can also identify over-provisioned Lambda functions for memory size and functions with excessive time-outs. The Trusted Advisor can also run at the Organisation level and can provide recommendations for all the resources running in the Organisation.

- AWS Auto Scaling: With AWS Auto Scaling you can automatically scale-up and scale-down resources. While defining the Auto Scaling group, you should mention the minimum and maximum values, which should not be the same.

- You can use below scaling types based on your requirements:

- Target tracking scaling: it is recommended to use this policy if you have a target on metrics AverageCPUutilization or the RequestCountPerTarget.

- Step Scaling: if CPU utilization reaches 50% you can 1 add EC2 instance, and if CPU utilization reaches 60% you can add 1 more EC2 instance.

- Scaling based on SQS: in some scenarios, you can also configure ASG based on the load in an AWS SQS queue. See for reference: Scaling based on Amazon SQS – Amazon EC2 Auto Scaling.

- Predictive Scaling: Based on daily and weekly patterns in traffic, we can increase/decrease EC2 instances proactively. It is well suited for Cyclical traffic, recurring batch workload patterns, and applications that take a long time to initialize. This is a scheduled scaling approach compared to dynamic scaling which is a reactive approach and can have cold start time.

- Scheduled scaling: If you know during a specific time of the day or week traffic gets increased or decreased, you can configure a Schedule scaling policy.

- AWS Instance Scheduler: It helps to start and stop your AWS EC2 and AWS RDS instances automatically. For example, you can implement this in your development or any environment to stop resources during weekend or non-business hours. This will reduce cost and energy consumption.

Right Sizing

- If you have workloads with unpredictable spikes, you can consider using burstable T-type instances. This reduces the need to over-provision capacity.

- You can use AWS Trusted Advisor and Compute Optimiser to get right sizing recommendations for your workload.

- Switch to Graviton2-based instances. Graviton2 is the most power-efficient processor. Additionally, Graviton2 provides up to 40% better price-performance over the comparable current generation x86-architecture based instances.

- AWS Compute Optimizer: By enabling AWS Compute Optimizer we can make sure whether resources we provision are underutilized or overutilized. AWS Compute Optimizer recommends optimal AWS resources for your sustainable AWS workloads to reduce costs and improve performance by using machine learning to analyse historical utilization metrics. It covers three types of AWS service – AWS EC2, AWS EBS and AWS Lambda. We can use AWS Compute Optimizer at organisation level and can have a dedicated account that will manage all recommendations centrally.

Use Spot Instances

- Spot Instances use spare capacity which is much cheaper than On-Demand instances.

- By shaping your demand to the existing supply of an EC2 instance capacity, you will improve your overall resource efficiency and reduce idle capacity.

Storage

As the data is the new gold, every organisation is working to manage their data efficiently and effectively. We store huge amounts of data but sometimes we are not sure when or for how long we need that data. So, effective data management is important for sustainability as ineffective utilisation of storage resources on cloud can lead to wastage of energy and money. To make data storage efficient AWS offers various storage options and data lifecycle management policies. Let’s look at some.

Analyze Data Access & Usage Patterns

- Identify the data usage patterns:

- In-frequently accessed data can be stored on magnetic storage rather than a solid-state drive.

- For data archiving use cases, consider using Amazon EFS Infrequent Access, Amazon EBS Cold HDD volumes, and Amazon S3 Glacier.

- To store data efficiently throughout its lifecycle, consider using AWS S3 Lifecycle Management. It will automatically transfer data from one storage class to the other. .

- For data with unknown or changing access patterns, use Amazon S3 Intelligent-Tiering to move objects among S3 storage classes automatedly depending on the object access patterns.

- You should also consider Lifecycle Management for services mentioned below:

- AWS EFS

- EBS Snapshot

- RDS Snapshot

- AWS EFS

Optimize data formats

- Columnar data formats are very efficient compared to row-oriented storage. Columnar storage can significantly reduce the amount of data fetched from disk by allowing access to only the columns that are relevant for the particular query or workload.

- You can improve performance and reduce query costs of Amazon Athena by 30–90 percent by compressing, partitioning, and converting your data into columnar formats such as parquet

Reduce unused storage resources

Making efficient use of storage also helps in achieving sustainability goals. We see EBS being overprovisioned or not attached to any EC2 instance. Also, no retention period has been defined for CloudWatch Logs as logs keep getting piled up and no one uses it.

- We should delete unused EBS volumes. Additionally, define Amazon Data Lifecycle Manager to retain and delete EBS snapshots and Amazon EBS-backed Amazon Machine Images (AMIs) automatically.

- Define CloudWatch logs retention period.

Deduplicate data

Large datasets often have redundant data, which may increase your storage footprint.

- By turning on data deduplication for your Amazon FSx for Windows File Server, you will optimize data storage. For general-purpose file shares, storage space can be reduced by 50–60 percent through deduplication.

- If you have datasets residing in Amazon S3, you can automatically get rid of duplicates by using the FindMatches transform provided by AWS Lake Formation.

Key Monitoring Metrics to check around Data Storage:

| Service | Metric |

| AWS S3 | BucketSizeBytes, S3 Object Access |

| AWS EBS | VolumeIdleTime |

| AWS EFS | StorageBytes |

| Amazon FSx for Lustre | FreeDataStorageCapacity |

| Amazon FSx for Windows File Server | FreeStorageCapacity |

Networking

When you make your applications available to more customers, the packets that travel across the network will increase. Similarly, the larger the size of data, as well as the more distance a packet has to travel, the more resources are required to transmit it. If we don’t optimize the network, it obviously brings latency issues and also leads to inefficient energy consumption. Let’s understand what can be done to optimize the network.

Reduce Data Travel Path.

Data transfer will be more efficient if we reduce data sent over the network, optimize paths for packets, and implement compression techniques.

Keep data transfer within a single region and AZ:

- When transferring data between AWS EC2/Lambda and S3, architect your application to make sure that these transfers happen within the same region.

- Also when transferring data in and out from services like AWS RDS, AWS EC2, AWS EKS, Redshift and other AWS service instances, AWS charges you when data is moved from one Availability Zone to another but it is also not a good practice for sustainability.

- If your application communicates internally, use Private IP for communication.

- If your application communicates between two VPCs (same or different account), use VPC Peering or Transit Gateway.

Read Replicas

- If your workload is within a single region, you should choose regions that are nearest to your end users.

- If you have global presence, you can set up multiple copies of Read Replica in AWS RDS and AWS Aurora. Amazon DynamoDB global tables allow for fast performance and alleviate network load.

CloudFront CDN – Content Delivery Network

With a Content Delivery Network, you can bring data closer to the user. When requested, they cache static content from the original server and deliver it to the user. This shortens the distance each packet has to travel.

- AWS offers CloudFront, a service that optimizes network utilization and delivers traffic over CloudFront’s globally distributed edge network.

- Also, you should have Trusted Advisor enabled, as it will include a check that recommends whether you should use a CDN for your S3 buckets. It analyzes the data transferred out of your S3 bucket and flags the buckets that could benefit from a CloudFront distribution.

- Cache Hit Ratio: CloudFront caches different versions of an object depending upon the request headers (for example, language, date, or user-agent). You can further optimize your CDN’s distribution cache hit ratio (the number of times an object is served from the CDN versus from the origin) with a Trusted Advisor check. It automatically checks for headers that do not affect the object and then recommends a configuration to ignore those headers and not forward the request to the origin.

Edge Computing

- CloudFront Functions can run compute on edge locations and Lambda@Edge can generate Regional edge caches. AWS IoT Greengrass provides edge computing for Internet of Things (IoT) devices.

- In the last re:Invent, AWS made an announcement for AWS Local Zones to run low-latency applications at the edge.

Below are some metrics that can help you optimize the network.

| Service | Metric/Check |

| Amazon CloudFront | Cache hit rate |

| Amazon Simple Storage Service (Amazon S3) | Data transferred in/out of a bucket |

| Amazon Elastic Compute Cloud (Amazon EC2) | NetworkPacketsIn/NetworkPacketsOut |

| AWS Trusted Advisor | CloudFront Content Delivery Optimization |

Reduce data size over your network

Compressing data

- CloudFront not only caches static content but can also help to optimize the network by serving compressed files. We can configure CloudFront to automatically compress objects, which results in faster downloads, leading to faster rendering of web pages.

Optimizing APIs

- Consider reducing API size to reduce network traffic by compressing your messages for your REST API payloads, if your payloads are large. Edge-optimized API endpoints are best suited for geographically distributed clients. Regional API endpoints are best suited for when you have a few clients with higher demands, because they can help reduce connection overhead. Caching your API responses will reduce network traffic and enhance responsiveness.

Apart from the the things we discussed, I would also like to add the below points:

- Location matters: AWS has published about the website Amazon Around the Globe, to showcase their Sustainability actions. To drive sustainability we can check renewable energy projects like Wind Farms or Solar Farms on a map and select our region.

- Use AWS Managed Services: If we manage services like databases, Container Platforms, and Data engineering/analytics platforms it is not only a challenging job but it also leads to inefficient resource utilization. To avoid this, start using AWS Managed service where AWS takes most of the responsibility. Below are some services managed by AWS.

I hope you find this blog helpful to start your Sustainable Development practice. If we do monitoring and logging efficiently I know for sure, we can find more opportunities for sustainable AWS workloads.