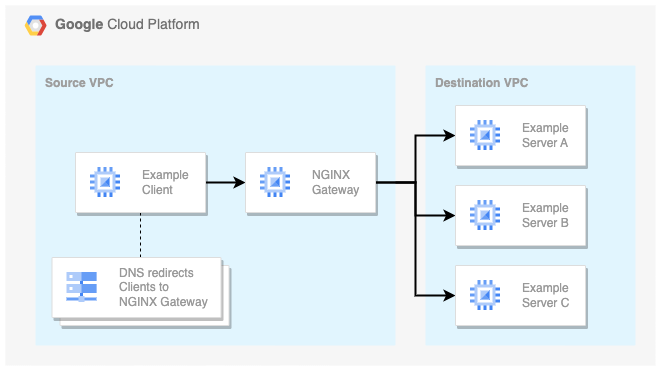

This blog shows how to use an NGINX gateway VM to access services in another Google Cloud VPC. Custom DNS entries direct clients to the gateway (a forward proxy), so no client configuration is needed.

Connectivity overview

First, custom DNS entries direct clients to the NGINX gateway. Next, the NGINX gateway connects clients in the Source VPC to services in the Destination VPC.

The NGINX gateway uses two network interfaces to connect the VPCs. One interface accepts requests from the Source VPC; the other forwards these requests into the Destination VPC. Together, they enable clients in the Source VPC to access resources in the Destination VPC.

Find the full example on GitHub.

Piecing the connectivity together

Clients connect to Destination VPC resources just like any other internet resource:

```shell
curl http://example-server.xebia/
```

The following configuration steps route these requests through the NGINX gateway:

- Redirect Destination VPC requests to the NGINX gateway

Services in the Destination VPC are registered in the private DNS zone of the Source VPC:

```hcl
# The .xebia private DNS zone is made visible to the Source VPC
resource "google_dns_managed_zone" "source_vpc_xebia" {
  project    = var.project_id
  name       = "${google_compute_network.source_vpc.name}-xebia"
  dns_name   = "xebia."
  visibility = "private"

  private_visibility_config {
    networks {
      network_url = google_compute_network.source_vpc.id
    }
  }
}

# Any .xebia domain name is redirected to the nginx-gateway VM
resource "google_dns_record_set" "source_vpc_nginx_gateway_redirect_base_xebia" {
  project      = var.project_id
  managed_zone = google_dns_managed_zone.source_vpc_xebia.name
  name         = "*.xebia."
  # An A record: rrdatas holds an IP address, which a CNAME cannot point to
  type         = "A"
  ttl          = 300
  rrdatas      = [google_compute_address.nginx_gateway.address]
}
```

Note that every .xebia name resolves to the NGINX gateway address.
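To illustrate which names the wildcard record covers, here is a simplified shell model of the private zone. It is a sketch, not Cloud DNS behavior in full: `resolve_xebia` and `GATEWAY_IP` are hypothetical names, and the model assumes the zone contains no records other than the wildcard.

```shell
#!/bin/sh
# Simplified model of the private "xebia." zone in the Source VPC: the
# "*.xebia." wildcard answers with the gateway address for any name below
# .xebia, while the zone apex and names outside the zone get no answer here.
# GATEWAY_IP is a hypothetical stand-in for
# google_compute_address.nginx_gateway.address.
GATEWAY_IP="10.10.0.100"

resolve_xebia() {
  name="${1%.}"                      # accept names with or without a trailing dot
  case "$name" in
    *?.xebia) echo "$GATEWAY_IP" ;;  # covered by the wildcard record
    *) return 1 ;;                   # apex or other zones: not answered here
  esac
}

resolve_xebia example-server.xebia   # prints 10.10.0.100
resolve_xebia xebia || echo "the zone apex is not covered by the wildcard"
```

Because every name maps to the same address, adding a new Destination VPC service requires no DNS change in the Source VPC.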

- Accept HTTP/S requests on the NGINX gateway

The NGINX gateway listens for HTTP and HTTPS requests and forwards them:

```nginx
http {
  ...

  server {
    listen ${load_balancer_ip}:80;
    resolver 169.254.169.254;

    location / {
      # Note: Please include additional security for a production deployment.
      # Exposing all reachable HTTP endpoints is probably not intended.
      proxy_pass http://$http_host$uri$is_args$args;
      proxy_http_version 1.1;
    }
  }
}

stream {
  server {
    listen ${load_balancer_ip}:443;
    resolver 169.254.169.254;

    # Note: Please include additional security for a production deployment.
    # Exposing all reachable HTTPS endpoints is probably not intended.
    proxy_pass $ssl_preread_server_name:443;
    ssl_preread on;
  }
}
```
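The HTTP server block rebuilds the upstream URL from the request itself, so the `Host` header a client sends (for example `example-server.xebia`) becomes the upstream host, which the gateway then resolves through `169.254.169.254`. The sketch below models only the string assembly of `proxy_pass http://$http_host$uri$is_args$args`; `upstream_url` is a hypothetical helper, and it does not reproduce the path normalization NGINX applies to `$uri`.

```shell
#!/bin/sh
# Rough model of how the HTTP server block assembles its upstream URL from
#   proxy_pass http://$http_host$uri$is_args$args;
# NGINX sets $is_args to "?" only when the request has arguments. NGINX's
# $uri is the normalized, decoded path; this sketch uses the path as given.
upstream_url() {
  # $1: Host header, $2: request path, $3: query string (may be empty)
  is_args=""
  if [ -n "$3" ]; then is_args="?"; fi
  printf 'http://%s%s%s%s\n' "$1" "$2" "$is_args" "$3"
}

upstream_url example-server.xebia / ""                # http://example-server.xebia/
upstream_url example-server.xebia /search "q=nginx"   # http://example-server.xebia/search?q=nginx
```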

- Look up resources in the Destination VPC

Attaching the primary network interface (nic0) to the Destination VPC sends DNS queries and default-routed IP packets to the Destination VPC:

```hcl
resource "google_compute_instance_template" "nginx_gateway" {
  ...

  # NOTE: The order of interfaces matters. DNS, the default route, etc.
  # are bound to the primary NIC (nic0).

  # nic0: Destination VPC
  network_interface {
    subnetwork_project = var.project_id
    subnetwork         = google_compute_subnetwork.destination_vpc_nat.self_link
  }

  # nic1: Source VPC
  network_interface {
    subnetwork_project = var.project_id
    subnetwork         = google_compute_subnetwork.source_vpc_proxy.self_link
  }

  ...
}
```
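To confirm on a running gateway that the primary NIC carries the default route, one can inspect `ip route show default`. The sketch below parses such output; `default_route_dev` is a hypothetical helper, and the sample routes, addresses, and interface names (`ens4` for nic0, `ens5` for nic1, consistent with the `ens5` used in the startup script further down) are assumptions for illustration.

```shell
#!/bin/sh
# Prints the device that carries the default route, reading `ip route`
# output on stdin. On the gateway this is expected to be the primary NIC
# (nic0), attached to the Destination VPC.
# The interface names and addresses in SAMPLE_ROUTES are hypothetical.
default_route_dev() {
  awk '$1 == "default" {
    for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }
  }'
}

SAMPLE_ROUTES='default via 10.20.0.1 dev ens4
10.20.0.1 dev ens4 scope link
10.10.0.1 dev ens5 scope link'

printf '%s\n' "$SAMPLE_ROUTES" | default_route_dev   # prints ens4

# On the gateway VM itself: ip route show default | default_route_dev
```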

- Return load-balanced traffic to the Source VPC

The NGINX gateway sits behind an internal passthrough Network Load Balancer. This type of load balancer routes connections directly from clients to healthy backends without terminating or proxying them. To accept load-balanced traffic, Google Cloud configures each backend VM with the IP address of the load balancer.
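Because the load balancer IP is local to the VM, replies to clients are sourced from that IP. The simplified model below shows where such replies leave the VM with and without a source-based policy rule, assuming the client's address is otherwise only reachable through the main table's default route on the primary NIC. `reply_interface`, `LB_IP`, and the interface names are hypothetical.

```shell
#!/bin/sh
# Simplified model of the gateway's routing decision for outgoing packets.
# Without a policy rule, every packet uses the main table, whose default
# route points at the primary NIC (Destination VPC). With a rule such as
#   ip rule add from <load_balancer_ip> table source
# packets sourced from the load balancer IP use the "source" table instead.
LB_IP="10.10.0.100"   # hypothetical load balancer IP

reply_interface() {
  # $1: source address of the packet, $2: "with-rule" or "no-rule"
  if [ "$2" = "with-rule" ] && [ "$1" = "$LB_IP" ]; then
    echo "ens5"   # table source: default via the Source VPC gateway
  else
    echo "ens4"   # table main: default via the Destination VPC gateway
  fi
}

reply_interface "$LB_IP" no-rule     # ens4: the reply would leave via the wrong VPC
reply_interface "$LB_IP" with-rule   # ens5: the reply returns to the Source VPC
reply_interface 10.20.0.2 with-rule  # ens4: upstream traffic to the Destination VPC
```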

To send replies back through the Source VPC, a policy routing rule directs traffic from the load balancer IP to a separate `source` route table, whose default route uses the Source VPC gateway:

```shell
echo "Adding 'source' route table for load balanced traffic in source VPC"

SOURCE_CIDR=$(ip -o -4 addr show dev ens5 | awk '{print $4}')
SOURCE_IP=$${SOURCE_CIDR%"/32"}
SOURCE_GW_IP=$(ip route | grep 'dev ens5 scope link' | awk '{print $1}')

# Return load balanced traffic over source VPC interface
# (register the table only once, so reruns on reboot do not duplicate it)
grep -qsx '1 source' /etc/iproute2/rt_tables || echo "1 source" >> /etc/iproute2/rt_tables
ip rule add from ${load_balancer_ip} table source
ip route add default via "$SOURCE_GW_IP" dev ens5 src "$SOURCE_IP" table source
```
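The parsing steps above can be tried offline against sample output. In the script, `$${...}` is the Terraform template escape for a literal `${...}`, which is why the sketch below uses a single `$`. The sample `ip` output, addresses, and interface names are hypothetical.

```shell
#!/bin/sh
# Runs the script's parsing steps against hypothetical sample output, to show
# what SOURCE_CIDR, SOURCE_IP and SOURCE_GW_IP evaluate to.
SAMPLE_ADDR='3: ens5    inet 10.10.0.2/32 scope global dynamic ens5'
SAMPLE_ROUTES='default via 10.20.0.1 dev ens4
10.20.0.1 dev ens4 scope link
10.10.0.1 dev ens5 scope link'

# Field 4 of `ip -o -4 addr` output is the address in CIDR notation
SOURCE_CIDR=$(printf '%s\n' "$SAMPLE_ADDR" | awk '{print $4}')
# Strip the /32 suffix to get the bare interface address
SOURCE_IP=${SOURCE_CIDR%"/32"}
# The on-link route on ens5 names the Source VPC gateway
SOURCE_GW_IP=$(printf '%s\n' "$SAMPLE_ROUTES" | grep 'dev ens5 scope link' | awk '{print $1}')

echo "$SOURCE_CIDR"    # 10.10.0.2/32
echo "$SOURCE_IP"      # 10.10.0.2
echo "$SOURCE_GW_IP"   # 10.10.0.1
```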

Discussion

While this setup avoids configuring VPC peering and exchanging extensive networking details, it comes with several limitations.

First, it is a one-way communication channel, because it relies on features bound to the primary network interface (DNS, the default route, etc.). Two-way communication requires an additional gateway in the opposite direction.

Second, it is sensitive to overlapping IP addresses. Requests to Destination VPC addresses that fall within the Source VPC proxy subnet leave through the Source VPC interface instead of the Destination VPC gateway. Additional IP rules can steer this traffic, but they require a more thorough change of the routing rules, especially around the local load balancer IP address. Preferably, agree upon a non-overlapping IP address space, or address the problem with a managed service such as Private Service Connect.
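Agreeing on a safe address space can be checked up front. The sketch below tests whether two IPv4 CIDR ranges overlap, for example a proposed Source VPC proxy subnet against a Destination VPC range. It is IPv4-only and relies on 64-bit shell arithmetic; the function names and example ranges are hypothetical.

```shell
#!/bin/sh
# Converts a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Prints the first and last address (as integers) of a CIDR range.
cidr_bounds() {
  base=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  size=$(( 1 << (32 - prefix) ))
  first=$(( base & ~(size - 1) & 0xFFFFFFFF ))
  echo "$first $(( first + size - 1 ))"
}

# Succeeds (exit status 0) when the two ranges share at least one address.
cidrs_overlap() {
  # Positional parameters become: first1 last1 first2 last2
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

if cidrs_overlap 10.10.0.0/24 10.10.0.128/25; then echo "overlap"; fi
if ! cidrs_overlap 10.10.0.0/24 10.20.0.0/24; then echo "no overlap"; fi
```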

Finally, the HTTPS forwarder depends on the ngx_stream_ssl_preread_module to obtain the target domain name from the TLS handshake. Requests without Server Name Indication (SNI) will fail.
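One practical consequence: RFC 6066 does not allow IP address literals as a server name, so TLS clients that connect by IP address send no SNI, and the stream server has no upstream to select. The sketch below models this; `sni_for_target` and `stream_upstream` are hypothetical helpers, and the IP detection is a deliberately simplified IPv4-only check.

```shell
#!/bin/sh
# Rough model of the HTTPS (stream) path, where the upstream comes from
#   proxy_pass $ssl_preread_server_name:443;

# Prints the server name a TLS client would send for a target, if any.
sni_for_target() {
  case "$1" in
    *[!0-9.]*) echo "$1" ;;  # contains other characters: treated as a host name
    *) echo "" ;;            # digits and dots only: IPv4 literal, no SNI sent
  esac
}

# Prints the stream upstream, or fails when no server name is available.
stream_upstream() {
  sni=$(sni_for_target "$1")
  [ -n "$sni" ] || { echo "no SNI: cannot select an upstream" >&2; return 1; }
  echo "$sni:443"
}

stream_upstream example-server.xebia   # prints example-server.xebia:443
stream_upstream 10.20.0.7 || true      # fails: no server name to forward to
```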

Conclusion

There are endless ways to connect Google Cloud VPCs. NGINX gateway VMs with multiple network interfaces allow clients to access services in the Destination VPC, while letting you control and monitor that traffic. Note that while the solution is transparent to clients, subtle errors may appear due to overlapping IP addresses or client requests the gateway does not cover, such as HTTPS requests without SNI.