Airflow 1.10.10 was released on April 9th and in this blog post I’d like to point out several interesting features. Airflow 1.10.10 can be installed as usual with:

pip install apache-airflow==1.10.10

The Airflow 1.10.10 release features a large number of bug fixes, UI improvements, performance improvements, new features, and more. For me personally, these are the main highlights of the Apache Airflow 1.10.10 release:

- Avoid creation of default connections

- Release of the Airflow Docker image

- A generic interface for fetching secrets, plus several implementations

- The webserver is not dependent anymore on the DAG folder

- Two noteworthy UI features:

  - A timezone picker to display your desired timezone

  - When triggering a DagRun from the UI, a JSON config can now be provided

Note that all UI changes are applied only to the RBAC interface. The "standard" interface is deprecated and new changes are only applied to the RBAC interface. In Airflow 2.0 the standard interface will be removed and the RBAC interface will become the new standard interface. Therefore, in order to see and use the UI changes in Airflow 1.10.10, you must activate the RBAC interface:

export AIRFLOW__WEBSERVER__RBAC=True

An empty Airflow installation holds no default users. To add one for just looking around, you can create a user with admin rights:

airflow create_user --role Admin --username airflow --password airflow -e airflow@airflow.com -f airflow -l airflow

This creates a user with username airflow and password airflow.

Avoid creation of default connections

Airflow has had a toggle for not loading example DAGs for a long time: AIRFLOW__CORE__LOAD_EXAMPLES. However, there was no such toggle for example connections until now: AIRFLOW__CORE__LOAD_DEFAULT_CONNECTIONS. The default value is True, so you’ll still have to set it to False explicitly.
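Both toggles follow Airflow's convention of exposing a config option as an environment variable named AIRFLOW__{SECTION}__{KEY}. A minimal sketch of that naming rule in Python follows; the helper name airflow_env_var is hypothetical and not part of Airflow:

```python
import os


def airflow_env_var(section: str, key: str) -> str:
    """Build the environment variable name for an Airflow config option.

    Hypothetical helper for illustration; not an Airflow API.
    """
    return "AIRFLOW__{}__{}".format(section.upper(), key.upper())


# Switch off both the example DAGs and the default connections for any
# Airflow process that inherits this process's environment.
for key in ("load_examples", "load_default_connections"):
    os.environ[airflow_env_var("core", key)] = "False"
```

The variables must be set before the metastore is initialized, since that is when the default connections would otherwise be created.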

I think this was a much requested feature and the default connections have led to unexpected behaviour more than once, so I’m happy to see this toggle.

Release of the Airflow Docker image

Airflow now provides a set of production Docker images:

docker run apache/airflow:1.10.10

The default will come with Python 3.6, but other versions are also available:

- apache/airflow:1.10.10-python2.7

- apache/airflow:1.10.10-python3.5

- apache/airflow:1.10.10-python3.6

- apache/airflow:1.10.10-python3.7

The image is not intended for demonstration purposes, so simply running apache/airflow:1.10.10 will display the Airflow CLI help. It does not come with a convenience function for running both the webserver and scheduler inside a single container for looking around. The images are intended to be used in a production setup, e.g. with a Postgres database, one container running the webserver, and one running the scheduler. For more information on how to use these Docker images, refer to using-the-images on GitHub.

A generic interface for fetching secrets, plus several implementations

Until now, secrets were stored and used via Airflow variables and connections, which store secret values inside the Airflow metastore. In many situations this was not desirable because many companies already use a different system for storing credentials, for example AWS SSM when running on AWS. This posed a challenge, and such secrets were often provided to Airflow via environment variables, e.g. AIRFLOW_CONN_SUPERSECRET=[AWS SSM secret inserted].
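To make the environment variable approach concrete: a connection with id supersecret is read from AIRFLOW_CONN_SUPERSECRET, and its value is a connection URI. The sketch below uses only the standard library to show how such a URI breaks down into connection fields. The helper names and the example URI are made up for illustration, and Airflow's own parsing handles more cases (such as extras in the query string):

```python
from urllib.parse import unquote, urlparse


def conn_env_var(conn_id: str) -> str:
    """Name of the environment variable Airflow checks for a connection id."""
    return "AIRFLOW_CONN_" + conn_id.upper()


def parse_conn_uri(uri: str) -> dict:
    """Split a connection URI into its parts (simplified illustration)."""
    parts = urlparse(uri)
    return {
        "conn_type": parts.scheme,
        "host": parts.hostname,
        "login": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
        "port": parts.port,
        "schema": parts.path.lstrip("/") or None,
    }


# Hypothetical credentials, for illustration only.
example_uri = "postgres://user:s3cret@myhost:5432/mydb"
print(conn_env_var("supersecret"))
print(parse_conn_uri(example_uri))
```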

The environment variable secrets were easily readable and required lots of configuration, so this was not ideal. In Airflow 1.10.10, a generic interface for communicating with secrets providers was added, along with several implementations:

- AWS SSM (Parameter Store)

- GCP Secrets Manager

- HashiCorp Vault

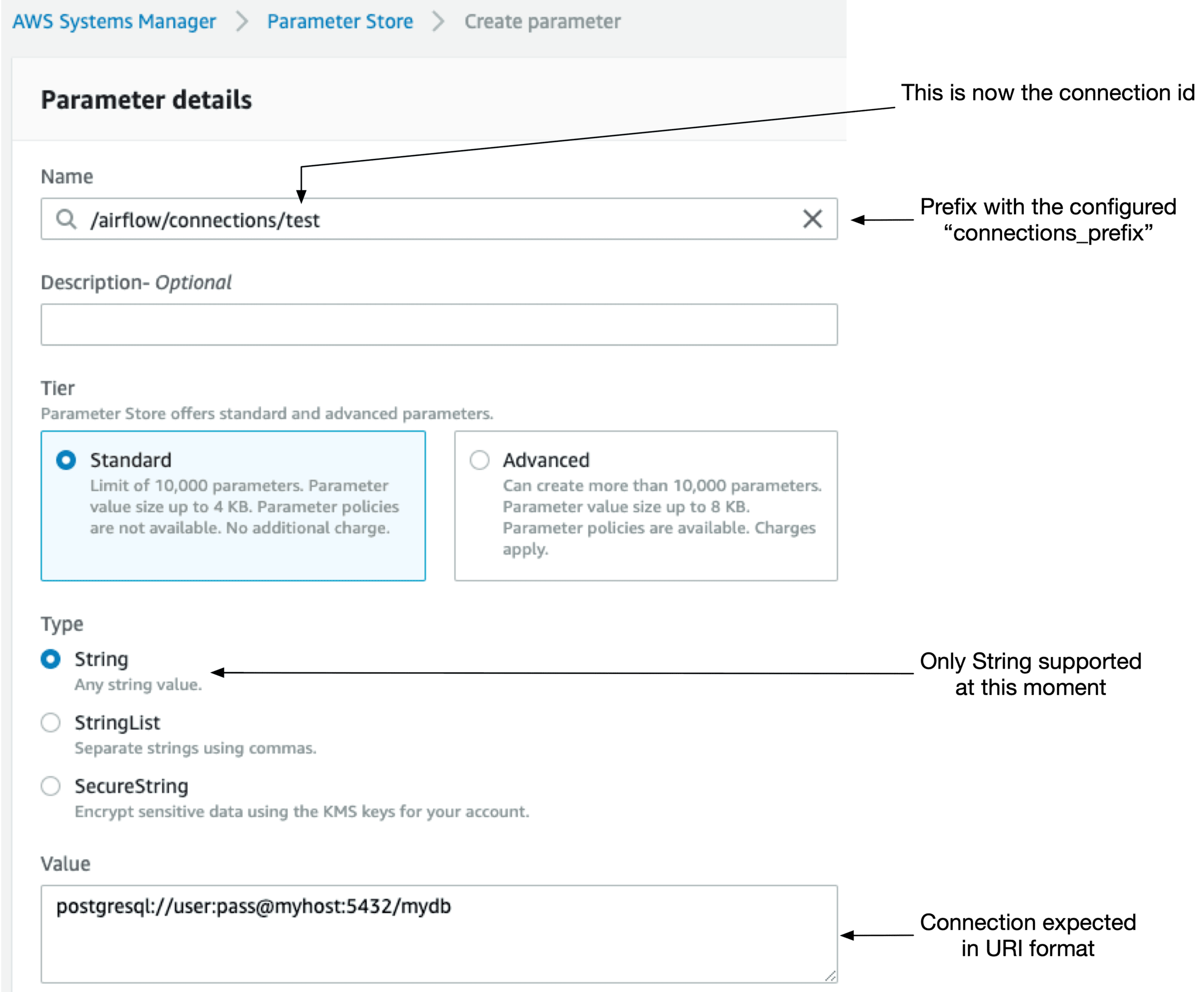

To configure a secrets backend, configure the secrets section in the Airflow config. Take for example AWS SSM:

export AIRFLOW__SECRETS__BACKEND=airflow.contrib.secrets.aws_systems_manager.SystemsManagerParameterStoreBackend

export AIRFLOW__SECRETS__BACKEND_KWARGS='{"connections_prefix": "/airflow/connections", "variables_prefix": "/airflow/variables", "profile_name": "aws_profile_name_in_config"}'

Only a single secrets backend can be configured. The search order is:

- Configured secrets backend

- Environment variables

- Airflow metastore
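The search order can be pictured as a chain of lookups where the first source that knows the connection wins. This is a simplified model with plain dictionaries standing in for the three sources, not Airflow's actual implementation; all names and values are invented for illustration:

```python
from typing import Callable, List, Optional

Lookup = Callable[[str], Optional[str]]


def first_match(conn_id: str, sources: List[Lookup]) -> Optional[str]:
    """Return the value from the first source that has the connection."""
    for lookup in sources:
        value = lookup(conn_id)
        if value is not None:
            return value
    return None


# Stand-ins for the three sources (hypothetical contents).
secrets_backend = {"test": "postgres://user:pw@backendhost:5432/db"}
environment = {
    "test": "postgres://user:pw@envhost:5432/db",
    "only_env": "mysql://user:pw@envhost:3306/db",
}
metastore = {"only_db": "sqlite:///tmp/example.db"}

# Same order as the list above: backend, environment, metastore.
search_order = [secrets_backend.get, environment.get, metastore.get]

print(first_match("test", search_order))      # found in the secrets backend
print(first_match("only_env", search_order))  # falls through to the environment
print(first_match("only_db", search_order))   # falls through to the metastore
```

A consequence of this order is that a connection defined in the secrets backend shadows one with the same id in the environment or the metastore.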

Note: the AWS SSM secrets backend currently only works with unencrypted secrets! Kwargs for e.g. setting the KMS decryption key are not propagated down to the actual get_parameter call to AWS, but I expect this to be fixed soon. While I still think it's a very useful feature, currently the only option (specific to AWS SSM) is to store the secret as an unencrypted string value.
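As for where to store the value: with the prefixes configured above, my understanding is that the backend looks up a secret under the prefix joined to the connection or variable id with a slash, and that a connection's value is a connection URI. The sketch below only illustrates that naming scheme. The function is a stand-in written for this post, not an import from Airflow, and my_var is a made-up variable name:

```python
def build_path(prefix: str, secret_id: str, sep: str = "/") -> str:
    """Join a configured prefix and a secret id into a parameter name.

    Stand-in for illustration; assumes the backend joins with "/".
    """
    return "{}{}{}".format(prefix, sep, secret_id)


# Prefixes taken from the BACKEND_KWARGS example above.
connection_param = build_path("/airflow/connections", "test")
variable_param = build_path("/airflow/variables", "my_var")

print(connection_param)
print(variable_param)
```

Under that assumption, the connection with id "test" would live in a String parameter named /airflow/connections/test.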

The connection can now be requested as usual:

from airflow.hooks.base_hook import BaseHook

myconn = BaseHook.get_connection("test")

print(myconn.host)

# myhost

More details here: Use alternative secrets backend

The webserver is not dependent anymore on the DAG folder

One of the more quirky things when setting up Airflow is the fact that all Airflow processes require access to the DAG folder. When running Airflow on different machines, or in containers, this results in all sorts of creative solutions to make the DAG files available to both the webserver and scheduler.

Several changes were made to persist DAG code in the database, with (one of) the goal(s) to make the webserver read and display metadata only from the database, and not from the DAG files themselves. This should result in a single source of truth for the webserver, and display a consistent state to the user.

The complete script in which a DAG is defined can now be stored in the metastore, in a table named DagCode. To do so, configure Airflow with AIRFLOW__CORE__STORE_DAG_CODE=True (plus AIRFLOW__CORE__STORE_SERIALIZED_DAGS=True to serialize the DAGs as JSON in the database). With both set, the webserver reads everything from the metastore and no longer requires access to the DAGs folder.

There are two notes to mention:

- There is a bug which doesn’t update the DagCode value if the change was made within two minutes of the last change. The serialized DAG is updated though, which is reflected in all views except the Code view. The bug is already fixed and likely to be included in the next release: Fix non updating DAG code by checking against last modification time #8266.

- In Airflow’s configuration, the AIRFLOW__CORE__STORE_DAG_CODE value is interpolated from AIRFLOW__CORE__STORE_SERIALIZED_DAGS. However, the interpolation fails when using environment variables, so make sure to set AIRFLOW__CORE__STORE_DAG_CODE explicitly. Bug report filed: Config value interpolation not working when set as environment variable #8255.
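The interpolation issue can be reproduced in miniature with Python's configparser. This is a simplified model of the behaviour described in the bug report, not Airflow's actual config code: I am assuming the default config defines store_dag_code as %(store_serialized_dags)s, and the get_option helper is hypothetical:

```python
import configparser

# Simplified stand-in for the relevant part of the default config (assumption).
DEFAULT_CFG = """
[core]
store_serialized_dags = False
store_dag_code = %(store_serialized_dags)s
"""


def get_option(parser, env, section, key):
    """Prefer an environment override, else fall back to the config file."""
    env_name = "AIRFLOW__{}__{}".format(section.upper(), key.upper())
    if env_name in env:
        return env[env_name]
    # Interpolation only sees values inside the file, not the environment.
    return parser.get(section, key)


parser = configparser.ConfigParser()
parser.read_string(DEFAULT_CFG)

# Only the serialization toggle is overridden via the environment.
env = {"AIRFLOW__CORE__STORE_SERIALIZED_DAGS": "True"}

print(get_option(parser, env, "core", "store_serialized_dags"))  # True
print(get_option(parser, env, "core", "store_dag_code"))         # False
```

In this model the second lookup still resolves against the value in the file, which is why setting both variables explicitly sidesteps the problem.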

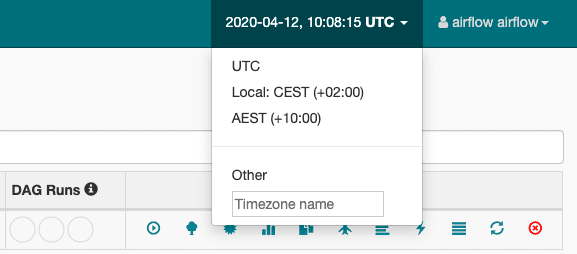

A timezone picker to display your desired timezone

The webserver UI now features a timezone picker, for displaying all times in the UI in your preferred timezone:

It shortlists three timezones:

- UTC time

- Your machine’s local timezone

- A selected timezone

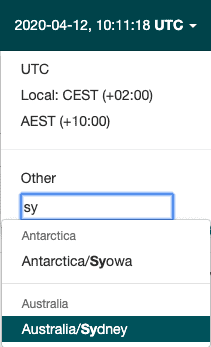

Any desired timezone can be chosen in the "Other" field by typing your desired city name:

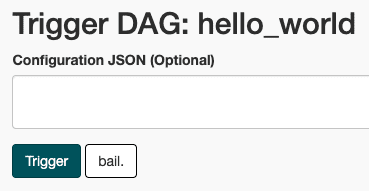

When triggering a DagRun from the UI, a JSON config can now be provided

The last noteworthy feature is the possibility to add a JSON config when manually triggering a DagRun:

This JSON will be available in the task instance context, via dag_run.conf, which can be a convenient way to create parameterized DAGs.
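As a rough sketch of what reading that config looks like inside a task: the callable below pulls a value out of dag_run.conf and falls back to a default when no config was given. The task name, the "name" key, and the stub context are all invented for illustration. In a real DAG the callable would be passed to a PythonOperator with provide_context=True, and Airflow would supply the context:

```python
from types import SimpleNamespace


def greet(**context):
    """Task callable: read parameters from the triggering DagRun's config."""
    # conf may be None when the run was triggered without a JSON config.
    conf = context["dag_run"].conf or {}
    name = conf.get("name", "world")
    message = "Hello, {}!".format(name)
    print(message)
    return message


# Outside Airflow, a stub object with a .conf attribute is enough to try it.
# This mimics triggering the DAG from the UI with {"name": "Airflow"}.
fake_context = {"dag_run": SimpleNamespace(conf={"name": "Airflow"})}
greet(**fake_context)
```

Providing a default for each key keeps scheduled runs, which carry no config, working alongside manually triggered ones.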

Those are the highlights of this release. Feel free to contact us about anything Airflow!