Apache Airflow 1.10.2 has been released and can be installed with pip install apache-airflow==1.10.2. It is not yet compatible with Python 3.7, so the latest supported Python version is still 3.6. Setting SLUGIFY_USES_TEXT_UNIDECODE=yes to avoid the GPL-licensed unidecode dependency is also still required, although that is being worked on and will hopefully become unnecessary in the near future.

Over 250 PRs are included in this release and I’d like to point out several major changes:

- “Releasing” of sensors with reschedule mode (PR)

- Generic PythonSensor (PR)

- Various performance optimisations

- RBAC UI with themed support (PR)

Read the full changelog here: airflow-1-10-2-2019-01-19.

Apache Airflow is now an Apache TLP

Besides the new release, it is worth mentioning that the Apache Airflow project recently graduated from the Apache Incubator to a TLP (Top-Level Project)! The project has gained a lot of traction over the last few years and lots of PRs are being added every day.

Releasing of sensors with reschedule mode

This is a very important new feature for anyone running Airflow with sensors. Enable it by setting mode="reschedule" in your sensor arguments. Airflow workers (the processes executing tasks) have a maximum parallelism, i.e. a maximum number of tasks that can be executed in parallel. By default, sensors run in poke mode, which blocks a worker slot while "poking" every X seconds until a given condition is true, after which the downstream tasks continue. If you have more sensors than available worker slots, this can result in a "sensor deadlock" where all worker slots are occupied by sensors.

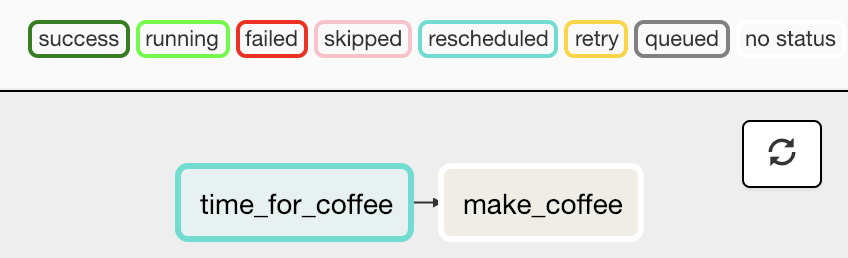

The new sensor reschedule mode "releases" the worker slot by rescheduling the task, so the slot is no longer blocked between pokes. This comes with a new up_for_reschedule state.
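To make the difference between the two modes concrete, here is a small toy model in plain Python. It is not Airflow code: the function name, the single "producer" task the sensors wait for, and the tick-based loop are all assumptions made for illustration. It only shows why poking sensors can starve other tasks of worker slots, while rescheduled sensors cannot.

```python
def first_tick_condition_seen(num_slots, num_sensors, mode, max_ticks=10):
    """Toy model, not Airflow code.

    All sensors wait for one hypothetical 'producer' task that needs a
    worker slot for a single tick. Returns the tick at which the sensors
    first see their condition fulfilled, or None if that never happens
    within max_ticks (a "sensor deadlock").
    """
    producer_done = False
    for tick in range(1, max_ticks + 1):
        # Sensors check their condition once at the start of every tick.
        if producer_done:
            return tick
        if mode == "poke":
            # Poking sensors sleep inside their slot until the next check.
            free_slots = num_slots - min(num_sensors, num_slots)
        elif mode == "reschedule":
            # Rescheduled sensors hand the slot back between checks.
            free_slots = num_slots
        else:
            raise ValueError("mode must be 'poke' or 'reschedule'")
        # The producer runs as soon as any slot is free.
        if free_slots > 0:
            producer_done = True
    return None


# Four sensors on four slots: poke mode never lets the producer run.
print(first_tick_condition_seen(4, 4, "poke"))
print(first_tick_condition_seen(4, 4, "reschedule"))
```

In this model, poke mode only makes progress when there are fewer sensors than slots, whereas reschedule mode makes progress regardless of the number of sensors.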

Generic PythonSensor

A number of sensors for various use cases existed but there was no generic PythonSensor until now. Just like the PythonOperator, it works by passing a python_callable argument which is executed every X seconds and should return True or False. For example:

    from datetime import datetime

    import airflow
    from airflow import DAG
    from airflow.contrib.sensors.python_sensor import PythonSensor
    from airflow.operators.bash_operator import BashOperator

    default_args = {"owner": "godatadriven", "start_date": airflow.utils.dates.days_ago(14)}

    dag = DAG(
        dag_id="make_coffee",
        default_args=default_args,
        schedule_interval="0 0 * * *",
        description="Is it time for coffee?",
    )


    def _time_for_coffee():
        """I drink coffee between 6 and 12"""
        if 6 <= datetime.now().hour < 12:
            return True
        else:
            return False


    # The sensor calls _time_for_coffee until it returns True;
    # mode="reschedule" frees the worker slot between checks.
    time_for_coffee = PythonSensor(
        task_id="time_for_coffee",
        python_callable=_time_for_coffee,
        mode="reschedule",
        dag=dag,
    )

    make_coffee = BashOperator(
        task_id="make_coffee",
        bash_command="echo 'Time for coffee!'",
        dag=dag,
    )

    # make_coffee runs only after the sensor has succeeded.
    time_for_coffee >> make_coffee
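Because the python_callable is an ordinary Python function, it can be exercised without a scheduler. The sketch below is a variation on the callable above, assuming a hypothetical `now` parameter (not part of the original example) so that the time can be injected in a test; the window of 6 up to, but not including, 12 follows the docstring.

```python
from datetime import datetime


def is_time_for_coffee(now=None):
    """Return True between 06:00 and 12:00, False otherwise.

    `now` is a hypothetical hook for testing; when omitted, the
    current local time is used, as in the sensor example.
    """
    current = now if now is not None else datetime.now()
    return 6 <= current.hour < 12


# Check the boundaries with fixed timestamps instead of the wall clock.
print(is_time_for_coffee(datetime(2019, 1, 19, 6, 0)))
print(is_time_for_coffee(datetime(2019, 1, 19, 12, 0)))
```

Returning a strict boolean keeps the contract with the sensor simple: True ends the waiting, False triggers another check after the poke interval.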

Various performance optimisations

Several large performance optimisations were made, e.g.:

- Read DagBag only once during startup (PR)

- Decoupling of the DAG parsing loop from the scheduler loop (PR)

- Multiple indices were added to the database (PR, PR, PR)

- The topological_sort method listing tasks in topological order is much faster (PR)
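For readers unfamiliar with the term, a topological order lists every task after all of its upstream tasks. The sketch below uses Kahn's algorithm on a plain dictionary; it is not Airflow's topological_sort implementation, and the function name, the dictionary layout and the drink_coffee task are assumptions made for illustration.

```python
from collections import deque


def topological_order(downstream):
    """Return task ids so that each task follows all of its upstream tasks.

    `downstream` maps a task id to the list of task ids that depend on it.
    Illustrative only; this is not how Airflow implements topological_sort.
    """
    # Count the upstream dependencies (incoming edges) of every task.
    indegree = {task: 0 for task in downstream}
    for children in downstream.values():
        for child in children:
            indegree[child] = indegree.get(child, 0) + 1

    # Tasks without upstream dependencies can be listed immediately.
    ready = deque(sorted(task for task, deg in indegree.items() if deg == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        # Listing a task satisfies one dependency of each downstream task.
        for child in downstream.get(task, ()):
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    if len(order) != len(indegree):
        raise ValueError("cycle detected; a DAG must be acyclic")
    return order


toy_dag = {
    "time_for_coffee": ["make_coffee"],
    "make_coffee": ["drink_coffee"],  # drink_coffee is a hypothetical task
    "drink_coffee": [],
}
print(topological_order(toy_dag))
```

Each task and each dependency is visited once, so the running time grows linearly with the size of the graph.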

RBAC UI with themes

Lastly, the RBAC UI now supports themes through FAB (Flask-AppBuilder). The available themes are therefore the ones FAB supports, and of course you can add your own theme.

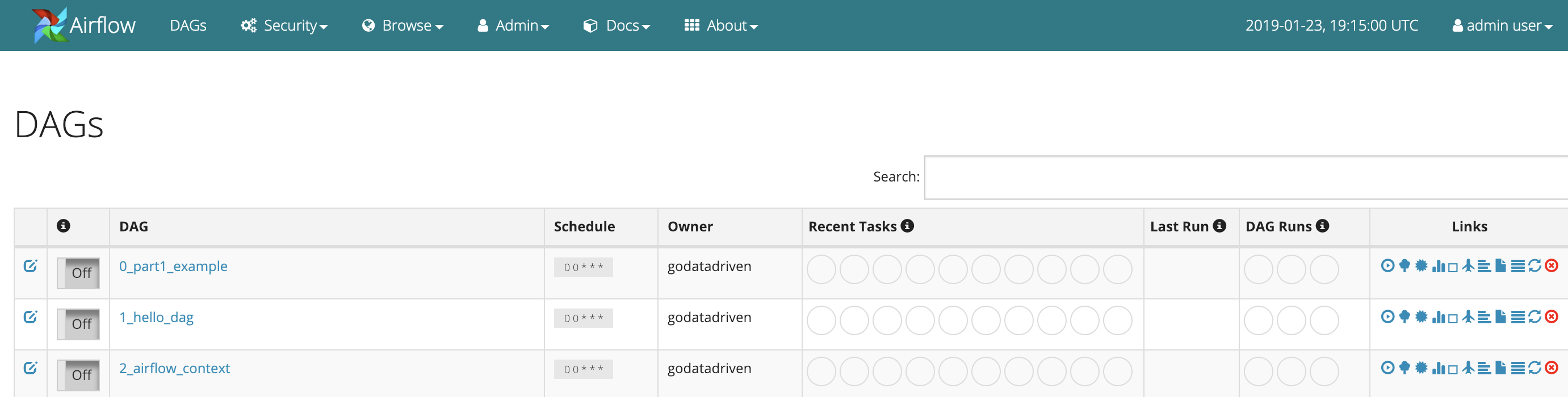

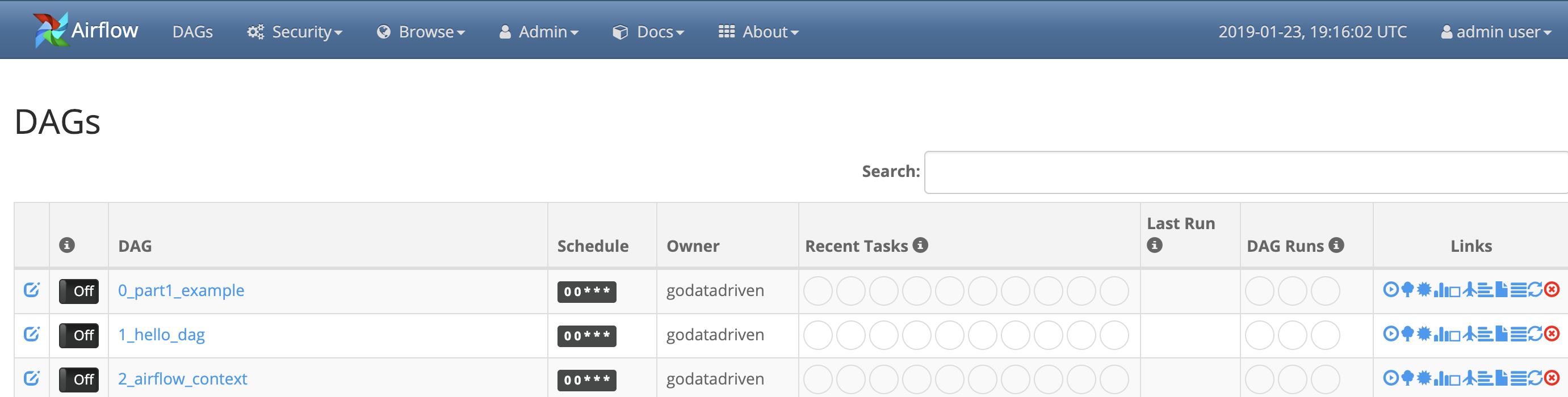

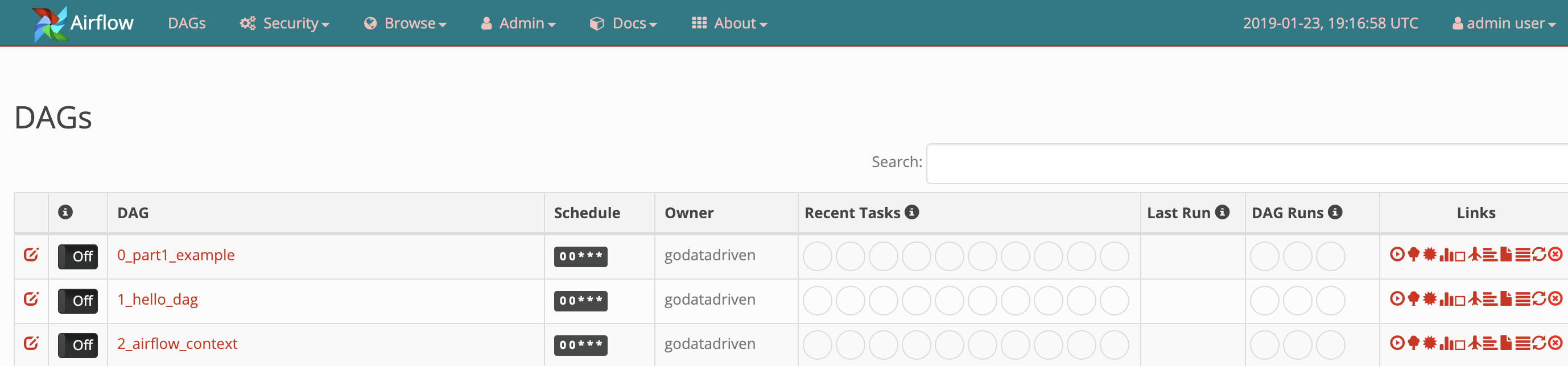

To use it, set APP_THEME = "[theme-name].css" (the last line in webserver_config.py), choosing one of the themes listed here.

For example:

- Yeti

- Spacelab

- Simplex

Note that the navbar_color configuration option interferes with the theme, so set it accordingly.
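As a minimal sketch, the relevant line in webserver_config.py could look as follows for the Yeti theme. The lowercase file name "yeti.css" is an assumption based on FAB's naming of its bundled themes; check the theme list for the exact name of the theme you want.

```python
# webserver_config.py (excerpt)
# Assumption: FAB ships the Yeti theme under the file name "yeti.css".
APP_THEME = "yeti.css"
```

The rest of webserver_config.py stays unchanged; only this one setting selects the theme.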

Those are the highlights of this release. Feel free to contact us about anything Airflow!

We offer an Airflow course to teach you the internals, terminology, and best practices of working with Airflow, with hands-on experience in writing and maintaining data pipelines.