The following is a review of Fundamentals of Data Engineering by Joe Reis and Matt Housley (O’Reilly, June 2022), along with some lessons I took away from it. The authors state that the book’s primary audience is technical people and, secondarily, business people who work with technical people. Described that way, the audience is very broad, but I strongly agree: this book is as useful for a project manager or any other non-technical role as it is for a computer science student or a data engineer.

This post will briefly introduce some insights I wish I had known when I started my path as a Data Engineer four years ago. If you find what I am about to share interesting, get a copy of this magnificent book.

I will cover the first and third parts of the book. The second part, “The Data Engineering Lifecycle in Depth” (210 of the book’s 406 pages), targets technical people. Still, I found that the authors explain its technical content in a way that is friendly to non-technical readers curious about the data engineering lifecycle.

Data Engineering Described

Not long ago, a data engineer was expected to know how to use a handful of powerful, monolithic technologies to create a data solution. Their work was largely devoted to cluster administration and maintenance, managing overhead, and writing pipeline and transformation jobs, among other tasks. The following quotes date back to those years:

Data Engineers set up and operate the organization’s data infrastructure, preparing it for further analysis by data analysts and scientists. – AltexSoft

All the data processing is done in Big Data frameworks like MapReduce, Spark and Flink. While SQL is used, the primary processing is done with programming languages like Java, Scala and Python. – Jesse Anderson

The data engineering field could be thought of as a superset of business intelligence and data warehousing that brings more elements from software engineering. – Maxime Beauchemin

Nowadays, data engineers focus on balancing the simplest, most cost-effective, best-of-breed services that deliver value to the business. They are also expected to create agile data architectures that evolve as new trends emerge. The skill set of a data engineer encompasses the “undercurrents” of data engineering:

- Security

- Data Management

- DataOps

- Data Architecture

- Software Engineering

Finally, a data engineer juggles many complex moving parts and must constantly optimize along the axes of cost, agility, scalability, simplicity, reuse, and interoperability.

Data engineering has become a holistic practice. The best data engineers view their responsibilities through business and technical lenses. Building architectures that optimize performance and cost at a high level is no longer enough. A data engineer should:

- Know how to communicate with non-technical and technical people.

- Understand how to scope and gather business and product requirements.

- Understand the cultural foundations of Agile, DevOps, and DataOps.

The Data Engineering Lifecycle and Its Undercurrents

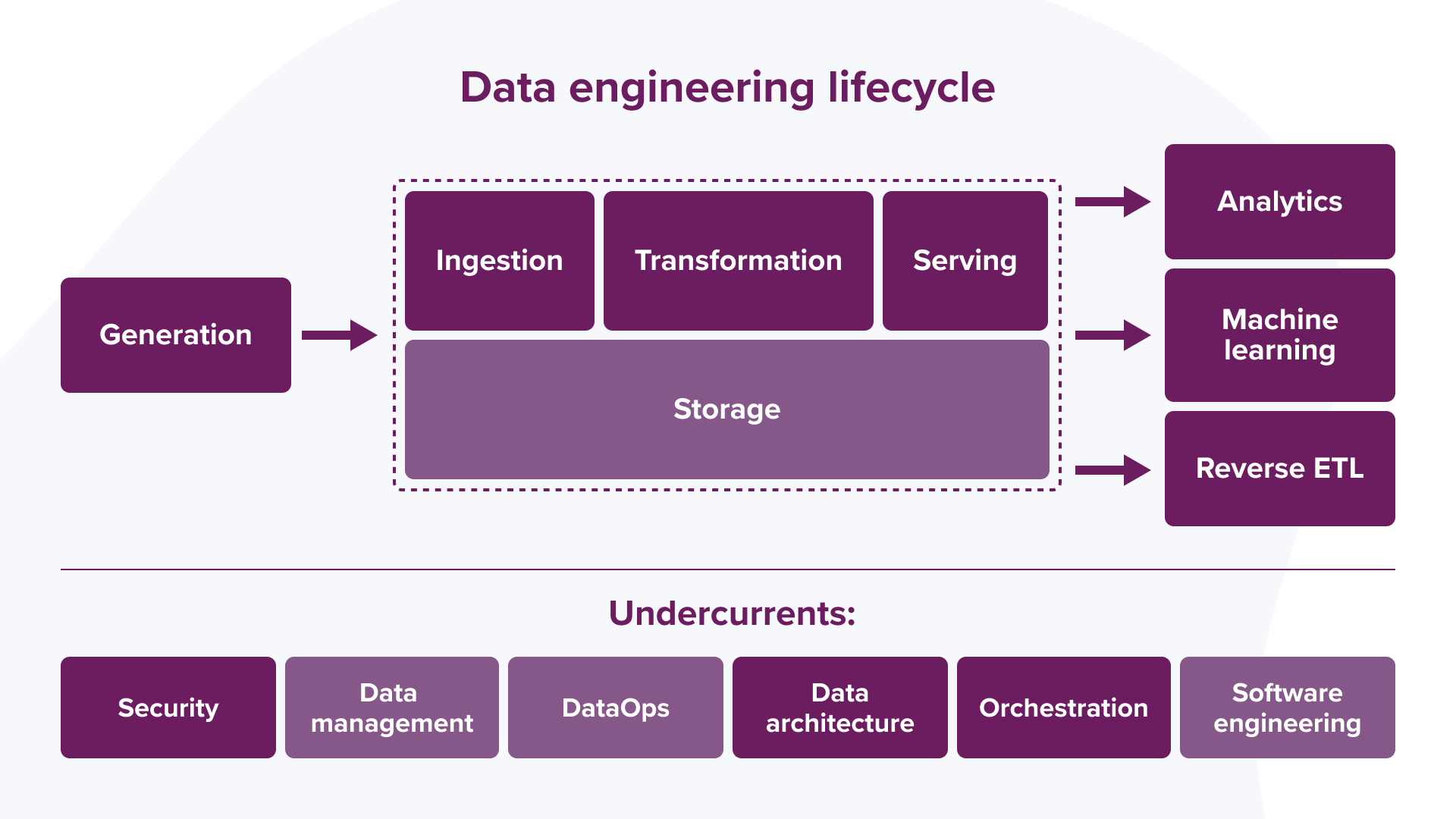

Whereas the full data lifecycle encompasses data across its entire lifespan, the data engineering lifecycle focuses on the stages a data engineer controls: the stages that turn raw data into a useful end product. The authors divide the data engineering lifecycle into five stages:

- Generation

- Storage

- Ingestion

- Transformation

- Serving Data

The field is moving up the value chain, incorporating traditional enterprise practices like data management and cost optimization and new practices like DataOps. All stages of the data engineering lifecycle must take the following undercurrents into account to function properly.

| Security | Data Management | DataOps | Data Architecture | Orchestration | Software Engineering |
|---|---|---|---|---|---|
| Access control to data and systems | Data Discoverability, Data Definitions, Data Accountability, Data Modeling, Data Integrity | Observability, Monitoring, Incident reporting | Analyze trade-offs, Design for agility, Add value to the business | Coordinate workflows, Schedule jobs, Manage tasks | Programming and coding skills, Software design patterns, Testing and Debugging |

Security

Security must be top of mind for data engineers. They must understand data access and exercise the principle of least privilege: give users only the access they need to do their jobs today, nothing more. People and organizational structure are always the biggest security vulnerabilities in any company. Data security is also about timing: providing data access only to the people and systems that need it, and only for the duration necessary to perform their work.
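To make least privilege and time-bound access concrete, here is a minimal sketch of a deny-by-default access check in Python. The `Grant` record, the `is_allowed` function, and the principal and resource names are hypothetical; real systems express the same idea through their own IAM policies and credential expiration settings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """One narrowly scoped permission that expires on its own (hypothetical model)."""
    principal: str
    resource: str
    action: str
    expires_at: datetime


def is_allowed(grants, principal, resource, action, now=None):
    """Deny by default: allow only if an exact, unexpired grant exists."""
    if now is None:
        now = datetime.now(timezone.utc)
    return any(
        g.principal == principal
        and g.resource == resource
        and g.action == action
        and now < g.expires_at
        for g in grants
    )


# Example: an analyst may read the sales table for one working day, nothing more.
start = datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc)
grants = [Grant("analyst", "warehouse.sales", "read", start + timedelta(hours=8))]

print(is_allowed(grants, "analyst", "warehouse.sales", "read", now=start))   # True
print(is_allowed(grants, "analyst", "warehouse.sales", "write", now=start))  # False
print(is_allowed(grants, "analyst", "warehouse.sales", "read",
                 now=start + timedelta(hours=9)))                            # False
```

Nothing is allowed unless a grant says so, and every grant carries an expiry, so forgotten access disappears on its own instead of lingering.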

Data Management

Data Management encompasses a set of best practices that data engineers will use to manage data, both technically and strategically. Without a framework for managing data, data engineers are simply technicians operating in a vacuum. Data engineers need a broader perspective of data’s utility across the organization, from the source systems to the C-suite and everywhere in between. Data Management has quite a few facets, including the following:

- Data governance, including discoverability and accountability

- Data modeling and design

- Data lineage

- Storage and operations

- Data integration and interoperability

- Data lifecycle management

- Data systems for advanced analytics and ML

- Ethics and privacy

DataOps

DataOps maps the best practices of Agile methodology, DevOps, and statistical process control to data. DataOps aims to improve the release and quality of data products. It borrows much from lean manufacturing and supply chain management, mixing people, processes, and technology to reduce time to value.

According to DataKitchen, DataOps is a collection of technical practices, workflows, cultural norms, and architectural patterns that enable:

- Rapid innovation and experimentation delivering new insights to customers with increasing velocity

- Extremely high data quality and very low error rates

- Collaboration across complex arrays of people, technology and environments

- Clear measurement, monitoring, and transparency of results
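The goals of very low error rates and clear measurement translate naturally into automated quality gates inside a pipeline. The sketch below is a minimal Python illustration, assuming records arrive as dictionaries; the rule names, `measure`, `quality_gate`, and the 0.1% default threshold are hypothetical. Dedicated data-quality tools typically fill this role in production.

```python
from typing import Callable

# A rule returns True when a record passes.
Rule = Callable[[dict], bool]

# Hypothetical rules for an orders feed.
RULES: dict[str, Rule] = {
    "order_id_present": lambda r: r.get("order_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
}


def measure(records: list[dict], rules: dict[str, Rule]) -> tuple[float, dict[str, int]]:
    """Return the share of records failing at least one rule, plus failure counts per rule."""
    failures = {name: 0 for name in rules}
    bad = 0
    for record in records:
        failed = [name for name, rule in rules.items() if not rule(record)]
        for name in failed:
            failures[name] += 1
        bad += bool(failed)
    rate = bad / len(records) if records else 0.0
    return rate, failures


def quality_gate(records: list[dict], rules: dict[str, Rule], max_error_rate: float = 0.001) -> float:
    """Stop the pipeline (raise) instead of publishing a batch whose error rate is too high."""
    rate, failures = measure(records, rules)
    if rate > max_error_rate:
        raise ValueError(f"error rate {rate:.2%} exceeds {max_error_rate:.2%}: {failures}")
    return rate


batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": -5.0},  # fails amount_non_negative
    {"order_id": 3, "amount": 7.5},
    {"order_id": 4, "amount": 3.0},
]
print(measure(batch, RULES))  # (0.25, {'order_id_present': 0, 'amount_non_negative': 1})
```

Failing loudly before publishing, and reporting which rule failed, covers both the quality and the transparency items in the list.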

Data Architecture

Data Architecture reflects the current and future state of data systems that support an organization’s long-term data needs and strategy. A data engineer should understand the needs of the business and translate them into new ways to capture and serve data while balancing cost and simplicity. This means knowing the trade-offs with design patterns, technologies, and tools in source systems, ingestion, storage, transformation, and serving data.

Software Engineering

Software Engineering has always been a central skill for data engineers. However, much of the low-level implementation work of the early days (circa 2000–2010) has been abstracted away, and this abstraction continues today. Even so, core data processing code still needs to be written.

Data engineers must be highly proficient and productive in frameworks and languages like Spark, SQL, or Beam. It is also imperative that a data engineer understands proper code-testing methodologies, such as unit, regression, integration, end-to-end, load, and smoke testing.
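As an illustration of the first item on that list, here is a minimal unit test for a small transformation, using Python’s built-in `unittest` module. The `deduplicate_latest` function and its row format are hypothetical; the point is that transformation logic is plain code and can be tested like any other code.

```python
import unittest


def deduplicate_latest(rows):
    """Keep only the most recent version of each row, keyed by "id" (hypothetical transformation)."""
    latest = {}
    for row in rows:
        current = latest.get(row["id"])
        if current is None or row["updated_at"] > current["updated_at"]:
            latest[row["id"]] = row
    return sorted(latest.values(), key=lambda r: r["id"])


class DeduplicateLatestTest(unittest.TestCase):
    def test_keeps_most_recent_version(self):
        rows = [
            {"id": 1, "updated_at": 1, "v": "old"},
            {"id": 1, "updated_at": 2, "v": "new"},
            {"id": 2, "updated_at": 1, "v": "only"},
        ]
        self.assertEqual([r["v"] for r in deduplicate_latest(rows)], ["new", "only"])

    def test_order_of_input_does_not_matter(self):
        rows = [
            {"id": 1, "updated_at": 2, "v": "new"},
            {"id": 1, "updated_at": 1, "v": "old"},
        ]
        self.assertEqual([r["v"] for r in deduplicate_latest(rows)], ["new"])

    def test_empty_input(self):
        self.assertEqual(deduplicate_latest([]), [])
```

Saved as a module, it runs with `python -m unittest <module_name>`. The same pattern extends to regression testing: add a test case for every bug you fix.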

When data engineers have to manage their infrastructure in a cloud environment, they increasingly do so through IaC (Infrastructure as Code) frameworks rather than by manually spinning up instances and installing software. In practice, regardless of which high-level tools they adopt, data engineers will run into corner cases throughout the data engineering lifecycle that require them to solve problems outside their chosen tools’ boundaries and write custom code.

Principles of Good Data Architecture

Successful data engineering is built upon rock-solid architecture.

Good data architecture serves business requirements with a common, widely reusable set of building blocks while maintaining flexibility and making appropriate trade-offs. Bad architecture is authoritarian and tries to cram a bunch of one-size-fits-all decisions into a big ball of mud. – Joe Reis and Matt Housley, Fundamentals of Data Engineering

Good data architecture is flexible and easily maintainable. It is a living, breathing thing. It’s never finished. A good data architecture flourishes when there is an underlying enterprise architecture.

Enterprise architecture is the design of systems to support change in the enterprise, achieved by flexible and reversible decisions reached through careful evaluation of trade-offs. – Joe Reis and Matt Housley, Fundamentals of Data Engineering

Principles of data engineering architecture:

- Choose common components wisely.

- Plan for failure.

- Architect for scalability.

- Architecture is leadership.

- Always be architecting.

- Build loosely coupled systems.

- Make reversible decisions.

- Prioritize security.

- Embrace FinOps.

Choose Common Components Wisely

Common components should be accessible to everyone with an appropriate use case, and teams are encouraged to rely on common components already in use rather than reinventing the wheel. Common components must support robust permissions and security to enable sharing of assets among teams while preventing unauthorized access.

Architects should avoid decisions that will hamper the productivity of engineers working on domain-specific problems by forcing them into one-size-fits-all technology solutions.

Plan for Failure

Everything fails, all the time. – Werner Vogels, CTO of Amazon Web Services

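Some areas to keep an eye on while planning for failure:

- Availability: the percentage of time a service or component is in an operable state.

- Reliability: the probability that a system meets defined standards in performing its intended function during a specified interval.

- Recovery Time Objective (RTO): the maximum acceptable time for a service or system outage.

- Recovery Point Objective (RPO): the acceptable state after recovery; in data systems, how much data may be lost during an outage.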
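Availability targets, RTO, and RPO are ultimately numbers you can check a design against. The Python sketch below converts an availability target into a yearly downtime budget and checks a backup schedule and a recovery runbook against an RPO and an RTO. The function names are hypothetical, and it assumes simple periodic backups with no continuous replication.

```python
from datetime import timedelta


def downtime_budget(availability: float, period: timedelta = timedelta(days=365)) -> timedelta:
    """Maximum downtime allowed by an availability target, e.g. 0.999 ("three nines")."""
    return period * (1 - availability)


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """With periodic backups, a failure just before the next backup loses up to one full interval."""
    return backup_interval <= rpo


def meets_rto(detection_time: timedelta, restore_time: timedelta, rto: timedelta) -> bool:
    """Recovery time includes noticing the outage, not just restoring the service."""
    return detection_time + restore_time <= rto


print(downtime_budget(0.999))  # about 8.76 hours per year
print(meets_rpo(backup_interval=timedelta(hours=24), rpo=timedelta(hours=1)))  # False
print(meets_rto(timedelta(minutes=15), timedelta(minutes=30), rto=timedelta(hours=1)))  # True
```

A nightly backup cannot satisfy a one-hour RPO no matter how fast the restore is; closing that gap requires more frequent backups or replication.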

Architect for Scalability

Scalability in data systems encompasses two main capabilities. First, scalable systems can scale up to handle significant quantities of data. Second, they can scale down once the load spike ends, so you stop paying for idle capacity. If your company grows much faster than anticipated, that growth should also bring more resources with which to re-architect for scalability.

Architecture Is Leadership

Data architects should be highly technically competent but delegate most individual contributor work to others. Strong leadership skills combined with high technical competence are rare and extremely valuable. The best data architects take this duality seriously.

As a data engineer, you should practice architecture leadership and seek mentorship from architects. Eventually, you may well occupy the architect role yourself.

Always Be Architecting

An architect’s job is to develop deep knowledge of the baseline architecture (current state), develop a target architecture, and map out the sequencing plan to determine priorities and order of architecture changes.

Build Loosely Coupled Systems

When the architecture of the system is designed to enable teams to test, deploy, and change systems without dependencies on other teams, teams require little communication to get work done. In other words, both the architecture and the teams are loosely coupled. – Google DevOps tech architecture guide

For software architecture, a loosely coupled system has the following properties:

- Systems are broken into small components.

- These systems interface with other services through abstraction layers, such as a messaging bus or an API.

- Internal changes to a system component do not require changes in other parts.

- Each component is updated separately as changes and improvements are made.
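To see why an abstraction layer such as a messaging bus loosens coupling, consider the minimal in-process sketch below. `MessageBus` is a hypothetical stand-in for a real broker, and the topic and handler names are illustrative. The producer publishes to a topic name and never references its consumers, so consumers can be added, changed, or removed without touching it.

```python
from collections import defaultdict


class MessageBus:
    """Minimal in-process publish/subscribe bus (hypothetical stand-in for a real broker)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # The publisher does not know, or care, who is listening.
        for handler in self._subscribers[topic]:
            handler(message)


def ingest_order(bus, order):
    """Producer: depends only on the topic name and the message shape."""
    bus.publish("orders", order)


# Two independent consumers; either can change internally, or be removed,
# without any change to ingest_order.
revenue = []
audit_log = []

bus = MessageBus()
bus.subscribe("orders", lambda order: revenue.append(order["amount"]))
bus.subscribe("orders", lambda order: audit_log.append(f"order {order['id']} received"))

ingest_order(bus, {"id": 1, "amount": 42.0})
print(revenue, audit_log)  # [42.0] ['order 1 received']
```

In production the bus would be a durable broker with its own delivery guarantees, but the coupling argument is the same: the message schema becomes the contract between teams.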

Make Reversible Decisions

The data landscape is changing rapidly. Today’s hot technology is tomorrow’s afterthought.

One of an architect’s most important tasks is to remove architecture by finding ways to eliminate irreversibility in software designs. – Martin Fowler

Prioritize Security

All data engineers should consider themselves security engineers. Those who handle data must assume that they are ultimately responsible for securing it. Two key ideas, among others, are zero-trust security and the shared responsibility model.

Traditional architectures place a lot of faith in perimeter security […]. Unfortunately, this approach has always been vulnerable to insider attacks, as well as external threats such as spear phishing. – Google Cloud’s Five Principles

Embrace FinOps

FinOps is an evolving cloud financial management discipline and cultural practice that enables organizations to get maximum business value by helping engineering, finance, technology, and business teams to collaborate on data-driven spending decisions. – The FinOps Foundation

Types of Data Architecture

Lambda Architecture (2011)

In a Lambda architecture, the source system is ideally immutable and append-only, sending data to two destinations for processing: stream and batch. Stream processing aims to serve the data with the lowest possible latency in a “speed” layer, usually a NoSQL database. In the batch layer, data is processed and transformed in a system such as a data warehouse, creating precomputed and aggregated data views. The serving layer provides a combined view by aggregating query results from the two layers.
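The three layers can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: events are `(key, value)` pairs, the batch layer recomputes totals from the full history, the speed layer only aggregates events that arrived since the last batch run, and the serving layer adds the two. All names are hypothetical.

```python
def batch_view(history):
    """Batch layer: recompute totals per key from the full, immutable history."""
    totals = {}
    for key, value in history:
        totals[key] = totals.get(key, 0) + value
    return totals


class SpeedLayer:
    """Speed layer: incrementally aggregate events that arrived after the last batch run."""

    def __init__(self):
        self.recent = {}

    def on_event(self, key, value):
        self.recent[key] = self.recent.get(key, 0) + value

    def reset(self):
        # Called once the next batch run has absorbed these events.
        self.recent.clear()


def serve(key, batch, speed):
    """Serving layer: merge precomputed batch results with low-latency recent results."""
    return batch.get(key, 0) + speed.recent.get(key, 0)


history = [("clicks", 3), ("views", 10), ("clicks", 2)]
batch = batch_view(history)
speed = SpeedLayer()
speed.on_event("clicks", 4)           # arrives after the batch run
print(serve("clicks", batch, speed))  # 9
```

Notice that the same aggregation logic lives in two places (`batch_view` and `SpeedLayer.on_event`). Keeping those two code paths consistent is the main operational burden of Lambda and the motivation for the Kappa architecture.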

Kappa Architecture (2014)

The Kappa Architecture was proposed as a response to the shortcomings of the Lambda architecture. The central thesis is to stream process all the data. Real-time and batch processing can be applied seamlessly to the same data by reading the live event stream directly and replaying large chunks of data for batch processing.
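Here is a minimal Python sketch of the Kappa idea, under the assumption that an in-memory list can stand in for a durable, replayable log such as a Kafka topic. `EventLog`, `process`, and `consume` are hypothetical names. The key point is that there is only one processing function: live processing reads new offsets, and batch reprocessing replays the log from the beginning.

```python
class EventLog:
    """Append-only, replayable log (a hypothetical stand-in for a Kafka topic)."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset):
        """Yield (position, event) pairs starting at `offset`."""
        for position in range(offset, len(self._events)):
            yield position, self._events[position]


def process(state, event):
    """The single piece of business logic, shared by live and replayed processing."""
    key, value = event
    state[key] = state.get(key, 0) + value


def consume(log, state, offset=0):
    """Apply `process` to every event from `offset` onward; return the next offset to read."""
    for position, event in log.read_from(offset):
        process(state, event)
        offset = position + 1
    return offset


log = EventLog()
for event in [("clicks", 3), ("views", 10)]:
    log.append(event)

live_state = {}
next_offset = consume(log, live_state)               # catch up on everything so far
log.append(("clicks", 2))                            # a new event arrives
next_offset = consume(log, live_state, next_offset)  # process only the new event

# "Batch" is just a replay of the same log from offset 0 with the same code.
replayed_state = {}
consume(log, replayed_state)
print(live_state == replayed_state, live_state)  # True {'clicks': 5, 'views': 10}
```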

The Dataflow Model (2015)

The core idea of the Dataflow Model is to view all data as events and to perform aggregations over various types of windows. Ongoing real-time event streams are unbounded data; data batches are simply bounded event streams. Real-time and batch processing happen in the same system using nearly identical code.
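The following Python sketch shows that idea in its simplest form: a fixed-size (tumbling) window aggregation that runs unchanged on a bounded list or an unbounded generator. It is a deliberate simplification of the Dataflow Model that assumes events arrive in timestamp order; real implementations such as Apache Beam add watermarks and triggers to handle late and out-of-order data. `windowed_sums` is a hypothetical name.

```python
import itertools


def windowed_sums(events, size):
    """Yield (window_start, total) for fixed-size event-time windows.

    Simplifying assumption: timestamps are non-decreasing, so a window can be
    closed as soon as an event from a later window arrives.
    """
    current, total = None, 0
    for timestamp, value in events:
        start = timestamp - timestamp % size
        if current is not None and start != current:
            yield current, total  # the previous window is complete
            total = 0
        current = start
        total += value
    if current is not None:
        yield current, total  # bounded input: end of data closes the last window


# Bounded data (a batch): a plain list.
batch = [(0, 1), (30, 2), (65, 4), (130, 1)]
print(list(windowed_sums(batch, size=60)))  # [(0, 3), (60, 4), (120, 1)]

# Unbounded data (a stream): an endless generator, one event every 10 seconds.
stream = ((t, 1) for t in itertools.count(0, 10))
print(list(itertools.islice(windowed_sums(stream, size=60), 2)))  # [(0, 6), (60, 6)]
```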

IoT (1990)

The Internet of Things is a distributed collection of devices. While the concept of IoT devices dates back at least a few decades, the smartphone revolution virtually created a massive IoT swarm overnight. The IoT has evolved from a futuristic fantasy to a massive data engineering domain.

Data Mesh (2019)

The data mesh attempts to invert the challenges of centralized data architecture, taking the concepts of domain-driven design and applying them to data architecture. Instead of flowing the data from domains into a centrally owned data lake or platform, domains need to host and serve their domain datasets in an easily consumable way.

Choosing Technologies

The present is possibly the most confusing time in history for evaluating and selecting technologies. Choosing technologies means balancing use case, cost, build versus buy, and modularization. Always approach technology the same way as architecture: assess trade-offs and aim for reversible decisions.

Security and Privacy

Security is a key ingredient for privacy. Privacy has long been critical to trust in the corporate information technology space; engineers directly or indirectly handle data related to people’s private lives. Increasingly, privacy is a matter of significant legal importance. GDPR was passed in the European Union in the mid-2010s. Several US privacy laws have already passed, and more are likely to follow. The penalties for violating these laws can be significant, even devastating, to a business.

People

The weakest link in security and privacy is you. Conduct yourself as if you are always a target. Take a defensive posture with everything you do online and offline. Exercise the power of negative thinking and always be paranoid.

Negative thinking allows us to consider disastrous scenarios and act to prevent them. The best way to protect private and sensitive data is to avoid ingesting this data in the first place. Data engineers should collect sensitive data only if there is an actual need downstream.
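One practical way to apply this at ingestion time is an explicit allowlist of the fields downstream consumers actually need, with everything else dropped before it lands anywhere. The Python sketch below assumes records arrive as dictionaries; the field lists and the `minimize` function are hypothetical. Where a join key on a personal identifier is genuinely needed, it keeps only a keyed hash (HMAC-SHA256) instead of the raw value. This is pseudonymization, not anonymization, and it is only as safe as the secret key.

```python
import hashlib
import hmac

# Hypothetical data contract: the only fields downstream consumers asked for.
ALLOWED_FIELDS = {"order_id", "amount", "country"}
# Identifiers needed only as join keys; they are kept as keyed hashes, never raw.
PSEUDONYMIZED_FIELDS = {"customer_email"}


def minimize(record: dict, key: bytes) -> dict:
    """Drop everything not explicitly needed; replace identifiers with HMAC-SHA256 digests."""
    out = {name: value for name, value in record.items() if name in ALLOWED_FIELDS}
    for name in PSEUDONYMIZED_FIELDS:
        if name in record:
            digest = hmac.new(key, str(record[name]).encode("utf-8"), hashlib.sha256)
            out[name + "_hash"] = digest.hexdigest()
    return out


raw = {
    "order_id": 17,
    "amount": 49.9,
    "country": "DE",
    "customer_email": "jane@example.com",
    "date_of_birth": "1990-01-01",  # not needed downstream, so never ingested
}
# In practice the key would come from a secrets manager, not source code.
clean = minimize(raw, key=b"example-secret-key")
print(sorted(clean))  # ['amount', 'country', 'customer_email_hash', 'order_id']
```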

Always exercise caution when someone asks you for your credentials. When in doubt, confirm with other people that the request is legitimate. Trust nobody at face value when asked for credentials.

Processes

Many corporations focus on compliance rather than on thinking through bad scenarios (negative thinking). This is security theater: security done to the letter of compliance without real commitment. Instead, organizations should pursue the spirit of genuine, habitual security.

Active security entails thinking about and researching security threats in a dynamic and changing world. Rather than simulating phishing attacks, you can study successful phishing attacks and think through your organizational security vulnerabilities.

Apply the principle of least privilege to humans and machines: give them only the privileges and data they need to do their jobs, and only for the time needed. Sometimes data must be retained but should be accessed only in an emergency. Put this data behind a break-glass process: users can access it only after an emergency approval process to fix a problem, and access is revoked immediately once the work is done.
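A break-glass process can be modeled as a small state machine: access requires an approver other than the requester, every grant is audited, and access ends either on explicit revocation or automatically after a short window. The Python sketch below is hypothetical (`BreakGlassAccess`, its methods, and the one-hour default are assumptions); real deployments build this from their identity provider’s approval workflows and temporary credentials.

```python
from datetime import datetime, timedelta, timezone


class BreakGlassAccess:
    """Emergency-only access that needs independent approval and expires on its own."""

    def __init__(self, max_duration=timedelta(hours=1)):
        self.max_duration = max_duration
        self._expiry = {}    # user -> time at which access ends
        self.audit_log = []  # every emergency grant is recorded for later review

    def grant(self, user, approver, reason, now):
        if approver == user:
            raise PermissionError("break-glass access requires an independent approver")
        self._expiry[user] = now + self.max_duration
        self.audit_log.append((now.isoformat(), user, approver, reason))

    def revoke(self, user):
        """Call as soon as the emergency work is done."""
        self._expiry.pop(user, None)

    def can_access(self, user, now):
        expiry = self._expiry.get(user)
        return expiry is not None and now < expiry


t0 = datetime(2024, 3, 1, 2, 0, tzinfo=timezone.utc)
vault = BreakGlassAccess()
vault.grant("alice", approver="bob", reason="restore corrupted partition", now=t0)
print(vault.can_access("alice", t0 + timedelta(minutes=20)))  # True
vault.revoke("alice")
print(vault.can_access("alice", t0 + timedelta(minutes=25)))  # False
```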

Meanwhile, most security breaches in the cloud continue to be caused by end users, not the cloud providers. Breaches occur because of unintended misconfigurations, mistakes, oversights, and sloppiness (like configuring access to object storage for the entire internet).

Lastly, do not forget to back up your data. Data disappears. Data loss can happen because of a hard drive failing, a ransomware attack, or a fire in a data center. Who knows?

Technology

The following are some significant areas you should prioritize:

- Patch and Update Systems

- Encryption

- Logging, Monitoring, and Alerting

- Network Access

Software gets stale, and security vulnerabilities are constantly discovered. Always patch and update operating systems and software as new updates become available.

Encryption is not a magic bullet. It will do little to protect you if a human security breach grants access to credentials. Encryption is a baseline requirement. Data should be encrypted both at rest and over the wire.

Logging, monitoring, and alerting will observe, detect, and alert on incidents. You must be aware of suspicious events when they happen in your system. If possible, set up automatic anomaly detection.
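Automatic anomaly detection can start very simply. The sketch below flags a metric value that lies more than three standard deviations from its recent history (a z-score test), using Python’s standard `statistics` module. The metric (failed logins per hour), the sample numbers, and the threshold are illustrative assumptions; production systems usually account for seasonality and often rely on their monitoring platform’s built-in detectors.

```python
import statistics


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate normal variation
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean  # perfectly flat history: any change is unusual
    return abs(latest - mean) / stdev > threshold


# Hypothetical failed-login counts per hour over the last seven hours.
failed_logins = [5, 7, 6, 5, 8, 6, 7]
print(is_anomalous(failed_logins, 7))   # False: within normal variation
print(is_anomalous(failed_logins, 60))  # True: worth an alert
```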

As a data engineer, you will encounter databases, object storage, and servers so often that you should at least be aware of simple measures you can take to make sure you are in line with good network access practices. Understand what IPs and ports are open, to whom, and why.
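Knowing what is open, to whom, and why can be turned into an automated check. The Python sketch below audits a list of simplified, hypothetical ingress rules (the `IngressRule` type and the port labels are assumptions, not any cloud provider’s API) and flags rules that are open to the entire internet or lack a documented purpose. It uses the standard `ipaddress` module, so it handles both `0.0.0.0/0` and `::/0`.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Simplified firewall or security-group rule (hypothetical shape)."""
    port: int
    source_cidr: str
    purpose: str = ""  # why this access exists, and for whom


KNOWN_SERVICES = {22: "SSH", 5432: "PostgreSQL", 6379: "Redis", 9092: "Kafka"}


def audit(rules):
    """Return human-readable findings for risky or undocumented rules."""
    findings = []
    for rule in rules:
        service = KNOWN_SERVICES.get(rule.port, "unknown service")
        network = ipaddress.ip_network(rule.source_cidr, strict=False)
        if network.prefixlen == 0:  # 0.0.0.0/0 or ::/0 means everyone
            findings.append(f"port {rule.port} ({service}) is open to the entire internet")
        if not rule.purpose:
            findings.append(f"port {rule.port} from {rule.source_cidr} has no documented purpose")
    return findings


rules = [
    IngressRule(5432, "10.20.0.0/16", "analytics subnet to warehouse"),
    IngressRule(22, "0.0.0.0/0"),
]
for finding in audit(rules):
    print(finding)
```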

The Future of Data Engineering

In the book’s final chapter, the authors make forecasts based on historical trends and on how they think the industry might evolve from its current state. These are the chapter’s section titles:

- The Data Engineering Lifecycle Isn’t Going Away

- The Decline of Complexity and the Rise of Easy-to-Use Data Tools

- The Cloud-Scale Data OS and Improved Interoperability

- “Enterprisey” Data Engineering

- Titles and Responsibilities Will Morph…

- Moving Beyond the Modern Data Stack, Towards the Live Data Stack

- The Live Data Stack

- Streaming Pipelines and Real-Time Analytical Databases

- The Fusion of Data with Applications

- The Tight Feedback Between Applications and ML

- Dark Matter Data and the Rise of…Spreadsheets?!

Conclusion

The book revisits each topic several times, connecting it to other topics from various perspectives. By the end, the authors have built a holistic overview of what Data Engineering is about.

This way of exposing the different responsibilities allows technical and non-technical people to clearly understand the role, tasks, and relations within the team and the organization.

If you are interested in learning about data engineering, I recommend grabbing a copy of Fundamentals of Data Engineering.

I hope you enjoy reading this book as much as I did (or even more). If you’re interested in hearing more about our work, contact us about your data engineering needs.