Note: this post was written by Fokko Driesprong in 2021. It now lists Giovanni Lanzani as the author due to a migration artifact.

We all know it: building data pipelines is hard. To build maintainable pipelines within complex domains, we need simple and effective tooling that scales with the organization. Leveraging a tool like dbt and implementing DataOps makes it easy to adopt the best practices. Ensuring a life-cycle around your data models by adopting principles that we’re already familiar with from DevOps. This introduces an automated, process-oriented methodology used by analytic and data teams to improve the quality of the data and shorten the lifecycle, delivering high-quality data all the time. By combining Apache Spark with dbt, we have simplified data pipelines for both Analytics and Data Science purposes. Managed services like Databricks easily scale out the workloads. These require virtually no maintenance and leveraging the elasticity that the cloud provides.

I was involved with providing Spark support for dbt-spark. In this blog, we introduce the technologies and show how to get started.

Above is the talk that I’ve had at the Data+AI Summit. If you’re already using Databricks and dbt, please make sure to check out the talk. It demonstrates how we can leverage Delta to do incremental loads and keep the lineage of your datasets.

You might also be interested in: Cloud Trainings

dbt

dbt (data build tool) enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications. dbt is the T in ELT. Organize, cleanse, denormalize, filter, rename, and pre-aggregate the raw data in your warehouse so that it’s ready for analysis.

Databricks

Databricks is a managed Spark offering, now available in every cloud, including the recently added support for [GCP]. Databricks offers on-demand computing for running big data workloads and analytics pipelines. This is effectively the L in ETL, loading the data into Databricks, where it can be queried and refined.

At GoDataDriven we’re a certified [Databricks Partner], and I have the privilege to call myself a so-called [Databricks Developer Champion].

Getting started

First we have to install dbt using pip3, make sure that you have python version >=3.6.2 installed.

$ pip3 install "dbt-spark[ODBC]"

…

$ dbt –version

installed version: 0.19.0

latest version: 0.19.0

Up to date!

Plugins:

- spark: 0.19.0.1

You check if everything works, by running dbt --version. Next we have to configure a profile that tells us how to connect to our Databricks cluster. For this example, we’ll connect to a Databricks cluster on Azure. For other profiles, you can check out the reference profiles, this allows you to connect to vanilla Spark.

default:

target: dev

outputs:

dev:

type: spark

method: odbc

driver: [path/to/driver]

schema: [database/schema name]

host: [yourorg.sparkhost.com]

organization: [org id] # Azure Databricks only

token: [abc123]

# one of:

endpoint: [endpoint id]

cluster: [cluster id]

# optional

port: [port] # default 443

user: [user]Let’s configure the environment with the recently introduced SQL Analytics. At the time of writing, still in public preview, but at GoDataDriven we like to live dangerously.

Let’s open up SQL Analytics and lets get the credentials needed to set up the connection between dbt and Databricks. We need to create an endpoint (cluster) that will be used for running the pipeline, and we need to create a token to get access.

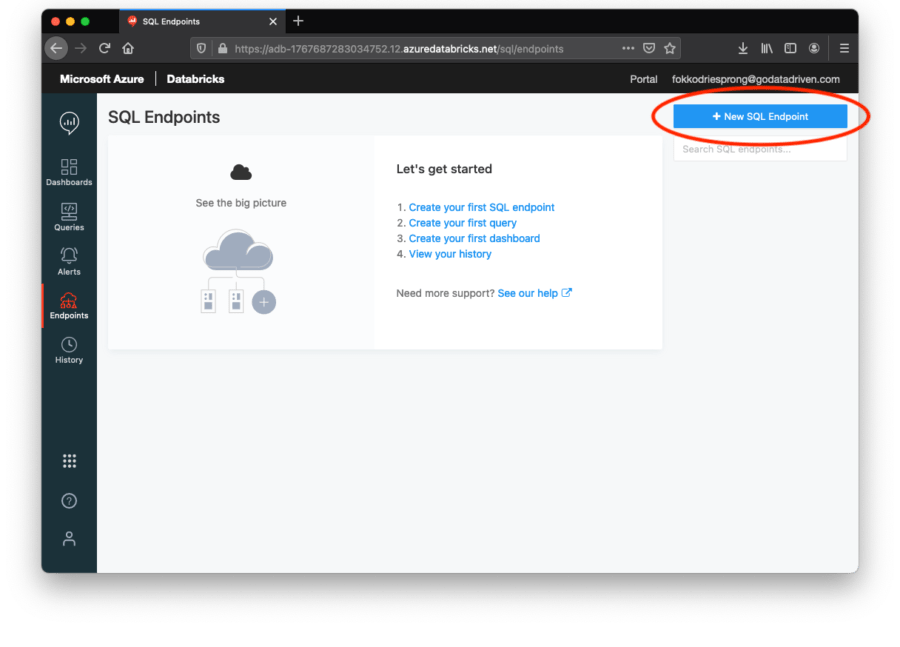

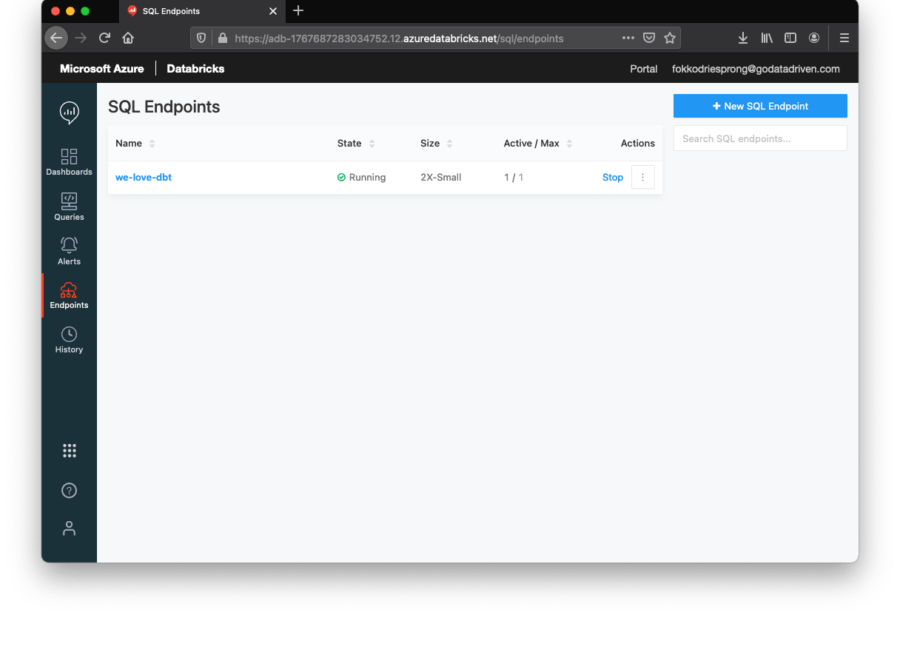

First, we go to the endpoints tab, and create a new endpoint in the top-right.

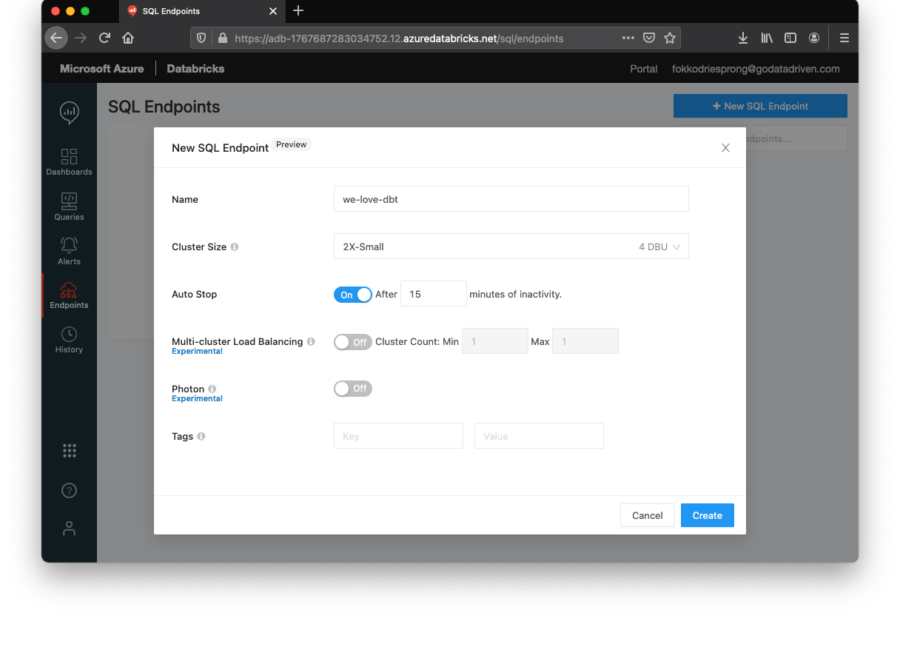

We create a small cluster, just to run our PoC. Make sure to configure an Auto Stop. This is a very handy feature by Databricks to automatically shutdown the cluster, after a set period of inactivity. Just to be nice to your credit card.

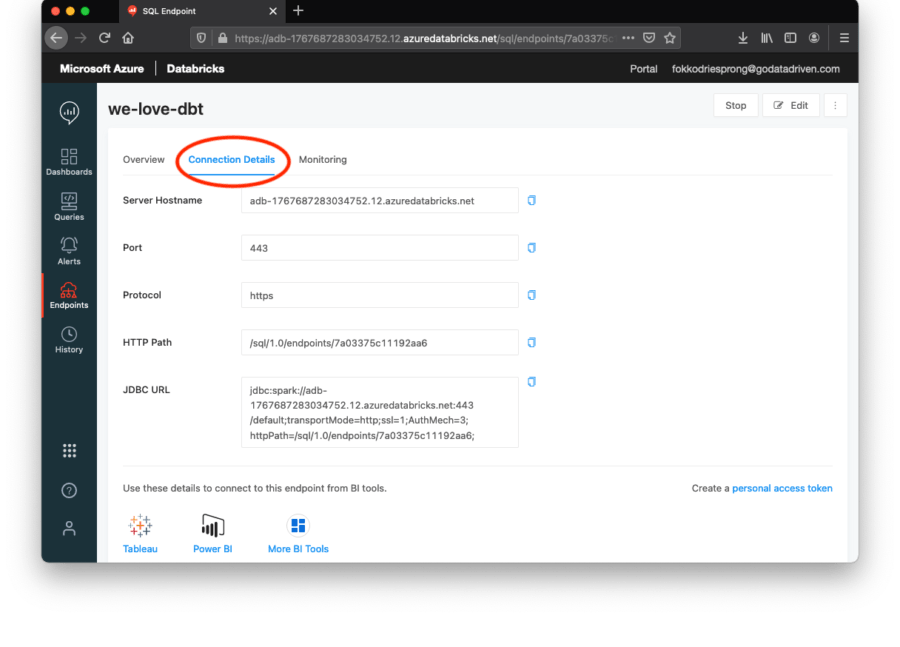

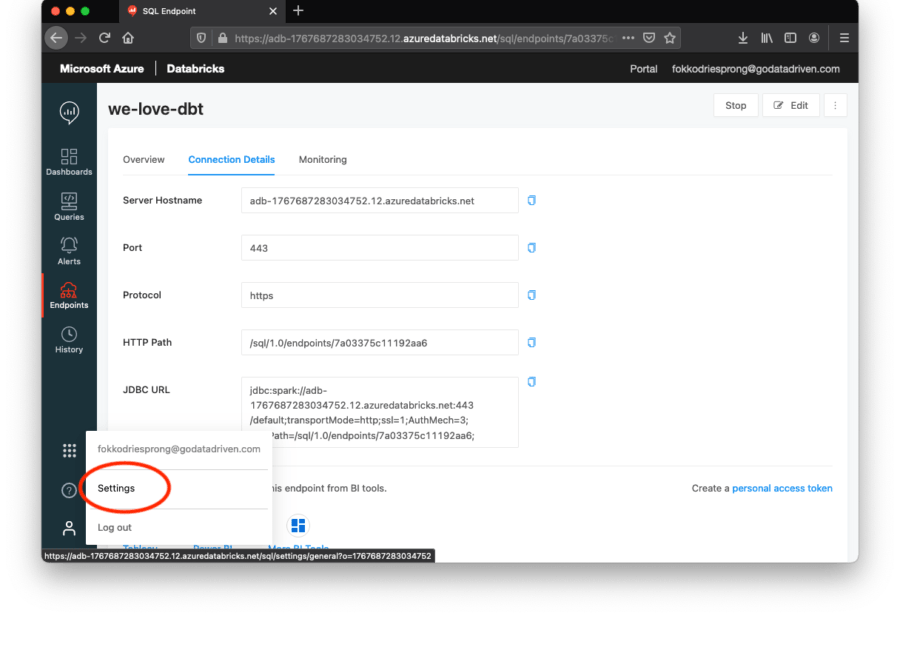

Let’s hit the Connection Details tab, and here we can find the hostname, and the endpoint. The endpoint is the last part of the HTTP Path, in the case above this is 7a03375c11192aa6.

Next, we need to get our personal access token, to get access to the environment. This can be found under the settings tab of your profile.

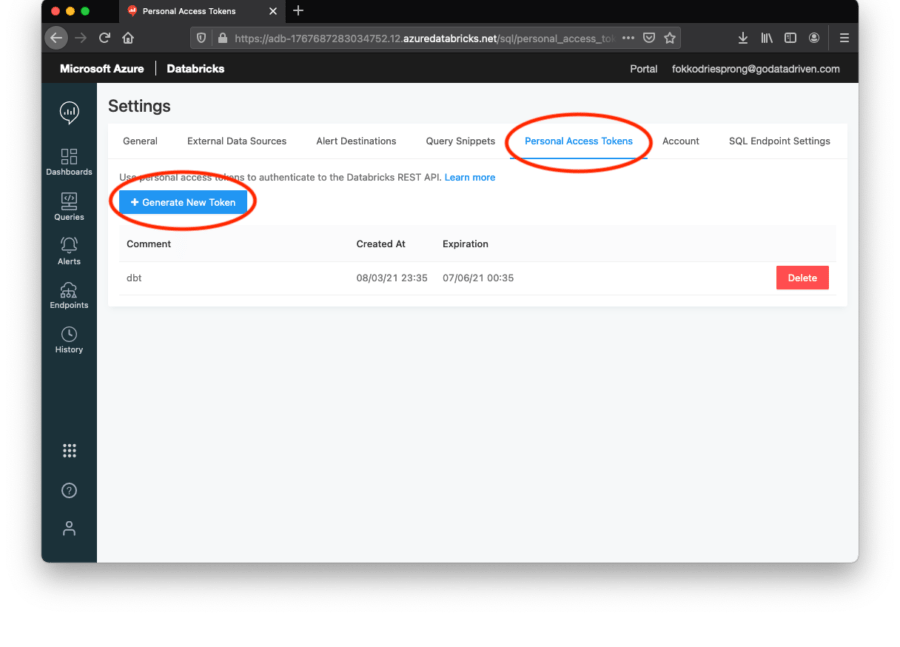

Go to the Personal Access Token, and create a new token. Make sure to never share or leak this token! With this token, 3rd parties can access your workspace, and therefore your data. It is always good practice to create short-lived tokens, or make sure to configure your networking to avoid unauthorized IP’s accessing to the environment.

Let’s create the profile:

$ mkdir -p ~/.dbt/

$ nano ~/.dbt/profile

default:

target: dev

outputs:

dev:

type: spark

method: odbc

driver: "/Library/simba/spark/lib/libsparkodbc_sbu.dylib"

schema: "default"

host: adb-1767687283012345.22.azuredatabricks.net

organization: "{ 1767687283012345 | as_text }"

token: "dapie1c2dfe5558af06ba4735f04a92012345"

endpoint: "7a03375c11012abc"

port: 443

Next we can test the connection:

$ dbt debug

Running with dbt=0.19.0

dbt version: 0.19.0

python version: 3.7.9

python path: /usr/local/opt/python@3.7/bin/python3.7

os info: Darwin-20.3.0-x86_64-i386-64bit

Using profiles.yml file at /Users/fokkodriesprong/.dbt/profiles.yml

Using dbt_project.yml file at /Users/fokkodriesprong/Desktop/dbt-data-ai-summit/dbt_project.yml

Configuration:

profiles.yml file [OK found and valid]

dbt_project.yml file [OK found and valid]

Required dependencies:

- git [OK found]

Connection:

host: adb-1767687283034752.12.azuredatabricks.net

port: 443

cluster: None

endpoint: 7a03375c11192aa6

schema: default

organization: { 1767687283034752 | as_text }

Connection test: OK connection ok

If something is off, please check logs/dbt.log for any pointers. Feel free to open up an issue if you need any help.

After running the dbt debug, the cluster should be automatically be started:

Now we should be able to run the code that was used in the Data+AI Summit talk. Let’s check out the code:

$ git clone <a href="https://github.com/godatadriven/dbt-data-ai-summit.git">https://github.com/godatadriven/dbt-data-ai-summit.git</a>

$ cd dbt-data-ai-summit

Running with dbt=0.19.0

Found 3 models, 2 tests, 0 snapshots, 0 analyses, 159 macros, 0 operations, 0 seed files, 0 sources, 0 exposures

23:43:59 | Concurrency: 1 threads (target=’dev’)

23:43:59 |

23:43:59 | 1 of 3 START table model default.order_lines……………………. [RUN]

23:44:42 | 1 of 3 OK created table model default.order_lines……………….. [OK in 42.93s]

23:44:42 | 2 of 3 START table model default.orders………………………… [RUN]

23:44:49 | 2 of 3 OK created table model default.orders……………………. [OK in 6.98s]

23:44:49 | 3 of 3 START incremental model default.revenue………………….. [RUN]

23:45:03 | 3 of 3 OK created incremental model default.revenue……………… [OK in 13.92s]

23:45:03 |

23:45:03 | Finished running 2 table models, 1 incremental model in 66.28s.

Completed successfully

Done. PASS=3 WARN=0 ERROR=0 SKIP=0 TOTAL=3

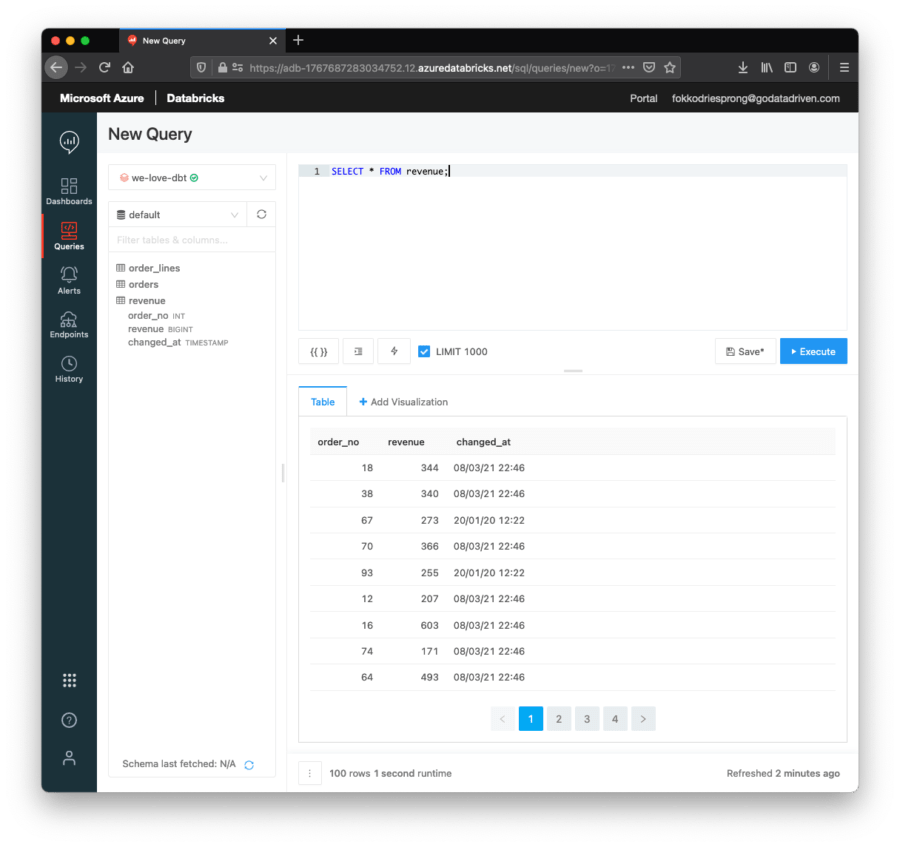

We can see that dbt ran the 3 models that are in the example. When looking in the SQL Analytics we can see the results:

We can run dbt test to make sure that all the constraints and data checks still hold:

$ dbt test

Running with dbt=0.19.0

Found 3 models, 2 tests, 0 snapshots, 0 analyses, 159 macros, 0 operations, 0 seed files, 0 sources, 0 exposures

10:02:29 | Concurrency: 1 threads (target=’dev’)

10:02:29 |

10:02:29 | 1 of 2 START test not_null_revenue_order_no…………………….. [RUN]

10:02:30 | 1 of 2 PASS not_null_revenue_order_no………………………….. [PASS in 0.80s]

10:02:30 | 2 of 2 START test unique_revenue_order_no………………………. [RUN]

10:02:31 | 2 of 2 PASS unique_revenue_order_no……………………………. [PASS in 1.07s]

10:02:31 |

10:02:31 | Finished running 2 tests in 3.65s.

Completed successfully

Done. PASS=2 WARN=0 ERROR=0 SKIP=0 TOTAL=2

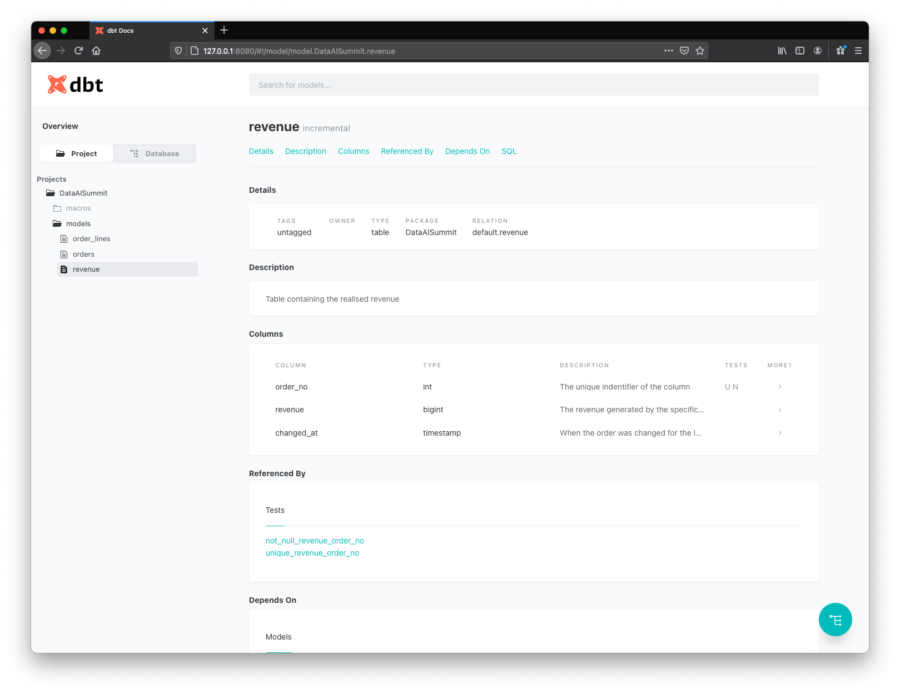

Finally, we can generate docs using dbt docs generate && dbt docs serve:

$ dbt docs generate && dbt docs serve

Running with dbt=0.19.0

Found 3 models, 2 tests, 0 snapshots, 0 analyses, 159 macros, 0 operations, 0 seed files, 0 sources, 0 exposures

10:03:36 | Concurrency: 1 threads (target=’dev’)

10:03:36 |

10:03:36 | Done.

10:03:36 | Building catalog

10:03:39 | Catalog written to /Users/fokkodriesprong/Desktop/dbt-data-ai-summit/target/catalog.json

Running with dbt=0.19.0

Serving docs at 0.0.0.0:8080

To access from your browser, navigate to: localhost:8080

Press Ctrl+C to exit.

This will also launch a browser with the docs:

Of course, this is a simple example. But using dbt on top of Databricks enables you to develop data pipelines that are scalable with the power of Spark/Databricks, and are maintainable by implementing the DataOps priciples provided by dbt.

Interested?

In this blog we demonstrated how to set up a basic pipeline. However, in a production setting, you will need additional measures, such as monitoring/alerting, data retention, staging environments, continuous deployment, networking and much more automation. If you need assistance setting up pipelines like these, please don’t hesitate to reach out.