5G takes latency to a completely new level, opening up new possibilities in fields such as autonomous vehicles and robotic surgery. So how can this low latency be achieved in 2021?

What is Latency and Why Does it Matter?

5G – the fifth generation of the cellular network standard – is undoubtedly a technological game-changer. Compared to 4G, it introduces an entirely new level of data transmission speed, reliability, number of connected devices, and, maybe most importantly, low latency.

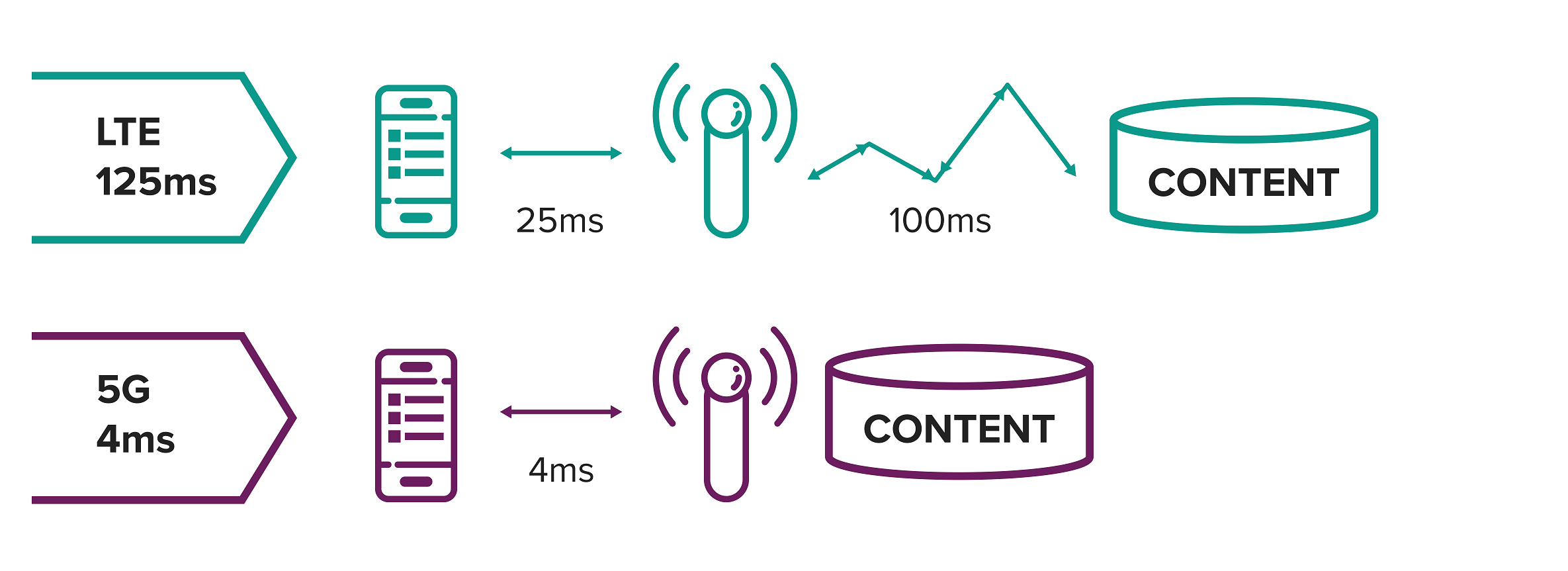

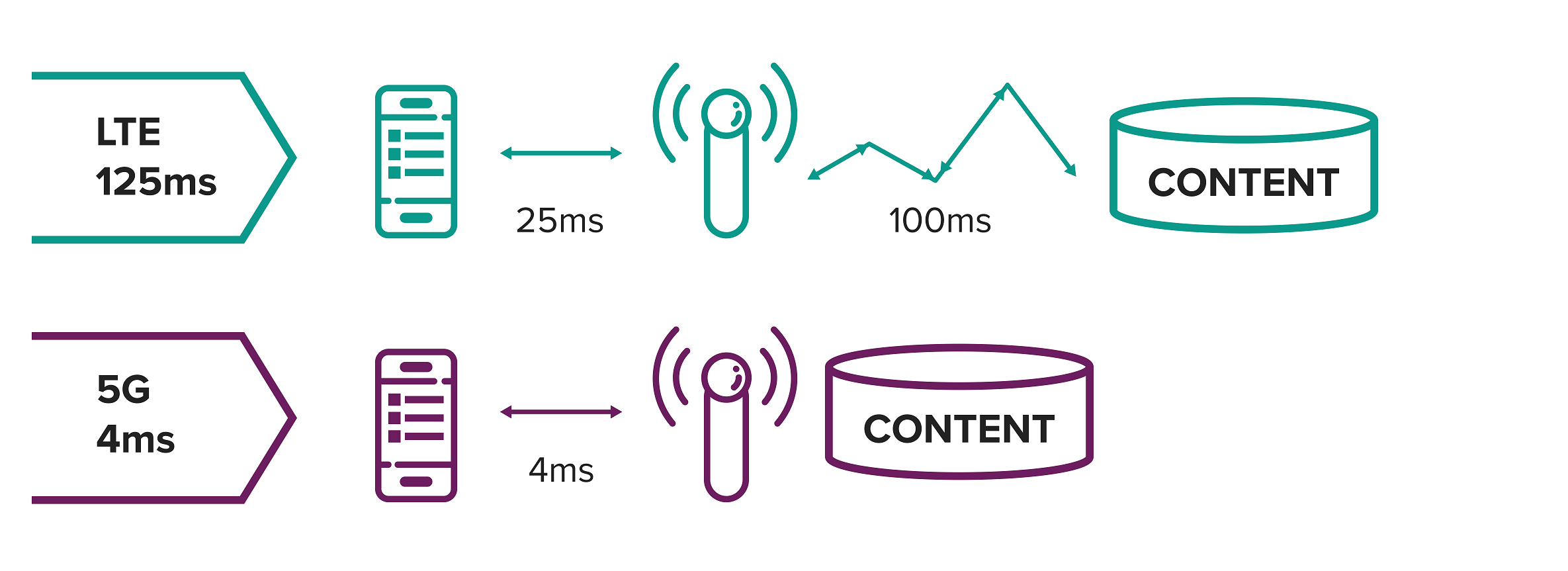

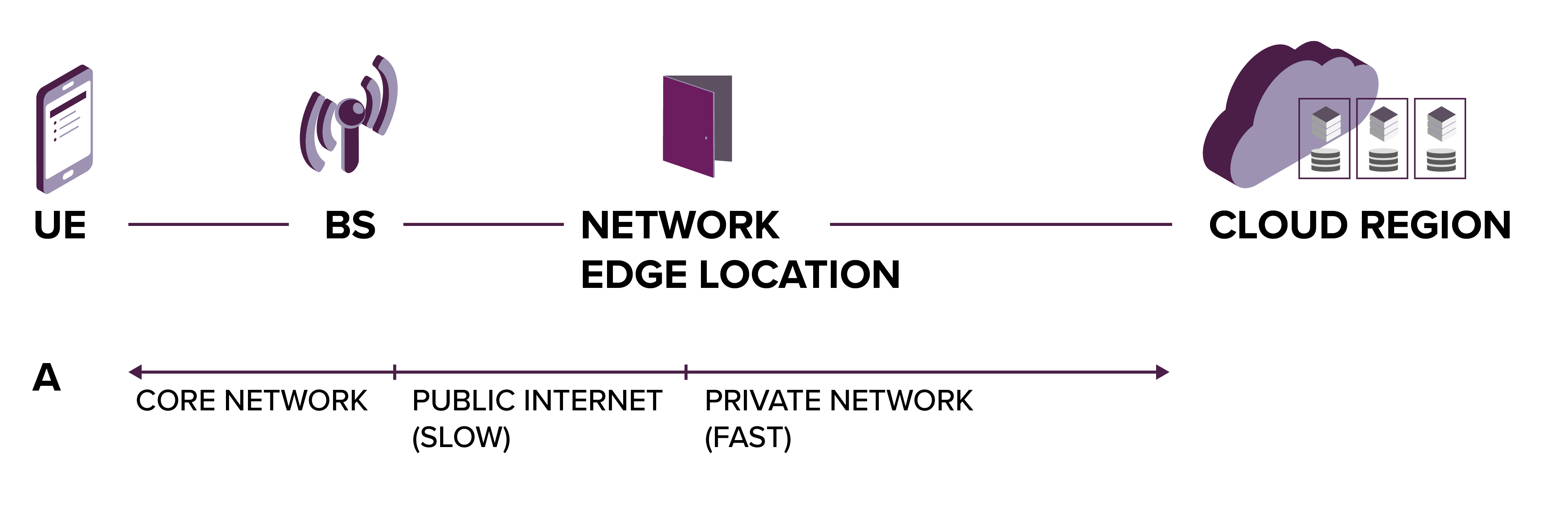

With 4G (LTE), it took 10 to 100 milliseconds for a piece of data to travel over the air between the mobile device and the base station. 5G reduces this time to single-digit milliseconds, and several use cases urgently need latency this low.

An example of LTE vs optimised 5G setup latency difference

Autonomous vehicles communicate with the infrastructure and with each other, and they need to do it without delay: even a split-second lag can cause a collision. In high-frequency trading, where stock prices change in the blink of an eye (and the fastest bidder snatches the profit!), every millisecond is priceless. In multiplayer games, input lag often decides a win or a loss. In AR, the augmentation needs to keep up with reality to sustain the illusion of a magical, real-time experience. Not to mention remote health care and robotic surgery, where the doctor needs precise feedback to operate on a patient safely.

The final latency is the sum of several components and depends on the use case and the approach; there are a few moving parts at play. In this article, we’re going to take a look at these dependencies and determine what contributes to the final latency.
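
To make the "sum of several components" idea concrete, here is a minimal Python sketch of a one-way latency budget. The four components and every number below are illustrative assumptions, not measurements; the point is only that the total is additive, so the largest term is the one worth attacking first.

```python
from dataclasses import dataclass, fields


@dataclass
class LatencyBudget:
    """Hypothetical one-way latency components, all in milliseconds."""
    device_processing: float  # time spent on the mobile device
    air_interface: float      # radio hop between device and base station
    transport: float          # travel from the base station to the server
    server_processing: float  # time spent handling the request on the server

    def one_way_ms(self) -> float:
        # End-to-end latency is simply the sum of its parts.
        return sum(getattr(self, f.name) for f in fields(self))

    def dominant(self) -> str:
        # Name of the component that contributes the most.
        return max(fields(self), key=lambda f: getattr(self, f.name)).name


# Illustrative values only.
legacy = LatencyBudget(device_processing=5.0, air_interface=30.0, transport=40.0, server_processing=10.0)
edge_5g = LatencyBudget(device_processing=2.0, air_interface=4.0, transport=1.0, server_processing=2.0)
print(legacy.one_way_ms(), legacy.dominant())    # 85.0 transport
print(edge_5g.one_way_ms(), edge_5g.dominant())  # 9.0 air_interface
```

In the first (hypothetical) budget, the transport leg dominates, so a faster radio alone would not help much; in the second, the air interface is the largest remaining term.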

Air Interface in the 5G Standard

This is where the first hop of our data takes place: between the mobile device and the base station. In 5G, the Radio Access Technology specification goes by a simple name: New Radio (NR). So what makes the latency so much lower than before? There are a few tricks to that.

Firstly – Scalable Slot Duration. The length of the slots used for data transmission is no longer fixed; it scales to suit different bandwidths, spectrum bands and service types.
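
In 3GPP terms, this scalability is known as flexible numerology: a numerology index μ sets a subcarrier spacing of 15·2^μ kHz and a slot duration of 1/2^μ ms, with 14 OFDM symbols per slot under the normal cyclic prefix. The Python sketch below just evaluates those relations for μ = 0 to 4; it ignores the extended cyclic prefix and the slightly longer first symbol of each half-subframe, and the function names are illustrative.

```python
SYMBOLS_PER_SLOT = 14  # OFDM symbols per slot with the normal cyclic prefix


def subcarrier_spacing_khz(mu: int) -> int:
    """Subcarrier spacing for numerology index mu: 15 kHz * 2^mu."""
    return 15 * 2 ** mu


def slot_duration_ms(mu: int) -> float:
    """Slot length: a 1 ms subframe holds 2^mu slots."""
    return 1.0 / 2 ** mu


def avg_symbol_duration_us(mu: int) -> float:
    """Average OFDM symbol length (including cyclic prefix) in microseconds."""
    return slot_duration_ms(mu) * 1000 / SYMBOLS_PER_SLOT


for mu in range(5):
    print(
        f"mu={mu}: spacing {subcarrier_spacing_khz(mu):>3} kHz, "
        f"slot {slot_duration_ms(mu):.4f} ms, symbol ~{avg_symbol_duration_us(mu):.1f} us"
    )
```

At 120 kHz spacing (μ = 3), a slot lasts 0.125 ms, an eighth of LTE's fixed 1 ms transmission time interval.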

Secondly – Mini-Slot Scheduling. A transmission can occupy just part of the symbols in a full slot and start without waiting for the next slot boundary, for even more flexibility.
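
A mini-slot saves time in two ways: the transmission itself is shorter, and it does not have to wait for a slot boundary. The Python sketch below covers only the first effect, computing the approximate air time of a transmission spanning a given number of symbols. It assumes the normal cyclic prefix (14 symbols per slot) and treats all symbols as equally long; Release 15 mini-slots are commonly described as 2, 4 or 7 symbols long, and the function name is illustrative.

```python
SYMBOLS_PER_SLOT = 14  # normal cyclic prefix


def transmission_time_ms(mu: int, n_symbols: int) -> float:
    """Approximate air time of a transmission spanning n_symbols OFDM symbols."""
    if not 1 <= n_symbols <= SYMBOLS_PER_SLOT:
        raise ValueError("a (mini-)slot spans 1 to 14 symbols")
    slot_ms = 1.0 / 2 ** mu  # slot length for numerology mu
    return slot_ms * n_symbols / SYMBOLS_PER_SLOT


full_slot = transmission_time_ms(mu=0, n_symbols=14)
mini_slot = transmission_time_ms(mu=0, n_symbols=2)
print(f"full slot: {full_slot:.3f} ms, 2-symbol mini-slot: {mini_slot:.3f} ms")
# full slot: 1.000 ms, 2-symbol mini-slot: 0.143 ms
```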

Thirdly – Grant-Free Transmission. The device doesn’t have to wait for the base station to assign resources; it can start transmitting immediately, thanks to techniques such as Multi-Access Analog Fountain Codes.
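
A rough comparison of the two uplink access patterns, as a Python sketch. In the grant-based case, the device waits for a scheduling-request opportunity, asks for resources and waits for the grant before sending data; in the grant-free case, it only waits for the next pre-configured transmission occasion. All parameter names and values are hypothetical, and the model ignores retransmissions and collisions between devices.

```python
def grant_based_ms(sr_period_ms: float, grant_delay_ms: float,
                   ue_processing_ms: float, tx_ms: float) -> float:
    """Scheduling-request based uplink: wait for an SR opportunity (half a period
    on average), get the grant, prepare the data, then transmit."""
    return sr_period_ms / 2 + grant_delay_ms + ue_processing_ms + tx_ms


def grant_free_ms(occasion_period_ms: float, tx_ms: float) -> float:
    """Grant-free uplink: wait for the next pre-configured occasion, then transmit."""
    return occasion_period_ms / 2 + tx_ms


# Hypothetical values, e.g. 0.5 ms slots (30 kHz spacing).
print(grant_based_ms(sr_period_ms=1.0, grant_delay_ms=1.0, ue_processing_ms=0.5, tx_ms=0.5))  # 2.5
print(grant_free_ms(occasion_period_ms=0.5, tx_ms=0.5))  # 0.75
```

The saving comes almost entirely from removing the request-and-grant round trip, which is why the gap grows when that exchange is slow.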

And finally – Pre-emptive Scheduling and Downlink Multiplexing. Small, latency-critical pieces of data for one device can cut into an ongoing transmission to another device.
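
Pre-emption can be sketched as an operation on a per-symbol slot map: a latency-critical transmission overwrites a few symbols already allocated to a broadband user. This is a simplified Python model with hypothetical user labels, not a scheduler implementation. In NR, the base station also has to tell the affected device which symbols were taken (Release 15 defines a pre-emption indication, DCI format 2_1, for this) so that it does not decode them as its own data.

```python
from typing import List


def preempt(allocation: List[str], start: int, length: int, ue: str) -> List[str]:
    """Return a copy of a per-symbol slot allocation in which `length` symbols
    starting at `start` are taken over by a latency-critical device `ue`."""
    if start < 0 or length < 1 or start + length > len(allocation):
        raise ValueError("pre-emption must fit inside the slot")
    result = list(allocation)
    for i in range(start, start + length):
        result[i] = ue
    return result


slot = ["eMBB-A"] * 14  # 14-symbol slot fully given to a broadband user
slot = preempt(slot, start=5, length=2, ue="URLLC-B")
print(slot.count("eMBB-A"), slot[5:7])  # 12 ['URLLC-B', 'URLLC-B']
```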

Beyond these tricks and the overall improvements in hardware speed, there is more we could touch on, but for now we are not going to dive any deeper.

MEC – Multi-Access Edge Computing

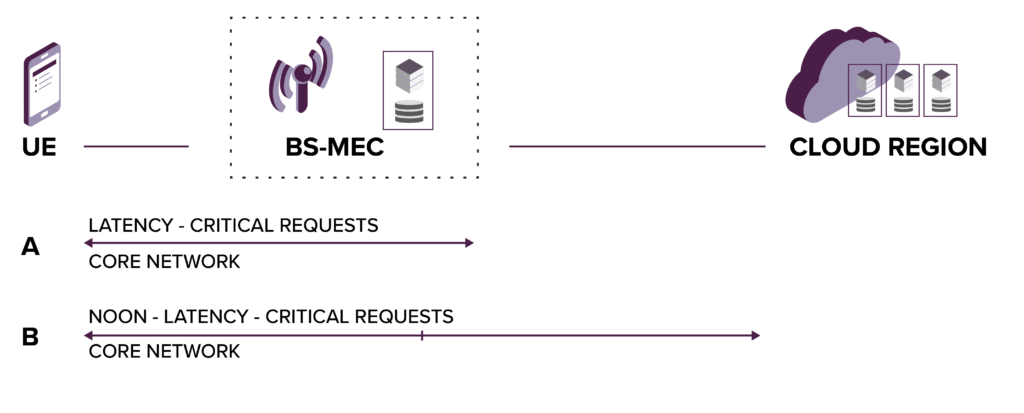

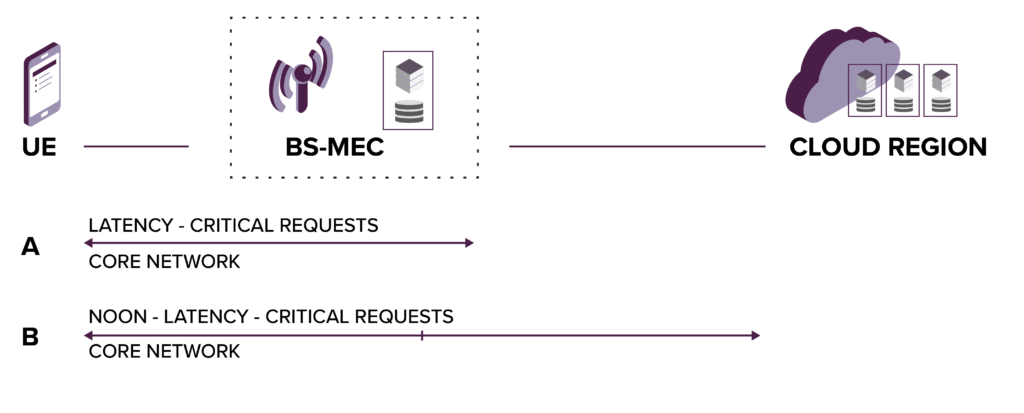

Having a fast air interface is excellent, but it isn’t a game-changer if the request from the base station needs to travel halfway around the world to reach its actual destination. The obvious solution is to move the destination closer to the base station. One way to achieve this is Multi-Access Edge Computing, an architecture concept standardised by ETSI.
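
Why distance matters so much can be shown with back-of-the-envelope arithmetic. Light in optical fibre travels at roughly 200,000 km/s (about two thirds of its speed in a vacuum), so every 100 km of fibre adds about 1 ms of round-trip time before any routing, queuing or processing. The Python helper below assumes that rule of thumb and a perfectly straight cable, so it gives a lower bound only.

```python
FIBRE_KM_PER_S = 200_000  # light in glass: roughly 2/3 of c (rule of thumb)


def fibre_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over a straight fibre run, in ms.

    Ignores routing detours, queuing and processing at every hop.
    """
    return distance_km * 2 * 1000 / FIBRE_KM_PER_S


for label, km in [("MEC in the same city", 10), ("another country", 1_000), ("half the world", 20_000)]:
    print(f"{label:>22}: {fibre_rtt_ms(km):6.1f} ms")
```

Even the 200 ms physical floor for half the world dwarfs the single-digit milliseconds gained on the air interface.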

The idea of MEC is to move computing resources (virtual machines) onto servers that are part of the mobile access network’s base stations. The 5G architecture has shifted towards virtualised services hosted on more generic hardware, which opens up the option of letting third parties use the same hardware.

Even though base stations belong to many different business entities, major Cloud providers have simplified the problem by acting as middlemen between telco companies and MEC application developers (e.g. AWS Wavelength or Azure Network Edge Compute).

From the Cloud user’s point of view, MEC can be treated as just another Availability Zone in the region. If you’d like to know how to set this up, you can learn more about implementing MEC solutions in this article.
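
If MEC zones show up alongside regular Availability Zones, placing a workload can become a simple latency-driven choice. A minimal Python sketch, assuming you already have round-trip measurements from representative clients (how they are collected is out of scope here); the zone names are hypothetical placeholders, not real AWS or Azure identifiers.

```python
from typing import Dict


def pick_zone(rtt_ms: Dict[str, float], max_rtt_ms: float) -> str:
    """Pick the lowest-latency zone; fail if even the best one misses the target."""
    zone, rtt = min(rtt_ms.items(), key=lambda item: item[1])
    if rtt > max_rtt_ms:
        raise RuntimeError(f"no zone meets {max_rtt_ms} ms (best: {zone} at {rtt} ms)")
    return zone


measured = {
    "region-az-a": 28.0,      # hypothetical regular Availability Zone
    "region-az-b": 31.5,      # hypothetical regular Availability Zone
    "region-mec-city1": 6.2,  # hypothetical MEC zone at the operator's edge
}
print(pick_zone(measured, max_rtt_ms=10.0))  # region-mec-city1
```

Failing loudly when no zone meets the target is a deliberate choice: for a latency-critical service, silently falling back to a distant zone may be worse than not serving the request at all.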

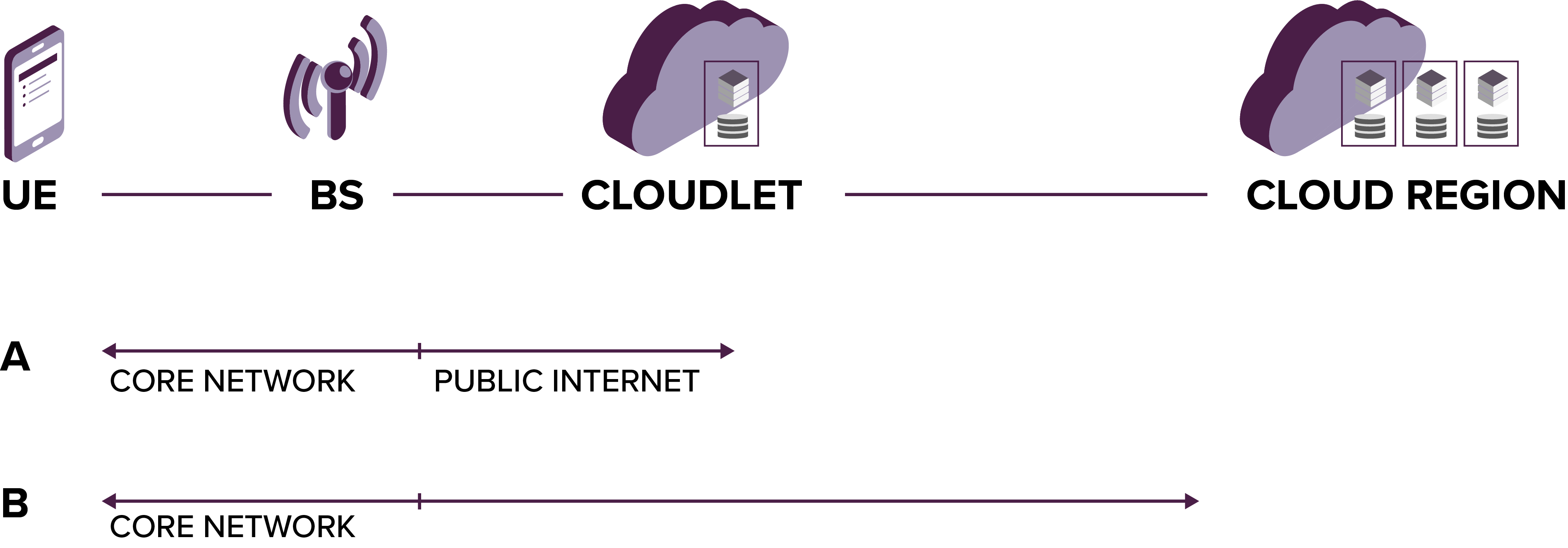

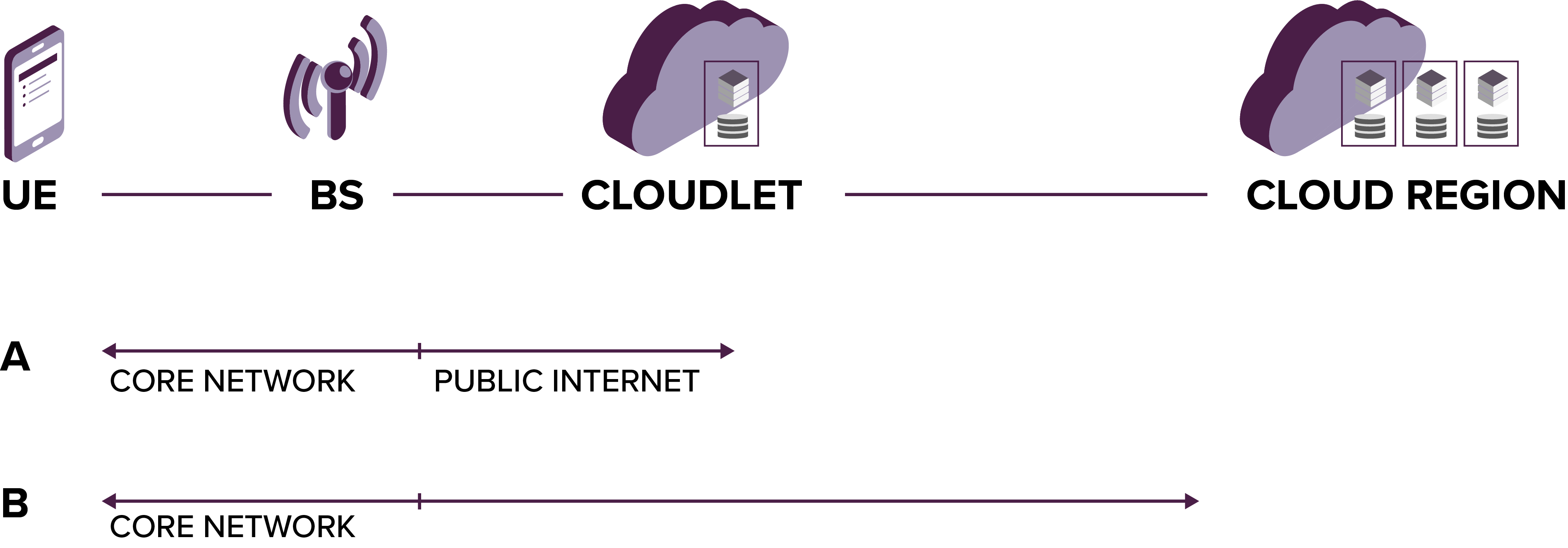

The Cloudlet

When MEC isn’t an option (due to the limited capacity of its regular servers, or a need for specialised hardware to, for example, efficiently run machine learning or stream a graphics-heavy game in 4K), we can still move close to the user, just outside the telecommunication network.

The Cloudlet might be a somewhat vague term. In essence, it’s any small datacentre located close to users and linked to a full-scale Cloud for when massive support is needed. It can be, for example, generic hardware hosted in a datacentre in the same city as our users and connected using a Hybrid Cloud approach.


It might be slightly slower than MEC, but it’s still much faster than travelling across two countries to reach the core Cloud region. AWS Outposts are an interesting option for those who want to minimise dealing with physical hardware: you can order an enclosed piece of Cloud hardware to be delivered to your premises, plug it into the network, and let AWS monitor it remotely (and even send people to fix any hardware issues for you!).

The Cloudlet acts as a private Availability Zone in your region – similarly to MEC.
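
Choosing between MEC, a Cloudlet and a Cloud region is not only about network distance: a site with specialised hardware can win even if it is a few milliseconds further away. The Python sketch below scores each site by round-trip time plus processing time and skips sites without free capacity. The site list and all timings are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Site:
    name: str
    rtt_ms: float              # network round trip from the user to the site
    compute_ms: float          # time to process one request on the site's hardware
    has_capacity: bool = True  # whether the site can take more load


def best_site(sites: List[Site]) -> Site:
    """Choose the site with the lowest response time (rtt + compute)."""
    candidates = [s for s in sites if s.has_capacity]
    if not candidates:
        raise RuntimeError("no site has free capacity")
    return min(candidates, key=lambda s: s.rtt_ms + s.compute_ms)


sites = [
    Site("MEC (CPU only)", rtt_ms=5.0, compute_ms=40.0),
    Site("Cloudlet (GPU)", rtt_ms=9.0, compute_ms=8.0),
    Site("Cloud region (GPU)", rtt_ms=35.0, compute_ms=8.0),
]
print(best_site(sites).name)  # Cloudlet (GPU)
```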

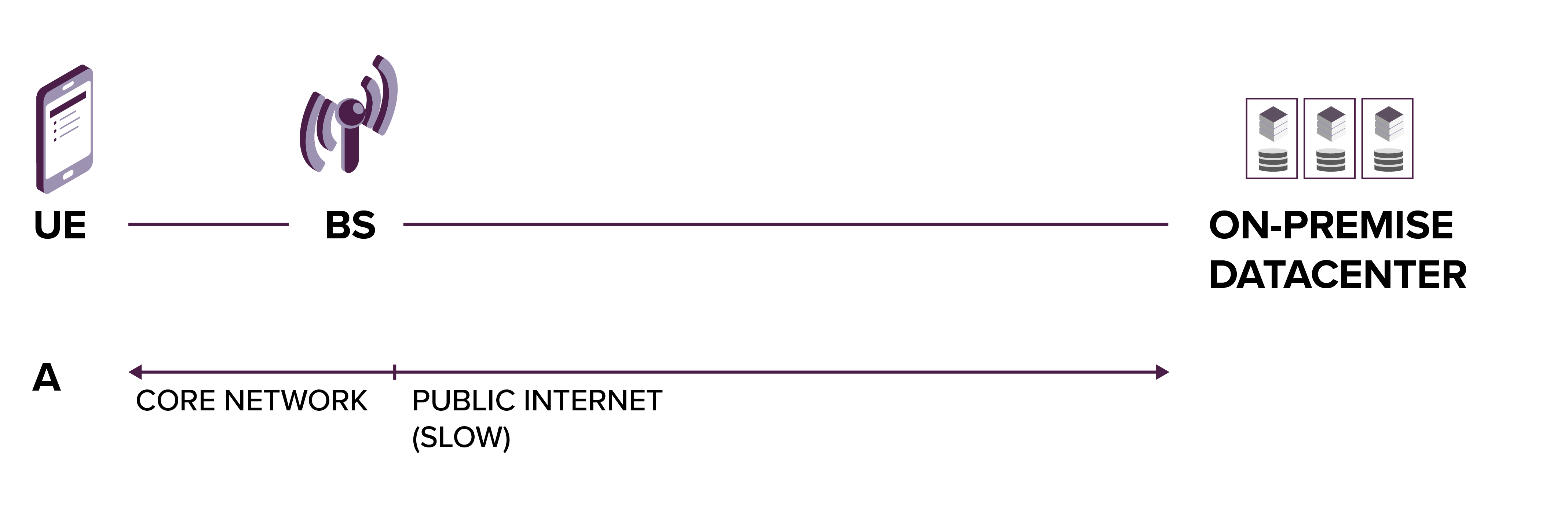

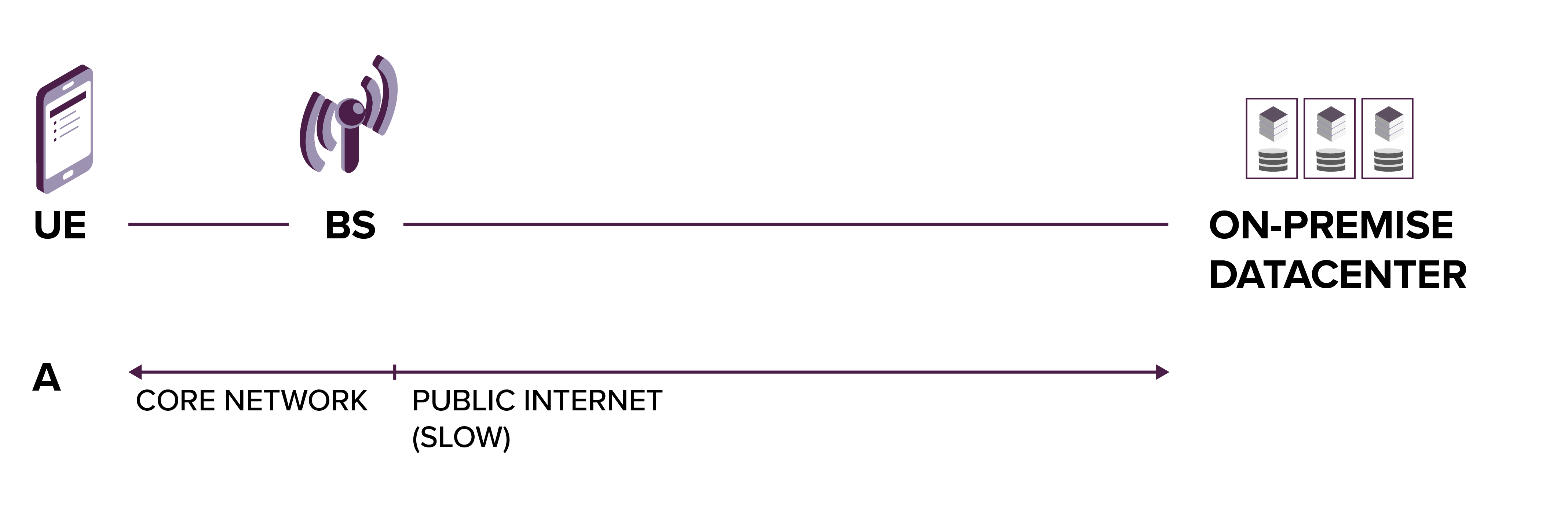

The Cloud

Hosting in the Cloud has the potential to reduce latency even further than MEC and the Cloudlet. Major Cloud providers each have over twenty datacentres around the globe. Deploying software in several geographically strategic locations will save us many more milliseconds than one or two classic on-premises datacentres.
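
Choosing where to serve each user in a multi-region setup can start with a simple nearest-region rule. The Python sketch below uses the haversine formula for great-circle distance, which is only a proxy for latency (real routes are longer and not straight). The region list uses approximate city coordinates and is illustrative, not any provider's actual catalogue.

```python
from math import asin, cos, radians, sin, sqrt
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0
LatLon = Tuple[float, float]


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (latitude, longitude) points in km."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def nearest_region(user: LatLon, regions: Dict[str, LatLon]) -> str:
    """Name of the region geographically closest to the user."""
    return min(regions, key=lambda name: haversine_km(user, regions[name]))


# Approximate city coordinates; the set of regions is illustrative.
REGIONS: Dict[str, LatLon] = {
    "Warsaw": (52.23, 21.01),
    "Frankfurt": (50.11, 8.68),
    "Zurich": (47.37, 8.54),
}

krakow = (50.06, 19.94)
print(nearest_region(krakow, REGIONS))  # Warsaw
print(round(haversine_km(REGIONS["Warsaw"], REGIONS["Zurich"])))  # a little over 1,000 km
```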

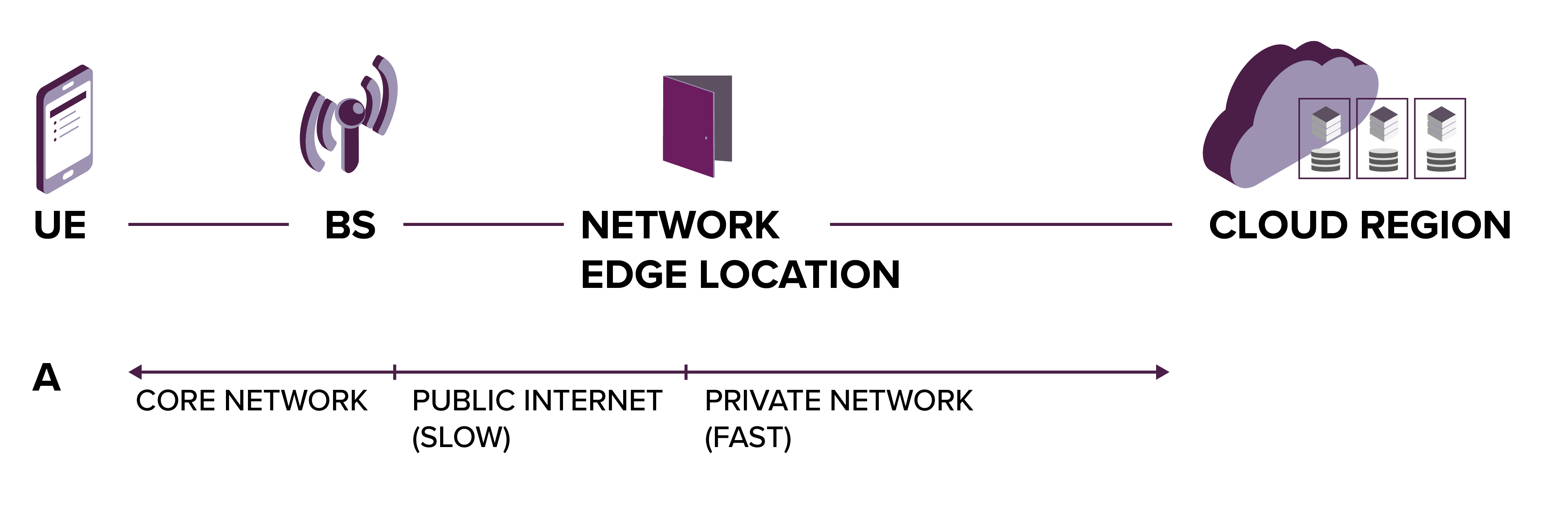

What if the location of our data is restricted by law? For example, say you are a Swiss bank and your systems need to be physically in Switzerland. Luckily, from a legal perspective, there isn’t a difference between keeping servers in your basement and using Google Cloud Platform’s Zurich region. But there is a difference in speed. Google Cloud datacentres are connected by private optical fibre not only to each other but also to over 150 network edge locations around the globe, and that’s where the reduced latency comes from.

Google (with its Premium network tier) will keep your packets in its own network for as long as possible. Even if your compute is bound to a single location, you can reach your users significantly faster than by frantically hopping across public Internet nodes. For example, if the user is 100 km away from Warsaw, the request might enter Google’s network at a local edge location and travel to Zurich much faster than it would over the public Internet.
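
A toy model shows why staying on a private backbone helps even when the destination is fixed. It assumes round-trip time is fibre propagation (about 200,000 km/s) plus a fixed processing and queuing cost per router hop in each direction. The route lengths, hop counts and per-hop costs below are hypothetical, picked only to contrast a longer, more fragmented public path with a shorter, cleaner private one.

```python
FIBRE_KM_PER_MS = 200.0  # ~200,000 km/s, a common rule of thumb


def path_rtt_ms(route_km: float, hops: int, per_hop_ms: float) -> float:
    """Toy round-trip model: propagation both ways plus per-hop cost both ways."""
    propagation = 2 * route_km / FIBRE_KM_PER_MS
    forwarding = 2 * hops * per_hop_ms
    return propagation + forwarding


# Hypothetical user ~100 km from Warsaw reaching a service hosted in Zurich.
public_internet = path_rtt_ms(route_km=1700, hops=15, per_hop_ms=0.5)
private_backbone = path_rtt_ms(route_km=1300, hops=6, per_hop_ms=0.2)
print(f"public Internet: {public_internet:.1f} ms")    # 32.0 ms
print(f"private backbone: {private_backbone:.1f} ms")  # 15.4 ms
```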

Client and Server

We’ve talked a lot about the communication between the mobile device and the server, but we still need to mention two important sources of latency: the device and the server themselves.

Depending on the use case, we might not always have control over the mobile device hardware. Luckily, we can often make up for it on the server side by choosing the right CPU and memory from the available options, or by adding graphics cards, Tensor Processing Units or even custom FPGA chips.

There’s also a lot we can do in the software domain to keep latency under control.

- Properly architecting the API (performing a single request instead of multiple requests on critical paths).

- Non-blocking IO (if we need many requests that can be parallelised).

- Optimised network protocol stacks and kernel-bypass packet processing (e.g. with DPDK).

- Lightweight frameworks and libraries.

- Reducing unnecessary dependencies and footprints.

- Selecting appropriate languages and writing low-level code constructs with speed in mind.

- Computational complexity awareness.

- Batch processing and multithreading.

- Properly utilising NUMA (Non-Uniform Memory Access) and SR-IOV (Single Root I/O Virtualization).

- Further fine-tuning of virtual machines, operating systems, containers and their orchestration, garbage collectors.

…or whatever else is there to eat up our precious milliseconds down the technology chain!
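
The effect of the first two items on the list can be illustrated with Python's asyncio. The sketch below simulates a network call with `asyncio.sleep` and a hypothetical 50 ms round trip, then fetches three items sequentially, concurrently with `asyncio.gather`, and through a single batched call. `fetch` and `fetch_batch` are placeholders for real API calls.

```python
import asyncio
import time
from typing import List

RTT_S = 0.05  # hypothetical 50 ms round trip per request


async def fetch(item: str) -> str:
    # Stand-in for a network call: waits one round trip without blocking the loop.
    await asyncio.sleep(RTT_S)
    return f"data:{item}"


async def fetch_batch(items: List[str]) -> List[str]:
    # Stand-in for a single API call that returns several items at once.
    await asyncio.sleep(RTT_S)
    return [f"data:{i}" for i in items]


async def sequential(items: List[str]) -> List[str]:
    # Each request waits for the previous one: the round trips add up.
    return [await fetch(i) for i in items]


async def concurrent(items: List[str]) -> List[str]:
    # Non-blocking IO: all requests are in flight at the same time.
    return list(await asyncio.gather(*(fetch(i) for i in items)))


async def main() -> dict:
    items = ["a", "b", "c"]
    timings = {}
    for name, fn in [("sequential", sequential), ("concurrent", concurrent), ("batched", fetch_batch)]:
        start = time.perf_counter()
        await fn(items)
        timings[name] = time.perf_counter() - start
    return timings


timings = asyncio.run(main())
for name, seconds in timings.items():
    print(f"{name:>10}: {seconds * 1000:.0f} ms")
```

Sequential calls pay the round trip three times (about 150 ms), while the concurrent and batched versions take roughly one round trip each. Batching also reduces the number of requests the server has to handle, which is why it is preferable on critical paths when the API allows it.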

5G – Business Perspective

Latency is a delicate subject: it can be gained or lost in many places between the mobile device and its backend. If everything is well architected, implemented and deployed, 5G makes single-digit millisecond latency achievable, which opens up an entirely new level of possibilities for businesses across a vast number of use cases.

And to see what the difference between 16 and 17 milliseconds of round-trip latency can lead to, check out the movie… The Hummingbird Project!

See also: How 5G can empower smart factories.
