The Wednesday afternoon session of AWS re:Invent 2023 offered a deep dive into AWS AI and ML Services, and the many ways that enterprise customers are transforming their business models with generative AI.

Dr. Bratin Saha, VP of AWS AI and ML Services, believes, “With the emergence of generative AI, we are at a tipping point in the widespread adoption of machine learning.” He argues that global firms are already building AI/ML applications and integrating them into their day-to-day operations, and that even at this early stage we can clearly identify a range of conceptual and digital best practices.

Dr. Saha identified five central goals or areas of thematic focus for successful AI/ML implementations:

- Choice & Flexibility of Models: Amazon Bedrock offers a wide choice of LLMs and foundation models, in part because no single model is best at everything. Well-known models such as GPT-4, LaMDA, and Claude 2 each have distinct strengths and weaknesses: one may offer better price/performance, while another may yield more complete or accurate answers to queries.

- Differentiate with your Data: Everything begins and ends with data – and as Dr. Saha pointed out, without a robust data platform, it’s very hard to build AI and ML applications. Hence, companies must develop an optimized data platform and data strategy, including KPIs for data acquisition, error correction, integration, storage and more.

- Responsible AI Integration: AI should feature “access control by employee role.” For example, your CEO may need to see the company’s quarterly sales forecast, but most staff members should not have this information ahead of an earnings announcement.

- Low-Cost, High-Performance Infrastructure: Over the past six years, we’ve seen a 1,000X increase in the size of LLMs, and a 100,000X increase in the amount of compute resources allocated to AI/ML. Given these extraordinary demands, continual improvements in cost and performance are essential. Examples include recent cost/performance advances in Amazon S3 and the Aurora Serverless database, as well as in AWS-designed chips such as Graviton4, Inferentia, and Trainium2.

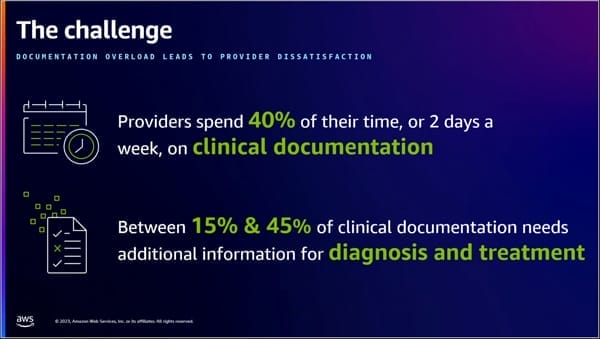

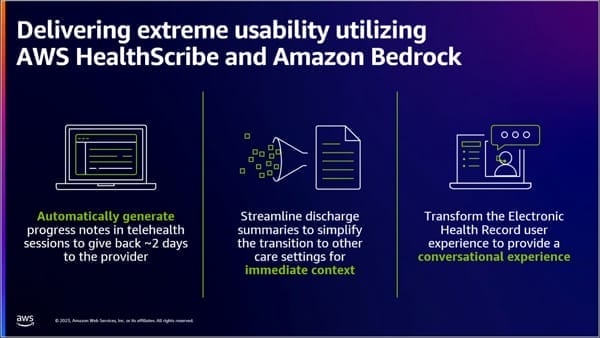

- Generative AI-Powered Applications: AI/ML can save time and money, and make life easier and more productive for workers in all fields. Incredibly, doctors spend up to 40% of their time on “scribe” work, manually typing up patient exam records, treatment details, and so on. AWS HealthScribe can use AI to automate this documentation, helping busy physicians recover as much as two full days per week.
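The “access control by employee role” idea from the Responsible AI point above can be illustrated with a filter that runs before any document reaches a model’s prompt. This is a minimal sketch under stated assumptions: `Document`, `visible_documents`, and `build_prompt` are hypothetical names, the roles are made up, and it is not how Amazon Bedrock or any other AWS service implements access control.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    """A hypothetical knowledge-base entry tagged with the roles allowed to read it."""

    title: str
    text: str
    allowed_roles: FrozenSet[str]


def visible_documents(docs: List[Document], role: str) -> List[Document]:
    """Keep only the documents that the given employee role may see."""
    return [doc for doc in docs if role in doc.allowed_roles]


def build_prompt(question: str, docs: List[Document], role: str) -> str:
    """Build an LLM prompt whose context contains only role-permitted documents."""
    context = "\n".join(f"- {d.title}: {d.text}" for d in visible_documents(docs, role))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    Document("Quarterly forecast", "Confidential revenue projection.", frozenset({"ceo", "cfo"})),
    Document("Holiday policy", "Offices close on public holidays.", frozenset({"ceo", "cfo", "staff"})),
]

staff_prompt = build_prompt("What is next quarter's forecast?", docs, role="staff")
ceo_prompt = build_prompt("What is next quarter's forecast?", docs, role="ceo")
```

Filtering at retrieval time means restricted text never reaches the model at all, which is generally safer than instructing the model to withhold it.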

How Generative AI Can Add Value to Your Business

Xebia believes that generative AI (GenAI) can help businesses increase efficiency, drive innovation, personalize products and services, and gain a competitive advantage. By automating repetitive tasks and enabling personalized solutions, GenAI can save businesses time and money while improving customer satisfaction and loyalty. GenAI can streamline the workflows of engineers, scientists, researchers, and creative artists, and multimodal AI models can accept audio, video, text, or image inputs and generate new content in other modalities. And the entire software development process can be accelerated by “letting AI write the code for you.”

Here’s an example: Recently, one of our leading managing directors at Xebia Data & AI needed to send a bulk reminder message to Xebia customers via SMS. Using ChatGPT, he developed an application in the Go programming language in mere minutes, a task that would typically take days. As Giovanni Lanzani, who is behind this achievement, put it: “The speed at which I could create working code in a new language was astonishing . . . you do the high-level thinking, let LLMs handle time-consuming, simple problems, then check their output and assemble the pieces.”

The possibilities are endless, and truly exciting. But Xebia also believes that generative AI presents significant challenges in data science, cloud architecture, and foundation models and use cases, among other areas. Here’s our take:

Data infrastructures are key to enabling business change. Xebia has built data platforms and pipelines for more than twenty years, and can help you bring the GenAI revolution to your company. We offer Maturity Scan, Data Roadmap, Data Engineering, Machine Learning, and NextGen Analytics (BI) assessments, which lead to a concrete plan for harnessing the power of your data. Using our own templates for each public cloud, we can quickly deploy a data platform and build data science applications that deliver predictive insights. These help you reduce customer churn, predict demand, optimize your logistics chain, and automate business processes.

In an earlier blog post, we noted that “it takes a cloud” for AI to reach its full potential. One of the cloud’s most important benefits is its sheer heft: very few proprietary ‘glass houses’ can equal the processing power and scalability of an AWS, Azure, or Google Cloud data center. This matters because GenAI models have billions of parameters and need fast, efficient data pipelines to train. Developing and maintaining generative models requires expert personnel, major capital investment, and large-scale computing infrastructure. Xebia’s Premier partnerships with the hyperscalers, together with our global team of expert consultants, enable us to plan, build, and manage cloud solutions anywhere, at any scale.
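To put “billions of parameters” in perspective, here is a back-of-the-envelope estimate of the memory needed just to hold a model’s weights. It assumes 16-bit (2-byte) weights and deliberately ignores activations, optimizer state, and other overhead, which make training far more demanding; the 7- and 40-billion-parameter sizes are illustrative.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Memory needed only to store model weights, in gigabytes (1 GB = 10**9 bytes).

    Assumes 2 bytes per parameter (16-bit floats) by default. Activations,
    optimizer state, and caches are ignored, so real requirements are higher.
    """
    return num_parameters * bytes_per_parameter / 1e9


for billions in (7, 40):
    gb = weight_memory_gb(billions * 1e9)
    print(f"{billions}B parameters -> about {gb:.0f} GB of weights at 16-bit precision")
```

Even the smaller figure exceeds the memory of many consumer GPUs before any training overhead is added, which helps explain why this kind of work gravitates toward cloud-scale infrastructure.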

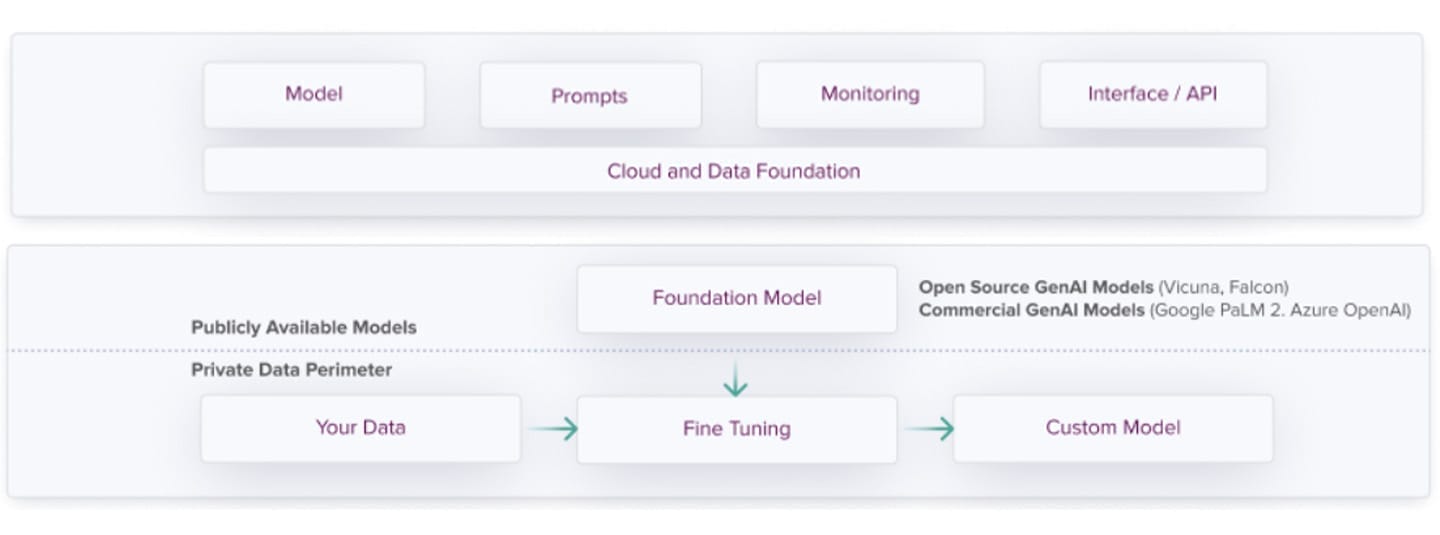

To unleash the full power of GenAI across your applications, Xebia created the GenAI Platform blueprint. This MLOps (Machine Learning Operations) platform is tailored to help you build GenAI-powered applications, manage the complete lifecycle of your models, and integrate them with your existing infrastructure. The platform consists of the following components:

In the Base Generative AI Platform, we use commercial generative AI foundation models from third-party vendors as well as open-source models, and we combine them with your data to shape the platform to your needs. The architecture ensures that your data remains within a private perimeter. Here are the details:

Model: We leverage open-source foundation models (e.g., Falcon) or commercially available models (e.g., OpenAI Chat API, Google PaLM API, Amazon Bedrock). We’ll help you integrate these out-of-the-box solutions or automate fine-tuning them on your data for personalized applications.
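One common way to keep open-source and commercial models interchangeable, as the Model component describes, is to hide each one behind a single small interface. The sketch below uses hypothetical names (`TextModel`, `EchoModel`, `ModelRegistry`) and stub models; a real adapter would wrap a vendor SDK or a locally hosted model inside `generate`. It is an illustration of the pattern, not Xebia’s blueprint code.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """Minimal interface that every model adapter, open-source or commercial, implements."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in adapter; a real one would call a hosted or local model."""

    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRegistry:
    """Maps a logical model name to a factory, so callers never import vendor SDKs."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], TextModel]] = {}

    def register(self, name: str, factory: Callable[[], TextModel]) -> None:
        self._factories[name] = factory

    def get(self, name: str) -> TextModel:
        if name not in self._factories:
            raise KeyError(f"unknown model: {name}")
        return self._factories[name]()


registry = ModelRegistry()
registry.register("open-source", lambda: EchoModel("falcon-stub"))
registry.register("commercial", lambda: EchoModel("hosted-api-stub"))

answer = registry.get("open-source").generate("Summarize our churn report.")
```

With this pattern, swapping one model for another becomes a one-line registry change rather than an edit in every application that calls it.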

Prompts: A key to success with generative AI is finding the right prompts. We’ll provide you with an environment to manage, document, and experiment with prompt templates.

Monitoring: We record prompts, responses, and user feedback to monitor performance and detect model drift. We then use this information to fine-tune the model.
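The monitoring loop described above can start very simply. This sketch assumes a hypothetical 1-to-5 user feedback score per response and flags drift when the mean score of the most recent window falls more than a chosen margin below the mean of an initial baseline. Production systems usually add statistical tests and input-distribution checks, so treat the window sizes and margin here as illustrative, not recommended values.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Interaction:
    """One logged prompt/response pair plus the user's feedback score (1 to 5)."""

    prompt: str
    response: str
    feedback: int


class FeedbackMonitor:
    """Logs interactions and compares recent feedback with an initial baseline."""

    def __init__(self, baseline_size: int = 100, window: int = 50, margin: float = 0.5) -> None:
        self.baseline_size = baseline_size  # first N interactions define "normal"
        self.window = window  # number of most recent interactions to compare
        self.margin = margin  # allowed drop in mean score before flagging drift
        self.log: List[Interaction] = []

    def record(self, prompt: str, response: str, feedback: int) -> None:
        self.log.append(Interaction(prompt, response, feedback))

    def drift_detected(self) -> bool:
        # Wait until there is a full baseline plus a full, non-overlapping recent window.
        if len(self.log) < self.baseline_size + self.window:
            return False
        baseline = mean(i.feedback for i in self.log[: self.baseline_size])
        recent = mean(i.feedback for i in self.log[-self.window :])
        return baseline - recent > self.margin


monitor = FeedbackMonitor(baseline_size=4, window=2, margin=0.5)
for score in (5, 4, 5, 4):  # baseline mean 4.5
    monitor.record("prompt", "response", score)
for score in (3, 3):  # recent mean 3.0
    monitor.record("prompt", "response", score)
print(monitor.drift_detected())  # True
```

Because the logged prompts and responses are kept alongside the scores, the interactions that triggered a drift alert can also serve as candidate data for the next fine-tuning round.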

Interface: We provide a programmatic API for GenAI model inference, packaged as a Docker container ready to be deployed as a service. This gives you control over the inference process and helps you optimize for speed.
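The inference interface described above is, at its core, a small HTTP service wrapped around a model. Below is a minimal standard-library sketch. The JSON contract (`{"prompt": ...}` in, `{"completion": ...}` out), the port, and the stub `run_model` are all assumptions for illustration; a real service would add authentication, batching, and an actual model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def run_model(prompt: str) -> str:
    """Hypothetical stand-in for real model inference (here it just upper-cases)."""
    return prompt.upper()


def handle_inference(body: bytes) -> Tuple[int, bytes]:
    """Validate a JSON request, run inference, and return (HTTP status, JSON body)."""
    try:
        payload = json.loads(body)
        prompt = payload["prompt"]
        if not isinstance(prompt, str):
            raise TypeError("prompt must be a string")
    except (ValueError, KeyError, TypeError) as exc:
        return 400, json.dumps({"error": str(exc)}).encode()
    return 200, json.dumps({"completion": run_model(prompt)}).encode()


class InferenceHandler(BaseHTTPRequestHandler):
    """Routes every POST request through handle_inference."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_inference(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Start the blocking server; binding to 0.0.0.0 lets Docker publish the port."""
    HTTPServer((host, port), InferenceHandler).serve_forever()
```

A container’s entrypoint would then call `serve()`, for example via `python -c "import app; app.serve()"` if the file were saved as a hypothetical `app.py`. Keeping request handling in a plain function also makes it easy to unit-test without starting a server.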

Cloud and Data Foundation: We supply the components needed to maintain a GenAI model end to end throughout its lifecycle, from model and prompt storage to fine-tuning automation, inference, and model monitoring.

Bottom line: the Xebia MLOps framework makes it easier to train, deploy, and update large GenAI models across different environments, reducing operational complexity and improving efficiency. Our GenAI platform enables organizations to transparently update their models, data, and prompts, with experiment tracking and the ability to reverse changes. And by monitoring model training, deployment, and updates, and by providing lineage across the whole lifecycle, it helps your teams ensure that LLMs are based on well-defined processes and best practices, leading to more reliable and predictable results.
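Experiment tracking, reversible changes, and lineage can be illustrated with a tiny registry that records which data and prompt versions each model version was built from, and that lets you roll back the “live” version. This is a hypothetical in-memory sketch (`LineageRegistry`, the version labels, and the model name are made up); dedicated tools such as the MLflow Model Registry provide hardened implementations of the same idea.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelVersion:
    """Lineage record: what a registered model version was built from."""

    version: int
    base_model: str
    data_version: str
    prompt_version: int
    notes: str = ""


class LineageRegistry:
    """Hypothetical registry tracking every model version and which one is live."""

    def __init__(self) -> None:
        self._versions: List[ModelVersion] = []
        self._active_history: List[int] = []  # versions in the order they were promoted

    def register(
        self, base_model: str, data_version: str, prompt_version: int, notes: str = ""
    ) -> ModelVersion:
        mv = ModelVersion(len(self._versions) + 1, base_model, data_version, prompt_version, notes)
        self._versions.append(mv)
        return mv

    def promote(self, version: int) -> None:
        """Make a registered version the live one, remembering the previous one."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self._active_history.append(version)

    def rollback(self) -> ModelVersion:
        """Reverse the latest promotion and return the version that is live again."""
        if len(self._active_history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._active_history.pop()
        return self._versions[self._active_history[-1] - 1]

    def active(self) -> Optional[ModelVersion]:
        if not self._active_history:
            return None
        return self._versions[self._active_history[-1] - 1]


lineage = LineageRegistry()
v1 = lineage.register("falcon-7b", data_version="2023-10", prompt_version=1)
v2 = lineage.register("falcon-7b", data_version="2023-11", prompt_version=2, notes="fine-tuned")
lineage.promote(v1.version)
lineage.promote(v2.version)
restored = lineage.rollback()  # v1 is live again
```

Keeping the full promotion history, rather than a single “current” field, is what makes rollback a one-step operation, and each record answers the lineage question of which data and prompt produced a given model.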

The benefits for your firm include:

- Greater team collaboration

- Model standardization

- Model governance and reproducibility

- Improved scalability and performance

- Control over fine-tuning and model inference

- Cost optimization

Stay tuned as we continue to offer our perspectives on GenAI and your future in the cloud.