When doing technical assessments or just small reviews of applications, you’ll often hear, "How the hell did you come up with that?" or "How could we forget this?" We know you should never ask an expert how they do things, because the incentive and point of view differ between performing a task and observing it (as explained in the book Outliers by Malcolm Gladwell). But from studying what has worked at most organizations, we know that threat modeling is often seen as the gateway drug for teams to start thinking about malicious uses of a system. Plus, it is one of the least invasive techniques for teams to get started with security.

In a nutshell, threat modeling simply tries to determine: What are we doing? Can something go wrong? If this is important enough, what do we do about this? It answers these questions by basically creating three deliverables:

- Models – Get ideas out of your head and onto a medium that teams can use to share them with others.

- Misuses – Collaborate on the things we don’t want happening.

- Mitigations – Describe the ways that misuses can be less likely to occur or have less of an impact.

If you are just starting with the concept, take your time. Don’t grab a tool or framework right away and then try to bend your way of working to fit it. It’s better to start small, adapt, and grow when needed than to keep waiting for a perfect match. There will never be one, so just start.

First steps

Start with one of the deliverables mentioned above before venturing into using all three simultaneously. The deliverable that most people enjoy from the start is the Misuses. Breaking stuff is always fun. And we want security to be fun or beneficial, especially in the beginning. You only have one shot at a first impression. Take your use cases or upcoming tasks during a sprint session and try to answer the following question:

If a legitimate user with full access to the functionality became malicious, what would that user be able to do?

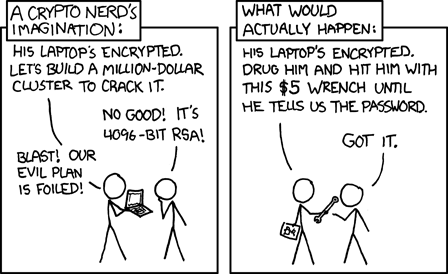

The reason we pick this persona is twofold. First, it takes away objections such as "yeah, but we have this in place" or "this is a back-end system". The team must start from the presumption that some mitigations no longer help. Second, it is a persona the team can relate to, and they understand what capabilities it has. Had we chosen the persona "a hacker has circumvented all protection and now has full access to the functionality", the starting point would sound improbable to a team. Plus, they have no idea how your evil attacker works, what skills he or she has, or what resources are available. As a matter of fact, no one knows this, which makes a chance × impact calculation a mostly useless value. But that is a different topic.

Then ask yourself, is there anything we can do to prevent this from happening or make it less likely that it would happen or impact us? If it is not that important or investing in prevention is not possible (time/resource/technical capabilities), how would we at least know that it happened? So how can we detect it? And finally, if we didn’t catch it, but it did occur, what will we do if we discover this after the fact? How would you respond? What steps do we have as a contingency? By the way, if you are using MITRE’s framework, there are more options, but start using the simple approach.
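To keep the answers to these three questions next to the misuse they belong to, a small record is enough. The sketch below is only an illustration: the `Misuse` class, its field names, and the example texts are assumptions made for this post, not part of any framework.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Misuse:
    """One thing we don't want to happen, plus our answers to the three questions."""
    description: str
    prevent: List[str] = field(default_factory=list)  # how do we stop it or make it less likely?
    detect: List[str] = field(default_factory=list)   # how would we know that it happened?
    respond: List[str] = field(default_factory=list)  # what do we do once we find out?

    def open_questions(self) -> List[str]:
        """Return the names of the questions that have no answer yet."""
        answers = {
            "prevent": self.prevent,
            "detect": self.detect,
            "respond": self.respond,
        }
        return [name for name, items in answers.items() if not items]


# Hypothetical example: only the detection question has been answered so far.
misuse = Misuse(
    description="A legitimate user turned malicious exports every invoice",
    detect=["Alert on unusually large exports"],
)
print(misuse.open_questions())  # ['prevent', 'respond']
```

An empty list is not automatically a problem. Deciding that prevention is not worth the investment is a valid outcome, as long as the team made that choice on purpose.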

Abuse Cases

Once one or two iterations have been done, we can take it to the next step. Instead of asking the question above, start adding abuse cases. There are many ways to come up with them, but the easiest and most effective one is simply taking the ‘normal’ use case and inserting the word "not" at each position in the sentence.

Use-Case:

- As a user, I can download my invoices.

Abuse-Case:

- As a not-user, I can download my invoices.

- As a user, I can-not download my invoices.

- As a user, I can download not my invoices (but something else).
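The "not" trick is mechanical enough to sketch in a few lines of code. The example below assumes the use case has already been split by hand into a subject, a verb, and an object. The `UseCase` class and the `abuse_cases` function are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class UseCase:
    """A use case split into the three parts that get negated."""
    subject: str  # who acts, e.g. "user"
    verb: str     # what they do, e.g. "download"
    obj: str      # what they act on, e.g. "my invoices"

    def sentence(self) -> str:
        return f"As a {self.subject}, I can {self.verb} {self.obj}."


def abuse_cases(use_case: UseCase) -> List[str]:
    """Return one abuse case per negated part: subject, verb, then object."""
    s, v, o = use_case.subject, use_case.verb, use_case.obj
    return [
        f"As a not-{s}, I can {v} {o}.",
        f"As a {s}, I can-not {v} {o}.",
        f"As a {s}, I can {v} not {o} (but something else).",
    ]


invoices = UseCase(subject="user", verb="download", obj="my invoices")
print(invoices.sentence())
for case in abuse_cases(invoices):
    print(case)
```

The generated sentences are deliberately awkward. They are conversation starters for the team, not finished requirements.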

If done correctly, you have negated the subject, the verb, and the object in turn, which makes the abuse cases hit confidentiality, integrity, and availability. Now take this list and separate it into concerns that need to be addressed and those that can wait. Then try to map prevent, detect, or respond mitigations to the risks you found. The next steps are about adding more value by addressing those risks within the development lifecycle. That will be covered in part 2.