This year at re:Invent, the AWS Lambda team introduced a new workflow for packaging cloud applications: OCI containers support for Lambda functions. We’ll take a quick look through what it is and how it works. And most importantly, what it means for your team when getting started with Serverless workloads today.

Containers deliver the potential to leverage existing knowledge in your teams, bridging part of the knowledge gap to Serverless. And by fitting neatly into your existing pipelines and workflows, while allowing you to bring your code in any language to Lambda with ease. Here is a quick history tour to bring us up to speed on the development of Lambda and containers.

The history of AWS Lambda

AWS made Lambda generally available way back in April 2015. Almost 6 years and over 28 pages of blog posts and announcements later, Lambda is still developing new features at a rapid pace and continuing to be at the forefront of innovation when it comes to Serverless compute. Originally, Lambda was only available in one language: Javascript. The idea behind this concept was to allow users to create simple links between AWS services, such as manipulating data in a certain way before passing it to another internal service. All of this could be done without having to run a single container or EC2 instance constantly, creating vast cost savings for businesses with small, infrequently run tasks.

Under the hood, the Lambda service downloads your packaged code from storage, then executes it. The smaller the size of the code, the faster Lambda can start executing it. As such, Lambda has strict size limits on the size of the code uploaded, although more generous now than they were at the beginning.

While tools such as Docker have existed since around 2011, and changed how we deploy applications to this day, containerization has a different strategy. As the purpose is to not only package the code but all dependent libraries, even a standard Ubuntu container image can cross 300MB. With the new AWS announcement, AWS allows you to upload container images of up to 10GB in size. This completely outstrips what was possible before, where the maximum size of code could be 250MB.

Now with full container support, it’s easier than ever to package not just your code, but all dependencies necessary to run it, all using the same tools you know and love from services like Docker. Earlier I mentioned that Lambda needs to download the code before it’s executed. This results in a phenomenon known as “cold starts”, where on the first invocation of a function, there can be a several hundred millisecond delay between the sending of a request and the start of the execution. This problem goes away after the first invocation, as the same execution environment is reused, but can become a large issue if you have specific latency requirements and the function is not run often, or you need to scale up fast.

Containerization with AWS Lambda

The most impressive feat is that the AWS Lambda team has now managed to fit whole containers up to 10GB in size, with no change to the time of a cold start. So how do they do this? Let’s take a deep dive.

When building this new functionality, the Lambda team took advantage of a few key characteristics of a container:

- Generally containers are based on generic images with a lot more dependencies than the code actually needs. Only a small section of the image actually needs to be available when executing.

- The vast majority of containers are built on top of widely available public images, where these layers can be shared amongst multiple applications from multiple customers

- Within a single business, many layers tend to be shared across applications as they work from base images defined by the organization.

With these key ideas in mind, it’s possible to quickly cut down on the amount of data that Lambda needs to download at the start of the invocation.

This is where the concept of the Sparse File System comes into play. When a Lambda container function initializes, the filesystem where the image should exist is empty. As the Lambda function starts execution, the data of the container image is streamed in real-time to the filesystem to be executed.

Alongside this, the Lambda service caches a common set of layers for all customers, and also a set of layers for the specific customers. This reduces the chance that the service needs to download content from a container repository, which could slow down execution as the filesystem waits for content to be streamed in. This process is carried out through deduplication based on encrypted data, so container layers can be shared across multiple customers while maintaining isolation between them.

Finally, the last piece of the puzzle is the release of ECR public, which distributes container images through the CloudFront content delivery network to accelerate image pulls from any region. This service pairs perfectly with the new functionality of Lambda to allow for a dedicated space for images to be uploaded and accessed natively within AWS, while also allowing Lambda-specific generic public images to be built and distributed.

Getting started with Serverless

If you missed this re:Invent 2020 talk, Marc Brooker gives a great breakdown of how Lamba optimizes container functions behind the scenes, and inspired me to write this article.

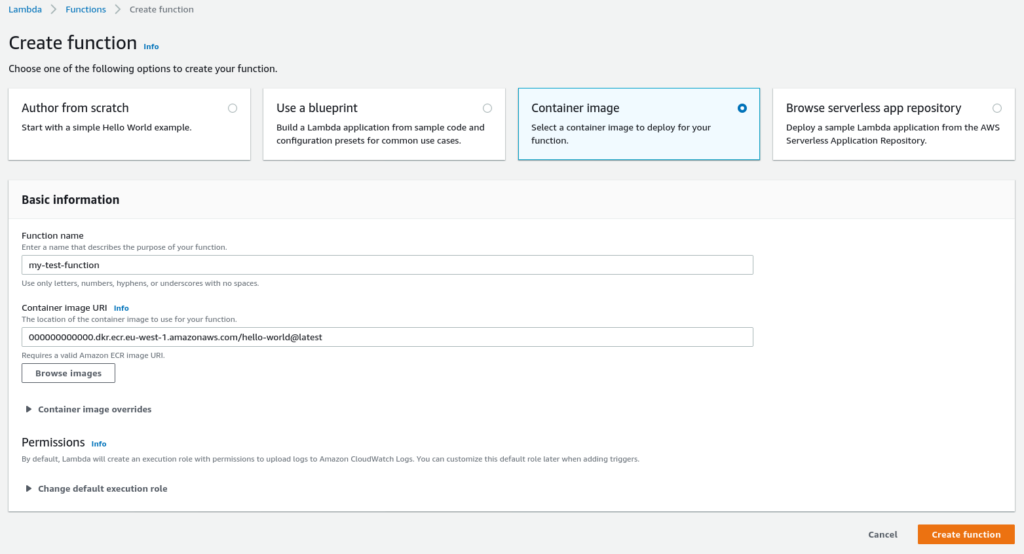

So what does this mean for you and your team? Whether you’ve already dipped your toes into Serverless or are brand new to the concept, Containers for Lambda helps vastly to bridge the knowledge gap between the containerization model you know, and the Serverless model that some teams in your organization may just be getting started in. It’s now possible to use the exact same pipelines you use to build images for your Kubernetes or ECS clusters, to build for the scalability and run-anywhere-anytime nature of Lambda. It allows you to easily bring not just your code in any language, but all your dependencies, quickly into Lambda without compromising on the performance of your code.

Of course the same rule of “more code, longer cold starts” still applies. It makes sense to keep your image sizes as small as possible and reduce the amount of data Lambda needs to read. This blog post from Rob Sutter contains loads of useful information on optimizing your images for Lambda (and containers in general).

Lambda is helping to bridge the gap between containerization and Serverless, but of course there’s a lot more to consider when moving your workload to a Serverless model. I’ve been working on fully Serverless, scalable workloads since 2018; if you’re interested in getting your team up to speed on the latest in Lambda feel free to get in touch with me or my colleagues over at Xebia!