It is very common to set up the AWS credentials used by boto3 and the AWS CLI in ~/.aws/config and ~/.aws/credentials. There are some security risks you need to be aware of, though. In this article I will explain the risks and their mitigations when scripting against your AWS account(s).

The risks involved in working this way are:

long-term credentials eventually leak

accidentally running destructive code

generic roles are overly permissive for a specific task

scripting is not infrastructure as code

without MFA this is still single-factor auth

I will go into each of these risks below.

Compromised credentials

The credentials often used in this scenario are valid indefinitely. The longer you keep a secret unchanged, the higher the chance of it being compromised. Recently the Center for Internet Security (CIS) tightened the required rotation interval for credentials and secrets in its AWS benchmarks from 90 to 45 days! If you do not rotate the credentials periodically yourself, they will keep working for as long as your account exists (put a periodic reminder in your calendar!). If you leave your laptop unlocked and unattended for just one minute, you should consider the contents of your ~/.aws/credentials compromised, especially if you hang out in places with many cloud engineers 😉.
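
As a complement to the calendar reminder, a small check can flag keys that are past their rotation date. The sketch below is only an illustration: the function name is made up, the 45-day default simply mirrors the number mentioned above, and the input is assumed to have the shape of the 'AccessKeyMetadata' list that boto3's iam.list_access_keys() returns.

```python
from datetime import datetime, timedelta, timezone


def find_stale_keys(key_metadata, max_age_days=45, now=None):
    """Return the IDs of access keys older than max_age_days.

    key_metadata is assumed to look like the 'AccessKeyMetadata' list from
    iam.list_access_keys(): dicts with an 'AccessKeyId' and a timezone-aware
    'CreateDate'. The 45-day default is a placeholder, not a recommendation.
    """
    now = now or datetime.now(timezone.utc)
    limit = timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in key_metadata
        if now - key["CreateDate"] > limit
    ]
```

With real credentials you would feed it something like iam.list_access_keys()['AccessKeyMetadata'] and rotate whatever it returns.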

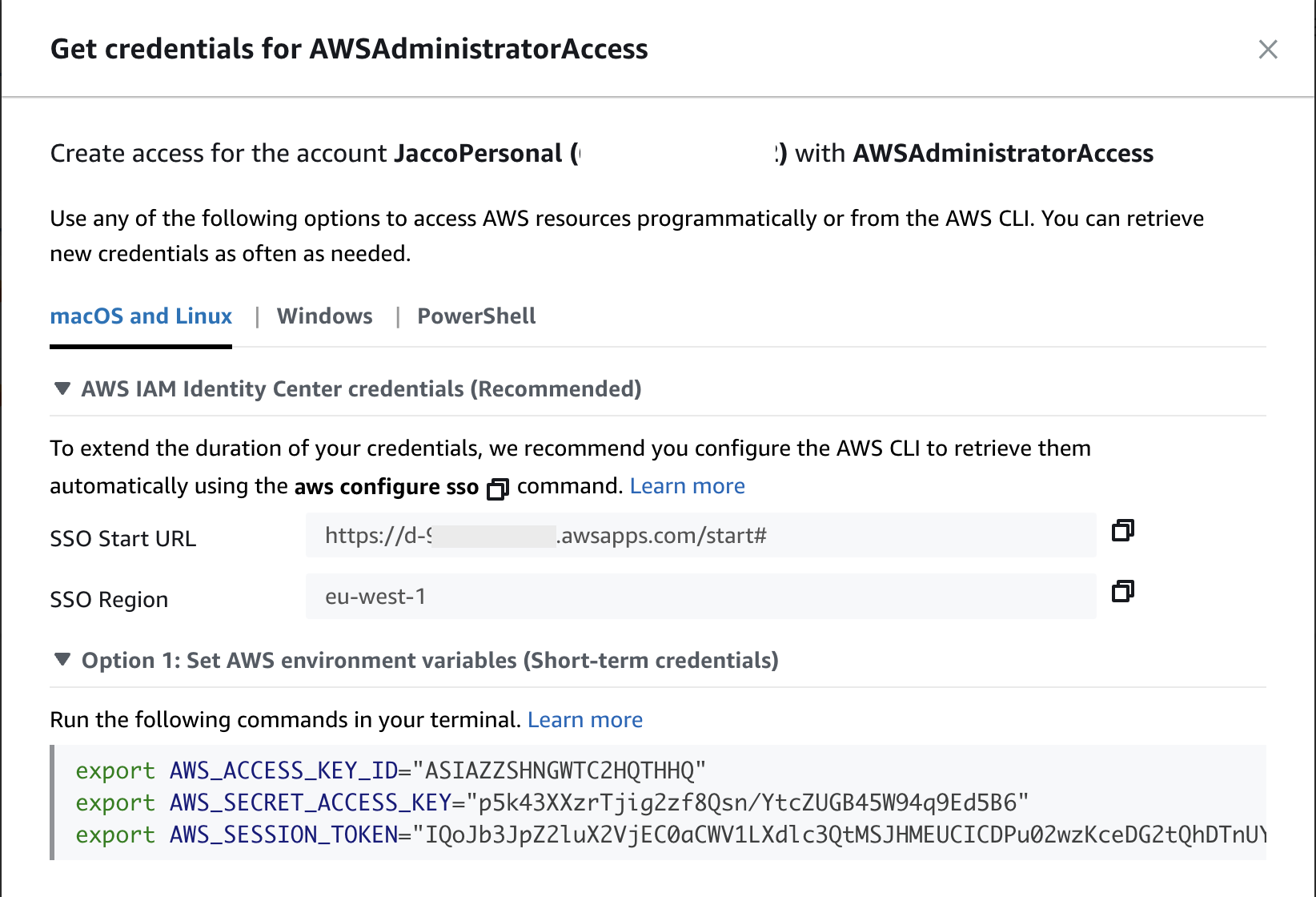

It is much safer to not use long-term credentials at all. Instead, use the short-term credentials you can get from the SSO pages once you have set that up.

You can just click the credential exports and paste them in the console you are using to execute the script. These credentials are valid for a limited time and they are only known to the console you paste them in. This is equivalent to using get_session_token in your code.

Be aware that copy-and-pasting credentials does introduce a new risk in a multi-account scenario. Maybe you have different environments in different accounts. You have no way of seeing which account the credentials you pasted belong to. This means it is best to copy-and-paste them for every script execution to prevent mistakes. Otherwise, you might accidentally run a script against the production environment and break it.
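
One way to reduce this risk is to let the script itself verify the account before it does anything. The following is a minimal sketch, not a definitive implementation: the function name and the idea of passing in the expected account ID are assumptions for illustration. It only relies on the STS get_caller_identity call, which reports the account the current credentials belong to.

```python
def assert_expected_account(sts_client, expected_account_id):
    """Raise if the active credentials belong to a different account.

    sts_client is any object with a get_caller_identity() method, such as
    the client returned by boto3.client("sts"). expected_account_id is the
    12-digit account ID (as a string) the script is meant to run against.
    """
    actual = sts_client.get_caller_identity()["Account"]
    if actual != expected_account_id:
        raise RuntimeError(
            f"Credentials are for account {actual}, "
            f"expected {expected_account_id}; refusing to continue."
        )
    return actual
```

Calling this at the top of a script, for example with boto3.client("sts") and the ID of your test account, makes a run with credentials for the wrong account fail before any other call is made.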

Accidentally running destructive code

Coincidentally, Joris Conijn mentions this risk in his blog post. It is about accidentally running code that does something destructive without any warning prompt. It doesn’t even have to be your own code: any downloaded code that uses boto3, for example in a package initialization, will just try to execute its payload. To mitigate this risk, don’t use the default profile, but set up the credentials in a named profile. That way any script that does not know the profile name will not use it; it will try to use the default profile and fail with an error. (A really nasty script could parse the credentials file, though, and try to find a working one. The boto3 library will even help the malicious code by supplying boto3.Session().available_profiles.)
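
To check whether a machine still has a default profile lying around, you could inspect the credentials file directly. This is a rough sketch using only the standard library; the function name is hypothetical, and it assumes the usual INI layout of ~/.aws/credentials, where every profile is a section and the default one is called [default].

```python
import configparser
from pathlib import Path


def has_default_profile(credentials_path=None):
    """Return True if the credentials file contains a [default] section.

    credentials_path defaults to ~/.aws/credentials. A missing file is
    treated as "no default profile".
    """
    if credentials_path is None:
        credentials_path = Path.home() / ".aws" / "credentials"
    # Interpolation is disabled so that '%' characters in values are harmless.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(credentials_path)
    return "default" in parser.sections()
```

Once only named profiles are left, a script has to opt in explicitly, for example with boto3.Session(profile_name="dev"), where "dev" is a placeholder profile name.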

It could also be your own code that is destructive. I once had a script that would remove the ingress rules of the default SecurityGroup of every Vpc in all the accounts in my organization (this is a good practice). The code contains a condition to limit its destructive payload to only the SecurityGroup that has IsDefault=True. But a single # commenting out this if would arm the code and turn it into a threat of immense magnitude to the organization! (I would not even know where to begin recovering if this armed code were to run accidentally…)

This also illustrates the next risk: overly permissive sessions.

Overly permissive sessions

Most of the time the permissions you get from the profile or SSO are very generic, or maybe even admin. Once you have a session using those credentials, you are basically scripting around with a fully loaded rifle, ready to shoot yourself in the foot. I have found it to be a good practice to scope down the permissions using a session policy.

I usually have a function in my code for getting the boto3 session like this:

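import json

import boto3


def get_session(role_name: str) -> boto3.Session:
    # Look up the current account ID with the credentials already in the environment.
    sts = boto3.Session(region_name='us-east-1').client('sts')
    account_id = sts.get_caller_identity()['Account']

    # Assume the role with an inline session policy. The effective permissions
    # are the intersection of the role's own policies and this policy.
    creds = sts.assume_role(
        RoleArn=f'arn:aws:iam::{account_id}:role/{role_name}',
        RoleSessionName='my_session',
        Policy=json.dumps({
            "Version": "2012-10-17",
            "Statement": [{
                "Effect": "Allow",
                "Action": [
                    "organizations:DescribeOrganization"
                ],
                "Resource": "*"
            }]
        })
    )["Credentials"]

    # Build a new session from the temporary, scoped-down credentials.
    return boto3.Session(
        aws_access_key_id=creds['AccessKeyId'],
        aws_secret_access_key=creds['SecretAccessKey'],
        aws_session_token=creds['SessionToken']
    )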

The only thing required is an IAM role that you can assume with enough permissions for your purpose.

In larger scripts I often even pass in the policy I want for that section of the code. This way each section has its own scoping down to further reduce the risks.
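
Passing in a policy per section could look roughly like the sketch below. The helper name is hypothetical; it only builds the policy document as JSON from a list of actions, which you would then hand to the Policy argument of assume_role in a variant of the function above.

```python
import json


def scoped_policy(actions, resources=("*",)):
    """Build a session policy document (as a JSON string).

    The policy allows only the given actions on the given resources; anything
    else is implicitly denied for the session that uses it.
    """
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": list(actions),
            "Resource": list(resources),
        }],
    })
```

A section that only lists VPCs would then request its session with scoped_policy(["ec2:DescribeVpcs"]), while a later section that changes security groups gets its own, separate policy.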

When you start adding code to your script that uses other boto3 calls and you run the code, you usually get an elaborate error message mentioning the action and resource missing from the scoped-down policy. You just evaluate them, add them to the policy and run the script again. This way you are constantly aware that you are doing things safely.

Infrastructure as Code

Infrastructure as Code (IaC) is the way to go when creating or changing things hosted in the cloud. There should be source code in a repository indicating the desired state of your infrastructure. Usually people consider CloudFormation a good format to express the desired state in. And ideally you would only want one format. But with CloudFormation you are limited to what it supports, unless you are willing to code or introduce some CustomResources into your infrastructure project. Coding CustomResources requires a fair amount of experience though. It might seem easy, but navigating all the edge cases of the CloudFormation process is sometimes not so intuitive. And CustomResources come with some maintenance responsibility as well. The Lambda functions involved often sit around in your AWS account for years. The runtime they use is probably no longer supported by the time you need to destroy your resources. You might need to roll out updates to CustomResources and test whether they still cover your use case.

That is why I often compromise on the one-format rule. You can add a YAML file to your repo containing some desired state and a post-deploy script in Python that updates the target AWS account(s) to reflect the state from the YAML file. You set up this script to run in the same pipeline where your CloudFormation is deployed. This can be a lot simpler than juggling custom resources, and I think it can still be called “Infrastructure as Code”.

Here are some examples where I chose to go for the YAML-script approach (sometimes without YAML):

deleting all rules from default security groups in VPCs

(re)setting the IAM password policy

configuring the state of SecurityHub controls to ENABLED or DISABLED

updating a Confluence page with information about your infrastructure

account creation bootstrapping / destruction

I think as long as you put the actual state in separate files (like the YAML) you can still consider it to be Infrastructure as Code.
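
For the IAM password policy case, the core of such a post-deploy script could be sketched as follows. The function name is made up for illustration. It assumes the desired state has already been loaded from the YAML file into a dict (for example with PyYAML's yaml.safe_load, which is an assumption about your tooling) and that the current state comes from iam.get_account_password_policy()['PasswordPolicy'].

```python
def find_drift(desired, current):
    """Return {setting: (current_value, desired_value)} for each difference.

    Only settings that appear in the desired state are compared; extra keys
    in the current state are ignored.
    """
    return {
        key: (current.get(key), value)
        for key, value in desired.items()
        if current.get(key) != value
    }
```

If the result is not empty, the script would log the drift and apply the complete desired state with iam.update_account_password_policy(**desired). The whole dict is passed rather than just the differences, because that call does not support partial updates: settings you leave out revert to their defaults.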