This blog is a follow-up to my previous blog post about optimizing your AWS security footprint. In this series of blogs I would like to focus on improving your security in the cloud without increasing your running costs. Or even better: being more cost-effective and thus reducing your running costs to a minimum.

Today’s topic is encryption. I will share my experience on how to optimize your data-at-rest encryption capabilities.

Job zero

Security is “job zero” for every company. As part of our apprenticeship (yes, I’m representing a Premier Consulting Partner company) we do our best to educate customers to treat security as “job zero” as well. Quite often we get questions like: “Isn’t this really expensive?” and “This will take a while to implement, won’t it?”, especially when we are talking about topics like encryption of data-at-rest. In my previous life as an on-premises guy I learnt that encryption of data-at-rest is expensive, difficult and slow. I still believe these are the ingredients of the “let’s-skip-this-requirement” recipe. When I talk with new (AWS) customers about their to-be landing zone, I notice that this topic is something that, through the eyes of the customer, is avoidable or can be postponed. I have bad news for them. Or actually, I have good news for their security posture: applying encryption-at-rest on AWS does not have to be expensive or difficult.

Werner Vogels (CTO Amazon): “Dance Like Nobody’s Watching. Encrypt Like Everyone Is”

The importance of a key management service

Data-at-rest encryption is a common requirement. Having a key management service brings comfort to both the security/compliance stakeholders and the operational/implementation teams. Without a key management service you will have a hard time implementing encryption in a uniform way and getting your compliance to the desired level. Most likely you will end up with a sub-optimal solution covering a limited scope of your data-holding assets. We are in the happy position that AWS provides such a service: AWS Key Management Service.

Streamlining the running costs

Looking at the pricing model of AWS Key Management Service (KMS), you will see two dimensions. The first dimension is the so-called “Key Storage”, which can be easily translated into a unit that represents a Customer Master Key (CMK). If annual rotation is enabled, each version counts as a unit. The current price for a unit is $1 per month. So if you host a key and enable automatic key rotation, the key is billed as one unit during the first year, two units during the second year and three units during the third year. At $1 per unit per month that is $12 + $24 + $36, so the total cost (3y) of owning this key is $72.
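To make the arithmetic explicit, here is a minimal sketch of the “Key Storage” dimension. It assumes the model described above: $1 per key version per month, one new version per year when rotation is enabled, and every version billed for as long as the key exists. The function name and its parameters are my own illustration, not part of any AWS tooling.

```python
def key_storage_cost(years, price_per_version_month=1.00, rotate_annually=True):
    """Total key-storage cost in dollars for one CMK over `years` full years."""
    total = 0.0
    for year in range(1, years + 1):
        # With annual rotation, year N carries N versions:
        # the original key material plus N-1 rotations.
        versions = year if rotate_annually else 1
        total += versions * price_per_version_month * 12
    return total


print(key_storage_cost(3))                         # 72.0
print(key_storage_cost(3, rotate_annually=False))  # 36.0
```

Without rotation the same key costs half as much over three years, which gives a feel for what rotation adds to the bill.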

The “Key Storage” dimension can be optimized by reducing the number of keys. It might be wise to have a strategy around the boundaries of keys. From a service perspective you can currently host 10,000 Customer Managed Keys (per region) in each AWS account. You will probably never hit this limit if you follow the AWS best practices and apply a workload-oriented account structure, even when creating a CMK per resource. It will affect your bill, though. So money-wise, having a key per resource doesn’t make sense.

At the other end of the spectrum you can have a single CMK per AWS account/region. This “one key to rule them all” strategy will, technically, work to a certain extent. The pitfall is that each CMK is subject to limits. The most likely scenario is that you will be confronted with either the operations-per-second limit or the maximum length of the JSON resource policy. From an operations point of view this strategy is a bit risky, since it requires serious capacity planning and the blast radius during a disaster is a lot bigger than desired.

So the optimal strategy is somewhere in between the two previously described scenarios. This is the strategy I’ve used in multiple cases, so far with success. In a classical three-tier model you will have three layers (presentation, application and data). The rule of thumb is that each layer has its own dedicated CMK. So the data volumes of the webservers will be using a single CMK, and the same applies to the application and data layers. Then you look at each component within the layers. How likely is it that you will hit a limit? For most resources (RDS, EBS, DynamoDB, etc.) the number of generated data keys is very low. So you can safely have 100 EBS volumes using the same CMK before hitting a ThrottlingException.

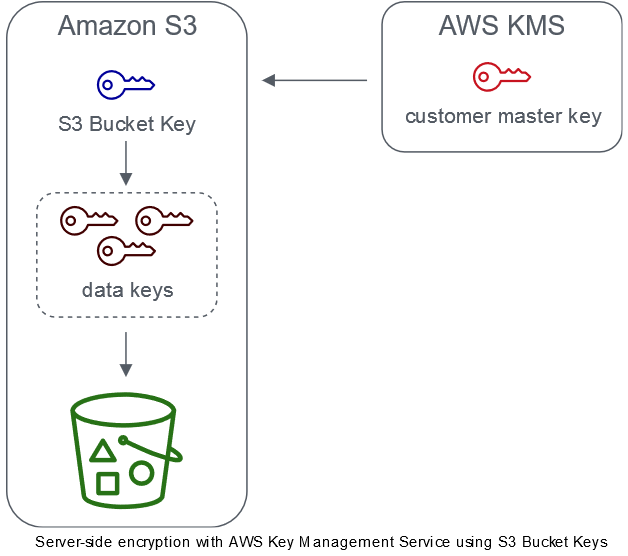

For resources with a per-object data key, such as S3, you have to make a rough guesstimate of the number of operations per second you will have. It might be useful to have a dedicated CMK for buckets with a lot of I/O operations. You might also want to consider S3 Bucket Keys. If you enable this feature, S3 will request a time-limited bucket-level key from KMS and use it to create the data keys for the objects in the bucket, instead of calling KMS for every object. This reduces the number of requests to your CMK. We demonstrated this in an earlier blog post.
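As an illustration, the sketch below builds the default-encryption configuration that switches on SSE-KMS with an S3 Bucket Key. The helper name, the key ARN and the bucket name are hypothetical placeholders. The dictionary follows the shape of the S3 PutBucketEncryption request, and the boto3 call is only shown as a comment so the snippet runs without AWS credentials.

```python
import json


def sse_kms_bucket_key_config(kms_key_arn):
    """Default-encryption rule: SSE-KMS with the given CMK and S3 Bucket Keys enabled."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,
            }
        ]
    }


# Hypothetical key ARN, for illustration only.
config = sse_kms_bucket_key_config(
    "arn:aws:kms:eu-west-1:111122223333:key/EXAMPLE-KEY-ID"
)
print(json.dumps(config, indent=2))

# With boto3 installed and credentials configured, this could be applied with:
#   boto3.client("s3").put_bucket_encryption(
#       Bucket="my-example-bucket",  # hypothetical bucket name
#       ServerSideEncryptionConfiguration=config,
#   )
```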

In addition you can have extra CMKs, for example when offloading the log files of your ALB to S3. By using a dedicated key for administration purposes you can guarantee that reaching a limit while writing log files never impacts your uptime, and vice versa.

By following this strategy you will have, as an order of magnitude, 5 CMKs per environment. This means a total of ~20 CMKs for your entire DTAP lifecycle. Translated to the total costs over three years with annual rotation enabled: (20 CMKs x $1 x 12 months) x 6 version-years (1 + 2 + 3) = $1,440, or on average $40 per month. This is a pretty fair fee if you compare the costs and benefits. Or at least, costs cannot be a valid excuse to skip encryption-at-rest.

The second dimension is called “Key Usage”. AWS will charge you for operations on the key, such as Encrypt, Decrypt, GenerateDataKey and so on. This sounds expensive, but it only applies to API calls on the Customer Master Keys. Requests on data keys are not in scope. If you encrypt an EBS volume, EC2 loads the data key (generated from the Customer Master Key) in memory and uses this data key to encrypt/decrypt data. Resources such as EBS volumes, DynamoDB tables, RDS instances etc. use ‘long-living’ data keys. For those resources the number of requests made to the CMK will be limited.

For S3 encryption this is completely different, as S3 (by default) will generate a data key for each object in S3. Depending on the number of objects you are uploading to S3 this can have a big impact on the total number of operations to the CMK.
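To get a feeling for this dimension, here is a rough back-of-the-envelope sketch. The request price is an assumption on my side ($0.03 per 10,000 requests, so please check the current KMS pricing page for your region), and it also assumes one KMS request per uploaded object and ignores any free tier. The function name is just an illustration.

```python
def monthly_usage_cost(kms_requests, price_per_10k_requests=0.03):
    """Rough 'Key Usage' cost in dollars for a number of KMS API requests.

    price_per_10k_requests is an assumed price, not taken from this blog.
    """
    return round(kms_requests / 10_000 * price_per_10k_requests, 2)


# Assumption: every uploaded object triggers one request to the CMK.
objects_uploaded = 10_000_000
print(monthly_usage_cost(objects_uploaded))  # 30.0
```

Ten million uploads a month would, under these assumptions, cost more in usage than the storage of all your keys together, which is why the number of requests deserves attention for busy buckets.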

The previous scenarios can be described as Server Side Encryption. There is an additional scenario where you leverage AWS KMS to do Client Side Encryption. In this case you use the CMK to generate a symmetric data key, which you then use to encrypt your data. The ciphertext of the data is stored in S3. Preferably you encrypt each object with a dedicated data key and remove the data key from memory once done, but in practice this might lead to performance challenges when uploading a large number of objects. In that case you might consider reusing the data key to encrypt multiple objects, e.g. use workers to consume a queue of data objects that need to be encrypted, have a data key per worker and refresh the data key every X objects or every Y seconds (whichever comes first). This way you can optimize your throughput and also reduce costs. But please first make sure that this added complexity is worth it.
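The “refresh every X objects or every Y seconds” idea could look roughly like the sketch below. The class and its parameters are hypothetical, and the key generator is a local stand-in so the example runs without AWS. In a real worker it would wrap a KMS GenerateDataKey call, and you would also need to store the encrypted copy of the data key next to each object, which is left out here.

```python
import os
import time


class RotatingDataKey:
    """One data key per worker, refreshed every `max_uses` objects or
    `max_age_seconds` seconds, whichever comes first."""

    def __init__(self, generate_key, max_uses, max_age_seconds, clock=time.monotonic):
        self._generate_key = generate_key
        self._max_uses = max_uses
        self._max_age = max_age_seconds
        self._clock = clock
        self._key = None
        self._uses = 0
        self._created_at = 0.0

    def get(self):
        now = self._clock()
        expired = (
            self._key is None
            or self._uses >= self._max_uses
            or now - self._created_at >= self._max_age
        )
        if expired:
            # Drop the reference to the old key and request a fresh one.
            self._key = self._generate_key()
            self._uses = 0
            self._created_at = now
        self._uses += 1
        return self._key


# Stand-in for a KMS GenerateDataKey call; counts how often it is invoked.
calls = []


def fake_generate_key():
    calls.append(1)
    return os.urandom(32)


worker_key = RotatingDataKey(fake_generate_key, max_uses=100, max_age_seconds=300)
for _ in range(250):
    worker_key.get()  # one call per object to encrypt
print(len(calls))  # 3 key requests instead of 250
```

The trade-off is that a leaked data key now exposes up to X objects instead of one, so keep the limits as small as your throughput allows.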

TL;DR

Encrypting your data-at-rest with AWS Key Management Service (KMS) will protect your (customers’) data in a cost-effective way. Compared to traditional storage-layer encryption, cost-effectiveness is achieved through 1) lower implementation costs (a default feature for almost all AWS data services, with ‘checkbox’ style configuration), 2) lower running costs (almost-zero operational overhead, no performance impact) and 3) a friendly pricing model (price per key and operation versus price per gigabyte).