After I added the Google Cloud Storage backend to PrivateBin, I was interested in its performance characteristics. To mimic a real PrivateBin client, I needed to generate an encrypted message.

I searched for a client library and found PBinCLI. As it is written in Python, I could write a simple load test in Locust. Nice and easy. How wrong I was.

create the test

Within a few minutes I had a test script to create, retrieve and delete a paste.

To validate the functional correctness, I ran it against privatebin.net:

$ locust -f locustfile.py --users 1 --spawn-rate 1 --run-time 1m --headless --host https://privatebin.net

The following table shows the measured response times:

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 237 | 140 | 884 | 200 |

| get-paste | 28 | 27 | 30 | 29 |

| delete-paste | 27 | 27 | 29 | 27 |

Now, I had a working load test script and a baseline to compare the performance with.

baseline on Google Cloud Storage

After deploying the service to Google Cloud Run, I ran the single-user test, and the results were promising.

$ locust -f locustfile.py --users 1 --spawn-rate 1 --run-time 1m --headless --host https://privatebin-deadbeef-ez.a.run.app

Sure, it is not lightning fast, but I did not expect it to be. The response times looked acceptable to me. After all, PrivateBin is not used often or heavily.

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 506 | 410 | 664 | 500 |

| get-paste | 394 | 335 | 514 | 380 |

| delete-paste | 587 | 443 | 974 | 550 |

multi-user run on Google Cloud Storage

Next, I wanted to know how PrivateBin with my Google Cloud Storage backend performs under moderate load. So, I scaled the load test to 5 concurrent users.

$ locust -f locustfile.py --users 5 --spawn-rate 1 --run-time 1m --headless

The results were shocking!

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 4130 | 662 | 10666 | 4500 |

| delete-paste | 3449 | 768 | 6283 | 3800 |

| get-paste | 2909 | 520 | 5569 | 2400 |

How? Why? Was there a bottleneck at the storage level? I checked the logs and saw steady response times reported by Cloud Run:

POST 200 1.46 KB 142 ms python-requests/2.25.1 https://privatebin-37ckwey3cq-ez.a.run.app/

POST 200 1.25 KB 382 ms python-requests/2.25.1 https://privatebin-37ckwey3cq-ez.a.run.app/

GET 200 1.46 KB 348 ms python-requests/2.25.1 https://privatebin-37ckwey3cq-ez.a.run.app/?d7e4c494ce4f613f

It took me a while to discover that Locust was maxing out my little M1. It was running at 100% CPU, without even spinning up a fan, just to create the encrypted messages! So I needed something more efficient.
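A quick way to catch this kind of problem earlier is to time the payload generation on its own, without any HTTP traffic. The sketch below is not PBinCLI's code: it uses the standard library's `hashlib.pbkdf2_hmac` as a stand-in for the key derivation step, and the 100,000 iterations and 32-byte key are assumptions rather than values taken from this test. The helper names `derive_key`, `cpu_ms_per_call` and `generator_utilization` are hypothetical.

```python
import hashlib
import os
import time


def derive_key(passphrase: bytes, salt: bytes, iterations: int = 100_000) -> bytes:
    """Stand-in for the per-paste key derivation (assumed parameters)."""
    return hashlib.pbkdf2_hmac("sha256", passphrase, salt, iterations, dklen=32)


def cpu_ms_per_call(func, repeat: int = 5) -> float:
    """Average CPU time in milliseconds that one call of func costs this process."""
    start = time.process_time()
    for _ in range(repeat):
        func()
    return (time.process_time() - start) * 1000 / repeat


def generator_utilization(users: int, cpu_ms: float, wait_ms: float) -> float:
    """Share of one core needed when every user alternates between
    cpu_ms of local work and wait_ms of waiting for the server."""
    return users * cpu_ms / (cpu_ms + wait_ms)


if __name__ == "__main__":
    cost = cpu_ms_per_call(lambda: derive_key(os.urandom(32), os.urandom(8)))
    print(f"payload generation: {cost:.1f} ms CPU per message")
    # 400 ms is a hypothetical server response time, not a measured value.
    print(f"5 users need {generator_utilization(5, cost, 400):.0%} of one core")
```

If the utilization approaches or exceeds 100%, a load generator that runs on a single core becomes the bottleneck itself, and the response times it reports include its own waiting time.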

k6 to the rescue!

When my brain thinks fast, it thinks Go. So I downloaded k6. The user scripts are written in JavaScript, but the engine is pure Go. Unfortunately, the interpreter is custom built and has limited compatibility with Node.js and browser JavaScript engines. This meant that I could not use any existing JavaScript library to create an encrypted message.

Fortunately, with xk6 you can call a Go function from your JavaScript code. This is what I needed! So I created the k6 privatebin extension and wrote an equivalent load test script.

build a customized k6

To use this new extension, I built k6 locally with the following commands:

go install github.com/k6io/xk6/cmd/xk6@latest

xk6 build --with github.com/binxio/xk6-privatebin@v0.1.2

k6 baseline run on Google Cloud Storage

Now I was ready to run the baseline for the single user, using k6:

$ ./k6 --log-output stderr run -u 1 -i 100 test.js

The baseline result looked pretty much like the Locust run:

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 440 | 396 | 590 | 429 |

| get-paste | 355 | 320 | 407 | 353 |

| delete-paste | 382 | 322 | 599 | 357 |

k6 multi-user run on Google Cloud Storage

What would happen if I scaled up to 5 concurrent users?

$ ./k6 --log-output stderr run -u 5 -i 100 test.js

Woohoo! The response times stayed pretty flat.

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 584 | 350 | 2612 | 555 |

| get-paste | 484 | 316 | 808 | 490 |

| delete-paste | 460 | 295 | 843 | 436 |

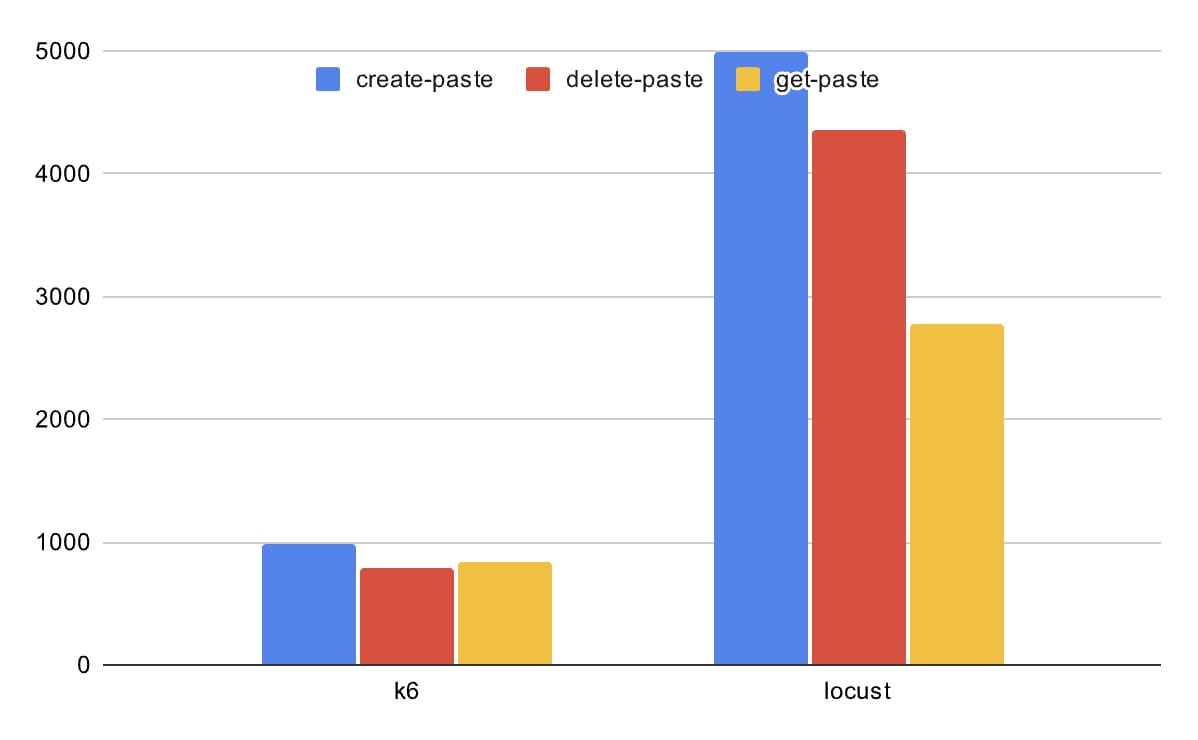

The chart below shows the sum of the medians for the single- and multi-user load test on Locust and k6:
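The sums behind the chart can be reproduced from the median columns of the four Cloud Run tables above. The following is a small sketch of that arithmetic; the function names `median_sums` and `slowdown` are just illustrative.

```python
# Median response times in ms, copied from the Cloud Run tables above.
MEDIANS = {
    ("Locust", 1): {"create-paste": 500, "get-paste": 380, "delete-paste": 550},
    ("Locust", 5): {"create-paste": 4500, "get-paste": 2400, "delete-paste": 3800},
    ("k6", 1): {"create-paste": 429, "get-paste": 353, "delete-paste": 357},
    ("k6", 5): {"create-paste": 555, "get-paste": 490, "delete-paste": 436},
}


def median_sums(medians):
    """Sum the per-request medians of each run."""
    return {run: sum(values.values()) for run, values in medians.items()}


def slowdown(sums, tool):
    """Ratio of the 5-user sum to the 1-user sum for one tool."""
    return sums[(tool, 5)] / sums[(tool, 1)]


if __name__ == "__main__":
    sums = median_sums(MEDIANS)
    for (tool, users), total in sums.items():
        print(f"{tool:6} {users} user(s): {total} ms")
    for tool in ("Locust", "k6"):
        print(f"{tool}: {slowdown(sums, tool):.1f}x slower with 5 users")
```

Going from 1 to 5 users multiplies the summed medians by roughly 7.5 under Locust (1430 ms to 10700 ms) and by roughly 1.3 under k6 (1139 ms to 1481 ms).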

It is clear that Locust was skewing the results way too much. With k6, I could even simulate 20 concurrent users, and still only use 25% of my M1.

| Name | Avg (ms) | Min (ms) | Max (ms) | Median (ms) |

|---|---|---|---|---|

| create-paste | 713 | 414 | 1204 | 671 |

| get-paste | 562 | 352 | 894 | 540 |

| delete-paste | 515 | 351 | 818 | 495 |

That is far more users than I expect on my PrivateBin installation, and these response times are very acceptable to me. Mission accomplished!

conclusion

In the future, my go-to tool for load and performance tests will be k6. It is a single binary which you can run anywhere. It is fast, and the Go extensions make it easy to include any compute-intensive task in a very user-friendly manner.