ChatGPT has become increasingly popular among professionals who rely on chat.openai.com to help them in their daily work. Despite its popularity, we saw room for improvement in areas such as privacy, flexibility, and collaboration, and wanted to make it even better for our colleagues.

To address these issues, we developed an internal tool called SlackGPT.

SlackGPT not only tackles these limitations but also gives our colleagues a unique experience when working with and building modern LLM applications.

In this blog post, we’ll dive into what SlackGPT is, why we built it, and how we use it within our team.

Keep reading to find out more!

1 – Enhanced Privacy

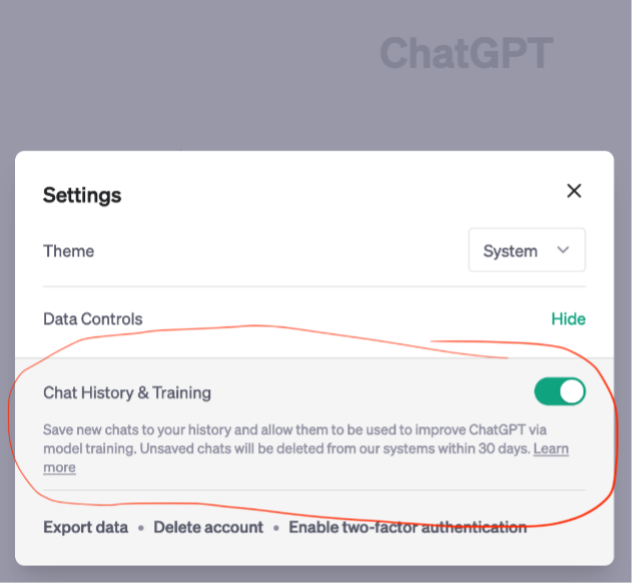

Using ChatGPT carries a risk of leaking sensitive information. By default, chat.openai.com uses your data to improve the model (Figure 1). If you accidentally paste sensitive information into ChatGPT, you could expose company secrets, as happened at Samsung (article).

You can turn this setting off, but doing so also disables the handy chat history feature. Plus, because it is on by default, many users will likely either forget to turn it off or not know it exists.

Figure 1: Default user settings of ChatGPT on chat.openai.com

To improve privacy, we built the first version of SlackGPT on top of the Azure OpenAI Service, which offers more favorable terms and conditions: prompts are not used to train the models, and only messages flagged as potentially abusive may be reviewed by Microsoft employees in the EU (link).
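For readers who have not used Azure OpenAI before, here is a minimal sketch of what a chat request to an Azure OpenAI deployment looks like at the HTTP level, using only the Python standard library. The resource name, deployment name, and API version are placeholders we picked for illustration, not SlackGPT's actual configuration, and the request is only built, not sent.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your own Azure resource and deployment names.
AZURE_RESOURCE = "my-resource"
DEPLOYMENT = "gpt-35-turbo"
API_VERSION = "2023-05-15"


def build_chat_request(messages: list[dict], api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for an Azure OpenAI deployment."""
    url = (
        f"https://{AZURE_RESOURCE}.openai.azure.com/openai/deployments/"
        f"{DEPLOYMENT}/chat/completions?api-version={API_VERSION}"
    )
    body = json.dumps({"messages": messages}).encode("utf-8")
    # Azure OpenAI authenticates key-based requests with an "api-key" header.
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


req = build_chat_request([{"role": "user", "content": "Hello!"}], api_key="<key>")
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` would return a JSON body whose reply text sits under `choices[0].message.content`.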

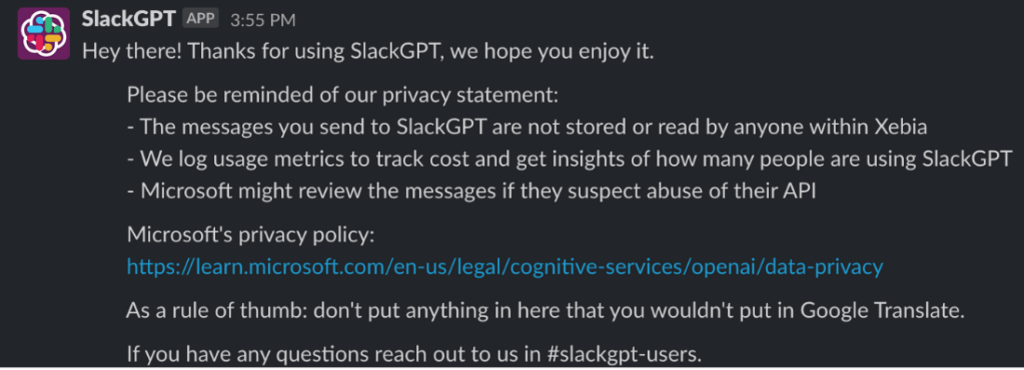

More importantly, we keep our users informed about the risks. Every day, we remind active users how their data is handled and why they should not share sensitive information (Figure 2).

Figure 2: Our daily reminder to active users about privacy and the use of SlackGPT
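A daily reminder like this boils down to a scheduled job that picks out recently active users who have not yet been reminded. The sketch below assumes we track each user's last activity and last reminder time; the function name, the 24-hour window, and the reminder wording are illustrative assumptions. Actually posting the message (for example through Slack's `chat.postMessage` Web API method) is left out.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical reminder wording.
REMINDER_TEXT = (
    "Reminder: please do not share sensitive information with SlackGPT."
)


def users_to_remind(
    last_active: dict[str, datetime],
    last_reminded: dict[str, datetime],
    now: datetime,
) -> list[str]:
    """Return users active in the past 24 hours who were not reminded in that window."""
    window_start = now - timedelta(days=1)
    due = []
    for user, active_at in last_active.items():
        reminded_at = last_reminded.get(user)
        if active_at >= window_start and (reminded_at is None or reminded_at < window_start):
            due.append(user)
    return sorted(due)


now = datetime(2023, 5, 1, 9, 0, tzinfo=timezone.utc)
last_active = {
    "alice": now - timedelta(hours=2),  # active, never reminded -> due
    "bob": now - timedelta(days=3),     # inactive -> skipped
    "carol": now - timedelta(hours=5),  # active, but already reminded -> skipped
}
last_reminded = {"carol": now - timedelta(hours=1)}
print(users_to_remind(last_active, last_reminded, now))  # ['alice']
```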

2 – Access to Advanced Features

The free version of ChatGPT is nice, but to access advanced features, like GPT-4, you need a paid subscription (ChatGPT Plus). Because SlackGPT runs on Azure, our colleagues get access to these features without individual subscriptions.

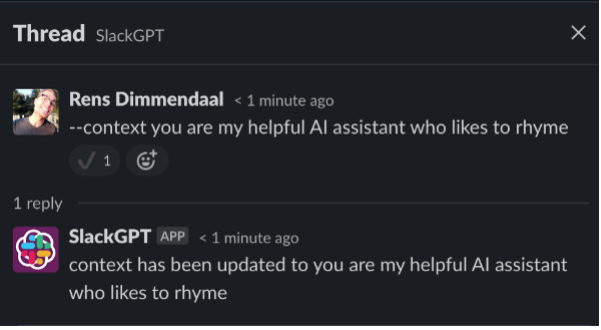

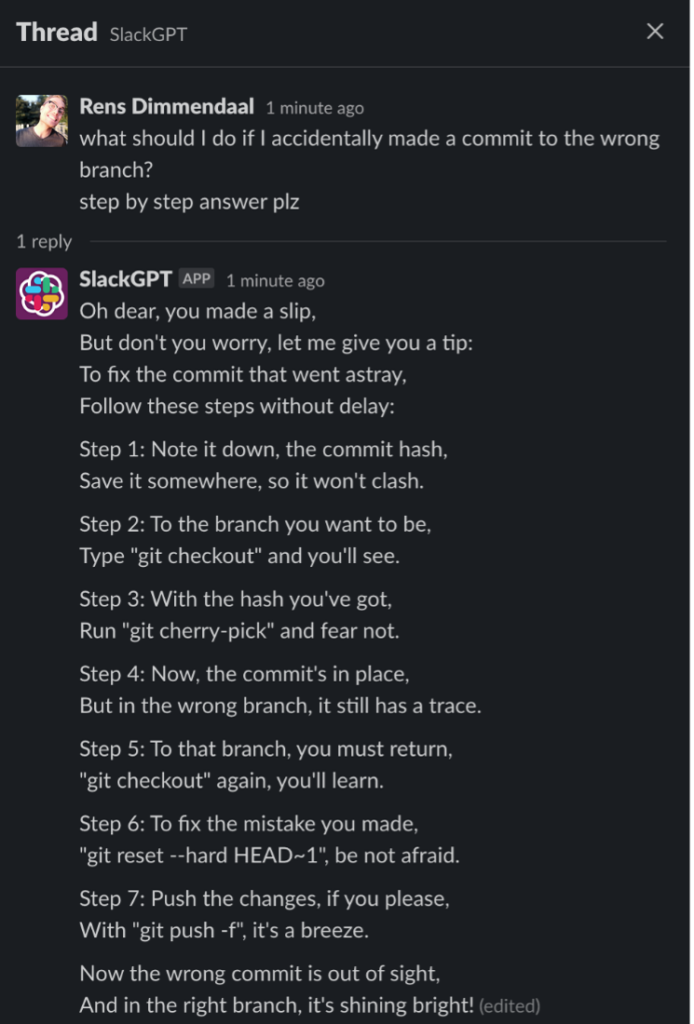

Furthermore, we can give our users more fine-grained settings. For example, users can change the context to customize the style of SlackGPT’s replies (Figures 3 & 4).
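One plausible way to implement per-user contexts is to store a custom system message per Slack user and prepend it to every conversation. The sketch below assumes that a user's "context" maps to the system message of a chat request; the class name, the in-memory storage, and the default wording are our own illustrative choices, not SlackGPT's code.

```python
# Hypothetical default context used when a user has not set their own.
DEFAULT_CONTEXT = "You are SlackGPT, a helpful assistant for our colleagues."


class ContextStore:
    """Keep one custom context (system message) per Slack user ID, in memory."""

    def __init__(self, default: str = DEFAULT_CONTEXT) -> None:
        self.default = default
        self._contexts: dict[str, str] = {}

    def set_context(self, user_id: str, context: str) -> None:
        self._contexts[user_id] = context

    def reset(self, user_id: str) -> None:
        # Fall back to the default context for this user.
        self._contexts.pop(user_id, None)

    def build_messages(self, user_id: str, history: list[dict]) -> list[dict]:
        """Prepend the user's context as a system message to the conversation history."""
        system = {"role": "system", "content": self._contexts.get(user_id, self.default)}
        return [system, *history]


store = ContextStore()
store.set_context("U123", "Always answer in rhyme.")
messages = store.build_messages("U123", [{"role": "user", "content": "What is an LLM?"}])
print(messages[0])  # {'role': 'system', 'content': 'Always answer in rhyme.'}
```

A real deployment would persist the contexts (for example in a database) so they survive restarts.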

Moreover, as we gain access to a wider variety of models from vendors such as Google and AWS, as well as open-source models from Databricks and Hugging Face, we can seamlessly integrate them into SlackGPT. This will give our colleagues better performance as new models are released, and stronger privacy if we move to self-hosted models, for example.
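Swapping in new models is easiest when the bot talks to a small, provider-agnostic interface instead of one vendor's SDK. The following is a minimal sketch of that idea under our own assumptions: `ChatBackend`, `ModelRegistry`, and the `EchoBackend` test stand-in are hypothetical names, and real backends would wrap Azure OpenAI, another vendor's API, or a self-hosted model.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Anything that can turn a list of chat messages into a reply."""

    def complete(self, messages: list[dict]) -> str: ...


class EchoBackend:
    """Stand-in backend for testing; it repeats the last message back."""

    def complete(self, messages: list[dict]) -> str:
        return "echo: " + messages[-1]["content"]


class ModelRegistry:
    """Map model names to backends so new models can be added without touching bot logic."""

    def __init__(self) -> None:
        self._backends: dict[str, ChatBackend] = {}

    def register(self, name: str, backend: ChatBackend) -> None:
        self._backends[name] = backend

    def complete(self, name: str, messages: list[dict]) -> str:
        if name not in self._backends:
            raise KeyError(f"unknown model: {name}")
        return self._backends[name].complete(messages)


registry = ModelRegistry()
registry.register("echo", EchoBackend())
print(registry.complete("echo", [{"role": "user", "content": "hi"}]))  # echo: hi
```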

Figure 3: Example of tuning your assistant for personal fun and benefit

Figure 4: Example of using my personalized rhyming assistant

3 – Improved Collaboration

SlackGPT is not limited to private messages; it can also be used in threads with other users.

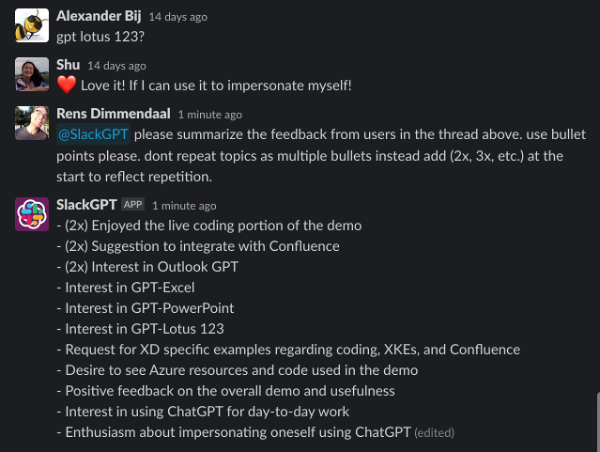

One way we’ve used it is to sum up what multiple colleagues have shared in a thread. This makes it easier to aggregate and distribute the shared perspectives (Figure 5).

Figure 5: Example SlackGPT conversation with multiple users
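To summarize a thread, the bot needs to turn the thread's messages into a single prompt that keeps track of who said what. A minimal sketch, assuming the thread arrives as a list of `{"user": ..., "text": ...}` dicts (the shape of messages returned by Slack's `conversations.replies` method) and that user IDs have already been resolved to display names; the function name and prompt wording are hypothetical.

```python
def build_thread_summary_prompt(thread: list[dict], user_names: dict[str, str]) -> list[dict]:
    """Turn a Slack thread (oldest message first) into chat messages asking for a summary."""
    # One line per message, prefixed with the author's display name (or raw ID if unknown).
    transcript = "\n".join(
        f"{user_names.get(msg['user'], msg['user'])}: {msg['text']}" for msg in thread
    )
    return [
        {
            "role": "system",
            "content": "Summarize the discussion below. Attribute each viewpoint to its author.",
        },
        {"role": "user", "content": transcript},
    ]


thread = [
    {"user": "U1", "text": "I prefer option A."},
    {"user": "U2", "text": "B is cheaper."},
]
names = {"U1": "Alice", "U2": "Bob"}
prompt = build_thread_summary_prompt(thread, names)
print(prompt[1]["content"])
```

Keeping the author names in the transcript is what lets the model attribute each perspective in its summary.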

4 – Seamless Integrations

We believe the true potential of ChatGPT lies in its integration capabilities, as reflected by OpenAI’s push for plugins in ChatGPT (link).

One use case we see is connecting SlackGPT to our internal knowledge base. That way, SlackGPT can answer questions based on our internal documentation and link to its sources.
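The usual pattern here is retrieval-augmented generation: find the most relevant documents, paste them into the prompt, and ask the model to cite them. The sketch below is a toy illustration under stated assumptions: the knowledge base and its URLs are hypothetical, and simple word overlap stands in for the embedding-based vector search a production system would typically use.

```python
import re
from collections import Counter

# Hypothetical mini knowledge base: page URL -> page text.
KNOWLEDGE_BASE = {
    "https://intranet.example.com/expenses": "How to submit travel expenses and receipts for reimbursement.",
    "https://intranet.example.com/vpn": "Set up the VPN client on your laptop to access internal systems.",
}


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the URLs of the k pages sharing the most words with the question."""
    q = tokenize(question)
    scores = {url: sum((q & tokenize(text)).values()) for url, text in KNOWLEDGE_BASE.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


def build_grounded_prompt(question: str) -> str:
    """Put the retrieved pages, with their URLs, in front of the question."""
    context = "\n\n".join(f"Source: {url}\n{KNOWLEDGE_BASE[url]}" for url in retrieve(question))
    return (
        "Answer using only the sources below and cite the source URL.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


print(retrieve("How do I submit my travel expenses?"))
```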

Another exciting use case is automatic URL scraping, which would allow SlackGPT to summarize articles that colleagues share in various channels. Since we share articles with each other all the time, a Slackbot that can write a TL;DR would be a welcome addition to our Slack.
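The first step of such a bot is spotting links in a message; the second is asking the model for a summary of the fetched article. A minimal sketch, assuming Slack's message formatting, which encodes links in message text as `<URL>` or `<URL|label>`; the function names, the character limit, and the prompt wording are hypothetical. Fetching the page and extracting its readable text are left out.

```python
import re

# Slack encodes links in message text as <URL> or <URL|label>; capture only http(s) URLs.
SLACK_LINK = re.compile(r"<(https?://[^|>]+)(?:\|[^>]*)?>")


def extract_urls(message_text: str) -> list[str]:
    """Return the http(s) URLs in a Slack message, in order, without duplicates."""
    return list(dict.fromkeys(SLACK_LINK.findall(message_text)))


def build_tldr_prompt(url: str, article_text: str, max_chars: int = 8000) -> list[dict]:
    """Ask the model for a TL;DR, truncating the article to stay within the context window."""
    return [
        {"role": "system", "content": "Write a three-bullet TL;DR of the article."},
        {"role": "user", "content": f"URL: {url}\n\n{article_text[:max_chars]}"},
    ]


text = "Worth a read: <https://example.com/llm-post|LLMs in prod> and <https://example.com/llm-post>"
print(extract_urls(text))  # ['https://example.com/llm-post']
```

Truncating by characters is a rough guard; counting tokens would be more precise.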

We are working with technologies like LangChain (link) to enable these integrations. We’ve already built proofs of concept and are working on integrating them into SlackGPT.

5 – Dogfooding: Learning from Experience

As the industry continues to explore the potential of large language models in production, SlackGPT serves as a valuable learning opportunity. By developing an internal tool, we gain first-hand experience with the challenges and questions that arise, which helps us offer better solutions to our customers later.

Conclusion

Creating SlackGPT was a great learning experience in building LLM-powered applications at scale. Next, we’ll integrate SlackGPT with our intranet to provide more relevant answers, and prepare it for integration with other LLMs from Google, AWS, and the open-source community.

Is your organization facing similar issues, or are you looking to leverage LLMs similarly?

Let our experiences benefit you, and reach out for a cup of coffee ☕️.