In this blog we configure Google Cloud Endpoints with Terraform to provide API management features such as security, monitoring and quota limiting for our application’s API. We use Terraform to make the components involved explicit and to show Endpoints in action.

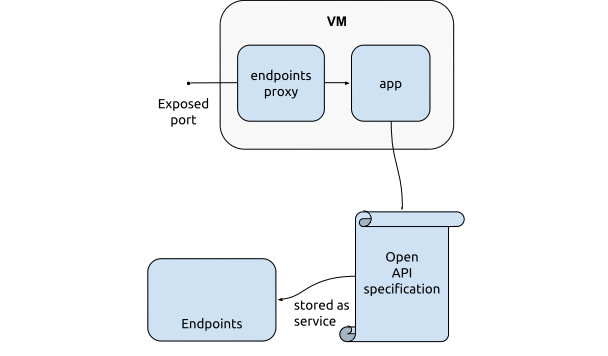

There are two ways to use Cloud Endpoints: one is to create the APIs directly in Google App Engine, the other is to front an existing application with the Google Cloud Endpoints proxy, also known as the Extensible Service Proxy. In this blog, we expose our example application with an Open API specification using this proxy.

Exposing our API through Google Cloud Endpoints

To expose our API through Google Cloud Endpoints, we just need to:

- Create the Open API specification

- Create a Google service endpoint

- Configure the proxy for our backend

Create the Open API specification

First we create the Open API specification for our backend. This file is better known as a Swagger file. It specifies all the HTTP operations, paths, and request and response types that are supported by the interface. One of the easiest ways to do this is to use the Swagger editor.
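As a rough illustration, the shell sketch below writes a minimal Swagger 2.0 file with a single operation. The function name `write_example_spec`, the `/status` path and the `getStatus` operation id are assumptions made for this example and are not taken from the actual paas-monitor specification. The `${service_name}` placeholder is left literal so that Terraform can fill it in later.

```shell
# write_example_spec FILE: write a minimal Swagger 2.0 document to FILE.
# Hypothetical helper; the /status path and getStatus operationId are
# illustrative assumptions, not the real paas-monitor interface.
write_example_spec() {
  # The quoted 'EOF' keeps ${service_name} literal for later templating.
  cat > "$1" <<'EOF'
swagger: "2.0"
info:
  title: example API
  version: "1.0.0"
host: ${service_name}
schemes:
  - http
produces:
  - application/json
paths:
  /status:
    get:
      operationId: getStatus
      responses:
        "200":
          description: current status of the backend
EOF
}
```

Calling `write_example_spec example-api.yaml` produces a file that can be opened in the Swagger editor and extended from there.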

Create a Google service endpoint

Next, we create an API service endpoint. The service needs a globally unique DNS name. We can use our own, or use one in the format YOUR_API_NAME.endpoints.YOUR_PROJECT_ID.cloud.goog. In the latter case, Google adds our endpoint to the Google-managed hosted zone cloud.goog.

    resource "google_endpoints_service" "paas-monitor" {
      service_name   = "paas-monitor.endpoints.${var.project}.cloud.goog"
      openapi_config = "${data.template_file.open-api-specification.rendered}"
    }

For the Endpoints Developer portal to work, the service name and the global IP address of the load balancer must be set in the Swagger file, in the host field and the x-google-endpoints object:

    swagger: "2.0"
    host: ${service_name}
    x-google-endpoints:
      - name: ${service_name}
        target: ${ip_address}

The actual values are passed in using the Terraform template_file data source:

    data "template_file" "open-api-specification" {
      template = "${file("paas-monitor-api.yaml")}"

      vars {
        service_name = "paas-monitor.endpoints.${var.project}.cloud.goog"
        ip_address   = "${google_compute_global_address.paas-monitor.address}"
      }
    }
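To get a feel for what the template rendering produces, the substitution can be approximated outside Terraform with sed. This is only a sketch: `render_spec` is a hypothetical helper, it handles just the two placeholders used here, and the project name and IP address in the usage line are made-up example values.

```shell
# render_spec SERVICE_NAME IP_ADDRESS: read a template on stdin and print it
# with the ${service_name} and ${ip_address} placeholders filled in.
# Hypothetical helper that mimics the template rendering; not part of Terraform.
render_spec() {
  local service_name="$1" ip_address="$2"
  # The single-quoted parts keep the placeholders literal for sed.
  # Values must not contain '|' or '&', which is true for DNS names and IPs.
  sed -e 's|\${service_name}|'"${service_name}"'|g' \
      -e 's|\${ip_address}|'"${ip_address}"'|g'
}
```

For example, `render_spec paas-monitor.endpoints.my-project.cloud.goog 203.0.113.10 < paas-monitor-api.yaml` prints the specification as the service endpoint would receive it.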

Configure the proxy for our backend

Finally, we configure the proxy for our backend. We start the proxy on the original listen port 1337 and specify the original backend for the service endpoint, as shown below in the startup script of the VMs.

    docker network create --driver bridge app-net

    docker run -d \
      --net app-net \
      --name paas-monitor \
      --env 'MESSAGE=gcp at ${region}' \
      --env RELEASE=v3.1.0 \
      --restart unless-stopped \
      mvanholsteijn/paas-monitor:3.1.0

    docker run -d \
      --net app-net \
      -p 1337:8080 \
      --restart unless-stopped \
      gcr.io/endpoints-release/endpoints-runtime:1 \
      --service=${service_name} \
      --rollout_strategy=managed \
      --backend=paas-monitor:1337

A separate Docker network is used so that the proxy can find the backend application through its DNS name without exposing the paas-monitor itself.

Adding a frontend application

Now that the proxy sits in front of the backend, plain HTTP requests for pages such as index.html will fail. Only requests that conform to the Open API specification are allowed:

    curl localhost:1337/index.html
    {
      "code": 5,
      "message": "Method does not exist.",
      "details": [
        {
          "@type": "type.googleapis.com/google.rpc.DebugInfo",
          "stackEntries": [],
          "detail": "service_control"
        }
      ]
    }

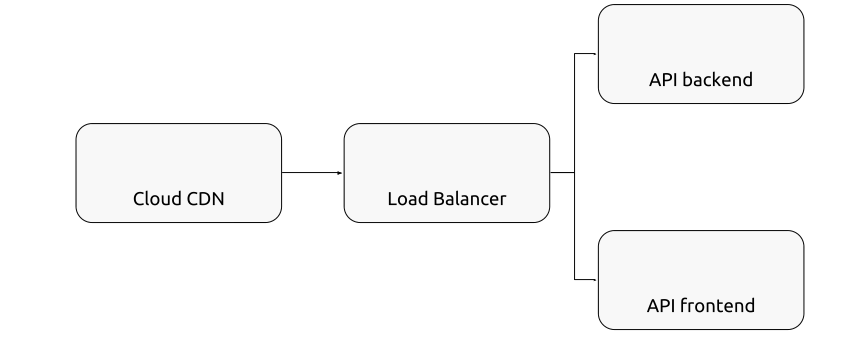

To resolve this, we put the website content in a Google storage bucket as depicted below.

The frontend application is served through the Google content delivery network, as described in our previous blog.

Installation

To install this application, install Terraform, create a Google project and configure your gcloud SDK to point to it.

Then, run the following commands:

    git clone https://github.com/binxio/blog-how-to-expose-your-api-using-google-cloud-endpoints.git
    cd blog-how-to-expose-your-api-using-google-cloud-endpoints
    export GOOGLE_PROJECT=$(gcloud config get-value project)
    terraform init
    terraform apply -auto-approve
    open http://$(terraform output ip-address)

It may take a few minutes before traffic reaches the application. Until then, HTTP 404 and 502 errors may occur.
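The waiting can be scripted instead of refreshing the browser. The sketch below polls until the first HTTP 200. The function names `probe` and `wait_for_ok` and the defaults of 30 attempts with a 10 second pause are assumptions chosen for illustration.

```shell
# probe URL: print the HTTP status code returned for URL.
# curl prints 000 when no connection could be made.
probe() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# wait_for_ok URL [ATTEMPTS] [DELAY_SECONDS]: poll URL until it returns 200.
# Returns 0 on success and 1 when all attempts are used up.
wait_for_ok() {
  local url="$1" attempts="${2:-30}" delay="${3:-10}" i=1 code
  while [ "$i" -le "$attempts" ]; do
    code=$(probe "$url")
    if [ "$code" = "200" ]; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    # 404 and 502 are expected while the load balancer is still warming up.
    echo "attempt $i: HTTP $code, retrying in ${delay}s" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

With the deployment above, `wait_for_ok "http://$(terraform output ip-address)"` returns once the application starts answering.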

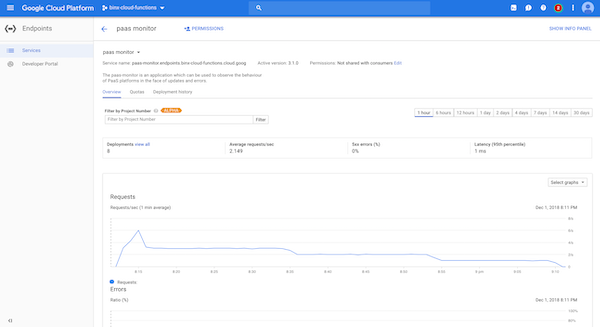

Service overview

In the Google Cloud Console we find the overview of the usage of the API:

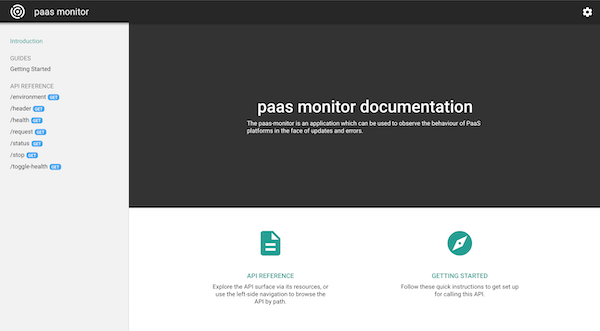

Developer console

When the Google Endpoints developer console is enabled, users of the API can read the documentation and try out the individual methods.

Conclusion

Google Cloud Endpoints is an easy way to add security, monitoring and quota limiting to custom APIs. All that is required is to upload the application interface definition and to put the Google Cloud Endpoints proxy in front of your application.

We exposed a backend application with an Open API specification running in Google Compute Engine. The process for Kubernetes and Google App Engine is slightly different. Read the documentation for further details.

Next time, we will add authentication to our API.