Cloud Build is a serverless build system. With Private Pools, it allows you to run builds in your private network (VPC). The VPC connection gives you access to the resources in your VPC. However, VPC Network Peering is not transitive, so it doesn’t give you access to managed services that are themselves peered with your VPC, such as the control plane of a private Google Kubernetes Engine (GKE) cluster or Cloud SQL. In this blog post I’ll show how to overcome this limitation and deploy to GKE using Cloud Build Private Pools.

Dealing With Transitive Peering

To access transitively peered resources, the Cloud Build documentation recommends a VPN-based solution. This solution uses Cloud Routers to exchange custom routes and route traffic to the transitive peers. The downside is that your traffic is now routed over the internet, and you pay for a VPN that you only need while a build runs.

An alternative solution is to deploy an IAP proxy. This allows your (public) Cloud Build pipeline to connect securely to your network. It addresses the cost of the VPN solution by using tiny instance(s). However, it still routes your traffic over the internet to the external HTTP load balancer.

Another alternative solution is to exchange routes whose next hop is an Internal Load Balancer. This allows you to share routes with peers and route traffic to a custom network appliance. It addresses the cost of the VPN solution by using tiny network appliance(s) and keeps your traffic in the cloud.

Solution Design

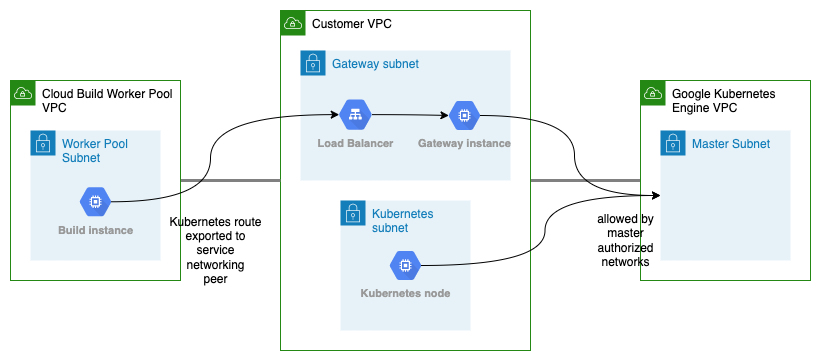

This solution uses an Internal Load Balancer to forward traffic from your Cloud Build Private Pool to the control plane (master) of your Google Kubernetes Engine cluster.

The Internal Load Balancer is backed by a NAT gateway that forwards the incoming traffic to the peered service networks. In effect, the gateway acts as a hub for your peered resources, allowing you to reach them from your Cloud Build Private Pool.

Find the code on GitHub.

Gateway Configuration

The gateway is a VM with IP forwarding enabled (the `--can-ip-forward` flag). This allows the VM to receive and send packets whose destination or source address is not its own. The kernel forwards the packets, and iptables masquerades them (source NAT) so that the replies return through the gateway.

```shell
# Allow forwarding packets to other destinations
echo 1 > /proc/sys/net/ipv4/ip_forward

# Forward all packets to other destinations:
# flush the filter table, then masquerade (source NAT) every outgoing packet
iptables -F
iptables -t nat -A POSTROUTING -j MASQUERADE
```

Routes Configuration

To allow the Cloud Build Private Pool to use the gateway, we have to share routes over the peering with the Google service producer(s): `servicenetworking-googleapis-com`. Therefore we enable custom route export on that peering and add a static route to the GKE control-plane range whose next hop is the gateway's Internal Load Balancer.

```hcl
# Export custom routes over the Google service producer(s) peering.
resource "google_compute_network_peering_routes_config" "vpc_service_networking" {
  project = var.project_id
  network = google_compute_network.vpc.name
  peering = "servicenetworking-googleapis-com"

  import_custom_routes = false
  export_custom_routes = true

  depends_on = [google_service_networking_connection.vpc_service_networking]
}

# Add a static route to the GKE master network, with the gateway's
# Internal Load Balancer as next hop.
resource "google_compute_route" "gateway_routes" {
  project      = var.project_id
  name         = "vpc-tgw-gke"
  network      = google_compute_network.vpc.name # reference creates an implicit dependency
  next_hop_ilb = google_compute_forwarding_rule.gateway.id
  priority     = 1000
  dest_range   = "10.20.0.0/24"
}
```

Make sure the exported route is less specific than the GKE control-plane range (here a `/24` that contains the cluster's `/28` control-plane CIDR) to prevent conflicts with the subnet route imported from the GKE peering.
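The "less specific" rule above can be checked before running `terraform apply`. The following minimal bash sketch (IPv4 only) tests whether one CIDR is strictly less specific than, and fully contains, another. The function names are hypothetical, and the control-plane range `10.20.0.0/28` used below is an assumed placeholder, not a value taken from the repository.

```shell
#!/usr/bin/env bash
# Sketch: check that an exported static route (OUTER) is strictly less
# specific than, and fully contains, another range (INNER) such as the
# GKE control-plane CIDR. IPv4 only; octets must not have leading zeros.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit 0) if OUTER has a shorter prefix than INNER and INNER's
# address falls inside OUTER.
cidr_contains() {
  local outer_ip=${1%/*} outer_len=${1#*/}
  local inner_ip=${2%/*} inner_len=${2#*/}
  # An equal or longer OUTER prefix is not "less specific".
  (( outer_len < inner_len )) || return 1
  # Network mask for OUTER, truncated to 32 bits (bash integers are wider).
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$outer_ip") & mask) == ($(ip_to_int "$inner_ip") & mask) ))
}
```

With these definitions, `cidr_contains 10.20.0.0/24 10.20.0.0/28` succeeds, while swapping the two arguments, or passing the same `/24` twice, fails.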

Build Results

The setup is validated by running a build that queries the nodes of the Kubernetes cluster.

The build is defined as:

```yaml
steps:
- name: gcr.io/cloud-builders/gcloud
  entrypoint: bash
  args:
  - '-c'
  - |
    gcloud container clusters get-credentials cluster1 --project <your-project> --zone europe-west1-d
    kubectl get nodes
options:
  workerPool: projects/<your-project>/locations/europe-west1/workerPools/pool-eu
```

The build logs report:

```
FETCHSOURCE
hint: Using 'master' as the name for the initial branch. This default branch name
hint: is subject to change. To configure the initial branch name to use in all
hint: of your new repositories, which will suppress this warning, call:
hint:
hint: git config --global init.defaultBranch <name>
hint:
hint: Names commonly chosen instead of 'master' are 'main', 'trunk' and
hint: 'development'. The just-created branch can be renamed via this command:
hint:
hint: git branch -m <name>
Initialized empty Git repository in /workspace/.git/
From https://source.developers.google.com/p/<your-project>/r/demo-b6e1
* branch 4e453fdb377e075cadeba98d86bd1e4dd8b8a98d -> FETCH_HEAD
HEAD is now at 4e453fd Added cloudbuild.yaml
BUILD
Already have image (with digest): gcr.io/cloud-builders/gcloud
Fetching cluster endpoint and auth data.
kubeconfig entry generated for cluster1.
NAME                                        STATUS   ROLES    AGE   VERSION
gke-cluster1-cluster1-node1-31add84f-tdjl   Ready    <none>   27m   v1.21.6-gke.1500
PUSH
DONE
```

Try it yourself by deploying the infrastructure and triggering a build using the generated demo-resources.
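`kubectl get nodes` exits successfully as long as the API server answers, even when some nodes are not `Ready`. To make the validation step fail the build in that case, its output can be piped through a small filter. This is a sketch that assumes the default `kubectl get nodes` column layout (STATUS in the second column); the function name `all_nodes_ready` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes` output on stdin and succeed only if at
# least one node is listed and every listed node has STATUS "Ready".
# Assumes the default column layout (NAME STATUS ROLES AGE VERSION).
all_nodes_ready() {
  awk '
    BEGIN   { total = 0; bad = 0 }
    NR == 1 { next }                              # skip the header line
    NF > 0  { total++; if ($2 != "Ready") bad++ } # count nodes and non-Ready ones
    END     { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

Inside the build's `bash -c` script, the function can be defined inline, and `kubectl get nodes | all_nodes_ready` can be used as the last command in place of the plain `kubectl get nodes`. Because an empty listing also fails the check, the step fails as well when `kubectl` cannot reach the cluster at all. A status such as `Ready,SchedulingDisabled` is treated as not ready.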

Discussion

It’s confusing that Cloud Build Private Pools are unable to access managed services such as Cloud SQL and GKE. I would really like a managed connectivity service to overcome transitive peering limitations while being able to control the traffic flow.

Controlling the traffic flow is also an issue in the demonstrated solution. The gateway acts as a hub and allows all peered Google service producers to reach GKE. They can all reach it because they share a single Service Networking peering and therefore all receive the custom route to GKE. Fortunately, we can apply firewall rules to prevent other Google services from reaching the transitive peers.
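Besides VPC firewall rules, the gateway itself can restrict what it forwards, narrowing the earlier "forward everything" configuration to a single source and destination. The sketch below only prints the iptables commands, so they can be reviewed before being run as root on the gateway in place of the catch-all MASQUERADE rule. It assumes that the Private Pool's workers use a dedicated source range that no other Google service shares, and that kubectl reaches the control plane on TCP port 443. The function name and the example CIDRs are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print iptables commands that make the gateway forward only traffic
# from the Private Pool range to the GKE control-plane range on TCP 443.
# Both CIDRs are caller-supplied placeholders.
gateway_forward_rules() {
  local pool_cidr=$1 gke_cidr=$2
  # 1. Drop forwarded packets by default.
  # 2. Accept replies belonging to connections that were already allowed.
  # 3. Accept new connections from the pool to the control plane on 443.
  # 4. Masquerade only that traffic, so replies return via the gateway.
  cat <<EOF
iptables -P FORWARD DROP
iptables -A FORWARD -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
iptables -A FORWARD -s ${pool_cidr} -d ${gke_cidr} -p tcp --dport 443 -j ACCEPT
iptables -t nat -A POSTROUTING -s ${pool_cidr} -d ${gke_cidr} -j MASQUERADE
EOF
}
```

For example, `gateway_forward_rules 10.30.0.0/24 10.20.0.0/28` prints the four commands for a hypothetical pool range `10.30.0.0/24`. After review, the output can be piped to `sudo sh` on the gateway.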

An alternative solution could use a proxy, as in the IAP proxy solution. In this case, however, IAP isn’t needed because your build already runs inside your cloud network. Therefore you can use regular IAM permissions to restrict who can submit a build or access the Kubernetes cluster. Additionally, this solution doesn’t require any routing configuration. Instead, that configuration is delegated to the end user, who needs to configure the proxy endpoint.

Conclusion

Deploying to Google managed services from Cloud Build Private Pools requires a custom network setup. Save money and keep your traffic in the cloud by using a custom routing appliance instead of a VPN.