Google offers global load balancers that route traffic to a backend service in the region closest to the user, to reduce latency. In this blog post, we configure an example application with a global load balancer using Terraform, in order to understand all of the components involved and to see the load balancer in operation.

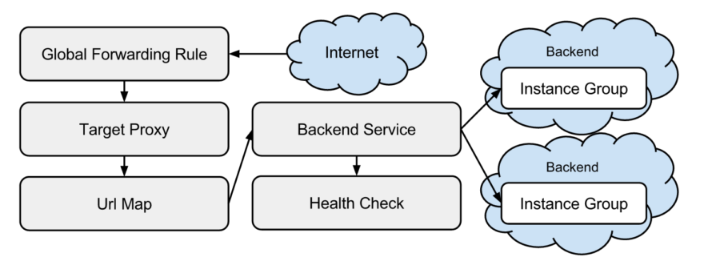

The documentation of the global HTTP(S) load balancer shows the components it requires, as drawn in the diagram above.

Using this picture as a guide, we will construct the Terraform definition starting from the top: the Internet (address).

internet

To get internet traffic to our instances, we need to reserve an external IP address. In Terraform, this is declared as follows:

resource "google_compute_global_address" "paas-monitor" {
  name = "paas-monitor"
}

global forwarding rule

To forward traffic sent to port 80 of the reserved IP address, we configure a global forwarding rule that targets our HTTP proxy:

resource "google_compute_global_forwarding_rule" "paas-monitor" {
  name       = "paas-monitor-port-80"
  ip_address = "${google_compute_global_address.paas-monitor.address}"
  port_range = "80"
  target     = "${google_compute_target_http_proxy.paas-monitor.self_link}"
}

target proxy with URL map

The target HTTP proxy uses a URL map to send the traffic to the appropriate backend:

resource "google_compute_target_http_proxy" "paas-monitor" {
  name    = "paas-monitor"
  url_map = "${google_compute_url_map.paas-monitor.self_link}"
}

resource "google_compute_url_map" "paas-monitor" {
  name            = "paas-monitor"
  default_service = "${google_compute_backend_service.paas-monitor.self_link}"
}

In this case, all traffic is sent to our default backend.
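A URL map is essentially a lookup from the request path (and host) to a backend service. The Python sketch below is a simplified model of that lookup, not Google's implementation: the `path_rules` argument and the `/static/*` rule are hypothetical and only serve to show where the `default_service` fallback applies.

```python
from typing import Dict, Optional


def resolve_backend(path: str, default_service: str,
                    path_rules: Optional[Dict[str, str]] = None) -> str:
    """Return the backend service a URL map would pick for a request path.

    Simplified model: a rule ending in "/*" matches every path that starts
    with the part before the "*", any other rule must match exactly, and the
    longest matching rule wins. Unmatched paths go to default_service.
    """
    best_len = -1
    best_service = default_service
    for pattern, service in (path_rules or {}).items():
        if pattern.endswith("/*"):
            matched = path.startswith(pattern[:-1])  # "/static/*" -> "/static/"
        else:
            matched = path == pattern
        if matched and len(pattern) > best_len:
            best_len = len(pattern)
            best_service = service
    return best_service


# The URL map in this post has no path rules, so every request hits the default.
print(resolve_backend("/health", "paas-monitor-backend"))  # paas-monitor-backend
# A hypothetical rule that is NOT part of this post's configuration.
print(resolve_backend("/static/app.js", "paas-monitor-backend",
                      {"/static/*": "static-backend"}))  # static-backend
```

With only a `default_service`, the lookup is trivial, which is exactly the situation in this example.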

backend service

A backend service consists of one or more instance groups that are possible destinations for traffic from the target proxy. In our example, there are three regional instance groups: one in the US, one in Europe and one in Asia.

resource "google_compute_backend_service" "paas-monitor" {
  name             = "paas-monitor-backend"
  protocol         = "HTTP"
  port_name        = "paas-monitor"
  timeout_sec      = 10
  session_affinity = "NONE"

  backend {
    group = "${module.instance-group-us-central1.instance_group_manager}"
  }

  backend {
    group = "${module.instance-group-europe-west4.instance_group_manager}"
  }

  backend {
    group = "${module.instance-group-asia-east1.instance_group_manager}"
  }

  health_checks = ["${module.instance-group-us-central1.health_check}"]
}
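To reason about proximity routing and fail-over, it helps to model the backend service as a list of regional backends. The Python sketch below is a deliberately simplified model, assuming that a request goes to the nearest region that still has a healthy instance and otherwise to the next nearest one. The latency figures are made up, and the real load balancer also takes backend capacity and utilization into account.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RegionalBackend:
    region: str
    rtt_ms: float            # hypothetical round-trip time from the user
    healthy_instances: int   # instances currently passing the health check


def pick_backend(backends: List[RegionalBackend]) -> Optional[str]:
    """Return the region of the nearest backend with a healthy instance."""
    candidates = [b for b in backends if b.healthy_instances > 0]
    if not candidates:
        return None  # nothing healthy: the real load balancer returns an error
    return min(candidates, key=lambda b: b.rtt_ms).region


# A user in Europe, with made-up latencies.
backends = [
    RegionalBackend("us-central1", 110.0, 3),
    RegionalBackend("europe-west4", 8.0, 3),
    RegionalBackend("asia-east1", 250.0, 3),
]
print(pick_backend(backends))  # europe-west4
backends[1].healthy_instances = 0  # every European instance fails its check
print(pick_backend(backends))  # us-central1
```

This is the behaviour the demonstration shows: when a whole region becomes unhealthy, its users are served from the next closest region.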

health checks

Health checks are used by the load balancer to determine which instances can handle traffic. The health checks are also used to assist with auto-healing and rolling updates:

resource "google_compute_http_health_check" "paas-monitor" {
  name               = "paas-monitor-${var.region}"
  request_path       = "/health"
  timeout_sec        = 5
  check_interval_sec = 5
  port               = 1337

  lifecycle {
    create_before_destroy = true
  }
}
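With `check_interval_sec = 5`, each instance is probed on `/health` every five seconds, but a single probe does not flip its state. The instance has to fail `unhealthy_threshold` or pass `healthy_threshold` consecutive probes; neither is set in the resource above, so both keep the provider default of 2 for the legacy HTTP health check. The Python sketch below models that state machine as an illustration, not as Google's prober.

```python
from typing import Iterable, List


def health_states(probe_results: Iterable[bool],
                  healthy_threshold: int = 2,
                  unhealthy_threshold: int = 2,
                  initially_healthy: bool = True) -> List[bool]:
    """Return the health state after each probe.

    The state only changes after `unhealthy_threshold` consecutive failures
    or `healthy_threshold` consecutive successes.
    """
    healthy = initially_healthy
    streak = 0  # consecutive probes that disagree with the current state
    states = []
    for ok in probe_results:
        if ok == healthy:
            streak = 0
        else:
            streak += 1
            limit = unhealthy_threshold if healthy else healthy_threshold
            if streak >= limit:
                healthy = not healthy
                streak = 0
        states.append(healthy)
    return states


# One failed probe is tolerated; two in a row mark the instance unhealthy,
# and two successes in a row bring it back.
print(health_states([True, False, True, False, False, True, True]))
# [True, True, True, True, False, False, True]
```

In this model, with a five second interval, an instance that stops answering is taken out of rotation after two probes, roughly ten seconds.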

To allow Google's health check probes to reach our instances, we need to open the firewall for the address ranges they come from:

resource "google_compute_firewall" "paas-monitor" {
  # firewall rule enabling the load balancer health checks
  name        = "paas-monitor-firewall"
  network     = "default"
  description = "allow Google health checks and network load balancers access"

  allow {
    protocol = "icmp"
  }

  allow {
    protocol = "tcp"
    ports    = ["1337"]
  }

  source_ranges = ["35.191.0.0/16", "130.211.0.0/22", "209.85.152.0/22", "209.85.204.0/22"]
  target_tags   = ["paas-monitor"]
}
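The rule admits a packet only if the target instance carries the `paas-monitor` tag, the source address lies in one of the listed ranges, and the protocol and port match. The Google Front Ends that proxy requests for the HTTP(S) load balancer also connect from 35.191.0.0/16 and 130.211.0.0/22, so in this setup the same rule lets load-balanced user traffic reach port 1337 as well. The Python sketch below evaluates just this one rule; it ignores rule priorities, deny rules and any other rules in the `default` network.

```python
import ipaddress
from typing import Iterable

# Values copied from the google_compute_firewall resource above.
SOURCE_RANGES = ["35.191.0.0/16", "130.211.0.0/22",
                 "209.85.152.0/22", "209.85.204.0/22"]
NETWORKS = [ipaddress.ip_network(r) for r in SOURCE_RANGES]
ALLOWED_TCP_PORTS = {1337}
TARGET_TAG = "paas-monitor"


def is_allowed(source_ip: str, protocol: str, port: int,
               instance_tags: Iterable[str]) -> bool:
    """Return True if this single rule admits the packet to the instance."""
    if TARGET_TAG not in set(instance_tags):
        return False  # the rule only applies to instances with the tag
    addr = ipaddress.ip_address(source_ip)
    if not any(addr in net for net in NETWORKS):
        return False  # source is not one of Google's probe ranges
    if protocol == "icmp":
        return True  # ICMP has no ports; the rule allows all of it
    return protocol == "tcp" and port in ALLOWED_TCP_PORTS


print(is_allowed("35.191.10.20", "tcp", 1337, ["paas-monitor"]))  # True
print(is_allowed("8.8.8.8", "tcp", 1337, ["paas-monitor"]))       # False
```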

instance groups

To direct traffic to an instance in the nearest region, regional managed instance groups are used. These groups spread the virtual machines across all zones in the region, protecting against a single zone failure. Each regional instance group is configured with an instance template, a named port and a health check.

resource "google_compute_region_instance_group_manager" "paas-monitor" {
  name               = "paas-monitor-${var.region}"
  base_instance_name = "paas-monitor-${var.region}"
  region             = "${var.region}"
  instance_template  = "${google_compute_instance_template.paas-monitor.self_link}"

  version {
    name              = "v1"
    instance_template = "${google_compute_instance_template.paas-monitor.self_link}"
  }

  named_port {
    name = "paas-monitor"
    port = 1337
  }

  auto_healing_policies {
    health_check      = "${google_compute_http_health_check.paas-monitor.self_link}"
    initial_delay_sec = 30
  }

  update_strategy = "ROLLING_UPDATE"

  rolling_update_policy {
    type            = "PROACTIVE"
    minimal_action  = "REPLACE"
    max_surge_fixed = 10
    min_ready_sec   = 60
  }
}
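Spreading instances evenly over the zones is what limits the impact of a zone outage. The Python sketch below shows the arithmetic of an even spread and what survives when one zone goes down. The zone names and the target size of 7 are hypothetical; the resource above does not set a target size, and Compute Engine itself chooses the zones and places the instances.

```python
from typing import Dict, List


def spread_evenly(target_size: int, zones: List[str]) -> Dict[str, int]:
    """Distribute instances over zones so that the counts differ by at most one."""
    base, extra = divmod(target_size, len(zones))
    return {zone: base + (1 if i < extra else 0) for i, zone in enumerate(zones)}


def survivors_after_zone_outage(spread: Dict[str, int], failed_zone: str) -> int:
    """Number of instances still running when one zone fails."""
    return sum(n for zone, n in spread.items() if zone != failed_zone)


zones = ["zone-a", "zone-b", "zone-c"]  # hypothetical zone names
spread = spread_evenly(7, zones)
print(spread)                                         # {'zone-a': 3, 'zone-b': 2, 'zone-c': 2}
print(survivors_after_zone_outage(spread, "zone-a"))  # 4
```

Even in the worst case, losing the fullest zone leaves more than half of the instances serving traffic while auto-healing recreates the rest.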

In this example, regional instance groups are deployed to us-central1, europe-west4 and asia-east1.

the backend

The backend application for this example is the paas-monitor, a web application that reports the identity of the responding server and the latency of each call. It allows the user to toggle the health status and even kill the process on request, to observe the behaviour of the hosting platform. It is packaged as a Docker image and can be run as follows:

docker run -d -p 1337:1337 \
  --env 'MESSAGE=gcp at ${var.region}' \
  --env RELEASE=v3.0.4.16 \
  mvanholsteijn/paas-monitor:latest

This command is specified as the startup command in the compute instance template, where Terraform substitutes `${var.region}` with the region of the instance group.
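To experiment with health checks and fail-over locally without the Docker image, a tiny stand-in for the application is enough. The Python sketch below is not the paas-monitor code: it only mimics the two behaviours this post relies on, a `/health` endpoint that can be switched between healthy (200) and unhealthy (503), and a response that identifies the server through the `MESSAGE` environment variable. The `/toggle-health` path and the JSON fields are made up for this sketch.

```python
import json
import os
import socket
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class MonitorHandler(BaseHTTPRequestHandler):
    healthy = True            # shared health flag, flipped by /toggle-health
    lock = threading.Lock()

    def do_GET(self):
        if self.path == "/health":
            with MonitorHandler.lock:
                ok = MonitorHandler.healthy
            self._send(200 if ok else 503, {"healthy": ok})
        elif self.path == "/toggle-health":  # hypothetical endpoint
            with MonitorHandler.lock:
                MonitorHandler.healthy = not MonitorHandler.healthy
                ok = MonitorHandler.healthy
            self._send(200, {"healthy": ok})
        else:
            # Identify the responding server, like the MESSAGE in the docker command.
            self._send(200, {"message": os.environ.get("MESSAGE", ""),
                             "host": socket.gethostname()})

    def _send(self, status, body):
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def make_server(port: int = 1337) -> ThreadingHTTPServer:
    """Create (but do not start) the server on all interfaces."""
    return ThreadingHTTPServer(("", port), MonitorHandler)
```

Calling `make_server().serve_forever()` serves on port 1337, the port used by the health check and the firewall rule.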

demonstration

In the following video, we demonstrate the application and the capabilities of the global load balancer: proximity routing to the region/zone nearest to the user, fail-over and rolling updates.

installation

If you want to install this application in your own project, install Terraform, create a Google Cloud project and configure your gcloud SDK to point to it.

Then, run the following commands:

git clone https://github.com/binxio/deployment-google-global-load-balancer-application.git
cd deployment-google-global-load-balancer-application
export GOOGLE_PROJECT=$(gcloud config get-value project)
terraform init
terraform apply -auto-approve
open http://$(terraform output ip-address)

It may take a few minutes before traffic reaches your application. Until then, you will encounter HTTP 404 and 502 errors.
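If a script needs to use the deployment right after `terraform apply`, it has to wait out these errors. A minimal Python sketch using only the standard library; the timeout and poll interval are arbitrary choices, and the URL would be the address printed by `terraform output ip-address`.

```python
import time
import urllib.request


def wait_until_ready(url: str, timeout_sec: float = 600,
                     interval_sec: float = 10) -> bool:
    """Poll url until it returns HTTP 200 or the timeout expires.

    404 and 502 responses and connection errors are treated as
    "not ready yet" while the load balancer is being provisioned.
    """
    deadline = time.monotonic() + timeout_sec
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            pass  # HTTPError and URLError are both subclasses of OSError
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_sec)
```

For example, `wait_until_ready("http://203.0.113.10/")` (a placeholder address) returns `True` once the application answers through the load balancer.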

conclusion

Although the documentation speaks of a Global HTTP(S) Load Balancer, we did not create or configure “the” load balancer in our project. We configured all of the components drawn in the diagram in our Terraform plan, and if you look carefully, these are just a number of traffic routing constructs that Google Cloud Platform uses to route traffic to our application.
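One way to check this is to write down, by hand, which resource passes traffic to which, and follow the chain from the address to the instance groups. The dictionary in the Python sketch below is a hand-made summary of the references in the Terraform code of this post, not something Terraform or Google Cloud produces.

```python
from typing import Dict, List

# Next hop for traffic, derived by hand from the Terraform references above.
NEXT_HOP: Dict[str, List[str]] = {
    "google_compute_global_address.paas-monitor":
        ["google_compute_global_forwarding_rule.paas-monitor"],
    "google_compute_global_forwarding_rule.paas-monitor":
        ["google_compute_target_http_proxy.paas-monitor"],
    "google_compute_target_http_proxy.paas-monitor":
        ["google_compute_url_map.paas-monitor"],
    "google_compute_url_map.paas-monitor":
        ["google_compute_backend_service.paas-monitor"],
    "google_compute_backend_service.paas-monitor": [
        "module.instance-group-us-central1",
        "module.instance-group-europe-west4",
        "module.instance-group-asia-east1",
    ],
}


def trace(resource: str, depth: int = 0) -> List[str]:
    """Return the traffic path starting at resource, one indented line per hop."""
    lines = ["  " * depth + resource]
    for nxt in NEXT_HOP.get(resource, []):
        lines.extend(trace(nxt, depth + 1))
    return lines


print("\n".join(trace("google_compute_global_address.paas-monitor")))
```

None of the nodes is a "load balancer"; the balancing behaviour emerges from the chain as a whole.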

In our next blog post, we will show how to configure the content delivery network in front of it.