Concurrency is the bread and butter of current software architecture. Even simple systems need to communicate with databases or web services, bringing new challenges in terms of reliability and data consistency. When microservices and caches enter the game, the landscape becomes even harder. In those situations, it’s very useful to think about our system at a higher level, focusing on the main features and not on the tiny details like the particular library you’re using for serialization. Formal methods are a really valuable tool in that journey.

Formal methods is an umbrella term for any technique applied during the development lifecycle and based on logic, theoretical computer science, or mathematics. The type system in a statically-typed programming language is a good example: in this case, the underlying logical foundation is type theory. Most forms of analysis, like nullability in Kotlin, checked exceptions in Java, and Rust’s borrow checker are also shaped by logic.

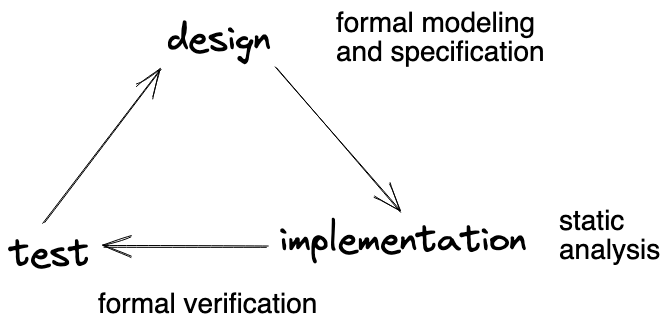

Since formal methods are such a blurry concept, it’s very common to refer to them using different names depending on the phase in which they are applied. The following diagram shows three broad categories, but as usual, they’re all quite connected. For example, trying to formally verify some piece of code may help us refine our model, which in turn could feed additional information to a particular static analysis.

Formal methods support a broad range of technologies and products, not only concurrent systems. Hardware design and verification is another area where these techniques have a bigger presence. In our case, we use our experience in designing distributed architectures to leverage the quality of the products developed in those areas.

Modeling

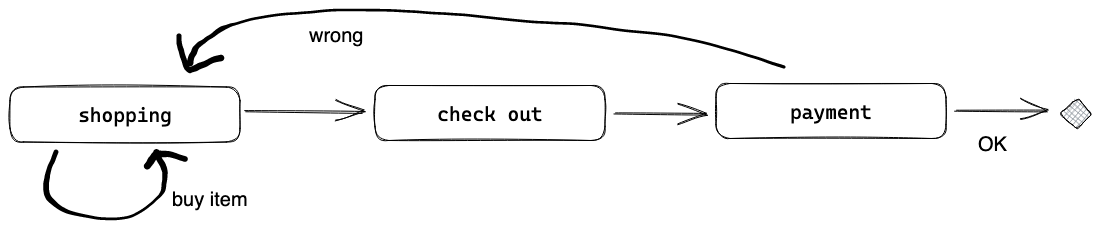

One of the hardest parts of a concurrent system is ensuring data consistency and integrity over a sequence of steps, each of them potentially performed by a different actor. Being more concrete, let’s focus on the system depicted below, which describes a very simple webshop. Each rectangle corresponds to a state in which the operation may be: the client shops by adding items, and at some point checks out, and then pays.

By visualizing the system in this form, we’re already removing lots of accidental complexity (for our purposes), like how we know which items are available and were selected at each step (maybe a mobile app, maybe a website). But even with such a simple model, we can investigate potential problems, like double checkouts or correct payments that don’t lead to a shipment. Every check that had to be added to our model will be replicated, in one form or another, in the actual implementation.

The process doesn’t stop there; we iterate our model until we’re confident of its correctness. In doing so, model checkers like TLA+ and Alloy provide an essential helping hand. Given a description like the diagram above, you can start posing questions to the model checker, like, “Is there a sequence of actions that leads me to sell more items than those available in the store?” If the answer is affirmative, an example of such a sequence is given. This has significant practical implications: in the TLA+ website, we can find two examples of bugs within ElasticSearch and Kafka – two important components in distributed architectures – which were found because of the model, even before any user reported any incorrect behavior. As another witness to the power of these tools, both Amazon and Microsoft have used them to increase their confidence on some of the algorithms underpinning their cloud offerings.

Static analyses

Formal modeling focuses mostly on understanding the system at a deeper level, usually during the design phase; the counterpart for the implementation phase are static analyses. With those, we can catch potential problems while writing or compiling the code before it’s run in any form. As discussed above, type systems are a well-known example, usually integrated in editors and IDEs.

In the functional programming devision at Xebia, we’re happy users of powerful type systems like Scala’s, which allow us to outsource checking many of the data invariants to the compiler. Not only that, a type system helps us by removing boilerplate with automatic derivation and other techniques. Refinement and dependent types is the next step in the ladder, allowing the developer to describe each data piece’s properties in isolation and the relations between them.

Alas, there’s a huge disconnect between what research offers and what the “common developer” has available in their everyday job. An important reason for this gap is the lack of tools, which are often half-baked or geared towards experts. Projects like Arrow Analysis bridge this gap by bringing useful and well-proven ideas to languages like Kotlin.

Formal methods @ Xebia

Even though there are other companies in the formal methods space, our aim is to bring these methods to the “common developer,” not keeping it hidden from everybody but a few experts. If your team wants to bring this additional level of understanding and confidence in your software, we’re ready to help you with formal methods. Conversely, if you want to help companies level up their formal game, we have open positions for formal methods engineers.