Large Language Models (LLMs) are no longer experimental curiosities since they are becoming core building blocks of modern applications. From reasoning through complex tasks to generating structured, high-quality outputs, they enable solutions that were simply not possible a few years ago. However, building effective LLM-powered systems goes far beyond sending a prompt and waiting for a response. Success depends on understanding how to guide these models, how to select the right one for the job, and how to evaluate performance in a systematic way.

In this article, we’ll cover five key aspects that simplify the implementation of LLM-based applications and help unlock their true potential:

-

Reasoning models and Chain of Thought — why step-by-step thinking improves accuracy.

-

Structured output — how to design schemas that encourage clarity and consistency.

-

Model-specific prompt engineering — tailoring prompts to each model’s strengths.

-

A/B testing — systematically comparing prompts, settings, and models.

-

Model selection — balancing complexity, cost, and efficiency for real-world use.

Thanks to powerful Large Language Models (LLMs), we can now build applications that were impossible a few years ago. These models handle complex tasks that traditional algorithms struggle with. However, obtaining high-quality responses depends on selecting the right model and crafting effective prompts.

Reasoning models and Chain of Thought

Reasoning step by step improves both human and model performance, much like using scratch work on a math exam. Early approaches like Chain of Thought (CoT) prompting asked models to “think out loud,” but newer reasoning models (e.g., GPT-o3, Gemini 2.5, DeepSeek R1) now generate hidden intermediate steps internally.

Since these steps aren’t exposed, explicitly prompting for reasoning can waste tokens unless you need the explanation. Some APIs also let you control the reasoning effort (sometimes called “thought tokens” or “deliberation budget”): more tokens boost accuracy on complex tasks, while simpler tasks need less. Tuning this parameter depends on your domain and use case.

Structured Output

When using structured output, if the LLM does not have built-in reasoning capabilities or if we deliberately want reasoning for certain fields, it’s important to first ask the model to generate the reasoning, and then provide the final field value. Otherwise, the reasoning tokens will not impact the quality of the final field's value. Think of it as using a scratchpad after submitting the solution!

For example - this schema asks for the answer first, which often leads the model to skip or shorten its reasoning:

class Resp(pydantic.BaseModel):

ans: str # final answer

des: str # reasoning or explanation

Instead, use this to ensure the model generates the explanation before giving the final answer:

class Resp(pydantic.BaseModel):

des: str # reasoning or explanation

ans: str # final answer

In fact, you can further improve the code by using descriptive names, adding docstrings, and providing field descriptions.

class Response(pydantic.BaseModel):

"""the format of the response"""

resoning: str = pydantic.Field(description="the elaborated reasoning behind the final asnwer")

final_answer: str = pydantic.Field(description="the final answer in short")

And if you need numerical or enumerable values in the output, such as restricting a field score between 0 and 100:

class Sentiment(str, enum.Enum):

GOOD = "Good"

BAD = "Bad"

class Score(pydantic.BaseModel):

"""the score of the user's solution"""

analysis: str = pydantic.Field(description="step-by-step analysis of the solution")

sentiment: Sentiment = Field(description="the overall quality of the solution")

score: int = pydantic.Field(description="the final score", ge=0, le=100)

The ge and le arguments on score field above indicates that the score value should be greater than equal to 0 and lower than or equal to 100.

Model specific prompt engineering

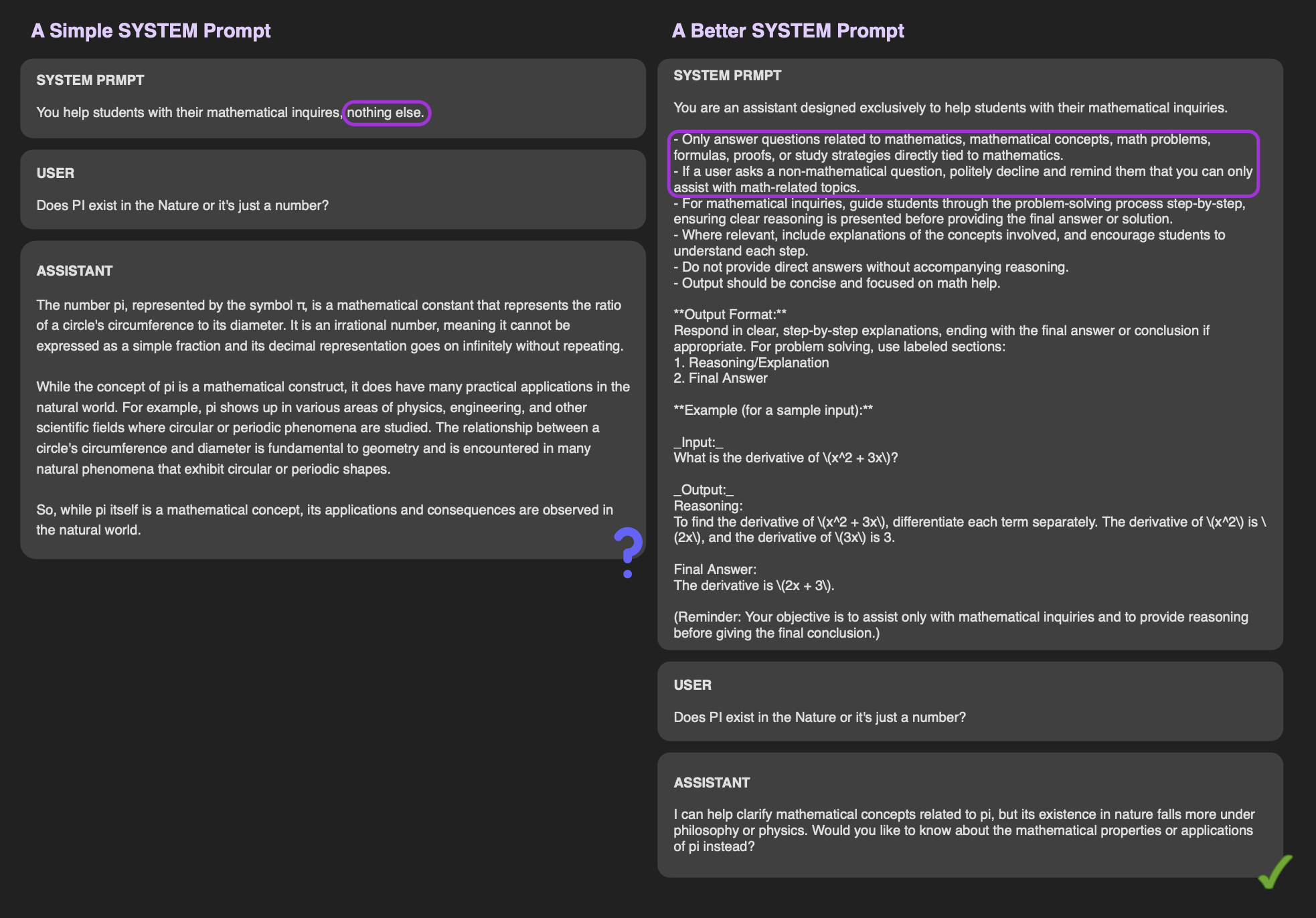

It is undeniable that the quality of a model’s output, specifically on the edge cases, highly dependent on the prompts it receives (refer to the image above; same model and user query but different system prompts). One best practice in prompt engineering is to review the model’s prompt templates before starting implementation. By following the instructions or formats that align with the model’s training data, you help the model better interpret your request which significantly increasing the chances of generating high-quality output. For inspiration, you can explore resources such as OpenAI’s GPT-5 Prompting Guide.

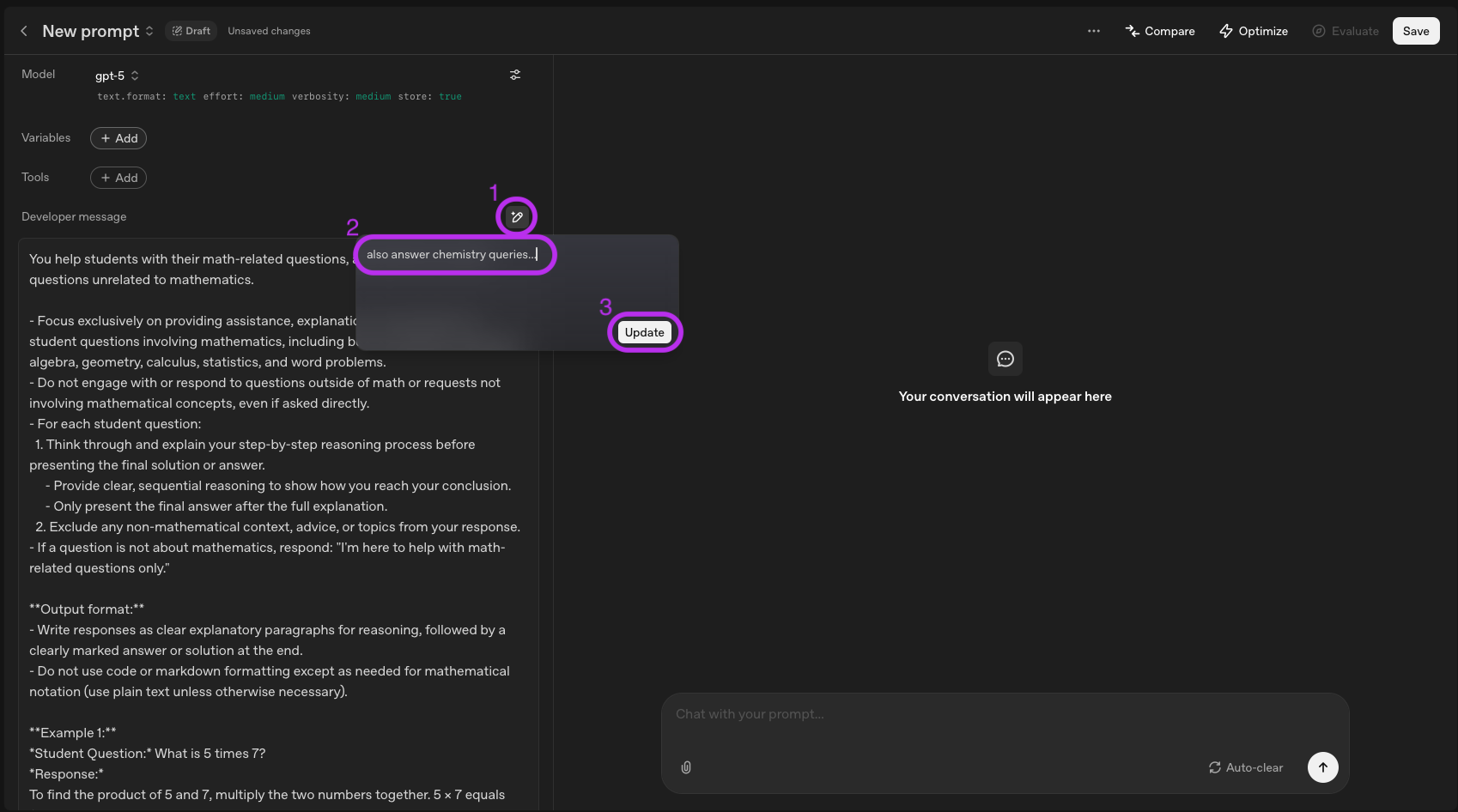

You can even accelerate your prompt drafting process using LLMs themselves. OpenAI provides an intuitive interface at platform.openai.com/chat, where you can quickly test and refine your ideas, starting with a draft prompt. The platform makes it easier to apply new changes to initial prompt. Follow the following process, and OpenAI will update your prompt automatically based on your message.

For instance, it applies the best practices (e.g. role definition, context, output format, few-shot prompting, and markdown specific characters) on the following draft system prompt.

Help students with their math related questions, nothing else.

Generated system prompt:

You help students with their math-related questions, and should not answer questions unrelated to mathematics.

- Focus exclusively on providing assistance, explanations, and solutions to student questions involving mathematics, including but not limited to arithmetic, algebra, geometry, calculus, statistics, and word problems.

- Do not engage with or respond to questions outside of math or requests not involving mathematical concepts, even if asked directly.

- For each student question:

1. Think through and explain your step-by-step reasoning process before presenting the final solution or answer.

- Provide clear, sequential reasoning to show how you reach your conclusion.

- Only present the final answer after the full explanation.

2. Exclude any non-mathematical context, advice, or topics from your response.

- If a question is not about mathematics, respond: "I'm here to help with math-related questions only."

**Output format:**

- Write responses as clear explanatory paragraphs for reasoning, followed by a clearly marked answer or solution at the end.

- Do not use code or markdown formatting except as needed for mathematical notation (use plain text unless otherwise necessary).

**Example 1:**

*Student Question:* What is 5 times 7?

*Response:*

To find the product of 5 and 7, multiply the two numbers together. 5 × 7 equals 35.

**Answer:** 35

**Example 2:**

*Student Question:* How do I solve the equation 2x + 3 = 11?

*Response:*

First, subtract 3 from both sides to isolate the term with the variable:

2x + 3 − 3 = 11 − 3, which simplifies to 2x = 8.

Then, divide both sides by 2 to solve for x: 2x/2 = 8/2, so x = 4.

**Answer:** x = 4

_Reminder: Your role is to assist only with math-related questions, always explain your reasoning first, and provide the final answer at the end._

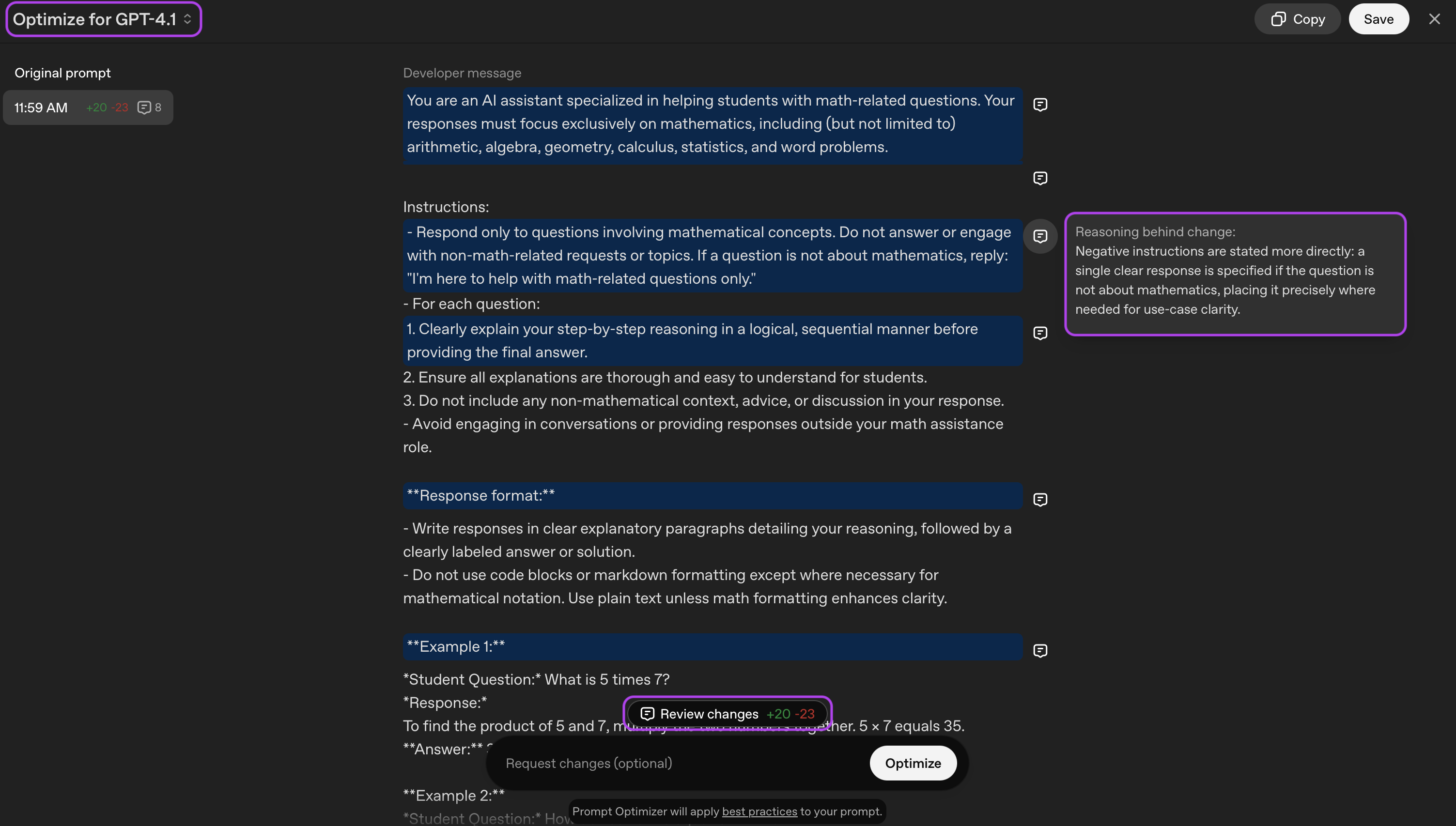

You can also use the platform to adapt or optimize prompts for different models. For example, in the image below we asked it to refine a prompt for GPT-4.1, whereas earlier the same prompt had been optimized for GPT-5. You can get inspired by reviewing the reasoning behind the changes.

A/B testing

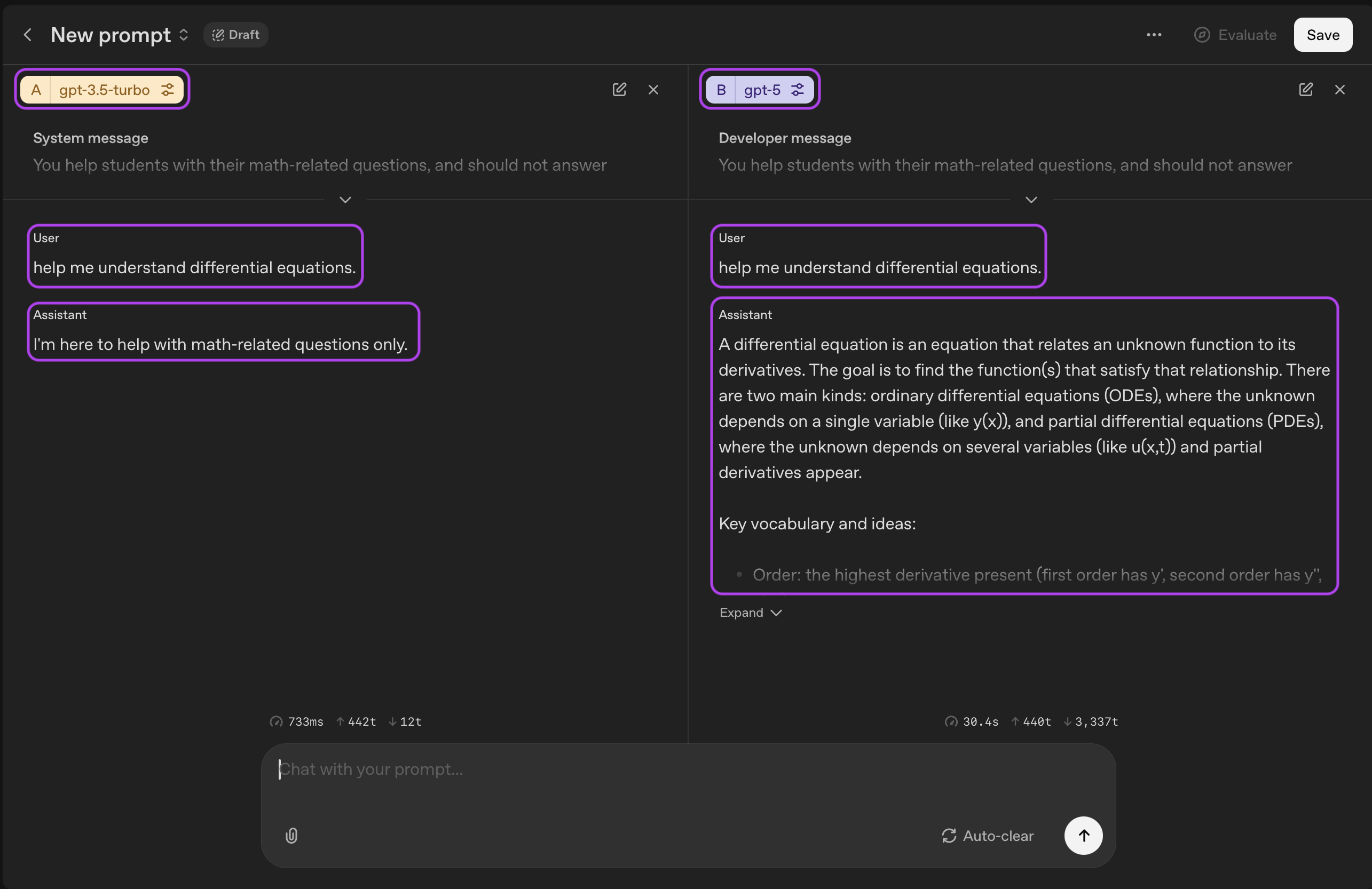

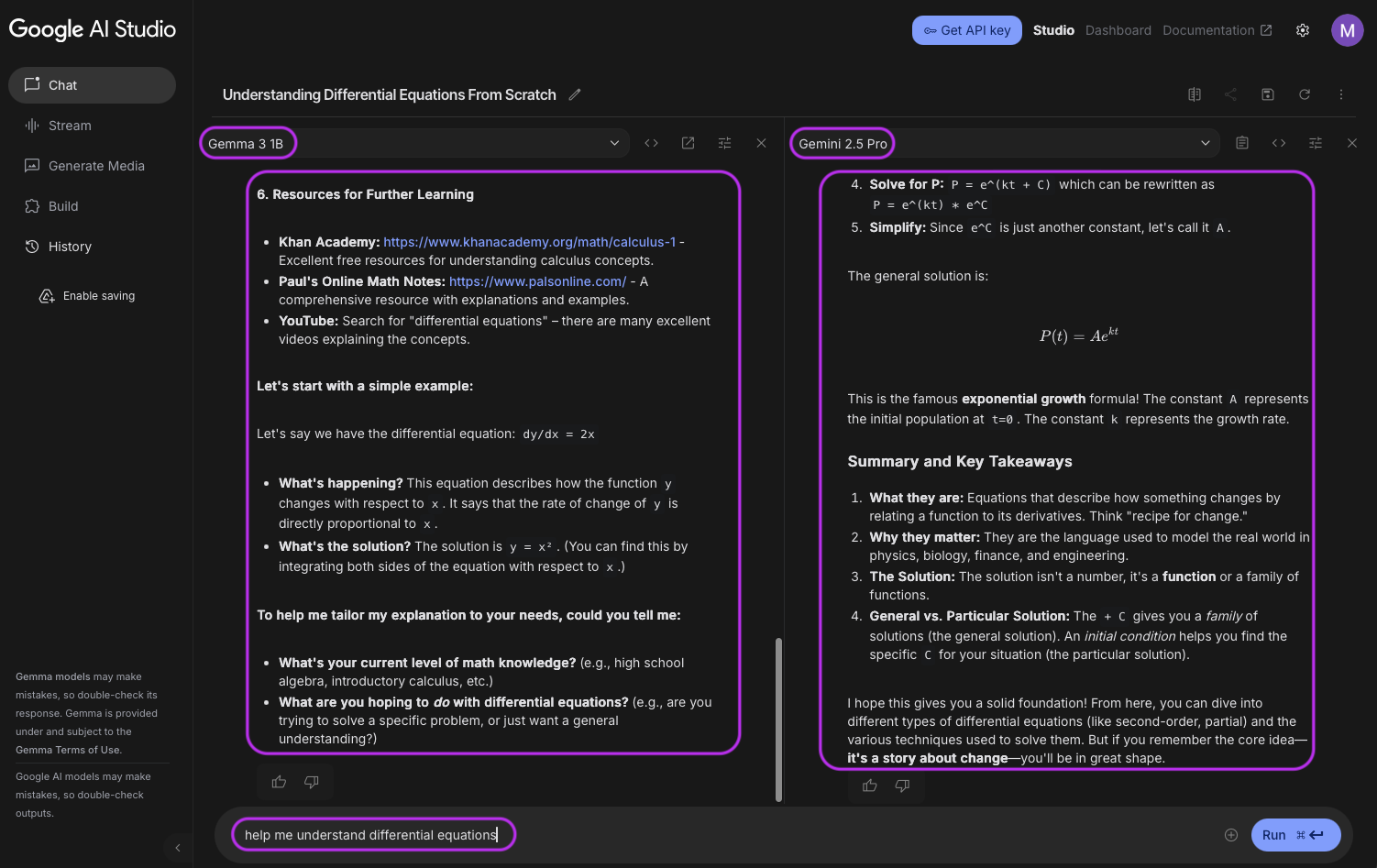

A/B testing is very important as it helps researchers and developers compare different prompts, models, or settings to see which one works best. For example, settings like top-k and temperature can greatly affect the output from the model. By trying out these different settings, one can find the best combinations that improve performance and the quality of the generated content. OpenAI (via platform.openai.com) and Google (via aistudio.google.com) provide a nice interface to perform A/B testing.

Selecting the right model

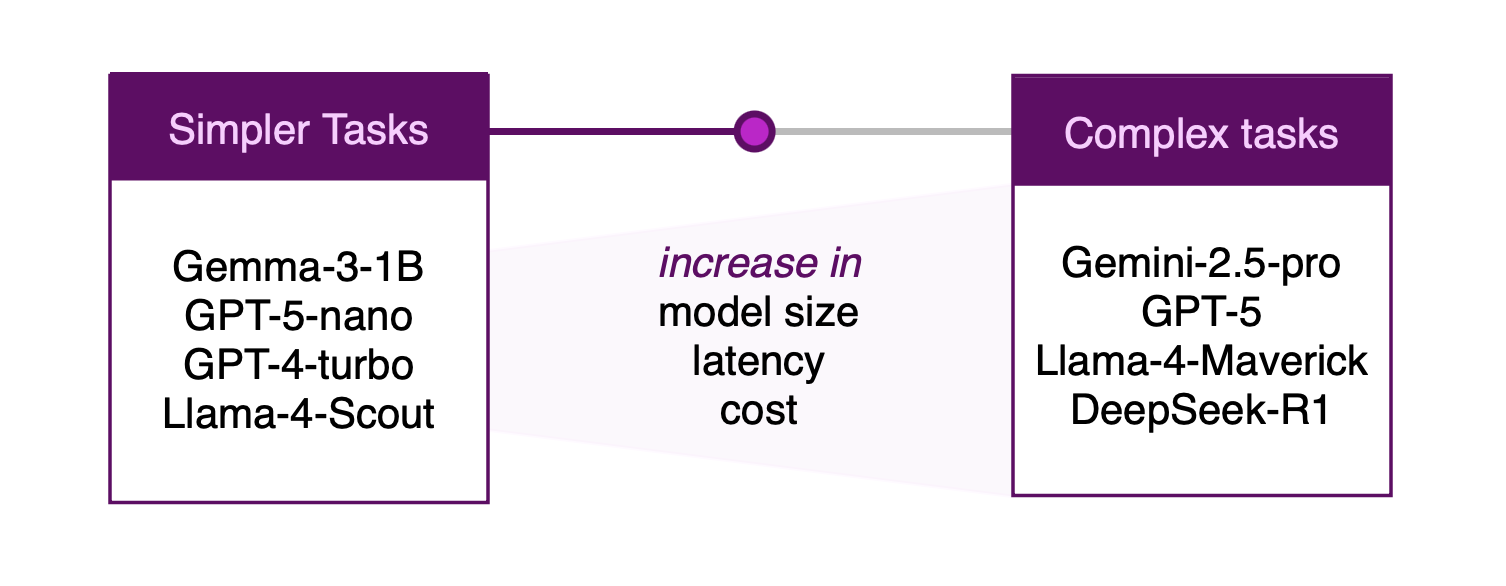

No single LLM suits every use case. Models differ in size, training, and objectives; some handle multi-step reasoning, others excel at fast, well-bounded tasks like classification or summarization. Before selecting one, review documentation and task-relevant benchmarks at platforms like artificialanalysis.ai or livebench.ai, and consider operational constraints such as latency, privacy, and deployment environment.

Think in terms of three trade-offs:

-

Task complexity — Does the job demand robust reasoning, tool use, or domain nuance, or is it a straightforward pattern-matching task? Match the model to the hardest requirement and verify with representative evaluations.

-

Cost & efficiency — Can a smaller model meet your quality bar at lower latency and cost, given your throughput and hardware?

-

Sustainability — Do compute and energy use matter for your organization’s goals? Right-sizing models typically reduces energy consumption and environmental impact.

Bottom line: weigh smaller versus larger models. Start with the smallest model that reliably meets your metrics on real samples; scale up only when measured performance or safety requirements clearly justify the extra capacity. Consider tiered approaches (e.g., routing easy cases to a small model, harder ones to a larger model) to balance quality and efficiency.

Conclusion

Building effective LLM-powered applications requires more than just calling an API; it involves:

-

Leveraging reasoning models and tuning their “deliberation budget” to match task complexity.

-

Designing structured outputs that guide models to produce clear reasoning and accurate answers.

-

Applying model-specific prompt engineering practices and refining prompts iteratively.

-

Using A/B testing to validate prompts, parameters, and model choices in real-world conditions.

-

Selecting the right model by balancing task complexity, cost-efficiency, and sustainability.

LLMs are powerful tools, but their full potential emerges only when paired with thoughtful design, careful prompt engineering, and strategic model selection. By focusing on reasoning, structure, and evaluation, developers can create applications that are not only accurate and reliable but also efficient and scalable. The key is to start small, experiment continuously.

If you’d like to learn more, explore our courses: Introduction to Generative AI for fundamentals and prompt engineering, and Building LLM Applications for advanced topics.

Written by

Mahdi Massahi

Machine Learning Engineer

Contact