As the title suggests, the objective of this post is to help you deploy a container instance inside Azure.

However, we’ll extend the typical scenario and make a slightly more extensive use of networking capabilities, by placing the container group inside a private subnet.

Note: For this example, and for simplicity only, we’ll use NGINX as our container of choice. Of course, you’re welcome to try with any other image.

Let’s get to work!

General layout

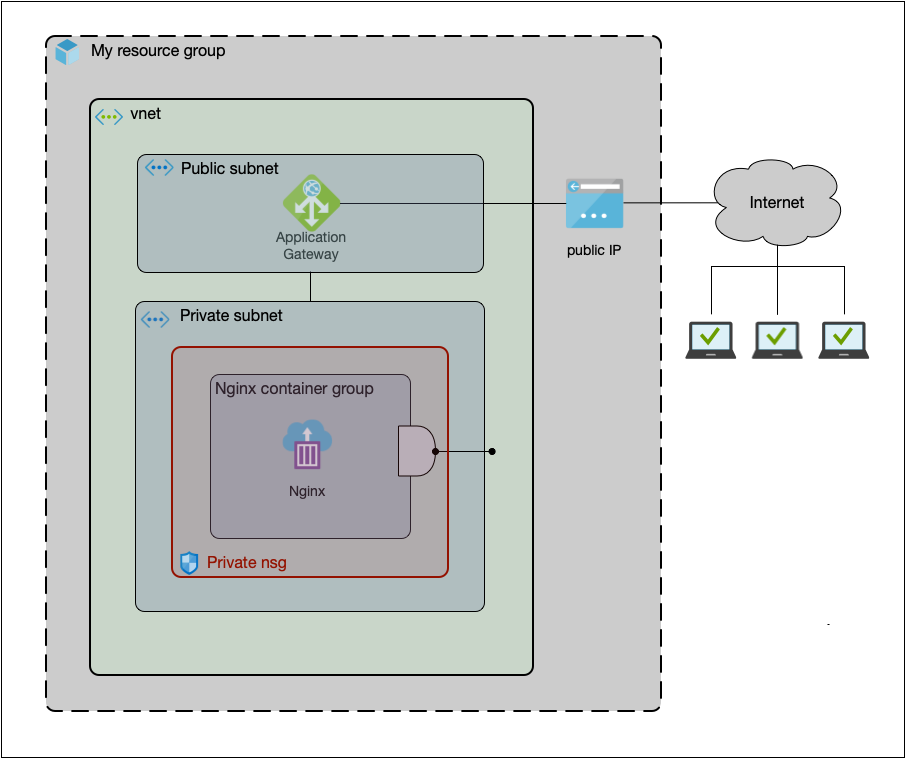

First things first: let’s begin with a clear picture of what we’re going to build.

The diagram depicts all the building blocks. However, some of these concepts, if not all, might be unfamiliar to you and therefore require explaining. I’ll go over them one by one.

Let’s use a real-life scenario as an analogy: Imagine you’re invited to live in a mansion…

- VNet: The structure tying the whole thing together. Without a network, we have nothing.

  We can think of it as the mansion itself.

- Subnets: Sub-divisions of the vnet. They are basically the way of grouping components inside the network.

  Think of them as different rooms inside the mansion.

- Container instance: Essentially, our docker container. The nginx server itself.

  This would be you, one of the (potentially many) inhabitants of the place.

- Public IP: Provides our network with a world-wide public endpoint.

  This would be the front door of the house, so postmen can deliver correspondence.

- Application gateway: Routes traffic from our public frontend to our container.

  Every mansion needs a butler, who listens to and answers the door, receives packages from specific postmen and delivers them to the appropriate guest.

- Network profile: Allows a container instance (with no public IP) to communicate with the subnet it lives in (it’s just how Azure works).

  But this is a big mansion, with many rooms (subnets). This is how you claim yours.

- Network security group (nsg): Acts as a kind of firewall by allowing/denying traffic into/out of subnets. It will be an extra layer of security for our container.

  Imagine a special laser fence around your room which is tailor-made to allow only certain people through (like the butler).

As a summary, the behaviour will be the following:

We’ll have two subnets: one public and one private.

The container (nginx) will live on the private one, where nothing outside the vnet can access it (the network security group takes care of that).

On the public one, we’ll place the application gateway, which will route traffic to our container, thus making it reachable while still protected from direct external access.

An external computer will make a request to the public IP on port 80. The application gateway will take the request, forward it to the nginx instance, get the response and send it back to the requestor.

Deployment of components

Deployments will be specified using ARM templates and deployed by means of the command line interface tool as:

$ az group deployment create --resource-group <resource-group> --template-file <template-file>

A nice benefit of template files is the possibility to deploy more than one resource in a single run. We could even specify the entire project as a single template file if we so wished.

For simplicity though, we’ll use separate files in this example.

Each deployment section will contain template snippets (the resources). Whether to combine them all in a single template file or manage them as separate ones is left entirely to the reader.
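If you prefer the single-file route, the snippets need to sit inside the `resources` array of a template skeleton. The following is only a sketch of that wrapping step, written in Python for convenience: the helper name `build_template` is made up for this example, and the two resources are trimmed stand-ins for the snippets shown in the sections that follow.

```python
import json

# Schema identifier for resource-group-level ARM templates.
ARM_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"


def build_template(resources):
    """Wrap a list of resource snippets in a minimal ARM template (hypothetical helper)."""
    return {
        "$schema": ARM_SCHEMA,
        "contentVersion": "1.0.0.0",
        "resources": list(resources),
    }


# Trimmed stand-ins for two of the snippets used in this post.
nsg = {
    "type": "Microsoft.Network/networkSecurityGroups",
    "apiVersion": "2019-07-01",
    "name": "my-private-nsg",
    "location": "[resourceGroup().location]",
    "properties": {},
}
public_ip = {
    "type": "Microsoft.Network/publicIPAddresses",
    "apiVersion": "2019-07-01",
    "name": "my-ip",
    "location": "[resourceGroup().location]",
    "sku": {"name": "Standard"},
    "properties": {"publicIPAllocationMethod": "Static"},
}

template = build_template([nsg, public_ip])
template_json = json.dumps(template, indent=2)
```

Writing `template_json` to a file gives you something to pass to `--template-file`. Keeping each resource as its own dictionary also makes it easy to deploy them one by one while experimenting.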

Note: All values in the snippets are hardcoded to make them more readable. Some values can (and should) be specified as parameters in a parameters file, especially secured strings like passwords and keys. But we’ll cover that in another post. For now, let’s focus on the big picture.

In order to avoid dependency issues, we’ll shuffle the above list a bit. For instance, we might get the fence before the mansion, but it does not matter. In the end, the whole picture will come together.

VNet

Now, let’s start with the vnet, subnets and network security group:

{ "type": "Microsoft.Network/networkSecurityGroups", "apiVersion": "2019-07-01", "name": "my-private-nsg", "location": "[resourceGroup().location]", "properties": {} }, { "type": "Microsoft.Network/virtualNetworks", "apiVersion": "2019-07-01", "name": "my-vnet", "location": "[resourceGroup().location]", "properties": { "addressSpace": { "addressPrefixes": [ "10.0.0.0/21" ] }, "subnets": [ { "name": "public", "properties": { "addressPrefix": "10.0.1.0/24", "privateEndpointNetworkPolicies": "Enabled", "privateLinkServiceNetworkPolicies": "Enabled" } }, { "name": "private", "properties": { "addressPrefix": "10.0.2.0/24", "networkSecurityGroup": { "id": "[resourceId('Microsoft.Network/networkSecurityGroups', 'my-private-nsg')]" }, "delegations": [ { "name": "private-subnet-delegation", "properties": { "serviceName": "Microsoft.ContainerInstance/containerGroups" } } ], "privateEndpointNetworkPolicies": "Enabled", "privateLinkServiceNetworkPolicies": "Enabled" } } ], "enableDdosProtection": false, "enableVmProtection": false }, "dependsOn": [ "my-private-nsg" ] }

Here we’ve defined a vnet in the space (10.0.0.0/21) and the two subnets using CIDR notation:

- Public subnet in 10.0.1.0/24

- Private subnet in 10.0.2.0/24

(We’re devoting three bits to subnetting. For this example, however, a single bit would have sufficed.)
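To make the CIDR arithmetic concrete, here is a small sketch using Python’s standard `ipaddress` module. It only checks the address plan from this post (both subnets fall inside the vnet, they don’t overlap, and the prefix difference is three bits); the variable names are just for illustration.

```python
import ipaddress

vnet = ipaddress.ip_network("10.0.0.0/21")
public = ipaddress.ip_network("10.0.1.0/24")
private = ipaddress.ip_network("10.0.2.0/24")

# Both subnets must be carved out of the vnet address space.
subnets_fit = all(subnet.subnet_of(vnet) for subnet in (public, private))

# Subnets inside the same vnet may not overlap each other.
subnets_overlap = public.overlaps(private)

# Going from /21 to /24 uses three bits, i.e. room for 2**3 subnets of this size.
subnet_bits = public.prefixlen - vnet.prefixlen
possible_subnets = 2 ** subnet_bits

print(subnets_fit, subnets_overlap, subnet_bits, possible_subnets)
```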

Notice the private subnet delegates to Microsoft.ContainerInstance/containerGroups. This is required by Azure in order to deploy container instances to the subnet. The delegation also implies (and Azure enforces this) that only container groups may be deployed to such a subnet.

We’ve assigned my-private-nsg to the private subnet (the fence is installed). However, we have not specified any rules for it. Why? Because we’re relying on this nsg to simply deny all traffic which does not originate within the vnet. Since those rules come by default with Azure, nothing else is required.

Public IP address

Announcing ourselves to the world:

{ "type": "Microsoft.Network/publicIPAddresses", "apiVersion": "2019-07-01", "name": "my-ip", "location": "[resourceGroup().location]", "sku": { "name": "Standard", "tier": "Regional" }, "properties": { "publicIPAddressVersion": "IPv4", "publicIPAllocationMethod": "Static", "idleTimeoutInMinutes": 4, "dnsSettings": { "domainNameLabel": "nginx-demo" } } }

In this example, we’re giving this public IP a domain name label, which means that, once deployed, we’ll be able to access it through a proper domain name instead of the IP address itself.

Application Gateway

We must now route traffic from the public subnet to the private one:

{ "type": "Microsoft.Network/applicationGateways", "apiVersion": "2019-08-01", "name": "my-ag", "location": "[resourceGroup().location]", "properties": { "sku": { "name": "Standard_v2", "tier": "Standard_v2" }, "gatewayIPConfigurations": [ { "name": "my-gateway-ip-config", "properties": { "subnet": { "id": "[resourceId('Microsoft.Network/virtualNetworks/subnets', 'my-vnet', 'public')]" } } } ], "frontendIPConfigurations": [ { "name": "my-gateway-frontend-ip-config", "properties": { "privateIPAllocationMethod": "Dynamic", "publicIPAddress": { "id": "[resourceId('Microsoft.Network/publicIPAddresses', 'my-ip')]" } } } ], "frontendPorts": [ { "name": "port-80", "properties": { "port": 80 } } ], "backendAddressPools": [ { "name": "nginx-backend-pool", "properties": { "backendAddresses": [ { "ipAddress": "10.0.2.4" } ] } } ], "backendHttpSettingsCollection": [ { "name": "nginx-http", "properties": { "port": 80, "protocol": "Http", "cookieBasedAffinity": "Disabled", "pickHostNameFromBackendAddress": false, "requestTimeout": 20 } } ], "httpListeners": [ { "name": "port-80-listener", "properties": { "frontendIPConfiguration": { "id": "[resourceId('Microsoft.Network/applicationGateways/frontendIPConfigurations', 'my-ag', 'my-gateway-frontend-ip-config')]" }, "frontendPort": { "id": "[resourceId('Microsoft.Network/applicationGateways/frontendPorts', 'my-ag', 'port-80')]" }, "protocol": "Http", "requireServerNameIndication": false } } ], "requestRoutingRules": [ { "name": "redirect-to-nginx-webserver", "properties": { "ruleType": "Basic", "httpListener": { "id": "[resourceId('Microsoft.Network/applicationGateways/httpListeners', 'my-ag', 'port-80-listener')]" }, "backendAddressPool": { "id": "[resourceId('Microsoft.Network/applicationGateways/backendAddressPools', 'my-ag', 'nginx-backend-pool')]" }, "backendHttpSettings": { "id": "[resourceId('Microsoft.Network/applicationGateways/backendHttpSettingsCollection', 'my-ag', 'nginx-http')]" } } } ], "enableHttp2": false,
"autoscaleConfiguration": { "minCapacity": 0, "maxCapacity": 10 } } }

We have only one backend pool, pointing at IP address 10.0.2.4. This is the IP which will be assigned later on to the container instance. You can choose whichever IP address you wish, as long as it is inside the correct subnet (in this case, the private one) and not reserved by Azure.
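As a quick sanity check for that choice, the sketch below tests whether an address is inside a subnet and outside the range Azure keeps for itself. It assumes Azure’s documented behaviour of reserving the first four addresses and the last address of every subnet; the function name `is_usable_backend_ip` is made up for this example.

```python
import ipaddress


def is_usable_backend_ip(ip, subnet_cidr):
    """Return True if ip is inside the subnet and not Azure-reserved (hypothetical helper).

    Assumes Azure reserves the first four addresses and the last address of a subnet.
    """
    subnet = ipaddress.ip_network(subnet_cidr)
    address = ipaddress.ip_address(ip)
    if address not in subnet:
        return False
    # Position of the address counted from the start of the subnet.
    offset = int(address) - int(subnet.network_address)
    return 4 <= offset < subnet.num_addresses - 1


print(is_usable_backend_ip("10.0.2.4", "10.0.2.0/24"))
print(is_usable_backend_ip("10.0.2.3", "10.0.2.0/24"))
```

Under that assumption, 10.0.2.4 is the first address in the private subnet that is free to use.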

Network profile

One more and we’re done with the network. This component is quite essential.

Remember, it’s what we’ll use to place the container group inside the private subnet.

{ "type": "Microsoft.Network/networkProfiles", "apiVersion": "2018-07-01", "name": "my-net-profile", "location": "[resourceGroup().location]", "properties": { "containerNetworkInterfaceConfigurations": [ { "name": "my-cnic", "properties": { "ipConfigurations": [ { "name": "my-ip-config-profile", "properties": { "subnet": { "id": "[resourceId('Microsoft.Network/virtualNetworks/subnets', 'my-vnet', 'private')]" } } } ] } } ] } }

Brilliant. The network is now ready!

Containers

Alright. The network infrastructure is done and up. Now it’s time to deploy the container.

{ "name": "nginx", "type": "Microsoft.ContainerInstance/containerGroups", "apiVersion": "2018-10-01", "location": "[resourceGroup().location]", "properties": { "containers": [ { "name": "nginx", "properties": { "image": "nginx", "ports": [{ "port": 80 }], "resources": { "requests": { "memoryInGB": 1, "cpu": 2 } } } } ], "osType": "Linux", "ipAddress": { "ports": [{ "protocol": "tcp", "port": 80 }], "type": "private", "ip": "10.0.2.4" }, "networkProfile": { "id": "[resourceId('Microsoft.Network/networkProfiles', 'my-net-profile')]" } } }

During the creation of the application gateway, we pointed the backend pool at 10.0.2.4, remember? Hence, the private IP for the instance must match such config (otherwise, nginx would never receive any traffic). This will be a static address for the container group.

Being a completely internal address, it’s perfectly alright (it does not matter which bed you sleep in, as long as it’s inside your room).
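Since the gateway and the container group live in different snippets, it is easy for the two addresses to drift apart. The sketch below shows one way to catch that before deploying, by comparing the relevant fields of both resources. The dictionaries are trimmed copies of the snippets in this post, and the helper names are invented for the example.

```python
def backend_ips(gateway):
    """Collect every IP address listed in the gateway's backend pools (hypothetical helper)."""
    return [
        backend["ipAddress"]
        for pool in gateway["properties"]["backendAddressPools"]
        for backend in pool["properties"]["backendAddresses"]
        if "ipAddress" in backend
    ]


def container_ip(container_group):
    """Return the private IP requested for the container group (hypothetical helper)."""
    return container_group["properties"]["ipAddress"]["ip"]


# Trimmed copies of the two resources, keeping only the fields being compared.
gateway = {
    "name": "my-ag",
    "properties": {
        "backendAddressPools": [
            {
                "name": "nginx-backend-pool",
                "properties": {"backendAddresses": [{"ipAddress": "10.0.2.4"}]},
            }
        ]
    },
}
container_group = {
    "name": "nginx",
    "properties": {"ipAddress": {"type": "private", "ip": "10.0.2.4"}},
}

addresses_match = container_ip(container_group) in backend_ips(gateway)
print(addresses_match)
```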

Testing the solution

All set! It’s time to test the whole machinery!

Open a browser and go to nginx-demo.westeurope.cloudapp.azure.com.

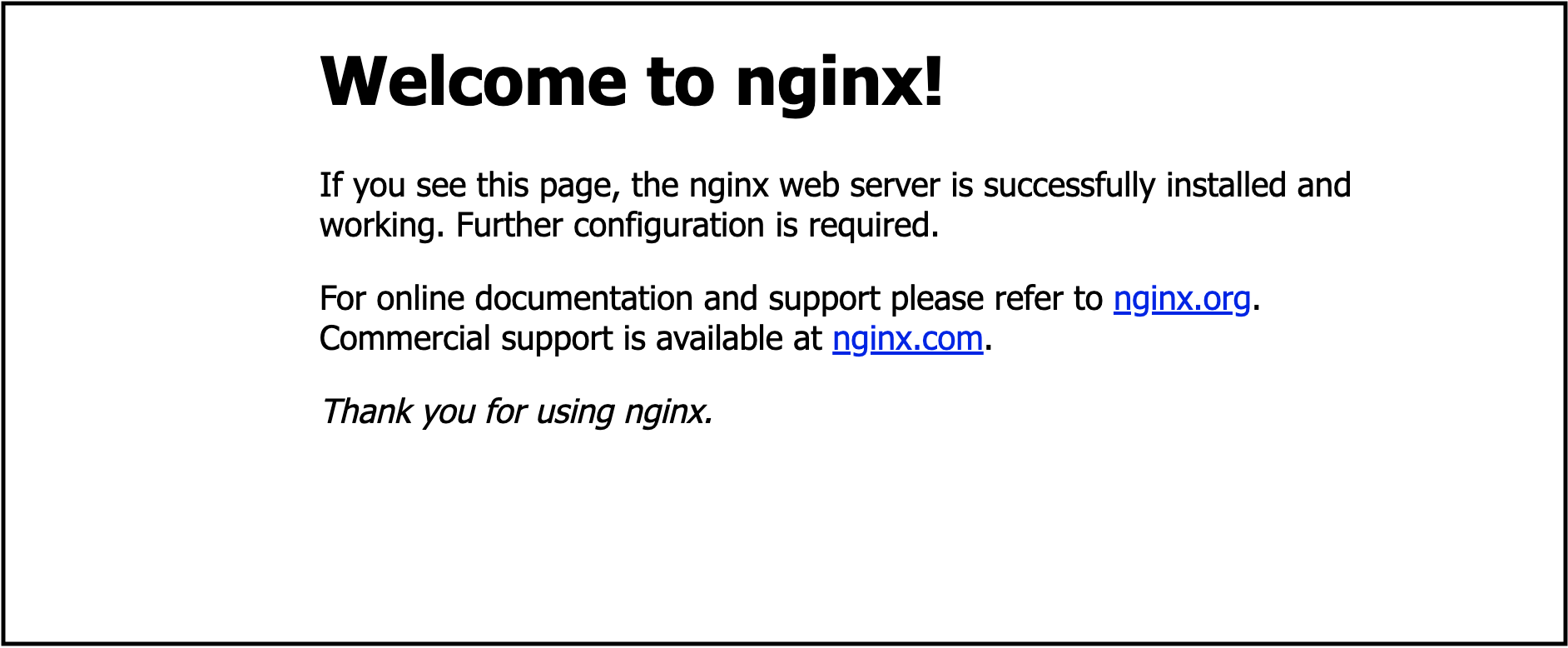

We should see nginx index page:

Boom! Congratulations: you now have a runnig nginx inside a container instance in Azure.
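If you would rather check from a script than from a browser, something along these lines should do. It is only a sketch: it assumes the resource group is in westeurope (as in the address above) and that the domain name follows the label.region.cloudapp.azure.com pattern used in this post. The helper names are made up, and the request itself is left commented out because it only works once everything is deployed.

```python
import urllib.request


def public_fqdn(label, region):
    """Build the domain name Azure derives from a public IP's domain name label."""
    return f"{label}.{region}.cloudapp.azure.com"


def fetch_status(url, timeout=10):
    """Issue a GET request and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


url = f"http://{public_fqdn('nginx-demo', 'westeurope')}/"
print(url)

# Once the deployment has finished, uncomment to hit the gateway on port 80:
# print(fetch_status(url))
```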

Conclusions

We have successfully deployed an nginx webserver as a container instance inside a private subnet. Good job!