
Connecting Continuous Integration to Continuous Delivery

At XebiaLabs, many of the questions we get about our enterprise deployment automation solution Deployit are from users looking for automated deployment as a prerequisite for Continuous Delivery. Often, this is the result of initiatives to extend existing Continuous Integration tooling to support application deployments. Increasing the frequency of whole-application testing, decreasing time-to-production and delivering greater business value faster and more regularly are goals we definitely share. In this post we'd like to pass on some key experiences and lessons from working with our users to help them realize Continuous Delivery.

Extending Continuous Integration

For many organisations, Continuous Integration (CI) tooling is the obvious "base platform" on which to build their Continuous Delivery (CD) automation suite. Often, CI is already established and running, for instance as part of introducing Agile development methodologies. Even if you're only now getting into CI, it's a good starting point for a CD effort: a delivery pipeline typically starts from the code, which is where Continuous Integration kicks in. More practically, it is also the most established component of a "Continuous Delivery toolsuite", with a wide variety of enterprise-proven, feature-rich products available.

From Continuous Integration to Continuous Delivery

The first important point to make about introducing CD is that it is more than "just tooling". As with Agile or DevOps, Continuous Delivery is a set of principles and processes and, more fundamentally, a mindset that requires effort, change and buy-in to implement.

Preparing for Continuous Delivery

Based on our experience in helping organisations extend Continuous Integration to Continuous Delivery, here are four of the most common points worth considering:

1. What goes into our deployment packages?

- An application consists of more than just compiled code or binaries! Think about configuration files, database changes and configuration settings or resources that must be created in the target middleware environment.

- Can I collect all the components at integration time in order to prepare my deployment package automatically? Can I retrieve database scripts or configuration specifications from a repository?

- A useful test question: If I deploy my deployment package to a fresh "company vanilla middleware installation", do I have all the components and settings I need?
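The "vanilla middleware" test question above can be approximated as an automated check at packaging time. The following is a minimal sketch only: the component kinds and the manifest layout are assumptions made for this example, not Deployit's package format, and which kinds are actually required will differ per application.

```python
# Hypothetical component kinds that this example application's package must contain.
REQUIRED_KINDS = {"binary", "configuration", "database", "middleware_resource"}


def missing_kinds(manifest):
    """Return the required component kinds that do not appear in the manifest."""
    present = {entry["kind"] for entry in manifest}
    return sorted(REQUIRED_KINDS - present)


# Illustrative manifest assembled at integration time (file names are made up).
manifest = [
    {"kind": "binary", "path": "app.ear"},
    {"kind": "configuration", "path": "app.properties"},
    {"kind": "database", "path": "sql/001_create_tables.sql"},
]

print(missing_kinds(manifest))
```

Here the check reports that no middleware resource definition was collected, which is exactly the kind of gap that would otherwise only surface when deploying to a fresh environment.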

- Cloud is making it feasible to run multiple identical[1] environments in parallel, but for now most enterprise deployments require environment-specific settings and deployments: different database usernames and passwords, or deployment of all components to a single cluster in Dev vs. sets of components in different clusters for QA or Production.

- Having separate CI tasks for each environment to handle these differences leads to excessive duplication and management overhead. It can also leak into the CI process sensitive information that should be hidden from developers and others with access to the build.

- Aim for a single CI build that delivers your "gold standard" deployment package which can be customized (e.g. using configuration files with placeholders) for the target environment at deployment time. Environment-specific deployment actions (e.g. to handle different sizing of environments) should be handled by the deployment automation component.
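As a rough illustration of the placeholder approach, the sketch below fills in one packaged configuration template for different target environments using Python's standard `string.Template`. The property names, hosts and usernames are invented for the example; in practice the environment values would be held by the deployment automation tool rather than in code.

```python
from string import Template

# Environment-neutral configuration shipped inside the single "gold standard" package.
PACKAGED_CONFIG = Template(
    "db.url=jdbc:postgresql://${db_host}/orders\n"
    "db.username=${db_user}\n"
)

# Hypothetical per-environment values, kept out of the CI build.
ENVIRONMENTS = {
    "dev": {"db_host": "localhost", "db_user": "dev_app"},
    "prod": {"db_host": "db.prod.internal", "db_user": "orders_app"},
}


def render_config(environment):
    """Fill the placeholders for one environment; raises KeyError if a value is missing."""
    return PACKAGED_CONFIG.substitute(ENVIRONMENTS[environment])


print(render_config("dev"))
```

Because `substitute` raises an error on any unfilled placeholder, a missing environment value is caught at deployment time instead of producing a half-configured application.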

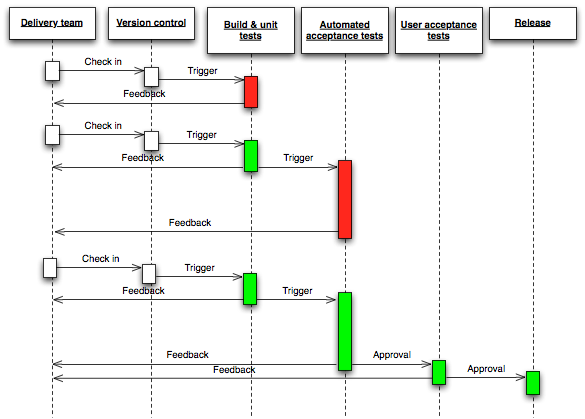

- Most CI tools allow you both to add additional actions to a task/job and to define a "task/job cascade" in which each task is triggered by the completion of the one before it. You could thus have, at one extreme, one task with many actions representing the entire pipeline or, at the other, a cascade of many very short tasks.

- Breaking down the pipeline into a cascade keeps the configuration of each task smaller and more focussed. It also makes it easier to execute just that section of the pipeline, which is especially useful for testing and refining.

- Defining more focussed tasks does lead to many more tasks overall in your CI installation, though, so you need sorting, filtered views or other enterprise data management capabilities in your CI tool.

- Useful "chunks" to consider[2]:
  - build, test and package
  - deploy and verify
  - integration/performance/functional test
  - teardown/cleanup
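The cascade idea can be sketched in a few lines: each stage runs only if the one before it completed successfully. This is a simplified model, not the configuration format of any particular CI tool; the stage actions are placeholders that would in reality call the CI or deployment tooling.

```python
def run_cascade(stages):
    """Run stages in order; a stage is triggered only if the previous one succeeded."""
    completed = []
    for name, action in stages:
        if not action():
            print(f"{name} failed, stopping the cascade")
            break
        completed.append(name)
    return completed


# Placeholder actions returning True on success; the third one simulates a failing test run.
stages = [
    ("build, test and package", lambda: True),
    ("deploy and verify", lambda: True),
    ("integration/performance/functional test", lambda: False),
    ("teardown/cleanup", lambda: True),
]

print(run_cascade(stages))
```

Keeping each stage as a separate entry is what makes it easy to run just one section of the pipeline while testing and refining it. A real pipeline would probably also want teardown/cleanup to run after a failure, which this sketch deliberately leaves out.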

- As the pipeline gets longer, i.e. further towards QA and Production, you will most likely get to points where Release Management (RM) authorization is required to proceed.

- The interaction between Release Management and Continuous Delivery is at least two-way: RM actions can trigger pipeline stages while CD actions can trigger RM verification.

- For example, setting a change request to Approved can automatically kick off or awaken a deployment and test cycle. Equally, a deployment to the QA environment triggered by successful functional tests can automatically verify that RM approval has been granted by validating a change request.
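Both directions of this interaction can be modelled in a small sketch. Everything here is hypothetical: the change-request identifiers, the in-memory store and the function names stand in for whatever Release Management system and deployment tooling you actually use.

```python
# Hypothetical change-request records; a real pipeline would query the RM system.
CHANGE_REQUESTS = {
    "CR-101": {"status": "Approved", "environment": "QA"},
    "CR-102": {"status": "Pending", "environment": "Production"},
}


def is_approved(change_id, environment):
    """CD to RM: verify that the change request is approved for this environment."""
    request = CHANGE_REQUESTS.get(change_id)
    return (
        request is not None
        and request["status"] == "Approved"
        and request["environment"] == environment
    )


def on_status_change(change_id, new_status, deploy):
    """RM to CD: record the new status and start the deployment once approved."""
    request = CHANGE_REQUESTS[change_id]
    request["status"] = new_status
    if new_status == "Approved":
        deploy(request["environment"])
        return True
    return False
```

A pipeline stage would call `is_approved` before deploying, while the RM system would call something like `on_status_change` (for instance via a webhook) to wake up a waiting deployment.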

[1] Identical enough that the application and its configuration need not be changed.

[2] Of course, there are no hard and fast rules here; every situation is different.

Andrew Phillips
